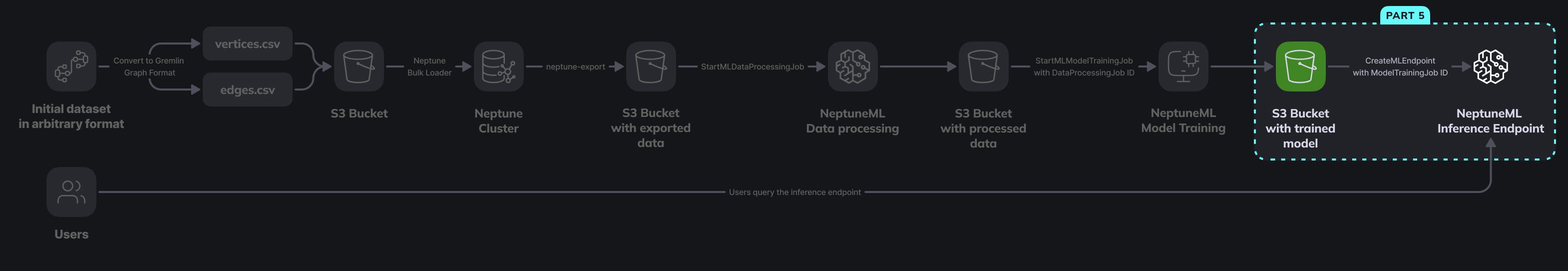

In this fifth and final post of our series on link prediction using Neptune ML, we’re diving into the inference process: setting up an inference endpoint to use our trained GNN model for link predictions. To establish the endpoint, we’ll use the Neptune cluster’s API and the model artifacts stored in S3. With the endpoint live, we’ll query it for link prediction, using a Gremlin query to identify high-confidence potential connections in our graph.

By now, we have loaded the Twitch social networking data into the Neptune cluster (Part 1), exported it using the ML profile (Part 2), preprocessed it (Part 3), and trained the model (Part 4). Now we’re ready to use the trained model to generate predictions.

Read part 1 here; part 2 here; part 3 here; and part 4 here.

CREATING THE ENDPOINT

Let’s create the inference endpoint using the cluster’s API and the model artifacts we have in S3. As usual, we first need an IAM role with access to S3 and SageMaker. The role also needs a trust policy that allows it to be added to the Neptune cluster (the trust policy is shown in Part 3 of this guide). Finally, the SageMaker and CloudWatch APIs must be reachable from inside the VPC, which requires the VPC endpoints described in Part 1 of this series.

Let’s use the cluster’s API to create an inference endpoint with this curl command:

```shell
curl -X POST https://YOUR_NEPTUNE_ENDPOINT:8182/ml/endpoints \
  -H 'Content-Type: application/json' \
  -d '{
        "mlModelTrainingJobId": "YOUR_MODEL_TRAINING_JOB_ID",
        "neptuneIamRoleArn": "arn:aws:iam::123456789012:role/NeptuneMLNeptuneRole"
      }'
```
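If you would rather script this step, you can send the same request with Python’s standard library. The sketch below assumes that IAM database authentication is disabled on the cluster, which is what the unsigned curl command implies. With IAM authentication enabled, the request would also need a SigV4 signature. The helper names `build_create_endpoint_request` and `create_endpoint` are hypothetical, and the endpoint, job ID and role ARN are placeholders.

```python
import json
import urllib.request


def build_create_endpoint_request(neptune_endpoint: str,
                                  training_job_id: str,
                                  role_arn: str) -> urllib.request.Request:
    """Build (but do not send) the POST /ml/endpoints request shown above."""
    body = {
        "mlModelTrainingJobId": training_job_id,
        "neptuneIamRoleArn": role_arn,
    }
    return urllib.request.Request(
        url=f"https://{neptune_endpoint}:8182/ml/endpoints",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_endpoint(request: urllib.request.Request) -> str:
    """Send the request and return the inference endpoint ID from the JSON reply."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["id"]


if __name__ == "__main__":
    req = build_create_endpoint_request(
        "YOUR_NEPTUNE_ENDPOINT",
        "YOUR_MODEL_TRAINING_JOB_ID",
        "arn:aws:iam::123456789012:role/NeptuneMLNeptuneRole",
    )
    print(req.get_method(), req.full_url)
    # endpoint_id = create_endpoint(req)  # requires network access to the cluster
```

Building the request separately from sending it lets you inspect the payload before anything reaches the cluster.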

The `instanceType` and `instanceCount` parameters let us choose the instance type used for inference and deploy more than one instance. The full list of parameters is in the Neptune ML documentation for the `endpoints` command. We’ll use the default `ml.m5(d).xlarge` instance because it has enough CPU and RAM for our small graph. This instance type was also recommended in the `infer_instance_recommendation.json` file generated after model training in the previous stage:

```json
{
  "disk_size": 12023356,
  "instance": "ml.m5d.xlarge",
  "mem_size": 13847612
}
```
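You can also fold the recommendation into the request body instead of copying the instance type by hand. This sketch assumes you have downloaded `infer_instance_recommendation.json` from the training output to a local path. `endpoint_payload_from_recommendation` is a hypothetical helper, and it reads only the file’s `instance` field.

```python
import json
import tempfile
from pathlib import Path


def endpoint_payload_from_recommendation(recommendation_path: str,
                                         training_job_id: str,
                                         role_arn: str,
                                         instance_count: int = 1) -> dict:
    """Build a POST /ml/endpoints body that uses the recommended instance type."""
    recommendation = json.loads(Path(recommendation_path).read_text())
    return {
        "mlModelTrainingJobId": training_job_id,
        "neptuneIamRoleArn": role_arn,
        "instanceType": recommendation["instance"],  # e.g. "ml.m5d.xlarge"
        "instanceCount": instance_count,
    }


if __name__ == "__main__":
    # Demo: write the recommendation shown above to a temporary file, then read it back.
    sample = {"disk_size": 12023356, "instance": "ml.m5d.xlarge", "mem_size": 13847612}
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "infer_instance_recommendation.json"
        path.write_text(json.dumps(sample))
        payload = endpoint_payload_from_recommendation(
            str(path),
            "YOUR_MODEL_TRAINING_JOB_ID",
            "arn:aws:iam::123456789012:role/NeptuneMLNeptuneRole",
        )
        print(json.dumps(payload, indent=2))
```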

The API responds with the inference endpoint ID:

```json
{"id":"b217165b-7780-4e73-9d8a-5b6f7cfef9f6"}
```

Then we can check the status of the inference endpoint with this command:

```shell
curl "https://YOUR_NEPTUNE_ENDPOINT:8182/ml/endpoints/INFERENCE_ENDPOINT_ID?neptuneIamRoleArn=arn:aws:iam::123456789012:role/NeptuneMLNeptuneRole"
```

Once the response contains `"status": "InService"`, as in this example:

```json
{
  "endpoint": {
    "name": "YOUR_INFERENCE_ENDPOINT_NAME-endpoint",
    "arn": "...",
    "status": "InService"
  },
  "endpointConfig": {...},
  "id": "YOUR_INFERENCE_ENDPOINT_ID",
  "status": "InService"
}
```

the endpoint is ready to be used in database queries.

The endpoint can also be viewed and managed from the AWS console, under SageMaker -> Inference -> Endpoints.
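The status check can also be turned into a polling loop. Like the curl command, this minimal sketch assumes requests don’t need SigV4 signing. It treats `Failed` as a terminal status, which is an assumption borrowed from SageMaker’s endpoint status values rather than something shown in this post. The helper names and default polling interval are hypothetical. `fetch` is injectable, so you can exercise the loop without a cluster.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Callable


def endpoint_status_url(neptune_endpoint: str, endpoint_id: str, role_arn: str) -> str:
    """Build the GET /ml/endpoints/{id} URL with the role ARN URL-encoded."""
    query = urllib.parse.urlencode({"neptuneIamRoleArn": role_arn})
    return f"https://{neptune_endpoint}:8182/ml/endpoints/{endpoint_id}?{query}"


def fetch_status(url: str) -> dict:
    """Send the status request and decode the JSON response."""
    with urllib.request.urlopen(url) as response:
        return json.loads(response.read())


def wait_until_in_service(url: str,
                          fetch: Callable[[str], dict] = fetch_status,
                          poll_seconds: float = 60.0,
                          max_polls: int = 60) -> dict:
    """Poll until the top-level status is InService; raise on failure or timeout."""
    for _ in range(max_polls):
        status = fetch(url)
        state = status.get("status")
        if state == "InService":
            return status
        # Assumption: "Failed" is terminal, mirroring SageMaker endpoint statuses.
        if state == "Failed":
            raise RuntimeError(f"Endpoint creation failed: {status}")
        time.sleep(poll_seconds)
    raise TimeoutError(f"Endpoint not InService after {max_polls} polls")


# Usage (requires network access to the cluster):
# url = endpoint_status_url("YOUR_NEPTUNE_ENDPOINT", "INFERENCE_ENDPOINT_ID",
#                           "arn:aws:iam::123456789012:role/NeptuneMLNeptuneRole")
# wait_until_in_service(url)
```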

QUERYING THE ENDPOINT

Let’s use the endpoint to predict new `follows` edges in the graph. To do that, we first need to choose a source vertex for the candidate links.

It helps if the source vertex already has links, because the GNN bases its prediction on the vertex’s neighborhood. We’ll use this query to find the vertex with the most existing edges (incoming and outgoing):

```
g.V()
  .group()
    .by()
    .by(bothE().count())
  .order(local)
    .by(values, Order.desc)
  .limit(local, 1)
  .next()
```

The result is `{v[1773]: 1440}`, which means that the vertex with ID 1773 has 1,440 edges in total (720 incoming and 720 outgoing).
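Here is the same computation over a plain list of directed edges, to show what the traversal does. Each edge adds one to the degree of its source (`outE`) and one to the degree of its target (`inE`), and the vertex with the largest total wins. This is an illustrative sketch with made-up toy edges, not how Neptune evaluates the query. `highest_degree_vertex` is a hypothetical helper, and it ignores self-loops and isolated vertices.

```python
from collections import Counter


def highest_degree_vertex(edges):
    """Return (vertex, degree) for the vertex with the most incident edges."""
    degree = Counter()
    for source, target in edges:
        degree[source] += 1  # counted as an outgoing edge (outE) of source
        degree[target] += 1  # counted as an incoming edge (inE) of target
    return degree.most_common(1)[0]


# Toy directed edges, made up for illustration.
edges = [("a", "b"), ("b", "a"), ("a", "c"), ("c", "a"), ("b", "c")]
print(highest_degree_vertex(edges))  # ('a', 4)
```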

To count the edges that start at vertex 1773 and the edges that end at it, we can use these queries:

```
g.V('1773').outE().count()
g.V('1773').inE().count()
```

Equal incoming and outgoing edge counts are expected. The original dataset contains mutual friendships, and we augmented it with a reverse edge for each friendship so that it fits Neptune’s directed graph model.
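The augmentation step explains why the counts match. Every undirected friendship `(a, b)` becomes two directed edges, `a -> b` and `b -> a`, so each vertex ends up with equal in-degree and out-degree. The helper names and toy edges below are hypothetical. The sketch assumes each friendship appears only once in the input; a dataset that already lists both directions would end up with duplicate edges.

```python
from collections import Counter


def add_reverse_edges(edges):
    """Return the edges plus a reversed copy of each one."""
    return list(edges) + [(target, source) for source, target in edges]


def degree_counts(edges):
    """Return (in_degree, out_degree) Counters for a list of directed edges."""
    in_degree = Counter(target for _, target in edges)
    out_degree = Counter(source for source, _ in edges)
    return in_degree, out_degree


friendships = [("a", "b"), ("a", "c"), ("b", "c")]  # toy undirected friendships
directed = add_reverse_edges(friendships)
in_degree, out_degree = degree_counts(directed)
# After augmentation, every vertex has as many incoming as outgoing edges.
assert all(in_degree[v] == out_degree[v] for v in in_degree.keys() | out_degree.keys())
```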

Now let’s get the predicted connections by running a Gremlin query with Neptune ML predicates. The query uses the inference endpoint and the SageMaker role that was added to the DB cluster. It finds users that user 1773 might follow with a confidence threshold of at least 0.1 (10%), which is the minimum likelihood of the link existing according to the model. It also excludes users that 1773 already follows:

```
%%gremlin
g.with('Neptune#ml.endpoint', 'YOUR_INFERENCE_ENDPOINT_NAME')
 .with('Neptune#ml.iamRoleArn', 'arn:aws:iam::123456789012:role/NeptuneMLSagemakerRole')
 .with('Neptune#ml.limit', 10000)
 .with('Neptune#ml.threshold', 0.1D)
 .V('1773')
 .out('follows')
   .with('Neptune#ml.prediction')
 .hasLabel('user')
 .not(__.in('follows').hasId('1773'))
```
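The `%%gremlin` magic is only available in the notebook. Elsewhere, you can send the same query to the cluster’s HTTP Gremlin endpoint as a JSON body of the form `{"gremlin": "..."}`. This sketch assumes IAM authentication is disabled; otherwise the request must be SigV4-signed. It uses the hypothetical helpers `link_prediction_query` and `build_gremlin_request`. Values are interpolated directly into the query string, so pass only trusted input.

```python
import json
import urllib.request


def link_prediction_query(endpoint_name, role_arn, user_id, threshold=0.1, limit=10000):
    """Return the link-prediction traversal from this post as a Gremlin string."""
    # Values are interpolated directly: only pass trusted input.
    return (
        f"g.with('Neptune#ml.endpoint', '{endpoint_name}')"
        f".with('Neptune#ml.iamRoleArn', '{role_arn}')"
        f".with('Neptune#ml.limit', {limit})"
        f".with('Neptune#ml.threshold', {threshold}D)"
        f".V('{user_id}')"
        ".out('follows').with('Neptune#ml.prediction')"
        ".hasLabel('user')"
        f".not(__.in('follows').hasId('{user_id}'))"
    )


def build_gremlin_request(neptune_endpoint, query):
    """Build an unsigned POST to the HTTP Gremlin endpoint (IAM auth assumed off)."""
    return urllib.request.Request(
        url=f"https://{neptune_endpoint}:8182/gremlin",
        data=json.dumps({"gremlin": query}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


query = link_prediction_query(
    "YOUR_INFERENCE_ENDPOINT_NAME",
    "arn:aws:iam::123456789012:role/NeptuneMLSagemakerRole",
    "1773",
)
request = build_gremlin_request("YOUR_NEPTUNE_ENDPOINT", query)
# with urllib.request.urlopen(request) as response:  # requires network access
#     print(response.read().decode("utf-8"))
```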

The query returns four vertices:

```
Result
v[755]
v[6086]
v[6382]
v[7005]
```

According to our model, there’s at least a 10% chance that user 1773 will follow each of these four users. We could try to improve the model by raising the maximum number of hyperparameter-tuning training jobs (`maxHPONumberOfTrainingJobs`) to at least 10, as AWS recommends. We could then compare the resulting model’s performance and predicted links with this one. In real applications, adding user profile and activity data to the graph can also improve prediction accuracy.

Predicted connections in social networking datasets can be used to provide personalized recommendations like friend and content suggestions, enhancing user engagement and community growth, reducing churn, and providing additional data for targeted advertising.

Although we applied link prediction to a social networking dataset, it’s just one of many possible applications of this technology. From recommendation engines to network optimization, link prediction is a versatile tool that brings value by uncovering hidden relationships within data. As graph-based applications continue to expand, the potential of link prediction across industries promises new insights, efficiency, and enhanced user experiences.