AI networking is upending how enterprises design digital infrastructure. As artificial intelligence-driven tools spread across the industry, enterprises are reengineering data centers and distributed networks to ensure lasting performance.

But this shift is spotlighting critical shortfalls in scale and reliability. Matthew Landry (pictured, right), vice president of product management for Cisco Wireless at Cisco Systems Inc., points to shifting traffic patterns that demand greater automation. As more enterprises run up against architectures never designed for graphics processing unit-intensive workloads, networks are reaching their limits, according to Murali Gandluru (center), vice president of product management for data center networking at Cisco Systems Inc.

“Early adopters of AI [are hitting] pain points, particularly around scale of their infrastructure, power considerations, cooling considerations, all of that infrastructure stuff, but also very importantly, operational complexity,” Gandluru said. “As you might imagine, networks have not been built or designed for GPU-to-GPU class of communication.”

Gandluru and Landry spoke with theCUBE’s Bob Laliberte (left) at The Networking for AI Summit event, during an exclusive broadcast on theCUBE, News Media’s livestreaming studio. They discussed AI-driven network complexity and the future of enterprise infrastructure. (* Disclosure below.)

AI networking and the future of enterprise infrastructure

As AI networking extends from the data center to the edge, IT faces a twin challenge: powering GPU-heavy workloads while keeping edge applications low-latency. These challenges mean that enterprises must distinguish between networking for AI and AI for networking, according to Landry.

“So we think about it collectively at Cisco … what we call networking for AI as opposed to AI for networking,” he said. “In the campus and access networks we build, there’s much more focus on AI for networking. The fundamental design of those networks hasn’t had to evolve.”

Now, however, rising AI adoption is forcing networks to catch up. Instead of humans loading static web pages and pausing to read, applications now produce a continuous flow of data that requires uninterrupted bandwidth, according to Landry.

“We’re seeing a lot more ‘users’ on the network, and these tend to be more machines now,” he said. “They’re agents that look and act like humans even though they’re not, and they’re far more sensitive to latency while able to consume higher bandwidth.”

AI and GPU demands drive new data center priorities

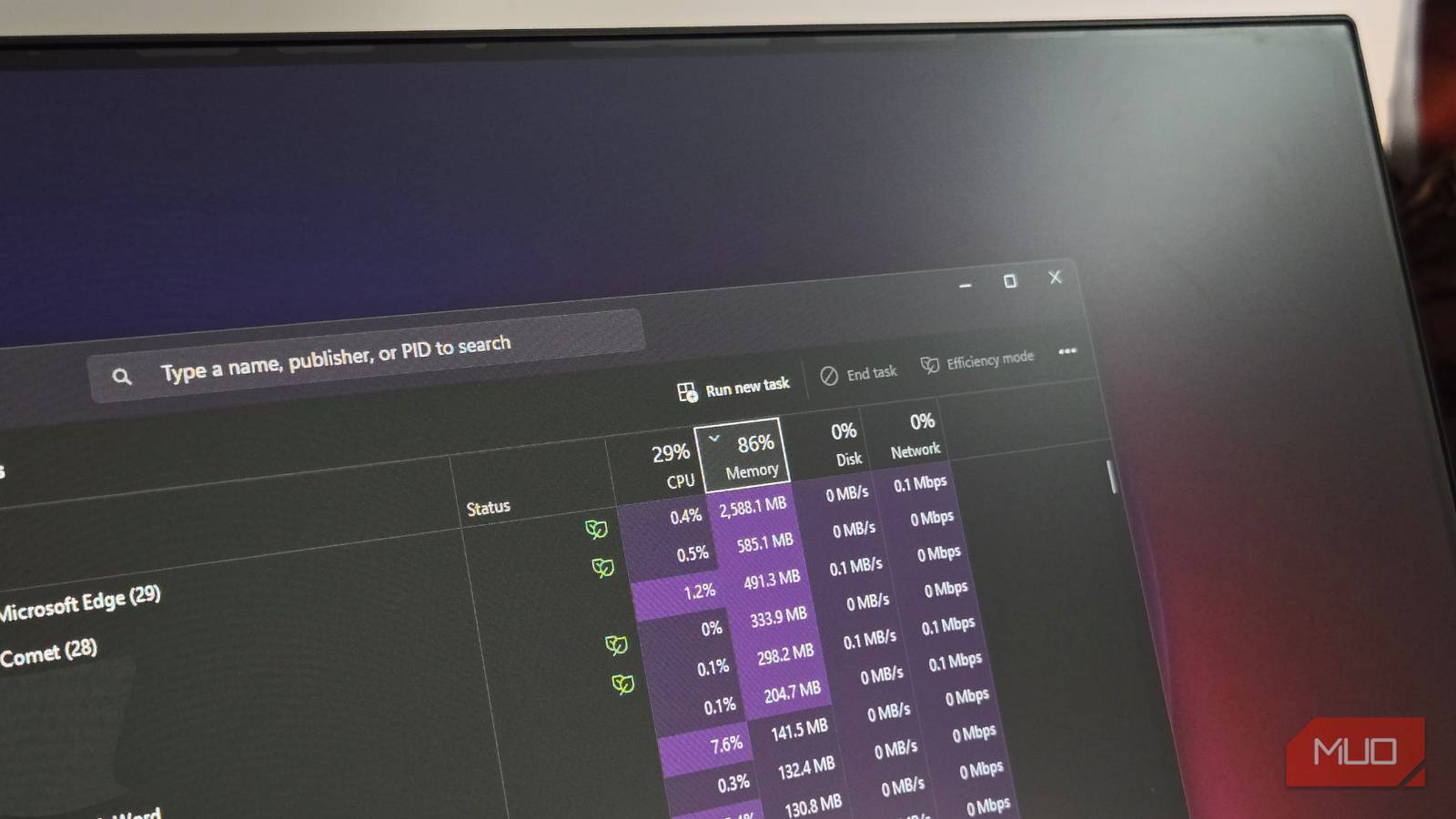

The demands AI places on networks are no longer hypothetical; they are the new norm. Training massive models in centralized data centers consumes enormous bandwidth, while inferencing at the edge requires ultra-low latency. Constrained by budgets and headcount, IT teams are turning to automation to maintain networks without constant human intervention, and that shift is reshaping how data centers are built, according to Gandluru.

“One of the key changes is that what’s required and expected of the systems is the ability to have mixed workloads, hybrid workloads, so the ability to have both [central processing unit] and GPU environments,” he said. “Some of our customers have actually deployed with a mix … then the network operations team has to make sure that the priority is given for the higher priority GPU-to-GPU communication versus the CPU-to-CPU communication or CPU-to-storage.”

That shift also raises new operational challenges. Diagnosing issues across distributed fabrics requires greater visibility and speed, while security concerns put further pressure on NetOps teams to keep data center environments resilient. These challenges point to the need for automation, according to Gandluru.

“You want to have an approach that’s scalable,” he said. “The ability to have a common approach to observability and the actionability across these. That is something that has helped some of our customers as they went and deployed GPU clusters.”

Here’s the complete video interview, part of News’s and theCUBE’s coverage of The Networking for AI Summit event:

(* Disclosure: TheCUBE is a paid media partner for The Networking for AI Summit event. Neither Cisco Systems Inc., the sponsor of theCUBE’s event coverage, nor other sponsors have editorial control over content on theCUBE or News.)

Photo: News


About News Media

Founded by tech visionaries John Furrier and Dave Vellante, News Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.