Airbnb’s engineering team has rolled out Mussel v2, a complete rearchitecture of its internal key-value engine designed to unify streaming and bulk ingestion while simplifying operations and scaling to larger workloads. The new system reportedly sustains over 100,000 streaming writes per second, supports tables exceeding 100 terabytes with p99 read latencies under 25 milliseconds, and ingests tens of terabytes in bulk workloads, allowing caller teams to focus on product innovation rather than on managing data pipelines.

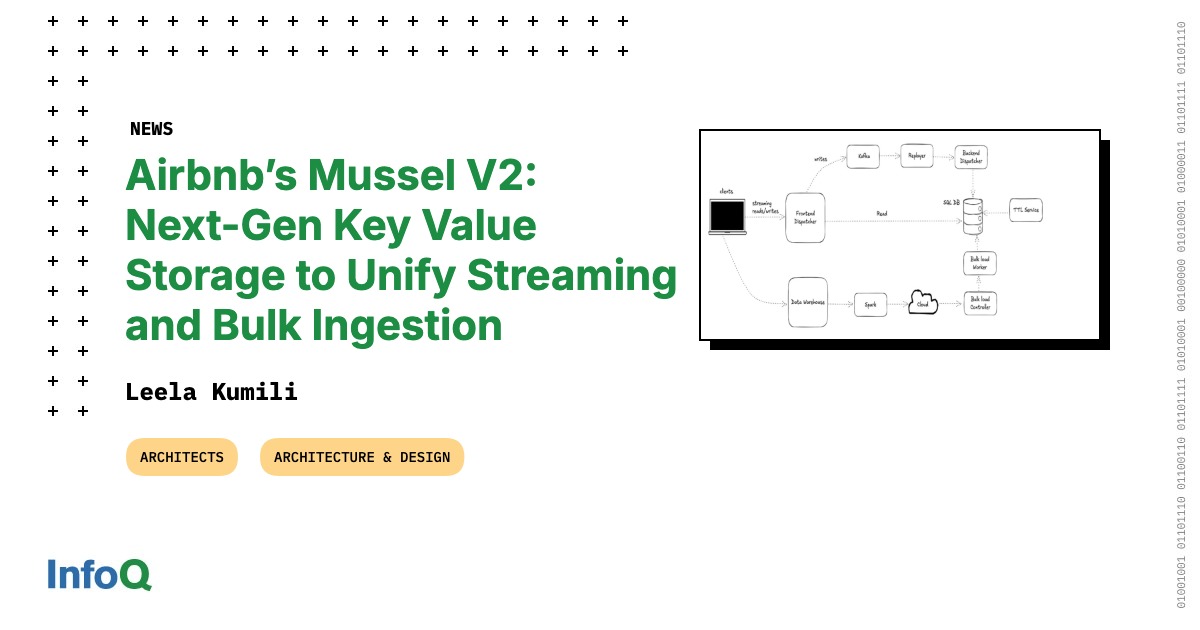

The earlier generation, Mussel v1, powered Airbnb’s internal data services but showed growing limitations as data volumes and product integrations increased. Its static hash-partitioned design ran on Amazon EC2 and was managed through Chef scripts. Separate batch and streaming paths increased operational overhead and made enforcing consistency difficult.

Mussel v1 architecture (Source: Airbnb Engineering Blog)

Mussel v2 addresses these constraints by pairing a NewSQL backend with a Kubernetes-native control plane, delivering the elasticity of object storage, the responsiveness of a low-latency cache, and the operability of modern service meshes in a single platform. The system uses Kubernetes manifests with automated rollouts, dynamic range sharding with presplitting to mitigate hotspots, and namespace-level quotas and dashboards to improve cost transparency. The Dispatcher layer is stateless and horizontally scalable; it routes client API calls, handles retries, and supports dual-write and shadow-read modes to facilitate migration.
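The dual-write and shadow-read modes can be illustrated with a minimal sketch. This is not Airbnb's Dispatcher: the `InMemoryStore` and `Dispatcher` classes, their fields, and the `mismatches` list (a stand-in for a metrics counter) are hypothetical names chosen for illustration, and a real dispatcher would talk to remote backends rather than to in-process dictionaries.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class InMemoryStore:
    """Hypothetical stand-in for a backend (v1 or v2)."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[bytes]:
        return self._data.get(key)


@dataclass
class Dispatcher:
    """Routes calls to a primary store, optionally mirroring to a secondary."""

    primary: InMemoryStore
    secondary: InMemoryStore
    dual_write: bool = False   # also apply every write to the secondary
    shadow_read: bool = False  # also read the secondary and compare results
    mismatches: List[str] = field(default_factory=list)  # stand-in for a metric

    def put(self, key: str, value: bytes) -> None:
        self.primary.put(key, value)
        if self.dual_write:
            self.secondary.put(key, value)

    def get(self, key: str) -> Optional[bytes]:
        result = self.primary.get(key)
        if self.shadow_read and self.secondary.get(key) != result:
            # The caller still receives the primary's answer; the
            # discrepancy is only recorded for later inspection.
            self.mismatches.append(key)
        return result
```

In this sketch the caller is always served from the primary store, so a shadow read can surface inconsistencies without affecting responses.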

Writes are first persisted to Kafka for durability, and downstream Replayer and Write Dispatcher components apply them to the backend database in order. Bulk loads continue to run through Airbnb’s data warehouse using Airflow jobs and S3 staging, preserving merge or replace semantics. The engineering team also introduced a topology-aware expiration service that shards data namespaces into range-based subtasks processed concurrently by multiple workers. Expired records are deleted in parallel, with scheduling that limits the impact on live queries, and write-heavy tables use max-version enforcement with targeted deletes. According to the team, these enhancements maintain v1 retention functionality while improving efficiency, transparency, and scalability.
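The range-based expiration described above can be sketched roughly as follows. This is an illustrative simplification, not the team's service: the table is a plain dictionary, the function names (`split_ranges`, `expire_range`, `run_expiration`) are hypothetical, and the sketch ignores topology awareness and the scheduling that limits impact on live queries.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

# Hypothetical record layout: (value, expires_at as Unix seconds).
Record = Tuple[bytes, float]


def split_ranges(sorted_keys: List[str], num_subtasks: int) -> List[List[str]]:
    """Partition sorted keys into contiguous, non-overlapping ranges."""
    if not sorted_keys:
        return []
    size = -(-len(sorted_keys) // num_subtasks)  # ceiling division
    return [sorted_keys[i:i + size] for i in range(0, len(sorted_keys), size)]


def expire_range(table: Dict[str, Record], keys: List[str], now: float) -> int:
    """Delete expired records within one key range; return how many were removed."""
    deleted = 0
    for key in keys:
        record = table.get(key)
        if record is not None and record[1] <= now:
            del table[key]
            deleted += 1
    return deleted


def run_expiration(table: Dict[str, Record], now: float,
                   num_subtasks: int = 4, max_workers: int = 2) -> int:
    """Split the keyspace into subtasks and process them with a worker pool."""
    ranges = split_ranges(sorted(table), num_subtasks)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return sum(pool.map(lambda keys: expire_range(table, keys, now), ranges))
```

Because each subtask owns a disjoint key range, workers never compete for the same record, which is what makes this kind of job straightforward to run in parallel.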

Mussel v2 architecture (Source: Airbnb Engineering Blog)

According to Airbnb’s engineering team, migrating from v1 to v2 posed significant challenges. The team used a blue-green approach with table-level granularity, continuous validation, and fallback mechanisms. Because v1 lacked native change data capture and table snapshots, tables were bootstrapped into v2 from backups, with sampled data used to plan presplitting. After the bootstrap ingestion completed and checksums were verified, lagging Kafka events were applied and dual writes were enabled. During cutover, reads gradually shifted to v2 while shadow traffic monitored consistency, with fallback to v1 if error rates spiked. Kafka served as a common log throughout the migration.
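One way to plan presplit boundaries from sampled keys, as mentioned above, is to take evenly spaced quantiles of the sorted sample. The sketch below assumes string keys compared lexicographically; `plan_split_points` and `shard_for` are hypothetical helpers, and the article does not describe how Airbnb actually derived its split points.

```python
import bisect
from typing import List, Sequence


def plan_split_points(sampled_keys: Sequence[str], num_shards: int) -> List[str]:
    """Pick up to num_shards - 1 boundaries at evenly spaced sample quantiles."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    keys = sorted(set(sampled_keys))
    points = set()
    for i in range(1, num_shards):
        idx = i * len(keys) // num_shards
        if idx > 0:  # a boundary at the smallest key would leave an empty first range
            points.add(keys[idx])
    return sorted(points)


def shard_for(key: str, split_points: List[str]) -> int:
    """Return the index of the range that holds key.

    Range i covers [split_points[i - 1], split_points[i]).
    """
    return bisect.bisect_right(split_points, key)


if __name__ == "__main__":
    sample = [f"user:{i:03d}" for i in range(100)]
    boundaries = plan_split_points(sample, num_shards=4)
    print(boundaries)
    print(shard_for("user:042", boundaries))
```

If the sample reflects the real key distribution, each presplit range starts with a similar share of the data, which is the property that helps avoid hotspots during the initial load.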

Data migration pipeline from Mussel v1 to v2 (Source: Airbnb Engineering Blog)

Airbnb’s engineers reported that migrating from an eventually consistent backend to a strongly consistent one introduced operational complexities, including write deduplication and controlled retries. The team also adjusted query execution and workload distribution, with Kafka again serving as the durable log. Per-table staging, automated fallbacks, and monitoring enabled the migration of over a petabyte of data without downtime.
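Write deduplication and controlled retries are often combined so that a retried write cannot be applied twice. The following is a generic sketch of that pattern, not the mechanism Airbnb used: the event IDs, the `DedupingWriter` class, the `TransientError` type, and the backoff parameters are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, Set


class TransientError(Exception):
    """Hypothetical error type for failures that are safe to retry."""


def retry(op: Callable[[], bool], max_attempts: int = 3,
          base_delay: float = 0.01,
          sleep: Callable[[float], None] = time.sleep) -> bool:
    """Run op, retrying transient failures with capped attempts and exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return op()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


class DedupingWriter:
    """Applies each event at most once, keyed by a caller-supplied event ID."""

    def __init__(self) -> None:
        self.table: Dict[str, bytes] = {}
        self.applied_ids: Set[str] = set()

    def apply(self, event_id: str, key: str, value: bytes) -> bool:
        """Return True if the write was applied, False if it was a duplicate."""
        if event_id in self.applied_ids:
            return False
        self.table[key] = value
        self.applied_ids.add(event_id)
        return True
```

Bounding the number of attempts keeps retries from amplifying load on a struggling backend, while the ID check makes a replayed or retried event harmless.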