In boardrooms across Wall Street and beyond, AI is now a fixture—discussed in every strategic offsite, featured in every quarterly roadmap. Yet, beneath the noise and novelty lies a far less visible, far more insidious challenge: the Intelligence Gap.

This isn’t about machines replacing humans. It’s about some institutions accelerating faster than their industry’s cognitive center of gravity. We are witnessing the birth of a new kind of systemic risk, one caused not by capital imbalances but by knowledge asymmetries. In this silent divergence, the firms that understand how to wield AI at scale will not just outcompete others; they will reshape the rules of the game before the rest realize the game has changed.

The question is no longer “What can AI do for us?” but rather “What happens when only a few can afford to think at AI’s speed?”

The Unseen Divide: Intelligence as Capital

In the past, financial power rested on three levers: balance sheets, relationships, and regulatory mastery. Today, a fourth force is emerging: intelligence capital, the capacity to synthesize, simulate, and act on data faster than the market, the regulator, or even the client can perceive.

Firms investing heavily in foundation models, proprietary data pipelines, and real-time decision infrastructure aren’t just innovating. They’re building cognitive compounding loops: feedback systems that learn from every transaction, so that each one makes the models smarter and the firm’s position harder to contest.

This advantage isn’t just technical. It’s temporal. When one bank simulates 10,000 credit scenarios in a day and another needs a quarter to run the same number, they’re not just operating at different speeds; they’re inhabiting different futures.

This is the real competitive moat in financial services—and almost no one is talking about it.

From Efficiency to Epistemology

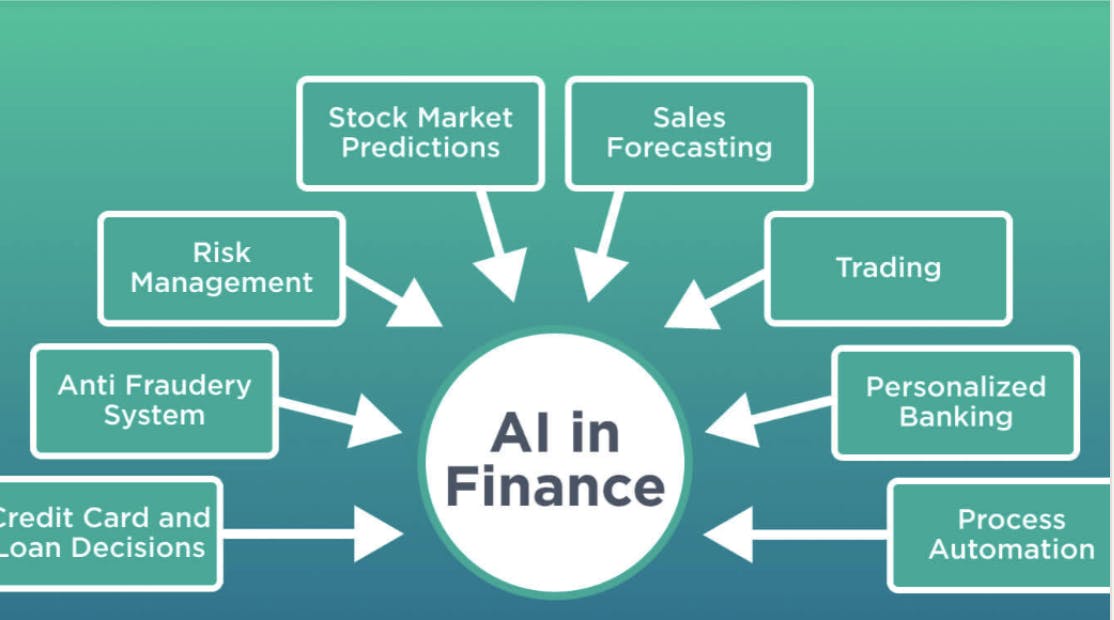

Most AI conversations still revolve around optimization: faster onboarding, lower fraud rates, smarter collections. But the next frontier isn’t operational—it’s epistemological. It’s about how we know what we know.

Imagine an AI that not only detects anomalies in your trade flow but also infers why they occur, simulates what-if scenarios, and advises on what to do next. These are not workflows; they are meta-workflows. They’re not just changing the outputs of financial institutions. They’re changing how financial institutions perceive risk, opportunity, and reality itself.

This creates a dilemma. As some firms shift into AI-native cognition, the interpretive gap between human and machine, and between AI-mature and AI-immature firms, begins to widen. Communication frays. Coordination lags. Mispricing occurs. And over time, the market starts to fracture cognitively.

We are no longer just building models. We are building epistemic engines that shape the very fabric of financial truth.

The Quiet Fragility of AI Concentration

There’s another, deeper risk at play. As AI becomes more expensive to train, more reliant on proprietary data, and more integrated into real-time decision flows, it becomes concentrated—in the hands of a few global banks, tech-forward asset managers, and cloud-native fintechs.

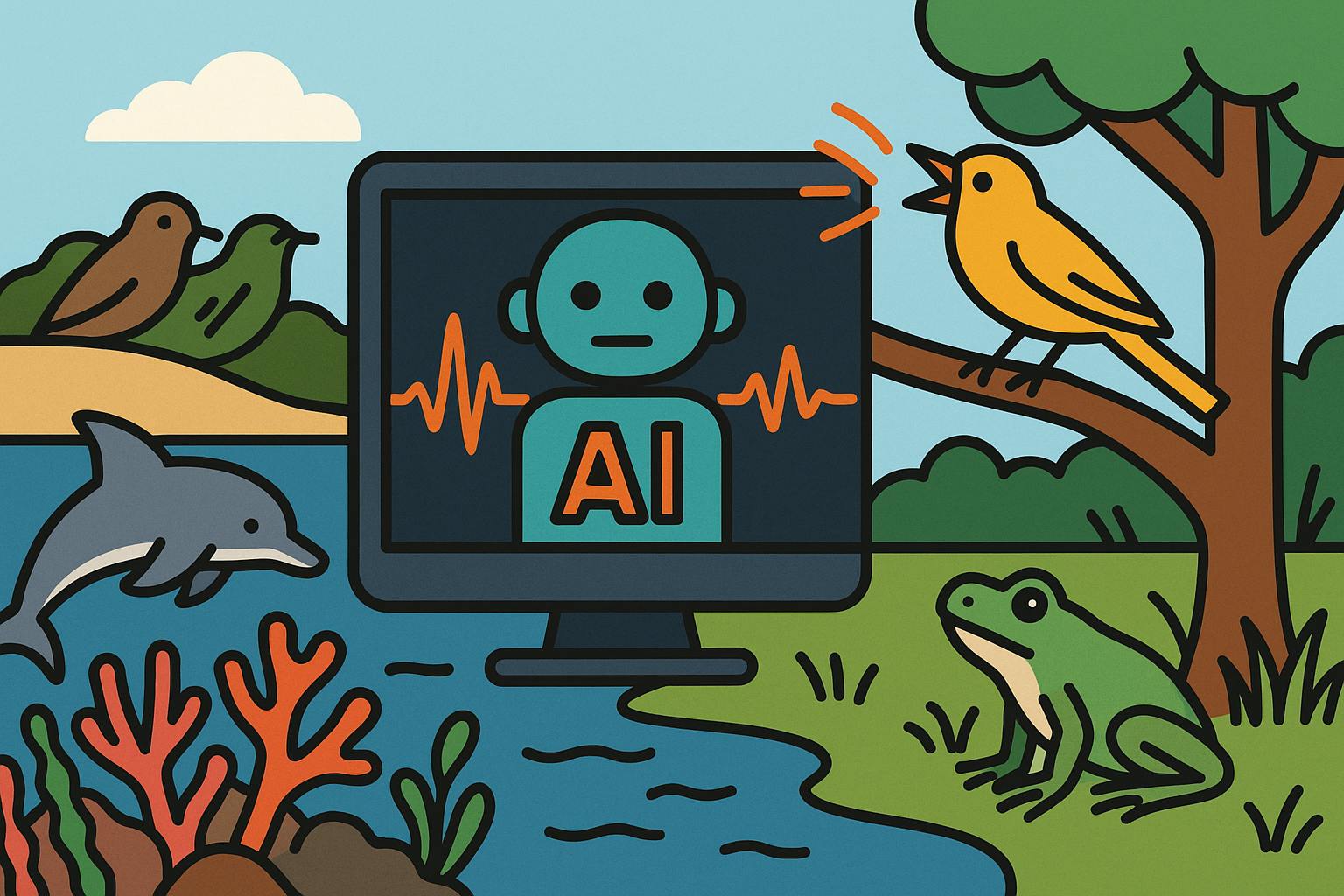

This creates a structural fragility: if too few players own the cognitive infrastructure of finance, systemic blind spots grow. Think of the 2008 financial crisis, which was triggered not by individual bad actors but by widespread over-reliance on models and assumptions that mispriced risk.

Now, imagine a future where half the global credit market is underwritten using the same few AI platforms, trained on overlapping datasets, and optimized for the same risk signals. That’s not diversification. That’s monoculture. And monocultures fail catastrophically.

We are sleepwalking into cognitive concentration risk—and the industry has yet to design a framework to measure, audit, or govern it.
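One possible starting point for measuring this kind of concentration is to borrow a standard tool from competition analysis, the Herfindahl-Hirschman Index (HHI), and apply it to the share of underwriting volume that runs on each AI platform. The sketch below is illustrative only: the platform shares are hypothetical, and the function names are assumptions rather than part of any existing framework. It also ignores overlap in training data, which would make real concentration worse than the index suggests.

```python
def herfindahl_index(shares):
    """Sum of squared shares, after normalizing so the shares add up to 1."""
    total = sum(shares)
    if total <= 0:
        raise ValueError("shares must sum to a positive value")
    return sum((s / total) ** 2 for s in shares)


def effective_platform_count(shares):
    """Number of equal-sized platforms that would produce the same HHI."""
    return 1.0 / herfindahl_index(shares)


# Hypothetical share of credit underwriting volume per AI platform.
concentrated = [0.30, 0.25, 0.20, 0.15, 0.10]  # five dominant platforms
dispersed = [0.05] * 20                        # twenty equal-sized platforms

for label, shares in (("concentrated", concentrated), ("dispersed", dispersed)):
    print(label, herfindahl_index(shares), effective_platform_count(shares))
```

The effective platform count is the more intuitive of the two numbers: it answers the question of how many truly independent "minds" the market is relying on. A value drifting toward a handful is the monoculture signal described above.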

Rethinking Regulation: From Compliance to Cognition

Our regulatory frameworks were designed to govern transactions, not intelligence. Model risk guidelines focus on inputs, outputs, and documentation—but not on learning loops, synthetic data generation, or autonomous model updates.

As AI grows more self-referential—models fine-tuning themselves, agents making recursive decisions—the old paradigm of “check the model annually” becomes dangerously outdated. Supervision must shift from static validation to continuous oversight. Regulators must evolve from examiners into AI-aware risk engineers—capable of understanding how models reason, where they fail, and how to design systems for transparent cognition.

If we fail to bridge this gap, we won’t just see AI failures—we’ll see governance failures that trigger reputational, legal, and systemic consequences.

The Human Imperative in an AI World

Ironically, in a world dominated by machines, human judgment becomes more—not less—valuable. But the kind of judgment we need is different.

We don’t need more manual reviews of output. We need people who can ask better questions of the machine. Who understand that explainability is not a tradeoff with performance—it’s the foundation of trust. Who see that AI doesn’t eliminate ambiguity; it reframes it. Who can sit at the intersection of ethics, policy, and code and say: Here’s what we can do. Here’s what we should do. And here’s what we must never do.

The most strategic role in financial services over the next decade won’t be the trader or the compliance officer—it will be the AI integrator: the leader who can translate strategy into models and models into decisions.

The Next Race Isn’t for Talent or Tools. It’s for Time.

Every institution today has access to AI tools. Most have talent. What separates leaders from laggards is cycle time—the time it takes to test, learn, validate, and deploy intelligence at scale.

This is where legacy firms are most vulnerable—not because they lack smart people, but because their operating models are allergic to experimentation. Governance is designed to prevent failure, not learn from it. Architecture is rigid. Data is siloed. Culture is cautious.

Meanwhile, the frontrunners are shortening cognitive cycles. They’re making three AI-informed decisions for every one their competitors make. Over time, that compounds. It’s not just about getting smarter. It’s about getting faster at getting smarter.

That’s the race. And it’s already underway.
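The compounding claim can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that every completed decision cycle improves a firm's capability by the same small percentage, and that the frontrunner completes three cycles for every one its competitor completes. The 1% gain per cycle and the function names are hypothetical.

```python
def capability_after(cycles, gain_per_cycle):
    """Capability multiple after a number of learning cycles, compounding each cycle."""
    return (1.0 + gain_per_cycle) ** cycles


def relative_advantage(laggard_cycles, speed_ratio, gain_per_cycle):
    """Leader's capability divided by the laggard's over the same calendar period."""
    leader = capability_after(laggard_cycles * speed_ratio, gain_per_cycle)
    laggard = capability_after(laggard_cycles, gain_per_cycle)
    return leader / laggard


# Hypothetical inputs: 1% gain per cycle, leader runs 3 cycles per laggard cycle.
for laggard_cycles in (10, 50, 100):
    ratio = relative_advantage(laggard_cycles, speed_ratio=3, gain_per_cycle=0.01)
    print(f"after {laggard_cycles} laggard cycles, leader/laggard = {ratio:.2f}")
```

Under these assumptions the gap does not grow linearly. It grows exponentially with the number of cycles, which is why a modest difference in cycle time becomes a structural difference in capability.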

Final Thoughts: The Future Is Unevenly Distributed—Intellectually

AI will not democratize finance. At least not at first. It will amplify the capabilities of those already positioned to use it well—and expose the gaps of those who are not.

The coming decade will be defined not by who has the most data or the most dollars, but by who has the ability to turn intelligence into action responsibly, repeatedly, and at scale.

The real transformation is not technical. It is institutional. And it begins with a question too few are asking:

In a world of infinite intelligence, what will your firm choose to understand better than anyone else?

The firms that answer this boldly—and build accordingly—will define the next era of financial services. The rest? They’ll spend the next decade trying to catch up to decisions that have already been made.