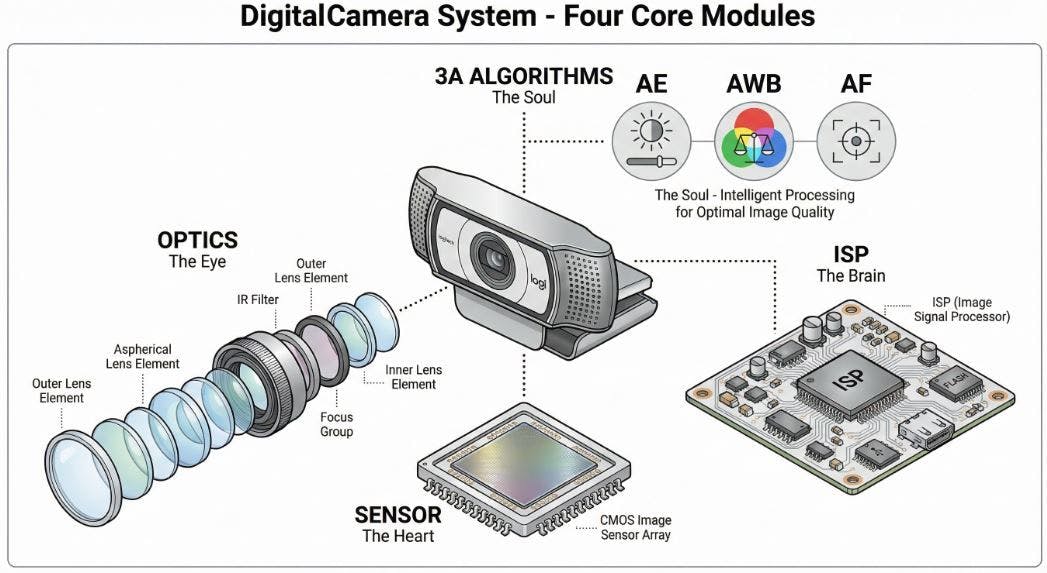

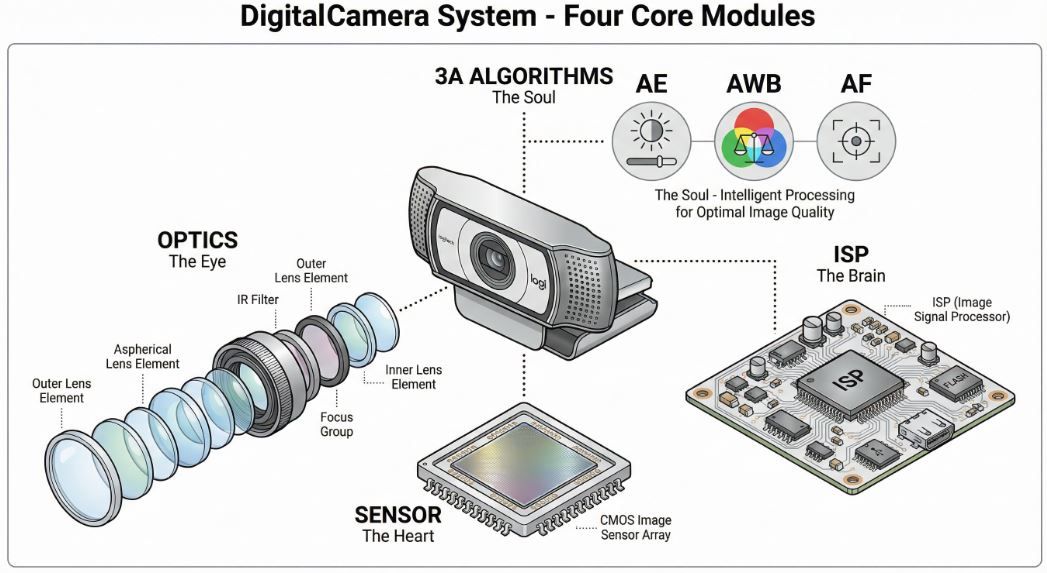

Anatomy of a Digital Camera: The Four Core Modules Every Engineer Should Know

For the vast majority of users, a camera’s quality is judged intuitively: by its aesthetic appeal, its ease of use, and most importantly, the clarity and vibrancy of the photos it produces. For engineers on the front lines of development, however, the picture is entirely different. From an engineering perspective, a camera is not a simple black box but a complex system of precision modules working in concert. Every click of the shutter sets in motion an intricate dance of light, electricity, and algorithms.

In fact, viewing the “camera” from different positions in the industry chain provides vastly different perspectives. At a camera manufacturing plant, engineers focus on assembling various components into a reliable product. At an IC design house, the focus shifts to creating powerful chips. And at a brand-name company, the team must define “good image quality” from the standpoint of system integration and user experience. It is this convergence of diverse perspectives that reveals a profound truth: a high-quality image is never the result of a single component’s merit. Therefore, this series of articles will guide readers through a deconstruction of the camera, delving into its core to explore the four modules that truly determine the success or failure of an image: the Optics, the Sensor, the Image Signal Processor (ISP), and the Control Unit & Algorithms.

Module 1: The Optics (The Eye) – The Art of Capturing Light

The optical system, commonly referred to as the lens, is the “eye” of the camera. Its task seems simple—to precisely gather external light and focus it onto the image sensor—but its interior is a marvel of precision optical engineering. A lens is not just a combination of glass pieces; it consists of multiple lens elements of different materials and curvatures, designed to maximally reproduce the light information of the real world. The quality of the optical system fundamentally sets the ceiling for image quality.

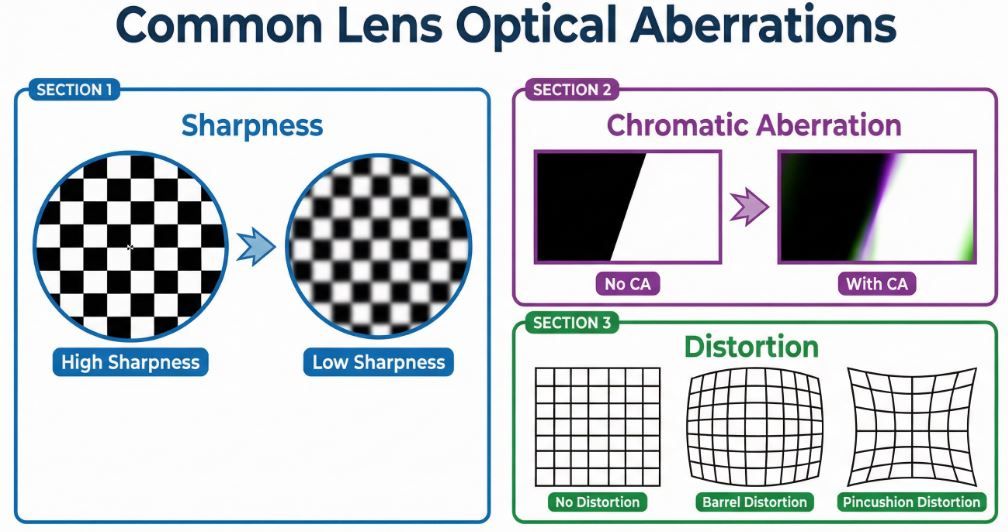

Many users, when evaluating a lens, often focus only on specifications like focal length and aperture. For engineers, however, the key performance indicators hidden beneath the specs carry far greater importance. A subsequent article will delve deeper into how parameters like Sharpness, Chromatic Aberration, and Distortion directly impact the final image quality, uncovering the trade-offs and challenges in optical design.

Module 2: The Sensor (The Heart) – Converting Light into Electricity

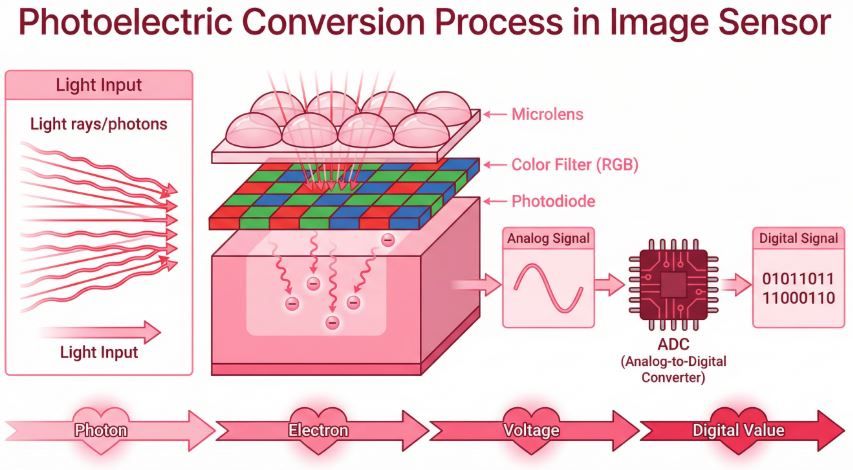

If the optical system is the eye, then the image sensor is undoubtedly the “heart” of the camera. Its core function is “photoelectric conversion,” turning the photons gathered by the lens into electrical signals. This process is the starting point of a digital image, and its efficiency and accuracy are paramount. The two established sensor technologies are CMOS (Complementary Metal-Oxide-Semiconductor) and CCD (Charge-Coupled Device). Thanks to advantages such as low power consumption, high integration, and fast readout speeds, CMOS has become the preferred choice for the vast majority of digital devices.

In actual product development, there is an often-overlooked but crucial concept: “pixel size” is sometimes more important than “pixel count.” Within a limited sensor area, blindly pursuing high pixel counts at the expense of individual pixel size often leads to insufficient light intake, thereby increasing noise and reducing dynamic range. This topic will be explored in greater depth in a future article.
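The pixel-size trade-off above can be illustrated with a rough calculation. The following is a minimal sketch, assuming a simple model in which the noise is photon shot noise plus a fixed read noise. The function name `shot_noise_snr_db` and all default values (photon density, quantum efficiency, read noise) are hypothetical numbers chosen for illustration, not measurements of any real sensor.

```python
import math

def shot_noise_snr_db(pixel_pitch_um, photons_per_um2=100.0,
                      quantum_efficiency=0.6, read_noise_e=2.0):
    """Estimate per-pixel SNR in dB for a square pixel.

    Assumed model: signal electrons scale with pixel area; noise is the
    quadrature sum of shot noise (sqrt of signal) and a fixed read noise.
    """
    area_um2 = pixel_pitch_um ** 2
    signal_e = photons_per_um2 * area_um2 * quantum_efficiency
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)
    return 20.0 * math.log10(signal_e / noise_e)

# Compare three hypothetical pixel pitches under identical illumination.
for pitch in (0.8, 1.4, 2.4):
    print(f"{pitch:.1f} um pixel: {shot_noise_snr_db(pitch):.1f} dB")
```

Under these assumptions, a larger pixel collects more photons in the same exposure, so its per-pixel signal-to-noise ratio is higher. The model ignores effects such as full-well capacity, dark current, and downscaling of the final image, all of which matter in a real comparison.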

Module 3: The Image Signal Processor (The Brain) – From Raw Data to Stunning Images

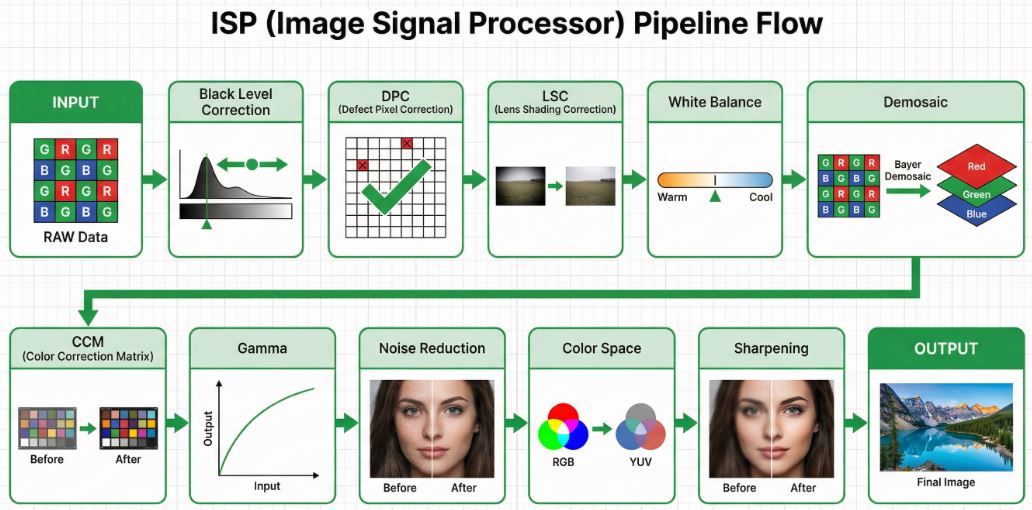

The output from the image sensor is unprocessed RAW data. It records the most primitive light information but is still a long way from the beautiful photos seen on screens. The navigator for this journey is the camera’s “brain”—the Image Signal Processor (ISP). The ISP acts like a powerful “digital darkroom,” receiving RAW data and performing a series of complex calculations in a very short time to ultimately produce a pleasing image.

A typical ISP processing pipeline includes the following key steps:

• Black Level Correction — Corrects the sensor’s baseline black value.

• Defect Pixel Correction (DPC) — Fixes abnormal pixels on the sensor.

• Lens Shading Correction (LSC) — Compensates for brightness fall-off at the edges caused by the lens.

• White Balance (WB) — Corrects color temperature to restore true colors.

• Demosaic / Debayer — Reconstructs a full RGB image from the Bayer pattern.

• Color Correction Matrix (CCM) — Adjusts color accuracy and saturation.

• Gamma Correction — Adjusts the image’s brightness curve to match human perception.

• Noise Reduction (2DNR/3DNR) — Eliminates random noise in the image.

• Color Space Conversion — Converts RGB to YUV or other formats.

• Sharpening / Edge Enhancement — Enhances the clarity of edges in the image.

These seemingly esoteric terms are the steps that turn raw, coarse sensor data into a finished image. A future article will go deep inside the ISP to explain how each of these key steps works and how it influences the final image style.
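To make the pipeline listed above more concrete, here is a minimal sketch of a few of its stages (black level correction, white balance, demosaic, CCM, and gamma) applied to a tiny Bayer mosaic. Everything in it is an assumption made for illustration: the RGGB layout, the 10-bit white level, the gain values, the half-resolution demosaic, and all function names. A production ISP runs these stages in dedicated hardware with far more sophisticated algorithms, and the remaining stages (DPC, LSC, noise reduction, color space conversion, sharpening) are omitted here.

```python
# Illustrative subset of an ISP pipeline on a small RGGB Bayer mosaic.
# All names and default values are assumptions for this sketch.

IDENTITY_CCM = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

def black_level_correction(raw, level):
    """Subtract the sensor's baseline black value, clamping at zero."""
    return [[max(v - level, 0) for v in row] for row in raw]

def white_balance(raw, r_gain, b_gain):
    """Scale R and B sites of an RGGB mosaic; G sites are left unchanged."""
    out = [list(row) for row in raw]
    for y, row in enumerate(out):
        for x, v in enumerate(row):
            if y % 2 == 0 and x % 2 == 0:      # R site
                out[y][x] = v * r_gain
            elif y % 2 == 1 and x % 2 == 1:    # B site
                out[y][x] = v * b_gain
    return out

def demosaic_2x2(raw):
    """Collapse each RGGB 2x2 cell into one RGB pixel (half resolution)."""
    rgb = []
    for y in range(0, len(raw) - 1, 2):
        line = []
        for x in range(0, len(raw[0]) - 1, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2
            b = raw[y + 1][x + 1]
            line.append((r, g, b))
        rgb.append(line)
    return rgb

def color_correction(rgb, ccm):
    """Multiply every RGB pixel by a 3x3 color correction matrix."""
    return [[tuple(sum(ccm[i][j] * px[j] for j in range(3)) for i in range(3))
             for px in row] for row in rgb]

def gamma_correction(rgb, white, gamma=2.2):
    """Normalize to [0, 1], apply a power-law curve, quantize to 8 bits."""
    def encode(v):
        v = min(max(v / white, 0.0), 1.0)
        return round(255 * v ** (1.0 / gamma))
    return [[tuple(encode(c) for c in px) for px in row] for row in rgb]

def run_pipeline(raw, black=64, white=1023, r_gain=2.0, b_gain=2.0,
                 ccm=IDENTITY_CCM):
    raw = black_level_correction(raw, black)
    raw = white_balance(raw, r_gain, b_gain)
    rgb = demosaic_2x2(raw)
    rgb = color_correction(rgb, ccm)
    return gamma_correction(rgb, white - black)

# A 4x4 mosaic of a uniform gray scene: the R and B sites read lower than
# the G sites, which the white balance gains are chosen to compensate.
raw = [
    [164, 264, 164, 264],
    [264, 164, 264, 164],
    [164, 264, 164, 264],
    [264, 164, 264, 164],
]
image = run_pipeline(raw)
print(image)
```

With the gains chosen here, the uniform scene comes out neutral, meaning each output pixel has equal R, G, and B values. The order of the calls in `run_pipeline` mirrors the order of the list above, which is one common arrangement rather than a fixed rule.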

Module 4: The Control Unit & Algorithms (The Soul) – Giving the Camera Intelligence

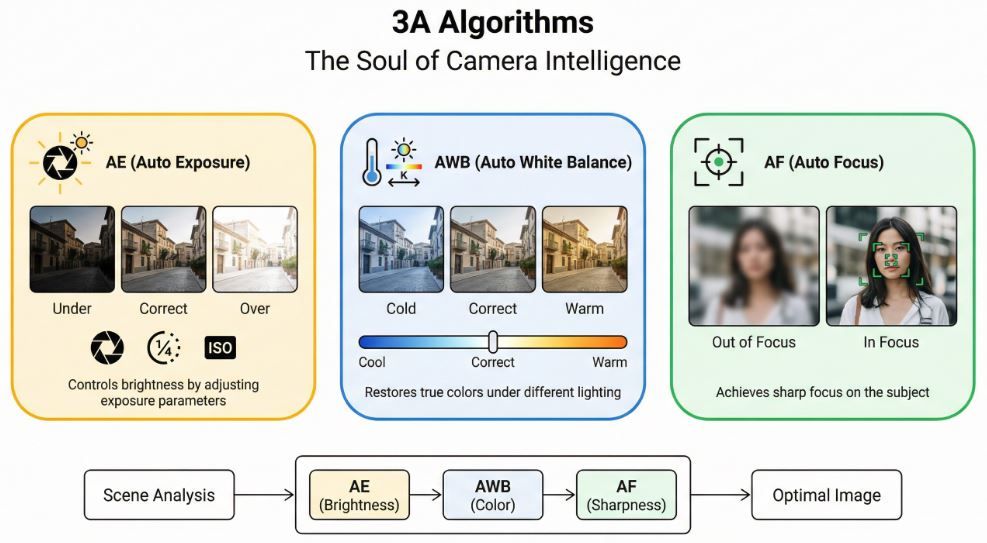

With eyes, a heart, and a brain, a camera still needs a “soul”: the ability to make decisions. In an ever-changing shooting environment, how does a camera know what shutter speed to use? How does it reproduce the true colors of the scene? And how does it accurately focus on the target subject? All of this relies on the camera’s commander: the control unit and the 3A algorithms running on it, namely Auto Exposure (AE), Auto White Balance (AWB), and Auto Focus (AF).

The development of 3A algorithms is an extremely challenging task. They are not just mathematical formulas; they need to simulate human visual perception, enabling the camera to “think” in complex lighting, color, and dynamic environments and make judgments that are closest to what the human eye sees. It can be said that the maturity of the 3A algorithms directly determines the level of a camera’s “intelligence.” A subsequent article will specifically discuss this “soul” that gives the camera its wisdom.
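As a rough illustration of what two of these decisions look like in code, the sketch below shows a gray-world white balance estimate and a damped auto-exposure feedback step. Both are deliberately simple textbook approaches, not the method of any particular camera. The function names, the 0.18 target, the damping factor, the exposure limits, and the linear scene model are all assumptions, and auto focus is left out entirely.

```python
def gray_world_gains(pixels):
    """Estimate (r_gain, b_gain) so that the R and B means match the G mean.

    Gray-world assumption: averaged over the scene, the colors are neutral.
    """
    n = len(pixels)
    r_mean = sum(p[0] for p in pixels) / n
    g_mean = sum(p[1] for p in pixels) / n
    b_mean = sum(p[2] for p in pixels) / n
    return g_mean / r_mean, g_mean / b_mean

def auto_exposure_step(exposure_s, mean_luma, target=0.18, damping=0.5,
                       min_exposure_s=1e-4, max_exposure_s=1 / 30):
    """One auto-exposure iteration: nudge exposure toward the target luma.

    A damping exponent below 1 makes the loop approach the target gradually
    instead of jumping in one step, which avoids visible oscillation.
    """
    ratio = target / max(mean_luma, 1e-6)
    new_exposure = exposure_s * ratio ** damping
    return min(max(new_exposure, min_exposure_s), max_exposure_s)

def simulated_mean_luma(exposure_s, scene_brightness=100.0):
    """Hypothetical scene model: luma grows linearly with exposure, clips at 1."""
    return min(scene_brightness * exposure_s, 1.0)

# Run the exposure loop for a fixed number of frames.
exposure = 1 / 1000
for _ in range(20):
    exposure = auto_exposure_step(exposure, simulated_mean_luma(exposure))
final_luma = simulated_mean_luma(exposure)
print(f"exposure: {exposure:.5f} s, mean luma: {final_luma:.3f}")

# Estimate white balance gains from a few sample pixels with a color cast.
gains = gray_world_gains([(0.2, 0.4, 0.1), (0.4, 0.8, 0.2)])
print(f"r_gain: {gains[0]:.2f}, b_gain: {gains[1]:.2f}")
```

The gains returned by `gray_world_gains` are the kind of parameter the control unit hands to the ISP's white balance stage. The gray-world assumption fails on scenes dominated by a single color, which is one reason practical AWB development is much harder than this sketch suggests.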

Conclusion: A Collaborative Ecosystem

In summary, a high-quality digital image is the result of close collaboration among four core modules: the optical system, the image sensor, the image signal processor, and the control unit with its algorithms. They are interlinked, and each is indispensable. Even the best lens cannot perform to its full potential without an excellent sensor to receive the light. Even the most powerful ISP is useless without accurate parameters provided by precise 3A algorithms. A weakness in any one of these modules becomes the bottleneck for the entire imaging system.

This high-level overview gives readers a systematic framework. The upcoming articles in this series will build on that foundation, starting with the “Optical System,” and dive deep into the core of each of these four modules. Please stay tuned to “An Imaging Engineer’s Notes” to explore the mysteries of digital imaging.