Key Takeaways

- Document processing is critical in enterprise applications. Failure to correctly extract data leads to operational delays, increased manual correction cycles, and higher risk exposure due to regulatory non-compliance.

- Modern document intelligence systems rely on a modular pipeline architecture that typically includes stages for data capture, classification, extraction, enrichment, validation, and consumption.

- Cloud vendors and open-source projects offer a range of document AI options, including managed services such as Google Document AI, Azure Form Recognizer (now Azure AI Document Intelligence), and AWS Textract, and open-source models such as LayoutLM.

- Unstructured documents like contracts, legal memos, or clinical summaries can be analyzed using NLP with pre-trained language models fine-tuned for specific domains (e.g., legal, healthcare).

- Most real-world document processing pipelines can benefit from a hybrid strategy that combines the speed and simplicity of pre-trained APIs with the precision and control of custom models.

The Document Processing Evolution

Optical Character Recognition (OCR) has long served as the backbone of document digitization. Yet, its traditional implementations struggle to cope with today’s complex and varied document landscape. In the enterprise world, documents come in all shapes and forms – scanned contracts, images, emails with embedded tables, and even handwritten notes. Systems based solely on pattern recognition and templates can’t keep up. Their fragility leads to poor performance as soon as the input deviates from expected norms.

The shift is being driven by several forces. First, there’s the rapid growth in the volume and variety of unstructured documents. Businesses deal with everything from free-form emails to heavily formatted statements, and legacy systems are slow to adapt. Second, the pressure to automate high-volume workflows means that manual intervention must be kept to a minimum. Finally, the speed of modern business operations demands near-instant access to structured data extracted from documents.

When legacy systems fall short, it creates a ripple effect. Failure to correctly extract data leads to operational delays, increased manual correction cycles, and higher risk exposure due to regulatory non-compliance. These challenges require a more intelligent, adaptive approach to document processing – one that interprets documents in context, not just by visual structure.

A Real-World Use Case: Improving Mortgage Application Workflows

Consider a mortgage lender that processes thousands of loan applications daily. Each application requires a diverse set of supporting documents, including pay stubs, tax returns, proof of identity, bank statements, and employment letters.

These documents arrive in different formats, often scanned, photographed, or downloaded from various portals. Many are poorly formatted or contain handwriting, making them difficult to process using conventional systems.

The business challenge is straightforward. Reviewing these documents manually takes time and resources. Each application might take one to two days to move through verification, especially when the team needs to cross-check income, match signatures, or validate account balances. With rising customer expectations for quick approvals and strict regulatory checks in place, this delay becomes a serious bottleneck.

Older OCR-based systems do help, but they tend to break down when documents deviate even slightly from expected layouts. A minor change in form structure or a blurry scan can require full human intervention, adding hours to the process and increasing the risk of errors.

This is where a modern document intelligence pipeline becomes useful. By breaking the workflow into modular stages, lenders can process documents much faster and more accurately.

The Six-Stage Document Pipeline

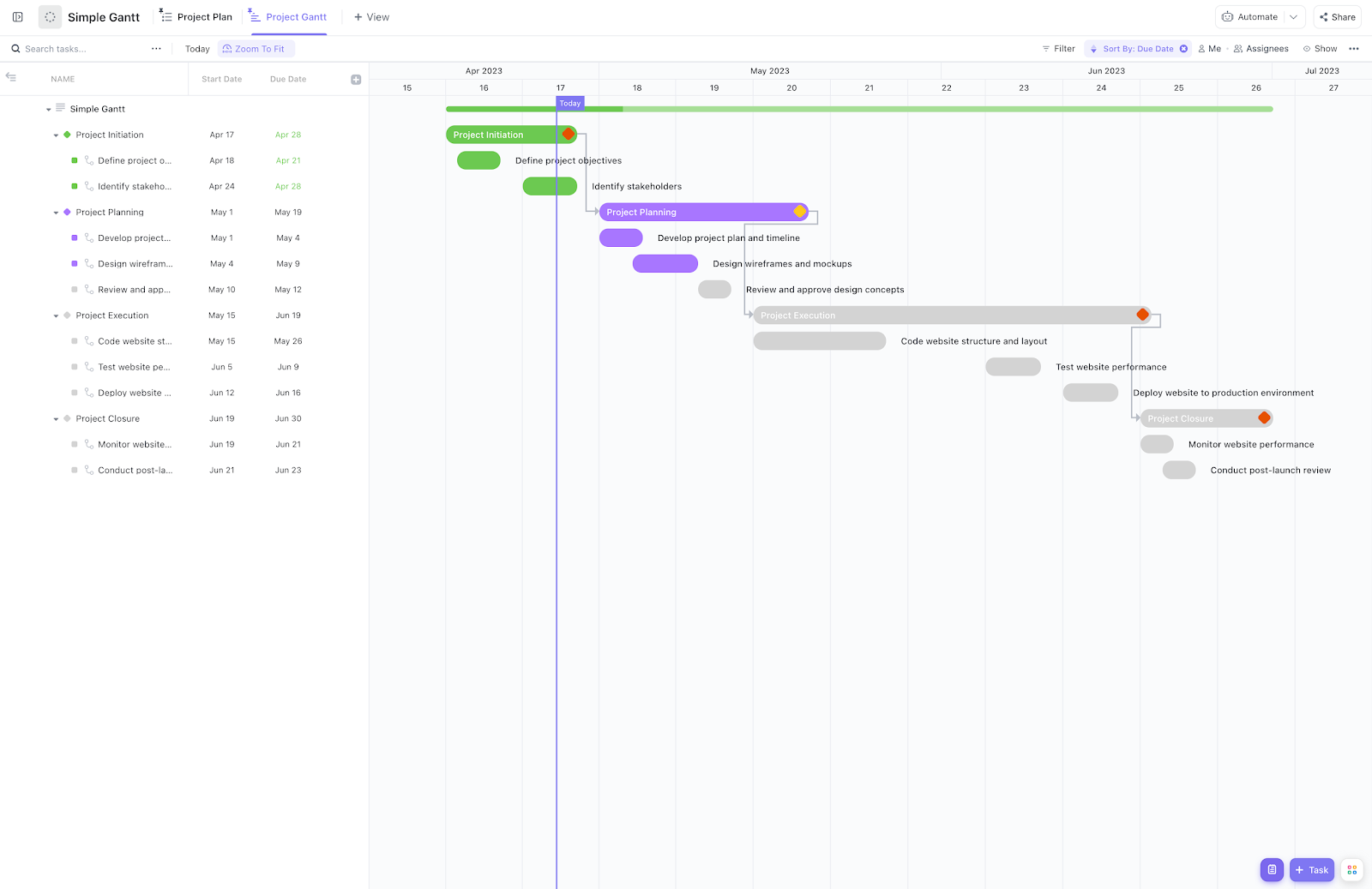

Modern document intelligence systems rely on a modular pipeline architecture, where each stage handles a specific responsibility. This design offers resilience and flexibility, letting teams upgrade individual stages as technology evolves or business needs shift.

These stages include:

- Data Capture: Ingest documents from a variety of sources such as scanned uploads, email attachments, mobile apps, and cloud storage buckets. Event-driven entry points, such as AWS Lambda functions triggered by S3 uploads or Google Cloud Functions triggered by Cloud Storage events, are common.

- Classification: Determine the type of document, whether it’s a bank statement, medical record, invoice, or tax form. Teams can use transformer-based classifiers (e.g., fine-tuned BERT or RoBERTa models) or cloud-native tools like Google Document AI.

- Extraction: Identify and extract key-value pairs, tables, or paragraphs. Layout-aware models such as LayoutLM or services like AWS Textract and Azure Form Recognizer are commonly used here.

- Enrichment: Add context to raw extractions by linking terms to known ontologies, applying business rules, or querying external knowledge graphs.

- Validation: Assess extraction quality using confidence scores or rule-based validators. Ambiguous cases are routed to human reviewers where needed.

- Consumption: Push structured outputs into downstream systems like ERPs, CRMs, or analytics dashboards through APIs or message queues.

Figure 1. AI-powered document processing pipeline

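To make the stage boundaries concrete, here is a minimal sketch assuming each stage is a plain Python function that receives and returns a shared context dictionary. The `run_pipeline` helper and the stub stages are hypothetical, and only four of the six stages are shown; enrichment and consumption would plug in the same way. In production, each stage would more likely be a separate service connected by queues.

```python
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Stage = Callable[[Context], Context]


def run_pipeline(stages: List[Stage], context: Context) -> Context:
    """Run each stage in order; every stage returns a new, extended context."""
    for stage in stages:
        context = stage(context)
    return context


# Hypothetical stub stages; any one can be swapped without touching the others.
def capture(ctx: Context) -> Context:
    return {**ctx, "object_key": "paystubs/applicant_1234.pdf"}


def classify(ctx: Context) -> Context:
    doc_type = "Pay Stub" if "paystubs" in ctx["object_key"] else "Unknown Document"
    return {**ctx, "doc_type": doc_type}


def extract(ctx: Context) -> Context:
    # Placeholder for a Textract, Azure, or LayoutLM call.
    fields = {"Gross Income": {"value": "$6,200.00", "confidence": 97.0}}
    return {**ctx, "fields": fields}


def validate(ctx: Context) -> Context:
    low = [name for name, f in ctx["fields"].items() if f["confidence"] < 85]
    return {**ctx, "needs_review": bool(low)}


result = run_pipeline([capture, classify, extract, validate], {})
```

Because stages communicate only through the context, replacing the stub `extract` with a LayoutLM-based version would not require changes to `classify` or `validate`.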

Pre-Trained Models: Fit for Purpose

While cloud vendors and open-source tools offer a range of document AI services, no one-size-fits-all model exists. Each comes with trade-offs around transparency, tuning flexibility, cost, and performance.

In the mortgage industry, teams often rely on Textract to pull information from standard documents like pay stubs and bank statements. It works well when the layout is clean and predictable, but when formatting gets tricky it can produce inconsistent or overly verbose output that adds noise instead of clarity.

LayoutLM, an open-source model that combines spatial layout with language, helps with more irregular inputs such as handwritten W-2s or documents with mixed layouts (it works from OCR output, so handwriting accuracy still depends on the upstream OCR engine), but it demands GPU resources and engineering expertise. Google Document AI offers robust layout understanding and solid NLP integration but lacks deep customization. Azure Form Recognizer is a middle-ground option with custom training capabilities, though it may require labeled examples. Lightweight options such as Tesseract OCR, often paired with OpenCV preprocessing, remain useful for clean, low-complexity scans.

Combining models, an approach known as ensembling, is often the best strategy. In highly regulated domains like finance or healthcare, precision matters most. Here, LayoutLM and Textract might be paired, with their outputs cross-validated by rules engines or human reviewers. In contrast, for use cases like retail receipt parsing, where throughput matters more than absolute precision, Azure Form Recognizer combined with heuristic fallbacks may suffice.

Figure 2. Document processing – High precision vs High throughput flow

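The cross-validation step in the high-precision flow can be sketched as follows, assuming both extractors return a `{field: {"value", "confidence"}}` dictionary (the format used by the sample application later in this article). Fields whose normalized values agree are accepted; anything missing or conflicting is escalated to a rules engine or human reviewer. The `reconcile` function and its normalization rule are illustrative, not part of any vendor API.

```python
from typing import Any, Dict, Tuple

Fields = Dict[str, Dict[str, Any]]


def _normalize(value: Any) -> str:
    """Lower-case and drop whitespace, '$' and ',' before comparing."""
    return "".join(str(value).lower().replace("$", "").replace(",", "").split())


def reconcile(primary: Fields, secondary: Fields) -> Tuple[Dict[str, Any], Dict[str, tuple]]:
    """Split fields into those both extractors agree on and those needing escalation."""
    agreed: Dict[str, Any] = {}
    conflicts: Dict[str, tuple] = {}
    for name in primary.keys() | secondary.keys():
        a, b = primary.get(name), secondary.get(name)
        if a and b and _normalize(a["value"]) == _normalize(b["value"]):
            agreed[name] = a["value"]
        else:
            # Missing from one model, or the values differ: route to rules/human review.
            conflicts[name] = (a["value"] if a else None, b["value"] if b else None)
    return agreed, conflicts


# Hypothetical outputs from two extractors for the same pay stub.
textract_out = {"Gross Income": {"value": "$6,200.00", "confidence": 96.0},
                "Employer": {"value": "Acme Corp", "confidence": 91.0}}
layoutlm_out = {"Gross Income": {"value": "6200.00", "confidence": 88.0},
                "Employer": {"value": "Acme Corp.", "confidence": 90.0}}
agreed, conflicts = reconcile(textract_out, layoutlm_out)
```

Here the income values agree once formatting is normalized, while the trailing period in "Acme Corp." is enough to escalate `Employer`; how aggressively to normalize is a per-field design decision.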

Decoding Visual Cues in Documents

Beyond textual content, many documents include visual markers that convey key meaning – checkboxes, tables, signatures, stamps, and logos. Traditional OCR typically ignores or misreads these.

Computer vision methods help bridge this gap. Object detection models such as YOLO and Faster R-CNN can locate elements like checkboxes or logos. Image segmentation techniques help parse tables and structured layouts. Tools like OpenCV assist in preprocessing: removing noise, correcting skew, and enhancing contrast. LayoutLM, meanwhile, combines 2D position embeddings derived from each token’s bounding box with language modeling, preserving layout context throughout the document.

Together, these tools allow systems to interpret not just the words on a page but how they’re presented – and why that matters.
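As a small illustration of reading a visual cue, the sketch below decides whether an already-located checkbox is ticked. It assumes a detector (such as YOLO or Faster R-CNN) has supplied the checkbox crop as a grayscale grid of pixel rows (0 = black, 255 = white), and it uses a simple ink-ratio heuristic rather than a learned model. The margin and thresholds are illustrative values that would need tuning on real scans.

```python
from typing import List


def checkbox_is_checked(crop: List[List[int]], margin: int = 2,
                        dark_level: int = 128, fill_threshold: float = 0.2) -> bool:
    """Return True if enough of the checkbox interior is covered by ink.

    `margin` pixels are trimmed from every side so the printed border
    of the box is not mistaken for a tick mark.
    """
    interior = [row[margin:len(row) - margin] for row in crop[margin:len(crop) - margin]]
    pixels = [p for row in interior for p in row]
    if not pixels:
        return False
    dark = sum(1 for p in pixels if p < dark_level)
    return dark / len(pixels) >= fill_threshold


def blank_box(size: int = 10) -> List[List[int]]:
    """Synthetic white checkbox with a one-pixel black border."""
    box = [[255] * size for _ in range(size)]
    for i in range(size):
        box[0][i] = box[-1][i] = box[i][0] = box[i][-1] = 0
    return box


empty = blank_box()
ticked = blank_box()
for i in range(10):
    ticked[i][i] = ticked[i][9 - i] = 0  # draw an "X" through the box
```

Trimming the border first matters: without it, the printed outline alone would push an empty box toward the threshold.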

Working with Unstructured Documents

Unstructured documents, such as contracts, legal memos, or clinical summaries, lack clearly defined fields. Extracting meaning from them requires context awareness.

This is where NLP takes center stage. Pre-trained language models fine-tuned for specific domains (e.g., legal, healthcare) can identify key entities such as names, dates, medications, or obligations. Sentence embeddings let the system group semantically similar passages. Hybrid approaches that combine linguistic cues with layout features often achieve the best results.

Such techniques are especially valuable in regulated sectors where subtle context or phrasing changes the meaning of a document entirely.
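To illustrate how sentence embeddings group similar passages: given one vector per passage (produced by whichever embedding model a team chooses, which is assumed here), a simple greedy pass assigns each passage to the first group whose representative is within a cosine-similarity threshold. The toy 3-dimensional vectors and the 0.9 threshold are made up for illustration; real embeddings have hundreds of dimensions.

```python
import math
from typing import Dict, List


def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def group_passages(embeddings: Dict[str, List[float]], threshold: float = 0.9) -> List[List[str]]:
    """Greedy grouping: compare each passage with the first member of each group."""
    groups: List[List[str]] = []
    for passage_id, vec in embeddings.items():
        for group in groups:
            if cosine(vec, embeddings[group[0]]) >= threshold:
                group.append(passage_id)
                break
        else:  # no sufficiently similar group: start a new one
            groups.append([passage_id])
    return groups


# Toy vectors standing in for real sentence embeddings of contract clauses.
toy = {
    "termination_clause": [0.9, 0.1, 0.0],
    "early_exit_clause": [0.85, 0.15, 0.05],
    "payment_terms": [0.1, 0.9, 0.2],
}
groups = group_passages(toy)
```

The two clauses about ending the contract land in one group even though they share no wording assumptions in this sketch, because only their vectors are compared; larger corpora would typically use a proper clustering algorithm instead of this greedy pass.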

Cloud Services: How to Choose Wisely

Cloud-based document AI services have made large-scale document processing far more accessible. They provide pre-built APIs for OCR, classification, entity extraction, and in some cases summarization. These tools deliver quick results, but no two platforms offer identical capabilities. Each vendor has distinct strengths, so selecting the right services largely determines the success of a production-grade system.

The following sections compare the major providers to help you make architectural decisions that serve your business objectives within your technical constraints.

AWS Textract

Amazon’s Textract service is a popular choice for enterprise users already embedded in the AWS ecosystem. It’s particularly strong when it comes to structured form and table extraction. Its key capabilities include:

- Auto-detection of key-value pairs, tables, and checkboxes

- Seamless integration with services like AWS Lambda, S3, Comprehend, and Step Functions

- Moderate layout awareness, especially for forms

However, Textract tends to produce verbose and sometimes redundant output, and its performance on visually complex or degraded documents can be inconsistent. Costs can also scale quickly at high page volumes, especially with the AnalyzeDocument API, which bills each requested feature (such as Forms and Tables) separately per page.

Best suited for: Form-heavy workflows, such as invoices, receipts, loan applications, or W-2 documents in financial services or HR.
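Because AnalyzeDocument bills each requested feature per page, cost grows with both page volume and the number of features enabled. The back-of-the-envelope sketch below makes that arithmetic explicit. The rates are placeholders chosen for round numbers, not AWS’s published prices, which vary by feature, region, and volume tier.

```python
from typing import Dict, Iterable


def estimate_monthly_cost(pages_per_month: int, features: Iterable[str],
                          rate_per_1000_pages: Dict[str, float]) -> float:
    """Each requested feature is billed per page, so per-page rates add up."""
    per_page = sum(rate_per_1000_pages[feature] / 1000 for feature in features)
    return pages_per_month * per_page


# PLACEHOLDER rates per 1,000 pages: replace with current figures from AWS pricing.
placeholder_rates = {"FORMS": 40.0, "TABLES": 10.0}
forms_only = estimate_monthly_cost(200_000, ["FORMS"], placeholder_rates)
forms_and_tables = estimate_monthly_cost(200_000, ["FORMS", "TABLES"], placeholder_rates)
```

With these placeholder rates, adding Tables to a Forms-only workload raises the estimate from 8,000 to 10,000 per month, which is why requesting only the features a document type actually needs matters at scale.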

Google Document AI

Google’s offering focuses on pre-trained processors for specific document types (e.g., invoices, identity documents, W-9s) and integrates closely with its NLP platform. Its main advantages:

- Strong semantic parsing and contextual understanding of language

- Effective for unstructured and semi-structured documents

- High OCR accuracy, especially with multilingual and handwritten inputs

However, limited model transparency and customization make it less appealing for organizations needing control over fine-tuned behavior. Google’s Document AI excels when document types are known and supported but is less flexible when new formats are introduced.

Best suited for: Enterprises looking for out-of-the-box intelligence for common documents, especially in industries like logistics, travel, and customer service.

Azure AI Document Intelligence

Microsoft’s Azure Document Intelligence (formerly Form Recognizer) stands out for its custom training capabilities. Key strengths include:

- Custom model training from labeled examples (earlier Form Recognizer API versions also supported training on unlabeled documents)

- Support for form fields, tables, and selection marks

- Document classification, layout APIs, and model versioning

Azure also offers tighter model lifecycle management, which makes it suitable for internal DevOps teams building MLOps pipelines around document processing. However, it still requires significant data preparation and can be sensitive to template variations.

Best suited for: Organizations seeking a balance between flexibility and ease of use, such as in healthcare, insurance, and compliance-heavy sectors.

When and Why to Use a Hybrid Approach

Relying entirely on a single cloud provider can create blind spots. Most real-world pipelines benefit from a hybrid strategy that combines the speed and simplicity of pre-trained APIs with the precision and control of custom models.

Mortgage lenders, for instance, might combine Textract with custom-trained models and a human review step for higher-risk scenarios such as validating income from self-employed applicants. This layered approach helps ensure accuracy while managing compliance risk.

For example:

- Use AWS Textract or Azure Form Recognizer for extracting structured fields from standard forms.

- Augment with open-source models like LayoutLM for documents that require fine-grained layout or domain-specific semantics.

- Introduce a validation layer that scores confidence and routes uncertain outputs to a human reviewer or custom model.

- Mix in Google Document AI for semantic classification, summarization, or entity linkage across long documents.

Additionally, using orchestration tools like Apache Airflow, Kubernetes, or Azure Logic Apps helps blend these services into a cohesive, scalable pipeline.
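A minimal sketch of such a hybrid router, assuming each extractor is wrapped as a function that returns `{field: {"value", "confidence"}}`. The stub extractors, the document-type mapping, and the 85-point threshold are hypothetical; in practice the stubs would wrap Textract, Azure, or a fine-tuned LayoutLM endpoint.

```python
from typing import Any, Callable, Dict

Fields = Dict[str, Dict[str, Any]]
Extractor = Callable[[bytes], Fields]


def route_document(doc_type: str, document: bytes, managed: Dict[str, Extractor],
                   custom: Extractor, threshold: float = 85.0) -> Dict[str, Any]:
    """Managed API for known types, custom model as fallback, humans as last resort."""

    def uncertain(fields: Fields) -> bool:
        # An empty result is treated as uncertain rather than silently accepted.
        return not fields or any(f["confidence"] < threshold for f in fields.values())

    extractor = managed.get(doc_type, custom)
    fields = extractor(document)
    if extractor is not custom and uncertain(fields):
        fields = custom(document)  # second opinion from the custom model
    return {"fields": fields, "route": "human_review" if uncertain(fields) else "auto"}


# Hypothetical stand-ins for a managed API (e.g., Textract) and a fine-tuned model.
def managed_api(document: bytes) -> Fields:
    return {"Gross Income": {"value": "$6,200.00", "confidence": 72.0}}


def custom_model(document: bytes) -> Fields:
    return {"Gross Income": {"value": "$6,200.00", "confidence": 93.0}}


paystub = route_document("Pay Stub", b"<pdf bytes>", {"Pay Stub": managed_api}, custom_model)
```

In this example the managed API’s low confidence triggers the custom model, whose higher-confidence result is accepted automatically; only when both fall short does the document reach a human.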

Mapping Code to the Document Intelligence Pipeline

The sample application illustrates key stages of the six-stage pipeline discussed in Figure 1:

```text
modular_pipeline/
├── capture.py
├── classify.py
├── extract.py
├── enrich.py
├── validate.py
└── consume.py
```

Step 1: Data Capture (simulated S3 upload trigger)

```python
# capture.py
def simulate_data_capture():
    # Mimics the bucket/key pair an S3 upload event would hand to the pipeline.
    return {
        "bucket": "mortgage-uploads",
        "object_key": "paystubs/applicant_1234.pdf",
    }
```

Step 2: Classification (simple path-based categorization)

```python
# classify.py
def classify_document(object_key):
    if "paystubs" in object_key:
        return "Pay Stub"
    return "Unknown Document"
```

Step 3: Extraction (AWS Textract AnalyzeDocument call)

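```python
# extract.py
import boto3


def _related_ids(block, rel_type):
    return [i for rel in block.get("Relationships", [])
            if rel["Type"] == rel_type for i in rel["Ids"]]


def _block_text(block, blocks_by_id):
    """Join the words (and checkbox states) under a block's CHILD relationships."""
    parts = []
    for child in (blocks_by_id[i] for i in _related_ids(block, "CHILD")):
        if child["BlockType"] == "WORD":
            parts.append(child["Text"])
        elif child["BlockType"] == "SELECTION_ELEMENT":
            parts.append(child["SelectionStatus"])  # SELECTED or NOT_SELECTED
    return " ".join(parts)


def extract_data(bucket, object_key):
    textract = boto3.client("textract")
    response = textract.analyze_document(
        Document={"S3Object": {"Bucket": bucket, "Name": object_key}},
        FeatureTypes=["FORMS"],
    )
    blocks_by_id = {b["Id"]: b for b in response["Blocks"]}
    extracted = {}
    for block in response["Blocks"]:
        # Form fields arrive as KEY blocks linked to VALUE blocks via a "VALUE" relationship.
        if block["BlockType"] == "KEY_VALUE_SET" and "KEY" in block.get("EntityTypes", []):
            key = _block_text(block, blocks_by_id).rstrip(":").strip()
            value_ids = _related_ids(block, "VALUE")
            val = " ".join(_block_text(blocks_by_id[i], blocks_by_id) for i in value_ids)
            # Keep the weaker of the key and value confidences (0-100 scale).
            confs = [block["Confidence"]] + [blocks_by_id[i]["Confidence"] for i in value_ids]
            extracted[key] = {"value": val, "confidence": min(confs)}
    return extracted
```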

Step 4: Enrichment (rule-based logic, e.g., inferring pay frequency)

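```python
# enrich.py
def enrich_data(extracted_data):
    """Add derived fields using simple business rules."""
    enriched_data = extracted_data.copy()  # leave the caller's dict untouched
    try:
        raw = enriched_data["Gross Income"]["value"]
        income = float(raw.replace("$", "").replace(",", ""))  # handles "6,200.00"
        # Illustrative rule only: treat large per-period amounts as monthly pay.
        enriched_data["Pay Frequency"] = "Monthly" if income > 5000 else "Biweekly"
    except (KeyError, ValueError, AttributeError):
        # Field missing, not numeric, or not a string.
        enriched_data["Pay Frequency"] = "Unknown"
    return enriched_data
```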

Step 5: Validation (confidence scoring to flag documents for review)

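```python
# validate.py
CONFIDENCE_THRESHOLD = 85  # Textract reports confidence on a 0-100 scale


def validate_data(enriched_data):
    """Return True (review required) if any extracted field is below the threshold."""
    return any(
        isinstance(info, dict) and info.get("confidence", 100) < CONFIDENCE_THRESHOLD
        for info in enriched_data.values()
    )
```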

Step 6: Consumption (downstream integration or manual-review routing)

```python
# consume.py
def consume_data(enriched_data, review_required):
    if review_required:
        return "Flagged for manual review"
    return f"Data stored: {enriched_data}"
```

This workflow can be easily extended to support more document types, incorporate layout-aware models like LayoutLM, and plug into orchestration systems like Airflow or Step Functions for production-scale deployment.
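One way to extend classification beyond the single hard-coded check in `classify.py` is a small rule registry, sketched below. The path prefixes and document labels are hypothetical examples; a production system would typically back these rules with a trained classifier for documents whose storage path says nothing about their type.

```python
from typing import List, Tuple

# Hypothetical (path prefix, document type) rules, checked in order.
CLASSIFICATION_RULES: List[Tuple[str, str]] = [
    ("paystubs/", "Pay Stub"),
    ("tax_returns/", "Tax Return"),
    ("bank_statements/", "Bank Statement"),
    ("ids/", "Identification"),
]


def classify_by_path(object_key: str,
                     rules: List[Tuple[str, str]] = CLASSIFICATION_RULES) -> str:
    """Return the first matching document type, or a catch-all label."""
    for prefix, doc_type in rules:
        if object_key.startswith(prefix):
            return doc_type
    return "Unknown Document"
```

Adding a document type then means appending one rule (and registering an extractor for it) rather than editing branching logic; matching on prefixes instead of substrings avoids accidental hits such as `ids/` inside another folder name.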

Scaling Architecture for the Real World

Scalability isn’t a nice-to-have – it’s a necessity. Systems must accommodate batch loads, real-time submissions, and everything in between.

Mortgage pipelines, for example, often experience sudden bursts during interest rate changes or policy announcements. In such cases, microservice-based architectures and queueing systems like Kafka help absorb the load and maintain smooth throughput.
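The load-leveling effect of a queue can be illustrated without Kafka. In this sketch an `asyncio.Queue` stands in for a Kafka topic: a burst of submissions is enqueued as fast as the queue accepts them, while a fixed pool of workers drains it at a sustainable rate. The worker count, queue size, and simulated processing delay are arbitrary assumptions.

```python
import asyncio
from typing import List


async def worker(queue: asyncio.Queue, done: List[str]) -> None:
    """Consume documents forever; cancelled once the queue has been drained."""
    while True:
        doc_id = await queue.get()
        await asyncio.sleep(0.01)  # stand-in for OCR and extraction work
        done.append(doc_id)
        queue.task_done()


async def process_burst(doc_ids: List[str], workers: int = 4, max_queue: int = 100) -> List[str]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=max_queue)
    done: List[str] = []
    tasks = [asyncio.create_task(worker(queue, done)) for _ in range(workers)]
    for doc_id in doc_ids:
        await queue.put(doc_id)  # waits (backpressure) only if the queue is full
    await queue.join()  # returns once every queued document is marked done
    for task in tasks:
        task.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return done


# A burst of 50 submissions, e.g. after an interest-rate announcement.
processed = asyncio.run(process_burst([f"application-{n}" for n in range(50)]))
```

With Kafka, the producers and the worker pool would run as separate services and the topic would persist messages across restarts; the in-process queue here only demonstrates the decoupling idea.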

A robust architecture typically features:

- Microservices that isolate each pipeline stage

- Kafka or Pub/Sub queues for decoupled communication

- Kubernetes for container orchestration and scaling

- Redis for caching common lookups or inference results

- Object and relational storage to handle raw files and structured outputs

Figure 3. Document processing – scaling with event driven architecture

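Caching inference results works best when the key identifies the document content rather than its file name, so re-uploads of the same scan are not re-processed. This sketch uses a SHA-256 digest as the key and a plain dictionary as a stand-in for Redis; with a real Redis client, the lookups and writes would go to the server instead. The `InferenceCache` class and the counting stub extractor are hypothetical.

```python
import hashlib
from typing import Callable, Dict


class InferenceCache:
    """Content-addressed cache of extraction results; a dict stands in for Redis."""

    def __init__(self) -> None:
        self._store: Dict[str, dict] = {}

    def cached_extract(self, document: bytes, extract: Callable[[bytes], dict],
                       model_version: str = "v1") -> dict:
        # Including the model version means an upgrade never serves stale results.
        key = f"{model_version}:{hashlib.sha256(document).hexdigest()}"
        if key not in self._store:
            self._store[key] = extract(document)
        return self._store[key]


calls = []


def fake_extract(document: bytes) -> dict:
    """Hypothetical extractor stub that records how often it is invoked."""
    calls.append(document)
    return {"pages": 1}


cache = InferenceCache()
first = cache.cached_extract(b"%PDF-1.7 paystub", fake_extract)
second = cache.cached_extract(b"%PDF-1.7 paystub", fake_extract)
```

The second call is served from the cache, so the (expensive) extractor runs only once for identical bytes.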

Implementation Challenges You’ll Actually Face

Deploying such systems in real-world environments reveals tough problems:

- Security: Encrypt documents in transit and at rest, especially for PII.

- Data labeling: Domain-specific training data is expensive to curate.

- Operational cost: OCR and NLP inference can be compute-heavy.

- Quality assurance: Evaluate at field-level accuracy, not just pass/fail.

- Human-in-the-loop: Still critical for error-prone or high-risk extractions.
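Field-level evaluation means scoring each extracted field against labeled ground truth rather than marking a whole document right or wrong. A minimal sketch, assuming aligned lists of predictions and labels and exact string matching after trimming whitespace (real evaluations often add per-field normalization for dates and amounts):

```python
from typing import Dict, List


def field_accuracy(predictions: List[Dict[str, str]],
                   ground_truth: List[Dict[str, str]]) -> Dict[str, float]:
    """Per-field accuracy across documents; a missing prediction counts as wrong."""
    correct: Dict[str, int] = {}
    total: Dict[str, int] = {}
    for pred, truth in zip(predictions, ground_truth):
        for field, expected in truth.items():
            total[field] = total.get(field, 0) + 1
            if pred.get(field, "").strip() == expected.strip():
                correct[field] = correct.get(field, 0) + 1
    return {field: correct.get(field, 0) / n for field, n in total.items()}


truth = [{"Employer": "Acme Corp", "Gross Income": "$6,200.00"},
         {"Employer": "Globex", "Gross Income": "$4,850.00"}]
preds = [{"Employer": "Acme Corp", "Gross Income": "$6,200.00"},
         {"Employer": "Globex", "Gross Income": "$4,580.00"}]  # transposed digits
scores = field_accuracy(preds, truth)
```

A document-level pass/fail metric would score this batch at 50%, hiding that `Employer` is always right while `Gross Income` is where the errors concentrate.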

Industry-Proven Case Studies

- J.P. Morgan’s COiN (Contract Intelligence) platform uses artificial intelligence to review complex legal contracts, reportedly reducing work that once consumed 360,000 hours a year to a matter of seconds and making the bank’s contract review both faster and more accurate.

- Pennymac reduced its document processing time from several hours to just minutes.

What’s Next for Document Intelligence

The field of document intelligence is undergoing a fundamental transformation, moving beyond simple field extraction into a new era of semantic understanding and continuous learning. Emerging technologies are enabling systems not only to read documents but to comprehend their structure, intent, and relevance in context. Four key innovation frontiers are shaping the future of enterprise-grade document processing:

Multimodal AI Models for Richer Comprehension

Traditional document processing systems rely primarily on text data, often supplemented by basic layout information. However, the next generation of document AI leverages multimodal learning, which combines three critical inputs:

- Text (semantic content)

- Layout (spatial relationships between elements)

- Visual features (images, checkboxes, logos, stamps)

Models like LayoutLMv3 and DocFormer are pioneering this space by embedding these modalities into a unified representation. This can substantially improve accuracy, especially for documents with complex formatting such as forms, reports, medical prescriptions, and contracts. For instance, whether a checkbox is selected, or how a signature visually anchors nearby content, cannot be captured by text alone; it requires multimodal context.

As more industry-specific pre-trained models become available, organizations will be able to fine-tune them on small datasets and achieve strong results without large annotation efforts.

Contextual Reasoning and Automated Summarization

Merely extracting data is no longer enough. Organizations need systems that reason over document content to determine meaning, make inferences, and provide actionable insights. This shift is driving advancements in:

- Automated summarization, where models can condense long documents into decision-ready abstracts (e.g., summarizing a 20-page legal contract into key obligations and risks).

- Contextual understanding, where systems consider surrounding text, domain-specific ontologies, and historical patterns to infer intent and relationships.

For example, in insurance claims it’s not sufficient to extract dates and policy numbers; the system must also judge whether a claim is legitimate, urgent, or potentially fraudulent based on the document’s context and past cases. GPT-style transformers fine-tuned on structured content are playing a major role in this transformation.

Intelligent Workflow Orchestration Based on Confidence and Risk

Current AI document pipelines often apply the same rules across all inputs. However, future-ready systems will incorporate intelligent routing mechanisms that adapt based on the confidence level of extracted data and the business risk associated with errors.

Consider these real-world strategies:

- A mortgage processing system could automatically escalate documents with low extraction confidence or inconsistent values to a human underwriter.

- A retail system, on the other hand, might silently accept lower-confidence results if they pertain to low-value transactions like receipt scanning.

This adaptive routing is powered by a blend of confidence scoring, business rule engines, and risk-weighted decision trees, ensuring that human effort is applied where it truly adds value.
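One simple way to express confidence- and risk-based routing is to make the acceptance threshold a function of business risk. The sketch below is illustrative only: the risk tiers, thresholds, and the 100-unit cutoff for low-value receipts are invented for the example, not recommended values.

```python
from dataclasses import dataclass

# Hypothetical minimum confidence (0-100) for straight-through processing per risk tier.
THRESHOLDS = {"high": 95.0, "medium": 85.0, "low": 60.0}
HIGH_RISK_TYPES = {"Pay Stub", "Tax Return", "Bank Statement"}


@dataclass
class Extraction:
    doc_type: str
    confidence: float  # lowest field confidence in the document
    amount: float      # monetary value the document affects


def risk_tier(item: Extraction) -> str:
    if item.doc_type in HIGH_RISK_TYPES:
        return "high"  # feeds a lending decision
    if item.doc_type == "Receipt" and item.amount < 100:
        return "low"   # low-value retail transaction
    return "medium"


def route_by_risk(item: Extraction) -> str:
    threshold = THRESHOLDS[risk_tier(item)]
    return "auto_accept" if item.confidence >= threshold else "human_review"
```

A confidence of 90 sends a pay stub to an underwriter, while 70 is enough for a small receipt; that asymmetry is the point of risk-weighted routing.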

Continuous Human-in-the-Loop Learning for Evolving Accuracy

Static models degrade over time as document templates evolve, regulations change, and user expectations shift. The next phase of document intelligence will embrace human-machine feedback loops, where insights from manual review and exception handling are systematically reintegrated into model retraining.

This involves:

- Capturing corrections made by human reviewers

- Logging edge cases where models fail or abstain

- Using weak supervision or reinforcement learning to improve future performance

Platforms that support active learning pipelines, where human input directly informs model refinement, are likely to outperform those that require periodic, manual retraining. This is especially critical in regulated environments where traceability, transparency, and accuracy are non-negotiable.
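Two pieces of such a loop can be sketched in a few lines, under stated assumptions: reviewer corrections are appended to a JSON Lines file that a later retraining job consumes, and uncertainty sampling (one common active-learning strategy, used here in place of whatever selection policy a given platform applies) decides which documents reviewers see first. The file layout, record fields, and budget are hypothetical; storing `model_version` with each correction supports the traceability regulated environments require.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def log_correction(log_path: Path, doc_id: str, field: str, predicted: str,
                   corrected: str, model_version: str) -> None:
    """Append one reviewer correction as a labeled example for later retraining."""
    record = {"doc_id": doc_id, "field": field, "predicted": predicted,
              "label": corrected, "model_version": model_version}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def select_for_review(doc_confidence: Dict[str, float], budget: int) -> List[str]:
    """Uncertainty sampling: send the least confident documents to reviewers first."""
    return sorted(doc_confidence, key=lambda doc_id: doc_confidence[doc_id])[:budget]


review_queue = select_for_review({"app-1": 97.2, "app-2": 61.5, "app-3": 78.0}, budget=2)
log_file = Path(tempfile.mkdtemp()) / "corrections.jsonl"
log_correction(log_file, "app-2", "Gross Income", "$4,580.00", "$4,850.00", "v1")
```

Reviewing the least confident documents first tends to surface the examples the model learns most from, so each hour of human review feeds back into accuracy rather than only fixing one document.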

In summary, document intelligence is no longer about replacing humans. It’s about augmenting them with systems that are increasingly context-aware, self-improving, and intelligent by design. The shift from “reading” documents to understanding and reasoning over them is not just a technical evolution – it’s a strategic imperative for businesses seeking automation without compromise.

Applying This in Your Organization

- Map each of your key document types to a six-stage pipeline.

- Identify which cloud and open-source tools align with your accuracy, cost, and compliance needs.

- Set up feedback loops for continuous model improvement.

- Prioritize intelligent routing based on confidence thresholds and business risk.

- Invest early in observability, caching, and orchestration for production-scale deployment.