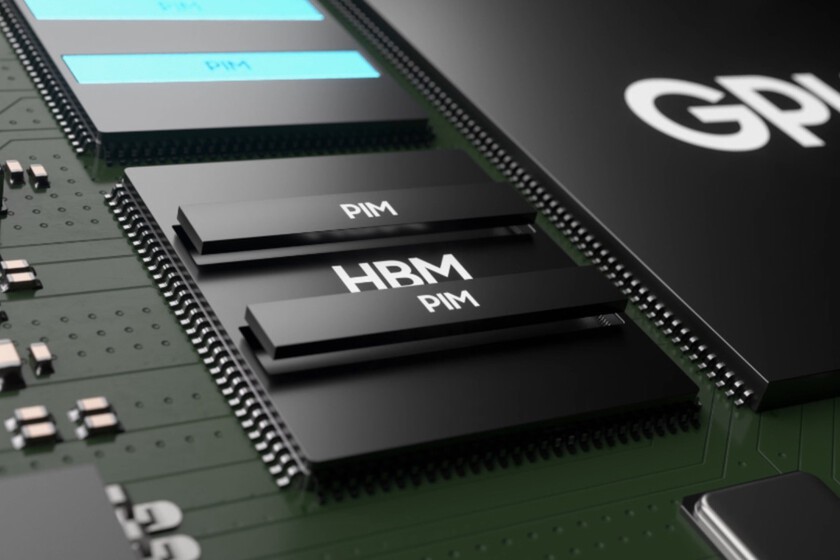

Samsung Electronics will begin manufacturing HBM4 memory in February, the next generation of the high-speed chips that power AI accelerators. Its chips have already passed qualification tests at both NVIDIA and AMD, according to industry sources cited by Reuters and Korea Economic Daily.

The first deliveries will go to NVIDIA for its Vera Rubin platform. NVIDIA CEO Jensen Huang has confirmed that the platform is already in production, with a launch planned for the second half of 2026.

Why it matters. This move is set to change the balance of power in the AI memory market, where SK Hynix has been the dominant supplier to NVIDIA. Demand for HBM chips has skyrocketed with the rise of generative AI, making them one of the most critical bottlenecks in the tech industry.

- For NVIDIA, diversifying supply reduces the risk of relying on a single supplier at a time when demand for its accelerators far exceeds what it can deliver.

- For Samsung, regaining ground means accessing revenues worth tens of billions annually at a critical time: it needs this boost precisely because SK Hynix has eroded part of its business.

Between the lines. Samsung has played a risky card: adopting a sixth-generation 10-nanometer-class (1c) DRAM manufacturing process before anyone else, despite suffering from very low initial yields. It has also integrated a 4-nanometer logic chip, more advanced than the one SK Hynix uses.

The bet is paying off: Samsung’s HBM4 chips reached speeds of 10.7 gigabits per second in NVIDIA tests, surpassing the 10 Gbps threshold. SK Hynix recorded 8.3 Gbps and Micron around 8 Gbps, according to a technical source cited by Korea Herald.

- The change in qualification criteria has favored Samsung. NVIDIA and AMD have tightened speed requirements but relaxed thermal limits because they prioritize raw performance.

- This comes after accelerators like Google’s TPUs have demonstrated performance comparable to that of NVIDIA GPUs.
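To put the reported speeds in context, here is a rough back-of-the-envelope sketch that converts them into peak bandwidth per memory stack. It rests on two assumptions not stated in the article: that the quoted figures are per-pin data rates, and that each HBM4 stack exposes a 2048-bit interface. The function and variable names are illustrative only.

```python
# Assumption (not from the article): each HBM4 stack has a 2048-bit interface,
# and the quoted speeds are per-pin data rates in gigabits per second.
HBM4_BUS_WIDTH_BITS = 2048


def stack_bandwidth_gb_s(pin_speed_gbps, bus_width_bits=HBM4_BUS_WIDTH_BITS):
    """Peak bandwidth of one stack in GB/s: per-pin rate times pin count, over 8 bits per byte."""
    return pin_speed_gbps * bus_width_bits / 8


# Speeds as reported in the article; Micron's figure is approximate ("around 8 Gbps").
reported_pin_speeds_gbps = {"Samsung": 10.7, "SK Hynix": 8.3, "Micron": 8.0}

for vendor, speed in reported_pin_speeds_gbps.items():
    terabytes_per_second = stack_bandwidth_gb_s(speed) / 1000
    print(f"{vendor}: roughly {terabytes_per_second:.2f} TB/s per stack")
```

Under those assumptions, the gap between 10.7 Gbps and 8.3 Gbps works out to several hundred gigabytes per second per stack, which multiplies across the many stacks fitted to a single accelerator.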

Yes, but. SK Hynix is already working to avoid losing ground: it has redesigned its HBM4 chips and is awaiting the results of new qualification tests that it hopes will give it an advantage. In February, it will begin feeding wafers into its new M15X plant in Cheongju to produce HBM chips.

In addition, SK Hynix finalized all of its 2026 HBM supply agreements with its largest customers in October. Samsung arrives later, trying to grab whatever share it can, helped by the fact that all manufacturers are operating at the limit of their capacity.

The geopolitical background. South Korea accounts for more than 70% of the world’s production of HBM chips, a component so critical for AI that it determines who can train the most advanced models and who cannot.

China, despite its enormous investments in semiconductors, is still a few years behind in this technology due to Western restrictions on advanced lithography equipment. South Korean dominance in HBM is one of the most valuable advantages the Western technology bloc maintains.

What's next. Both companies are due to publish their quarterly results this Thursday, and they can be expected to take the opportunity to provide more details about HBM4 orders and real demand. Those numbers will say a lot about who is winning this battle for the most valuable component of the AI era.


Featured image | Samsung