How organizations can protect sensitive datasets while balancing business goals, legal precedent, and user experience

The Growing Scraping Challenge

The web-scraping industry is no longer niche. Valued at USD 1.03 billion in 2025, it is projected to nearly double by 2030. Scraping now underpins competitive intelligence, price tracking, and even AI model training, while also enabling the wholesale extraction of data that content owners paid to create. Traditional defenses such as rate limiting, CAPTCHAs, and IP bans are brittle against modern toolkits that combine rotating proxies, headless browsers, AI-driven fingerprint evasion, and adaptive retry logic.

Meanwhile, litigation may not provide a safety net. The hiQ v. LinkedIn (Fenwick & West) decision confirmed that scraping publicly available data cannot always be curtailed under the Computer Fraud and Abuse Act (CFAA), which leaves purely legal strategies insufficient. A more resilient path is a layered defense: one that balances data protection against SEO visibility, preserves the user experience, and blocks bots without adding friction for legitimate users.

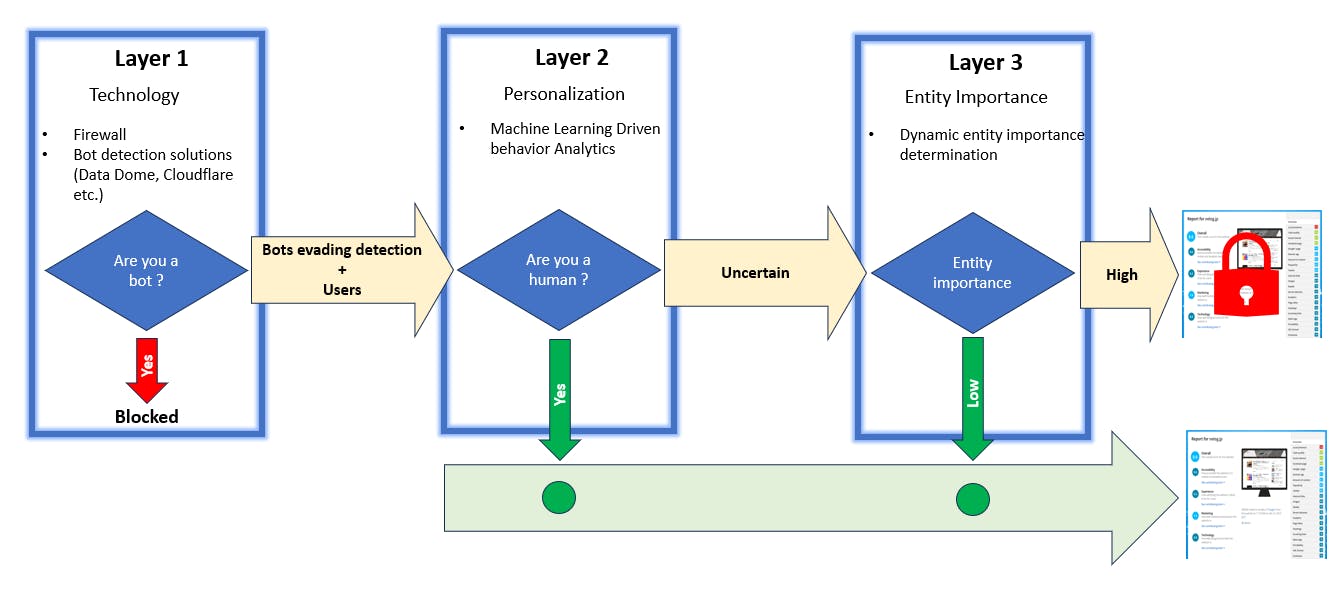

A Three-Layer Model for Data Protection

Before designing such a defense, it is important to recognize the broader context: the scraping industry is still maturing and raises unresolved ethical questions. Enterprises invest heavily to acquire, clean, and structure valuable datasets, and those investments can be quickly undermined if a scraping company simply extracts and resells the same information without incurring the costs. Proponents often highlight potential benefits, such as using public data to train large language models. Yet even this application is being tested in the courts, with The New York Times v. OpenAI (Nelson Mullins) underscoring how contested scraping practices are.

At the same time, industry research points to the strategic importance of public web data, with organizations treating it as a core input for AI, finance, and e-commerce (Mordor Intelligence).

Still, the legal and ethical foundation of widespread scraping is unsettled. Attempts to formalize the practice continue, but whether it should be normalized at all is an open question. A layered defense acknowledges this tension. It is designed not only to stop technical intrusions but to tilt the balance, amplifying the disadvantages scrapers already face while preserving fair and secure access for legitimate users.

Layer 1: Perimeter Protections

The first layer functions as the outer moat. Web Application Firewalls (WAFs) and commercial bot-mitigation tools (e.g., DataDome, Cloudflare) filter unsophisticated traffic using methods such as rate limiting, CAPTCHAs, and IP reputation. This layer removes the “noise” of opportunistic scrapers, keeping the majority of automated traffic out so that internal resources can focus on higher-value threats.
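To make the rate-limiting idea concrete, here is a minimal token-bucket sketch, one common way to cap per-client request rates. It assumes clients are keyed by IP address and that capacity and refill rate are operator-chosen values; `RateLimiter` and its parameters are hypothetical and do not describe how DataDome, Cloudflare, or any other vendor implements rate limiting internally.

```python
import time
from typing import Callable, Dict, Tuple


class RateLimiter:
    """Hypothetical per-IP token bucket: each request spends one token; tokens refill over time."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # maximum burst a client may send
        self.refill_per_sec = refill_per_sec  # sustained request rate allowed
        self.clock = clock                    # injectable so the sketch is testable
        self.buckets: Dict[str, Tuple[float, float]] = {}  # ip -> (tokens, last_seen)

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(client_ip, (self.capacity, now))
        # Refill in proportion to elapsed time, never exceeding capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        if tokens >= 1:
            self.buckets[client_ip] = (tokens - 1, now)
            return True
        self.buckets[client_ip] = (tokens, now)  # over the limit: block or escalate
        return False


# Usage with a fake clock so the behavior is deterministic.
fake_now = [0.0]
limiter = RateLimiter(capacity=3, refill_per_sec=1.0, clock=lambda: fake_now[0])
burst = [limiter.allow("203.0.113.7") for _ in range(4)]  # [True, True, True, False]
fake_now[0] = 1.0
after_refill = limiter.allow("203.0.113.7")              # True: one token has refilled
```

Keying on a single IP is exactly what rotating proxies defeat, which is why this layer only filters opportunistic traffic and the later layers still matter.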

Yet determined bots remain: those with a clear mission to extract data and an identified revenue opportunity in selling it. To address the traffic that evades this first line, organizations often turn to gating measures such as paywalls or login screens. While effective at restricting access, both approaches significantly increase user friction, which can undermine the traffic and engagement a business needs to remain sustainable. Paywalls and strict gating are not standard practice in many sectors, so a single player that adopts them risks eroding its competitive position. This tension sets the stage for the second layer of defense.

Layer 2: Content Prioritization

Not all data warrants the same protection. Crown-jewel datasets, where the cost of acquisition, risk of theft, or strategic value is highest, should be gated, while commoditized data remains open. Customer data platforms (CDPs) then determine who encounters that friction, scoring users by device, behavior, and intent. This sequencing ensures that only critical data is gated, and only for a small subset of users, which reduces friction, limits bounce risk, and protects SEO visibility. Organizations can thus protect their most valuable content while continuing to offer broad access to lower-value information, without undermining their own competitiveness by overprotecting assets that are easily replaceable.

By gating only the most critical content, businesses reduce both customer friction and competitive risk.
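Crown-jewel classification can start as a simple, reviewable rule before any tooling is involved. The sketch below assumes each dataset is scored 1 to 5 on the three criteria named above (acquisition cost, theft risk, strategic value) and treats a dataset as a crown jewel if any single score reaches a threshold; the scale, the threshold of 4, and the dataset names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    CROWN_JEWEL = "gated"   # eligible for risk-based gating
    COMMODITY = "open"      # stays openly accessible and indexable


@dataclass(frozen=True)
class Dataset:
    name: str
    acquisition_cost: int   # 1 (cheap to rebuild) .. 5 (expensive to acquire and clean)
    theft_risk: int         # 1 (rarely targeted) .. 5 (actively scraped)
    strategic_value: int    # 1 (easily replaceable) .. 5 (core differentiator)


def classify(ds: Dataset, threshold: int = 4) -> Tier:
    # Crown jewel if ANY criterion is high, mirroring
    # "cost of acquisition, risk of theft, or strategic value".
    if max(ds.acquisition_cost, ds.theft_risk, ds.strategic_value) >= threshold:
        return Tier.CROWN_JEWEL
    return Tier.COMMODITY


catalog = [
    Dataset("proprietary_pricing_history", 5, 4, 5),
    Dataset("public_store_locations", 1, 2, 1),
    Dataset("curated_product_reviews", 3, 4, 3),
]
tiers = {ds.name: classify(ds).value for ds in catalog}
print(tiers)
# {'proprietary_pricing_history': 'gated', 'public_store_locations': 'open', 'curated_product_reviews': 'gated'}
```

Using “any criterion is high” rather than an average follows the “or” in the text; an organization that wants fewer gated assets could require two high scores instead.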

Layer 3: Behavioral and Contextual Trust

Once priorities are set, organizations can use customer data platforms (CDPs) to determine who actually encounters the gate. By enriching identity and device signals, such as fingerprints, login history, and behavioral baselines, CDPs help score users and separate potential customers from anonymous or suspicious traffic. Machine learning further refines this process, distinguishing visitors likely to convert (by purchasing a product or subscribing to a service) from those who are simply browsing.
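The scoring step can be pictured as a logistic model over a handful of session signals. This is a minimal sketch under stated assumptions: the signal names, the hand-set weights, and the bias are hypothetical placeholders for what a CDP or machine-learning model would learn from labeled traffic, and the output is a 0 to 1 risk estimate rather than a calibrated probability.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionSignals:
    known_device: bool         # fingerprint previously tied to a real customer
    has_login_history: bool    # account with past authenticated sessions
    pages_per_minute: float    # current browsing velocity
    baseline_ppm: float        # behavioral baseline for comparable users
    automation_hints: int      # count of headless-browser indicators observed


# Hypothetical weights: negative values lower risk, positive values raise it.
WEIGHTS = {"known_device": -1.5, "has_login_history": -2.0,
           "velocity_ratio": 0.8, "automation_hints": 1.2}
BIAS = -1.0


def risk_score(s: SessionSignals) -> float:
    """Combine signals linearly, then squash to 0..1 with the logistic function."""
    velocity_ratio = s.pages_per_minute / max(s.baseline_ppm, 1e-6)
    z = (BIAS
         + WEIGHTS["known_device"] * s.known_device
         + WEIGHTS["has_login_history"] * s.has_login_history
         + WEIGHTS["velocity_ratio"] * velocity_ratio
         + WEIGHTS["automation_hints"] * s.automation_hints)
    return 1.0 / (1.0 + math.exp(-z))


returning_customer = SessionSignals(True, True, 2.0, 2.0, 0)
anonymous_crawler = SessionSignals(False, False, 60.0, 2.0, 3)
print(round(risk_score(returning_customer), 3))  # low, about 0.024
print(round(risk_score(anonymous_crawler), 3))   # close to 1.0
```

In practice the weights would be fitted (for example, by logistic regression on sessions later confirmed as customers or bots) rather than set by hand.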

For consumers with high conversion potential, the experience should remain seamless, without unnecessary gating or paywalls. There is precedent for this nuanced approach: The New York Times adopted a “leaky paywall,” which lets readers view some content for free, striking a balance between protection and accessibility. That model can go further. The next step is to use CDPs not only for conversion analytics but also to tailor user experiences dynamically, so that friction is reserved for high-value content and high-risk users while legitimate users retain frictionless access.
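Combining the content tier with a risk score yields a simple gating policy in the spirit of a leaky paywall. In the sketch below, the thresholds, the free-view allowance, and the `Action` names are hypothetical; the point is the ordering: commoditized content is never gated, likely customers pass through, a middle band gets a metered allowance, and only high-risk traffic on crown-jewel content is challenged.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    METER = "allow_and_count"    # leaky-paywall style: free view counted against a meter
    GATE = "login_or_paywall"
    CHALLENGE = "challenge"


# Hypothetical policy knobs.
LOW_RISK = 0.2
HIGH_RISK = 0.8
FREE_VIEWS = 5


def decide(is_crown_jewel: bool, risk: float, free_views_used: int) -> Action:
    if not is_crown_jewel:
        return Action.ALLOW        # commoditized data stays open and indexable
    if risk < LOW_RISK:
        return Action.ALLOW        # likely customers see no gate
    if risk >= HIGH_RISK:
        return Action.CHALLENGE    # suspected automation meets friction first
    # Middle band: a metered allowance before any hard gate.
    return Action.METER if free_views_used < FREE_VIEWS else Action.GATE


examples = [
    decide(is_crown_jewel=False, risk=0.95, free_views_used=50),  # Action.ALLOW
    decide(is_crown_jewel=True, risk=0.05, free_views_used=50),   # Action.ALLOW
    decide(is_crown_jewel=True, risk=0.50, free_views_used=2),    # Action.METER
    decide(is_crown_jewel=True, risk=0.50, free_views_used=5),    # Action.GATE
    decide(is_crown_jewel=True, risk=0.90, free_views_used=0),    # Action.CHALLENGE
]
```

Keeping the policy a pure function of its inputs makes it easy to audit and to retune as monitoring data comes in.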

Why This Layered Approach Matters

- Legal alignment: Legal protections against scraping remain limited. The Computer Fraud and Abuse Act offers little recourse when data is publicly accessible, as courts have repeatedly narrowed its reach. A layered defense provides a practical alternative: even if a scraper circumvents one barrier, the remaining layers help compensate for weak legal remedies.

- Business balance: Selective disclosure reduces friction for legitimate users while still protecting the crown-jewel data. This avoids the all-or-nothing trade-off that harms SEO and customer experience.

- Operational resilience: Each layer reinforces the others. Perimeter tools stop the bulk of unsophisticated attacks, content prioritization ensures only high-value data is gated while commoditized data remains accessible, and behavioral trust models dynamically tailor access so that legitimate users experience minimal friction while high-risk traffic encounters safeguards.

Operational Roadmap for Layered Defense

Organizations can phase in this model without a “big bang.” A practical roadmap might include:

- Deploy bot management providers: Start with perimeter defenses delivered through commercial bot-management platforms. These providers combine rate limiting, device fingerprinting, and CAPTCHA challenges to filter out opportunistic scrapers at scale.

- Identify highest-value data: Classify “crown jewel” datasets where the cost of acquisition, risk of theft, or strategic value makes exposure unacceptable, and distinguish them from commoditized data that can remain accessible.

- Use a customer data platform (CDP): Dynamically tailor gating and user experiences so that friction is reserved for high-value data and high-risk users.

- Continuously monitor and refine: Track false positives, CAPTCHA solve rates, bounce rates, and engagement metrics. Feed these insights back into the CDP and gating logic to keep the balance between protection, usability, and business performance calibrated.
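The monitoring step can be reduced to a few ratios computed per time window and fed back into the gating thresholds. This sketch assumes the counts are already available from analytics and bot-management logs; the field names, the 1% false-positive target, and the step size are hypothetical. A rising challenge solve rate deserves a closer look, since it can mean either that real users are being challenged or that scrapers have started solving the challenges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowStats:
    """Counts for one monitoring window (field names are hypothetical)."""
    verified_humans: int             # sessions later confirmed legitimate (e.g., purchased)
    verified_humans_challenged: int  # of those, how many were challenged or gated
    challenges_issued: int
    challenges_solved: int
    gated_sessions: int
    gated_bounces: int               # sessions that left right after hitting a gate


def rates(w: WindowStats) -> dict:
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0
    return {
        "false_positive_rate": ratio(w.verified_humans_challenged, w.verified_humans),
        "solve_rate": ratio(w.challenges_solved, w.challenges_issued),
        "gate_bounce_rate": ratio(w.gated_bounces, w.gated_sessions),
    }


def recalibrate(high_risk: float, fp_rate: float,
                fp_target: float = 0.01, step: float = 0.05) -> float:
    """Adjust the challenge threshold based on how many real users were challenged."""
    if fp_rate > fp_target:
        return min(high_risk + step, 0.99)  # too many real users challenged: loosen
    if fp_rate < fp_target / 2:
        return max(high_risk - step, 0.5)   # comfortably under target: tighten
    return high_risk


window = WindowStats(verified_humans=1000, verified_humans_challenged=30,
                     challenges_issued=200, challenges_solved=40,
                     gated_sessions=500, gated_bounces=150)
metrics = rates(window)
# {'false_positive_rate': 0.03, 'solve_rate': 0.2, 'gate_bounce_rate': 0.3}
new_threshold = recalibrate(0.8, metrics["false_positive_rate"])  # about 0.85
```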

Challenges and Trade-Offs

This layered defense model is not without hurdles. False positives can frustrate genuine users. Sophisticated scrapers may still mimic human behavior convincingly. Legal frameworks continue to evolve, creating uncertainty around enforcement. Yet, a purely perimeter-based defense leaves organizations exposed. Risk-based gating offers a more nuanced path: strong enough to deter large-scale scraping, yet flexible enough to preserve legitimate business flows.

Looking Ahead

As scraping techniques grow more advanced, the combination of legal precedent, AI-driven anomaly detection, and risk-tiered gating will shape the next generation of data protection. Organizations that act now will be better positioned to safeguard sensitive data assets without undermining customer experience or search visibility.