If you’ve ever had to dig through a 500-page PDF manual to find a single error code, you know the pain.

In the enterprise world, knowledge is often trapped in static documents like user manuals, lengthy E-books, or legacy incident logs. The “Ctrl+F” approach doesn’t cut it anymore. We need systems that don’t just search for keywords but actually converse with the user to solve the problem.

Most tutorials show you how to build a simple FAQ bot. That’s boring.

In this guide, we are going to build a Multi-Domain “Root Cause Analysis” Bot. We will architect a solution that can distinguish between different context domains (e.g., “Cloud Infrastructure” vs. “On-Prem Servers”) and use Metadata Filters to give precise answers.

Then, we’ll take it a step further and look at how to integrate Azure OpenAI (GPT-4) to handle edge cases that standard QnA databases can’t touch.

Let’s build.

The Stack

We aren’t reinventing the wheel. We are composing a solution using Azure’s industrial-grade AI services:

- Azure Language Studio: For the “Custom Question Answering” (CQA) engine.

- Microsoft Bot Framework: To orchestrate the conversation flow.

- Azure OpenAI (GPT-4): For generative responses and fine-tuning on specific incident data.

Phase 1: The Architecture

Before we write code, let’s understand the data flow. We aren’t just throwing all our data into one bucket. We are building a system that intelligently routes queries based on Intent and Metadata.

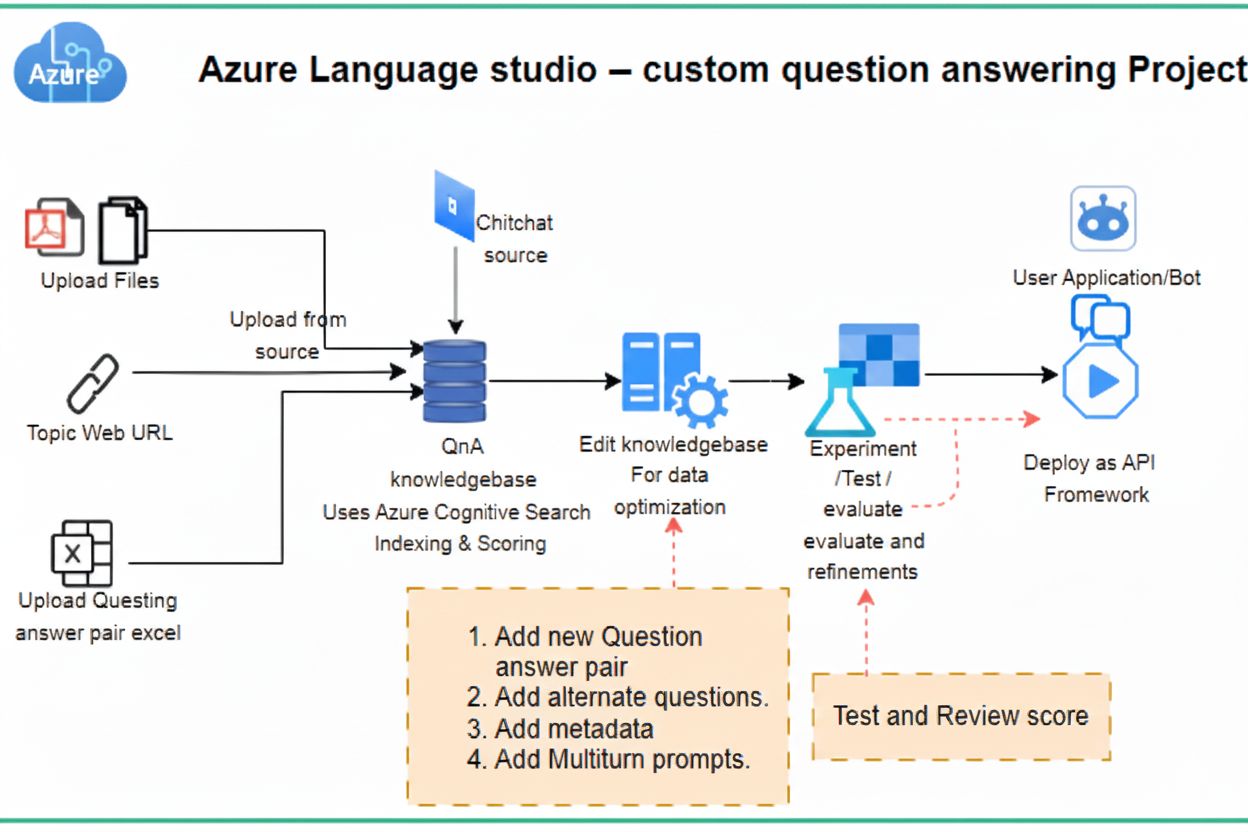

Representative Technical Architecture Diagram Using Azure Services:

The Core Challenge: If a user asks “Why is the system slow?”, the answer depends entirely on the context. Is it the Payroll System or the Manufacturing Robot? To solve this, we use Metadata Tagging.
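To make the routing idea concrete, here is a minimal sketch of the decision the bot has to make before it queries anything. The domain names, the keyword lists, and the `detectDomain` and `routeQuery` helpers are all hypothetical, and simple keyword matching stands in for real intent detection.

```javascript
// Hypothetical domains and keywords, chosen only for illustration.
const DOMAIN_KEYWORDS = {
  payroll: ["payroll", "salary", "payslip"],
  manufacturing: ["robot", "assembly", "conveyor"],
};

// Return the first domain whose keywords appear in the utterance, or null.
function detectDomain(utterance) {
  const text = utterance.toLowerCase();
  for (const [domain, keywords] of Object.entries(DOMAIN_KEYWORDS)) {
    if (keywords.some((word) => text.includes(word))) {
      return domain;
    }
  }
  return null;
}

// Decide the next step: query the knowledge base with a domain filter,
// or ask the user which system they mean.
function routeQuery(utterance) {
  const domain = detectDomain(utterance);
  if (domain === null) {
    return { action: "ask_domain", question: utterance };
  }
  return {
    action: "query_kb",
    question: utterance,
    metadata: [{ key: "domain", value: domain }],
  };
}

console.log(routeQuery("Why is the payroll system slow?"));
console.log(routeQuery("Why is the system slow?"));
```

The second call has no domain to go on, so the sketch falls back to asking the user instead of guessing.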

Phase 2: Building the Knowledge Base

The traditional way to build a bot is to type Q&A pairs manually. The smart way is ingestion.

1. Ingestion

Go to Azure Language Studio and create a “Custom Question Answering” project. You have three powerful ingestion methods:

- URLs: Point it to a public FAQ page.

- Unstructured PDFs: Upload that 500-page user manual. Azure’s NLP extracts the logic automatically.

- Chitchat: Enable this to handle “Hello”, “Thanks”, and “Who are you?” without writing custom logic.

2. The Secret Sauce: Metadata Tagging

This is where most developers fail. They create a flat database. You need to structure your data with tags.

In your Azure project, when you edit your QnA pairs, assign Key:Value pairs to them.

Example Structure:

| Question | Answer | Metadata Key | Metadata Value |
|---|---|---|---|
| What is the price? | $1200 | Product | LaptopPro |
| What is the price? | $800 | Product | LaptopAir |

Why this matters: Without metadata, the bot sees “What is the price?” twice and cannot tell which answer applies. With metadata, the bot filters the candidates down to the pair for the product in question.
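The effect of the tags is easy to see in a small in-memory sketch. This is not how the service stores or matches data internally; the `qnaPairs` array and the `findAnswers` helper are hypothetical and only mirror the example table.

```javascript
// In-memory copy of the example table; the real pairs live in the Azure project.
const qnaPairs = [
  { question: "What is the price?", answer: "$1200", metadata: { Product: "LaptopPro" } },
  { question: "What is the price?", answer: "$800", metadata: { Product: "LaptopAir" } },
];

// Keep the pairs whose question matches and whose metadata contains
// every key/value in the filter (an AND over all filter entries).
function findAnswers(question, filter) {
  return qnaPairs.filter(
    (pair) =>
      pair.question === question &&
      Object.entries(filter).every(([key, value]) => pair.metadata[key] === value)
  );
}

// No filter: both pairs match, so the question is ambiguous.
console.log(findAnswers("What is the price?", {}).length); // 2

// With a filter: exactly one pair is left.
console.log(findAnswers("What is the price?", { Product: "LaptopAir" })[0].answer); // "$800"
```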

Phase 3: The Bot Client Logic (The Code)

Now, let’s look at how the Bot Client communicates with the Knowledge Base. We don’t just send the question; we send the context.

The JSON Request Payload

When your Bot Client (written in C# or Node.js) detects that the user is asking about “Product 1”, it injects that context into the API call.

Here is the exact JSON structure your bot sends to the Azure Prediction API:

    {
      "question": "What is the price?",
      "top": 3,
      "answerSpanRequest": {
        "enable": true,
        "confidenceScoreThreshold": 0.3,
        "topAnswersWithSpan": 1
      },
      "filters": {
        "metadataFilter": {
          "metadata": [
            {
              "key": "product",
              "value": "product1"
            }
          ]
        }
      }
    }
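For the Node.js side, sending that payload could look roughly like the sketch below. It assumes the Custom Question Answering REST route `/language/:query-knowledgebases` with `api-version=2021-10-01`, so check both against the current Azure documentation. The `buildCqaRequest` and `askKnowledgeBase` helpers and every config value are placeholders, and `answerSpanRequest` is left out to keep the example short.

```javascript
// Build the URL and fetch options for a Custom Question Answering query.
// endpoint, projectName, deploymentName, and apiKey are placeholders you supply.
function buildCqaRequest({ endpoint, projectName, deploymentName, apiKey }, question, metadata) {
  const params = new URLSearchParams({
    projectName,
    deploymentName,
    "api-version": "2021-10-01", // assumed API version
  });
  return {
    url: `${endpoint}/language/:query-knowledgebases?${params}`,
    options: {
      method: "POST",
      headers: {
        "Ocp-Apim-Subscription-Key": apiKey,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        question,
        top: 3,
        filters: { metadataFilter: { metadata } },
      }),
    },
  };
}

// Send the request using the global fetch available in Node.js 18+.
async function askKnowledgeBase(config, question, metadata) {
  const { url, options } = buildCqaRequest(config, question, metadata);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`Query failed with HTTP ${response.status}`);
  }
  const result = await response.json();
  return result.answers; // assumed response shape: a list of scored answers
}
```

Keeping the request builder separate from the network call lets you unit-test the payload without touching Azure.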

Implicit vs. Explicit Context

How does the bot know to inject product1?

- Explicit: The bot asks the user via a button click: “Which product do you need help with?”

- Implicit (The “Pro” way): Use Named Entity Recognition (NER).

  - User: “My MacBook is overheating.”

  - NER: Extracts Entity MacBook.

  - Bot Logic: Stores context = MacBook and applies it as a metadata filter for all subsequent queries.
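The implicit flow can be sketched as a small piece of per-conversation state. The `extractProduct` function below is only a stand-in for a real NER call (it matches against a hard-coded, hypothetical product list), and `ConversationContext` is an illustrative name, not a Bot Framework class.

```javascript
// Stand-in for an NER call: a lookup of known product names.
// A real bot would call an entity-recognition service here instead.
const KNOWN_PRODUCTS = ["macbook", "laptoppro", "laptopair"];

function extractProduct(utterance) {
  const text = utterance.toLowerCase();
  return KNOWN_PRODUCTS.find((name) => text.includes(name)) ?? null;
}

// Per-conversation state: remembers the last product the user mentioned.
class ConversationContext {
  constructor() {
    this.product = null;
  }

  // Update the stored product if this turn names one, then return the
  // metadata filter to send with the query (empty if nothing is known yet).
  filtersFor(utterance) {
    const found = extractProduct(utterance);
    if (found !== null) {
      this.product = found;
    }
    return this.product === null ? [] : [{ key: "product", value: this.product }];
  }
}

const context = new ConversationContext();
console.log(context.filtersFor("My MacBook is overheating."));
console.log(context.filtersFor("How do I reset it?"));
```

Both calls return the same filter: the second utterance names no product, so the stored context carries over.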

Phase 4: Going Next-Gen with Azure OpenAI (GPT-4)

Standard QnA is great for static facts. But for Root Cause Analysis, where the answer isn’t always clear-cut, we turn to Generative AI.

We can use GPT-4 (gpt-4-turbo or newer) to determine root causes based on historical incident logs.

The Strategy: Fine-Tuning

You cannot just pass a massive database into a standard prompt due to token limits. The solution is Fine-Tuning. We train a custom model on your specific incident history.

Preparing the Training Data (JSONL)

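To fine-tune a model, you must convert your incident logs into JSONL format, with one JSON object per line. The lines below use the prompt/completion layout of completions-style fine-tuning; chat models such as GPT-4 are instead fine-tuned on a `messages` array per line, so check which layout your base model expects:

    {"prompt": "Problem Description: SQL DB latency high. Domain: DB. \n\n###\n\n", "completion": "Root Cause: Missing indexes on high-volume tables."}
    {"prompt": "Problem Description: VPN not connecting. Domain: Network. \n\n###\n\n", "completion": "Root Cause: Firewall rule blocking port 443."}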
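You rarely write these lines by hand. Here is a rough sketch of a converter, assuming your incident log can be loaded as an array of objects. The field names (`description`, `domain`, `rootCause`), the system message, and the helper names are all hypothetical. It produces both the prompt/completion layout used above and a chat-style layout with a `messages` array, which is what chat models expect for fine-tuning.

```javascript
// Hypothetical incident records; the field names are assumptions.
const incidents = [
  { description: "SQL DB latency high.", domain: "DB", rootCause: "Missing indexes on high-volume tables." },
  { description: "VPN not connecting.", domain: "Network", rootCause: "Firewall rule blocking port 443." },
];

// Separator that marks the end of the prompt.
const SEPARATOR = "\n\n###\n\n";

// One prompt/completion object per incident.
function toPromptCompletion(incident) {
  return {
    prompt: `Problem Description: ${incident.description} Domain: ${incident.domain}. ${SEPARATOR}`,
    completion: `Root Cause: ${incident.rootCause}`,
  };
}

// The same incident as a chat-style example with a messages array.
function toChatExample(incident) {
  return {
    messages: [
      { role: "system", content: "You identify the root cause of IT incidents." },
      { role: "user", content: `Problem Description: ${incident.description} Domain: ${incident.domain}.` },
      { role: "assistant", content: `Root Cause: ${incident.rootCause}` },
    ],
  };
}

// JSONL is one JSON document per line, with no enclosing array.
function toJsonl(records, convert) {
  return records.map((record) => JSON.stringify(convert(record))).join("\n");
}

console.log(toJsonl(incidents, toPromptCompletion));
console.log(toJsonl(incidents, toChatExample));
```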

Deploying the Fine-Tuned Model

Once you upload this .jsonl file to Azure OpenAI Studio, the training job runs. When it completes, you deploy the resulting custom model and call it by its deployment name.

Now, your API request changes from a standard QnA lookup to a completion prompt:

    // Sketch of calling the fine-tuned model, assuming `openai` is an already
    // configured client from the openai Node SDK (v4 or later).
    const response = await openai.completions.create({
      model: "my-custom-root-cause-model", // name of your fine-tuned deployment
      prompt: "Problem Description: Application crashing after update. Domain: App Server. \n\n###\n\n",
      max_tokens: 50,
    });

    console.log(response.choices[0].text);
    // Example output: "Root Cause: Incompatible Java version detected."

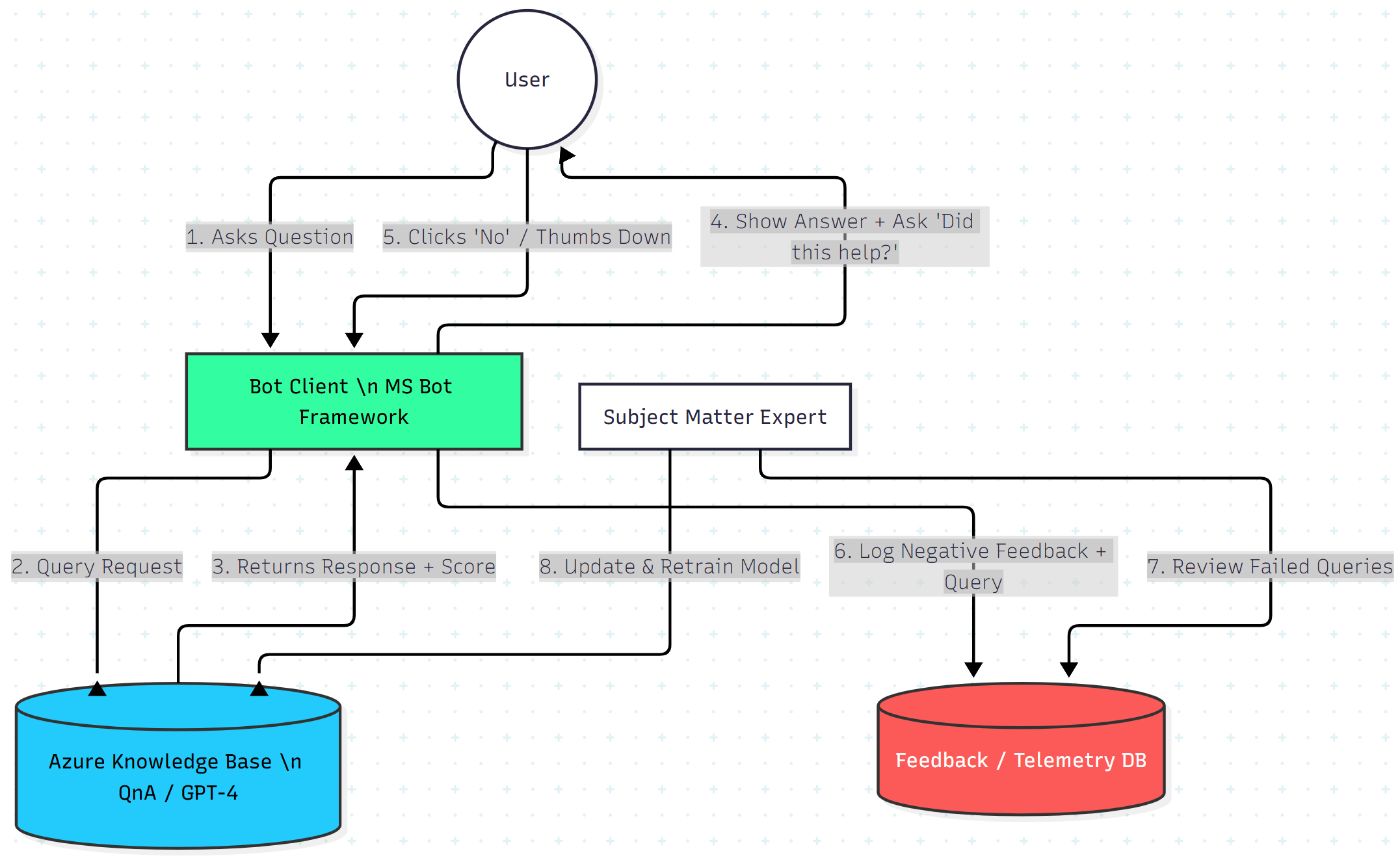

Phase 5: Closing the Loop (User Feedback)

A bot that doesn’t learn is a bad bot. We need a feedback loop.

In your Microsoft Bot Framework logic, implement a Waterfall Dialog:

- Bot provides Answer A (Score: 90%) and Answer B (Score: 85%).

- Bot asks: “Did this help?”

- If User clicks “Yes”: Store the Question ID + Answer ID + Positive Vote in your database.

- If User clicks “No”: Flag this interaction for human review to update the Knowledge Base.
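The storage side of that loop can be sketched without any framework code. The arrays below are in-memory stand-ins for a database table and a review queue, and `recordFeedback` and `positiveVotesByAnswer` are hypothetical helpers you would call from the final step of your dialog.

```javascript
// In-memory stand-ins for a database table and a human review queue.
const votes = [];
const reviewQueue = [];

// Record the user's reply to "Did this help?" for one question/answer pair.
function recordFeedback({ questionId, answerId, helpful }) {
  if (helpful) {
    votes.push({ questionId, answerId, vote: 1, at: new Date().toISOString() });
  } else {
    reviewQueue.push({ questionId, answerId, status: "needs_review" });
  }
}

// Count positive votes per answer, e.g. as input to a later re-ranking step.
function positiveVotesByAnswer() {
  const counts = {};
  for (const { answerId } of votes) {
    counts[answerId] = (counts[answerId] ?? 0) + 1;
  }
  return counts;
}

recordFeedback({ questionId: "q1", answerId: "a1", helpful: true });
recordFeedback({ questionId: "q1", answerId: "a2", helpful: false });
console.log(positiveVotesByAnswer()); // { a1: 1 }
console.log(reviewQueue.length); // 1
```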

Architecture for Continuous Improvement Using Human-in-the-loop:

In a real implementation, this feedback data feeds back into Azure Cognitive Search re-ranking profiles to allow continuous improvement.

Conclusion

We have moved beyond simple “If/Else” chatbots. By combining Azure Custom Question Answering with metadata filtering, we can return the right answer for each domain. By layering GPT-4 fine-tuning on top, we added the ability to predict root causes from unstructured descriptions.