Authors:

(1) Krist Shingjergji, Educational Sciences, Open University of the Netherlands, Heerlen, The Netherlands;

(2) Deniz Iren, Center for Actionable Research, Open University of the Netherlands, Heerlen, The Netherlands;

(3) Felix Bottger, Center for Actionable Research, Open University of the Netherlands, Heerlen, The Netherlands;

(4) Corrie Urlings, Educational Sciences, Open University of the Netherlands, Heerlen, The Netherlands;

(5) Roland Klemke, Educational Sciences, Open University of the Netherlands, Heerlen, The Netherlands.

Editor’s note: This is Part 3 of 6 of a study detailing the development of a gamified method of acquiring annotated facial emotion data. Read the rest below.

III. GAMIFIED DATA COLLECTION AND INTERPRETABLE FER

In this section, we present our solution that addresses the challenges of labeled data collection for FER model training and devising human-friendly explanations for emotion recognition systems. Specifically, we elaborate on the gamified data collection approach of Facegame and the underlying interpretable FER method.

A. Facegame

Emotional facial expressions emerge rapidly and mostly involuntarily on human faces. Nevertheless, humans are exceptionally good at recognizing even the subtlest cues that appear on the faces of others. Even though humans inherently possess these abilities, it is surprisingly challenging to exercise them deliberately. The motivation of Facegame is to provide the players with a challenging way to exercise the skills of facial expression perception and mimicking.

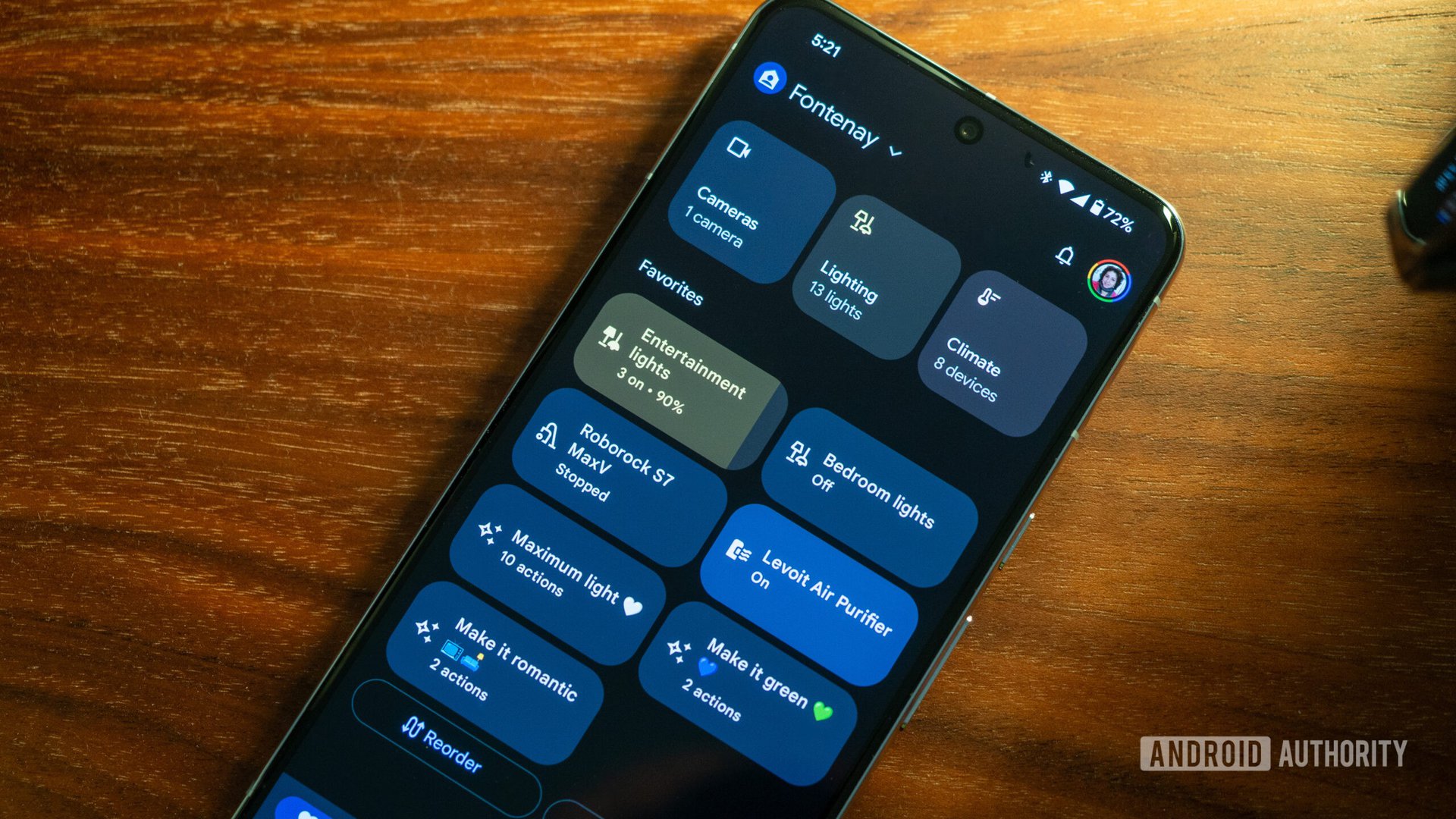

In Facegame, the goal of the players is to mimic the facial expression shown on a target image. The interface of Facegame (Fig. 1) displays two images side by side: (a) a target image from the game’s database, which contains a face exhibiting a certain basic emotion, and (b) the player’s camera feed. The interface thus allows the player to compare both faces and try to imitate the target face.

All target images in the database are labeled by human experts with one of the six basic emotions [13], and automatically with the 20 AUs (Table I) using a pre-trained classifier provided by Py-Feat [40]. The classifier takes two vectors as input: the facial landmarks, a (68 × 2) vector of landmark locations computed with the dlib package [41], and the histograms of oriented gradients (HOGs), a (5408 × 1) feature vector that describes an image as a distribution of gradient orientations [21]. The HOGs are calculated on faces that are aligned using the positions of the two eyes and masked using the positions of the landmarks. The model’s output is a list of the AUs detected on the face image. The AU detection pipeline is shown in Fig. 2.
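The two input sizes quoted above can be checked with a little arithmetic. The sketch below assumes a HOG configuration that the text does not state: a 112 × 112 aligned face crop, 8 × 8-pixel cells, 2 × 2-cell blocks sliding one cell at a time, and 8 orientation bins. Under those assumptions the descriptor length comes out to 5408, which is consistent with the figure above; the function name is illustrative only.

```python
def hog_feature_length(image_size, pixels_per_cell, cells_per_block, orientations):
    """Length of a block-normalized HOG descriptor for a square image."""
    cells_per_side = image_size // pixels_per_cell
    # Blocks slide one cell at a time, so each side holds (cells - block + 1) blocks.
    blocks_per_side = cells_per_side - cells_per_block + 1
    values_per_block = cells_per_block * cells_per_block * orientations
    return blocks_per_side * blocks_per_side * values_per_block


# Assumed settings; none of these values are given in the text.
hog_length = hog_feature_length(
    image_size=112, pixels_per_cell=8, cells_per_block=2, orientations=8
)
landmark_length = 68 * 2  # 68 landmarks, each with an (x, y) coordinate

print(hog_length, landmark_length)
```

With these settings there are 14 cells and 13 blocks per side, and each block contributes 32 values.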

The player is given five seconds to mimic the target expression. Afterward, the player’s image is processed and automatically labeled with AUs. The set of AUs on the target image is known beforehand. The score is then computed as the Jaccard index of the two AU sets, as shown in Equation 1, where P and T denote the AU sets of the player and the target, respectively.

$$\text{score} = J(P, T) = \frac{|P \cap T|}{|P \cup T|} \tag{1}$$

Players can retry imitating the same facial image to increase their scores or move on to another image.
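The scoring rule can be sketched in a few lines. This is a minimal illustration of the Jaccard index on two AU sets, not the game’s actual code; the function name, the AU labels in the example, and the choice to return 0.0 when both sets are empty are assumptions.

```python
def jaccard_score(player_aus, target_aus):
    """Jaccard index of the player and target AU sets (P and T)."""
    player, target = set(player_aus), set(target_aus)
    union = player | target
    if not union:
        # Assumption: with no AUs on either face, the score is defined as 0.
        return 0.0
    return len(player & target) / len(union)


# Hypothetical AU sets for one turn.
target = {"AU6", "AU12", "AU25"}
player = {"AU4", "AU12", "AU25"}

score = jaccard_score(player, target)
print(score)  # 0.5: two shared AUs out of four distinct AUs
```

A perfect imitation gives a score of 1, and a turn that shares no AU with the target gives 0.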

B. Quality of data collected from the game

Every time a player plays the game, new data are generated. The score, as a game element, provides feedback to the player, and players are motivated to improve their scores, thus generating better representations of the target facial emotion. This acts as an inherent quality assurance mechanism. Nevertheless, some players may perform poorly for various reasons. For instance, they might just be exploring and testing the game, their lighting conditions might be sub-optimal, or they may simply not feel motivated to do well in the game. In any case, such turns would yield low scores, and the data originating from players who consistently score low can easily be eliminated, thus reducing noise in the data.
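One way to eliminate data from consistently low-scoring players is to filter on each player’s mean score. The sketch below is an illustration under assumptions: the record layout, the function name, and the 0.3 threshold are hypothetical, and the text does not specify how “consistently low” is measured.

```python
from collections import defaultdict
from statistics import mean


def filter_low_scoring_players(turns, min_mean_score=0.3):
    """Keep only the turns of players whose mean score reaches the threshold."""
    scores_by_player = defaultdict(list)
    for turn in turns:
        scores_by_player[turn["player_id"]].append(turn["score"])

    kept_players = {
        player_id
        for player_id, scores in scores_by_player.items()
        if mean(scores) >= min_mean_score
    }
    return [turn for turn in turns if turn["player_id"] in kept_players]


# Hypothetical game log: p2 scores low on every turn.
turns = [
    {"player_id": "p1", "score": 0.8},
    {"player_id": "p1", "score": 0.6},
    {"player_id": "p2", "score": 0.1},
    {"player_id": "p2", "score": 0.2},
]

kept = filter_low_scoring_players(turns)
print([turn["player_id"] for turn in kept])  # ['p1', 'p1']
```

Filtering per player rather than per turn keeps an occasional weak turn from an otherwise engaged player, while dropping everything from players who never score well.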

The turns that yield a high score are considered good representations of the emotional facial expression on the target image, which is already labeled with one of the six basic emotions. Thus, the player’s image can automatically be annotated with the same label as the target image. The minor differences between the player and target AU sets provide a desirable variance in the distribution of AUs corresponding to a certain emotional facial expression. In this way, the variance in the AU distribution is created naturally by human players instead of being generated automatically by means of simulation, which may improve the in-the-wild performance of FER models trained on these data.
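The automatic annotation step can be illustrated as follows. This is a sketch under assumptions: the 0.7 cut-off for a “high score,” the record fields, and the file names are all hypothetical and do not come from the text.

```python
def annotate_high_scoring_turns(turns, target_labels, min_score=0.7):
    """Give each high-scoring player image the emotion label of its target image."""
    annotated = []
    for turn in turns:
        if turn["score"] >= min_score:
            annotated.append({
                "image": turn["image"],
                "emotion": target_labels[turn["target_id"]],
            })
    return annotated


# Hypothetical expert labels for two target images.
target_labels = {"t1": "happiness", "t2": "anger"}

# Hypothetical turns; only the first one passes the assumed threshold.
turns = [
    {"image": "turn_001.png", "target_id": "t1", "score": 0.75},
    {"image": "turn_002.png", "target_id": "t2", "score": 0.4},
]

annotated = annotate_high_scoring_turns(turns, target_labels)
print(annotated)  # [{'image': 'turn_001.png', 'emotion': 'happiness'}]
```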

C. Interpretable FER Explanation

Even though there is no precise formula for how combinations of AUs translate into emotional expressions, some strong correlations exist. For instance, a happy face generally exhibits a smile, which is characterized by the presence of the “lip corner puller.” We propose using AUs as a means of explaining the outcome of FER models. Specifically, we run AU detection in parallel with FER and translate the identified AUs into natural language descriptions, which constitute human-friendly, interpretable explanations of FER (Fig. 3). The natural language descriptions are generated by a rule-based dictionary approach.

We created the dictionary of AU descriptions based on the definitions in the Facial Action Coding System [13]. The dictionary contains AU combinations categorized by facial muscle type and area of appearance: cheeks, eyebrows, eyelids, lips, chin and nose, mouth, horizontal, oblique, and orbital. Every combination of the AUs that fall into these categories is represented in the dictionary. One example entry from the dictionary is as follows:

Eyebrows, AU4: “brow lowerer”

description: “eyebrows are lowered.”

prescription (+): “lower your eyebrows.”

prescription (-): “do not lower your eyebrows.”
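An entry like this maps naturally onto a nested lookup table. The sketch below stores only the AU4 entry quoted above and looks up descriptions for detected AUs; the key names, the function name, and the single-AU lookup are simplifying assumptions, since the actual dictionary is described as covering AU combinations.

```python
# Only the AU4 entry comes from the text; the key names are hypothetical.
AU_DICTIONARY = {
    "AU4": {
        "area": "eyebrows",
        "name": "brow lowerer",
        "description": "eyebrows are lowered.",
        "prescription_add": "lower your eyebrows.",
        "prescription_remove": "do not lower your eyebrows.",
    },
}


def describe(detected_aus):
    """Return the natural language descriptions of the AUs found in the dictionary."""
    return [
        AU_DICTIONARY[au]["description"]
        for au in sorted(detected_aus)
        if au in AU_DICTIONARY  # AUs without an entry are skipped in this sketch
    ]


explanation = describe({"AU4", "AU99"})
print(explanation)  # ['eyebrows are lowered.']
```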

The prescriptions of what players must do to improve their scores are based on a comparison between the target AU set and the player AU set. The intersection of the two sets (P for the player AUs and T for the target AUs) contains the correctly mimicked AUs, while the differences between them reveal two kinds of mistakes: the set P - T includes the AUs that should be removed from the player’s expression, and the set T - P covers the AUs that are missing from the player’s expression and are needed to mimic the target successfully. The AUs in both sets of mistakes are expressed as natural language prescriptions with opposite polarities, for example, “raise your eyebrows” and “do not raise your eyebrows”.
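The set comparison translates directly into set operations. The following is a minimal sketch rather than the authors’ implementation: the AU4 wording is taken from the example entry, while the AU12 wording, the function name, and the output layout are hypothetical.

```python
# Maps each AU to a (positive, negative) prescription pair.
PRESCRIPTIONS = {
    "AU4": ("lower your eyebrows.", "do not lower your eyebrows."),
    # Hypothetical wording for AU12, the "lip corner puller".
    "AU12": ("pull your lip corners up.", "do not pull your lip corners up."),
}


def prescribe(player_aus, target_aus):
    """Compare the player set P with the target set T and build the feedback."""
    player, target = set(player_aus), set(target_aus)
    return {
        # P intersect T: AUs mimicked correctly.
        "correct": sorted(player & target),
        # T - P: AUs missing from the player's expression.
        "missing": [
            PRESCRIPTIONS[au][0] for au in sorted(target - player) if au in PRESCRIPTIONS
        ],
        # P - T: AUs that should be removed from the player's expression.
        "extra": [
            PRESCRIPTIONS[au][1] for au in sorted(player - target) if au in PRESCRIPTIONS
        ],
    }


feedback = prescribe(player_aus={"AU4", "AU25"}, target_aus={"AU12", "AU25"})
print(feedback)
```

In this example the player matched AU25, is told to add AU12 (“pull your lip corners up.”), and is told to drop AU4 (“do not lower your eyebrows.”).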