How my company stopped coding manually and built a full-stack agent-based development process.

I am the CEO & founder of Easylab AI and Linkeme.ai, both of which are referenced in this article.

It started with a realization that felt uncomfortable at first: despite all the talent on our team, most of what we were building felt… repeatable. Predictable. Boring, even.

We were a consulting shop at first. Talented devs, working on a wide variety of products, frameworks, business logic. But every time we shipped something, I kept noticing that we were spending huge amounts of human brainpower on tasks that weren’t really intellectual challenges. They were familiar, reusable, and frankly — automatable.

That’s when I started experimenting with AI agents.

Not assistants. Not “autocomplete on steroids.” Agents.

What We Mean by “Agents”

For us, an AI agent is a role-specific autonomous or semi-autonomous process, powered by a large language model, that can:

- interpret a goal,

- break it into subtasks,

- reason through constraints,

- call tools (like code generation, test runners, API validators),

- and interact with other agents or humans.

Think of it like a dev team made of LLMs. Each has a job. Each is prompt-engineered to act within guardrails. And each communicates via structured memory or logging.
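To make that definition concrete, here is a minimal sketch of a role-specific agent with a tool allow-list and a decision log. Everything in it is an assumption for illustration: the `Agent` class, the `plan` stub (which splits a goal on semicolons instead of calling an LLM), and the tool names are hypothetical, not our production code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical tool type: plain callables standing in for code generators,
# test runners, API validators, and so on.
Tool = Callable[[str], str]


@dataclass
class Agent:
    role: str
    allowed_tools: Dict[str, Tool]
    log: List[str] = field(default_factory=list)

    def plan(self, goal: str) -> List[str]:
        # Stand-in for the LLM call that would break a goal into subtasks.
        return [part.strip() for part in goal.split(";") if part.strip()]

    def call_tool(self, name: str, payload: str) -> str:
        # Guardrail: refuse any tool outside this role's allow-list.
        if name not in self.allowed_tools:
            self.log.append(f"{self.role}: refused tool {name!r}")
            raise PermissionError(f"{self.role} may not call {name}")
        result = self.allowed_tools[name](payload)
        self.log.append(f"{self.role}: {name}({payload!r}) -> {result!r}")
        return result
```

The point of the sketch is the shape, not the logic: each agent has one role, a bounded set of tools, and a log that another agent or a human can read afterwards.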

We didn’t arrive here overnight. Here’s how we made the switch.

Phase 1: Repetitive Code Recognition

Back when we were building Linkeme.ai (a Luxembourg-based AI tool that automates social media content for SMEs), we hit a wall. We were building our third admin dashboard in under two months. CRUD logic. Data ingestion. Analytics display. Some role-based access control. A few API integrations. Nothing we hadn’t done a dozen times.

And yet, it was eating our time.

So we ran an experiment. We took a module we had to build — a reporting dashboard — and asked Claude 3.5 to generate the full backend based on a natural language spec. Then we asked DeepSeek to generate the test coverage. Then GPT-4 to write the API doc.

The result wasn’t perfect. But it was functional. About 70% of the way there. That was enough to validate the bet: AI could replace dev typing for us.

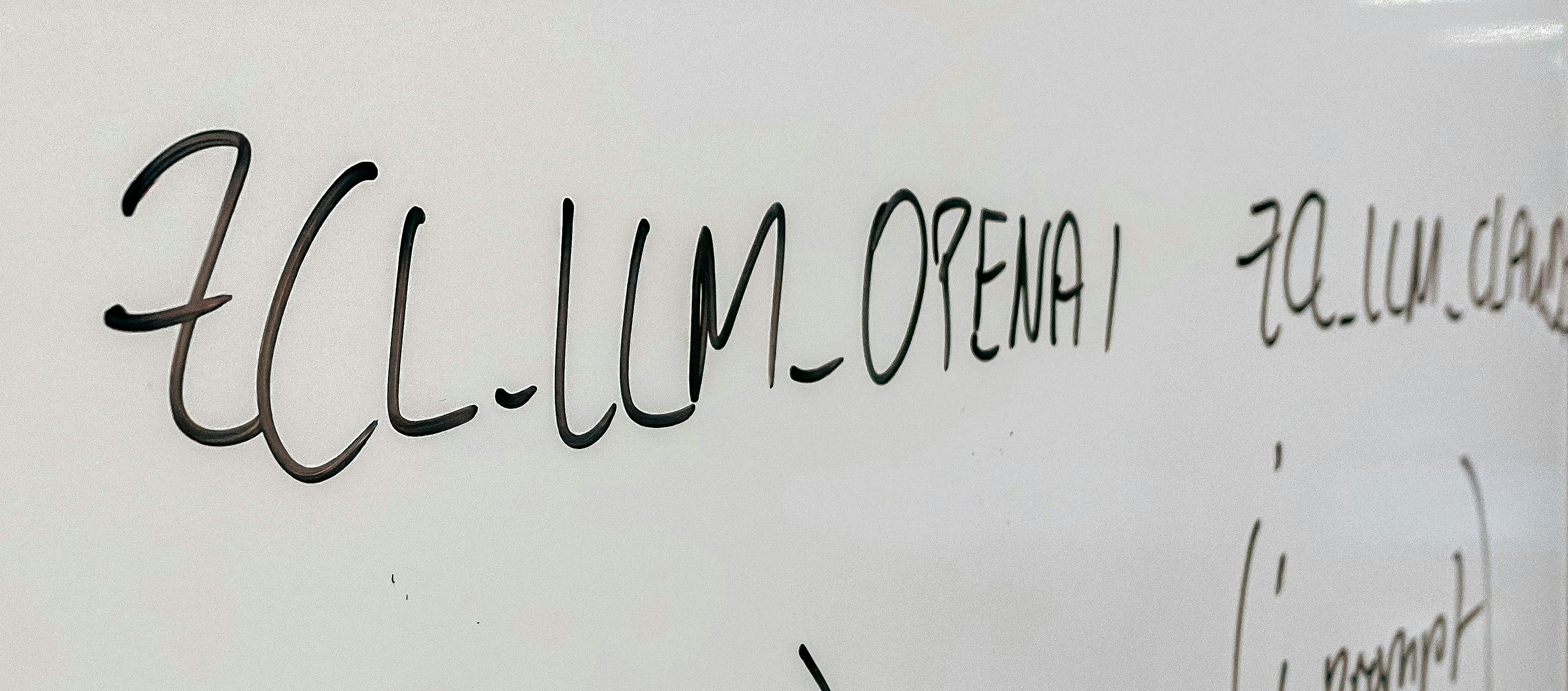

Phase 2: Building Our Agent Stack

We didn’t want a monolithic wrapper around GPT. We wanted modularity. So we built what we now call our “agent spine”: a set of composable agents, each with a clear task and identity.

- The Orchestrator: Takes in the spec and creates subtasks

- Back-end Builder: Handles logic, structure, services

- QA Agent: Runs test coverage, edge case mapping

- Security Scout: Reviews attack surfaces and auth

- API Synth: Generates OpenAPI-compatible docs

- Human Validator: A checkpoint, not a formality
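The roles above can be sketched as a simple sequential pipeline. This is a simplified illustration under stated assumptions: the stage functions are stubs that return placeholder strings instead of calling a model, `run_spine` stands in for the Orchestrator without real subtask planning, and all names are hypothetical.

```python
from typing import Callable, Dict, List

Artifacts = Dict[str, str]
Stage = Callable[[Artifacts], Artifacts]


def backend_builder(a: Artifacts) -> Artifacts:
    # Stand-in for an LLM call that would generate logic and services.
    return {**a, "code": f"# backend for: {a['spec']}"}


def qa_agent(a: Artifacts) -> Artifacts:
    return {**a, "tests": f"# tests covering: {a['code']}"}


def security_scout(a: Artifacts) -> Artifacts:
    return {**a, "security_notes": "no findings (stub)"}


def api_synth(a: Artifacts) -> Artifacts:
    return {**a, "openapi": "openapi: 3.0.0 (stub)"}


SPINE: List[Stage] = [backend_builder, qa_agent, security_scout, api_synth]


def run_spine(spec: str, stages: List[Stage],
              human_approves: Callable[[Artifacts], bool]) -> Artifacts:
    artifacts: Artifacts = {"spec": spec}
    for stage in stages:
        artifacts = stage(artifacts)
    # The human checkpoint can block the whole run; it is not a formality.
    if not human_approves(artifacts):
        raise RuntimeError("human validator rejected the output")
    return artifacts
```

Because each stage only reads and returns a dictionary of artifacts, stages can be reordered, swapped, or retried without touching the others, which is the modularity we were after.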

We also use tools like bolt.new for initial scaffolding, cline inside VS Code for in-line agent triggering, and Claude or DeepSeek depending on the type of task.

These agents all operate through a shared memory layer (we use a lightweight Redis-based scratchpad), and log their decisions. That auditability was key to getting team buy-in.
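A rough sketch of what such a scratchpad with an audit trail might look like follows. To keep it runnable without a server, it uses in-memory Python structures as a stand-in for Redis; the `Scratchpad` class and its method names are hypothetical. In a Redis-backed version, the dictionary would map to string keys and the decision list to a Redis list.

```python
import json
import time
from typing import Any, Dict, List, Optional


class Scratchpad:
    """In-memory stand-in for a Redis-based shared memory layer."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self._decisions: List[str] = []

    def write(self, agent: str, key: str, value: Any, reason: str) -> None:
        # Values are serialized to JSON, as they would be before going to Redis.
        self._store[key] = json.dumps(value)
        # Every write records who made it and why, which gives us the audit trail.
        self._decisions.append(json.dumps(
            {"ts": time.time(), "agent": agent, "key": key, "reason": reason}
        ))

    def read(self, key: str, default: Any = None) -> Any:
        raw = self._store.get(key)
        return default if raw is None else json.loads(raw)

    def audit_trail(self, key: Optional[str] = None) -> List[dict]:
        entries = [json.loads(e) for e in self._decisions]
        return [e for e in entries if key is None or e["key"] == key]
```

Forcing a `reason` on every write is a deliberate choice in this sketch: a reviewer can replay who changed what, and why, without reading prompts.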

To ensure smooth interaction and context awareness between agents and tasks, we introduced what we call MCPs (Model Context Protocols): structured templates that define how context is shared, which data persists between agents, and how fallback logic is handled. MCPs help reduce hallucinations, enforce consistency, and improve multi-step reliability.
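As an illustration of the idea, a context template can be as small as a declaration of which keys are shared, which persist, and what to fall back on when a key is missing. The sketch below is hypothetical: `ContextTemplate`, `hand_off`, and `persist` are names invented for this example, and it does not follow any published protocol specification.

```python
from dataclasses import dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class ContextTemplate:
    shared: Tuple[str, ...]      # keys the next agent is allowed to see
    persistent: Tuple[str, ...]  # keys stored across handoffs
    fallbacks: Dict[str, Any]    # defaults used when a shared key is missing


def hand_off(context: Dict[str, Any], template: ContextTemplate) -> Dict[str, Any]:
    """Build the context passed to the next agent, applying fallbacks."""
    visible: Dict[str, Any] = {}
    for key in template.shared:
        if key in context:
            visible[key] = context[key]
        elif key in template.fallbacks:
            visible[key] = template.fallbacks[key]
        else:
            # Fail loudly instead of letting the model guess a missing value.
            raise KeyError(f"missing required context key: {key}")
    return visible


def persist(context: Dict[str, Any], template: ContextTemplate) -> Dict[str, Any]:
    """Select the subset of context that should be stored between agents."""
    return {k: context[k] for k in template.persistent if k in context}
```

Raising on a missing key instead of passing an empty value is one way to cut down on hallucinated details: the agent never sees a gap it might be tempted to fill.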

Phase 3: Team Transition and Cultural Shift

When I announced we’d stop typing code by hand, I expected pushback. What I got was curiosity.

Dev after dev asked: “Okay… but how do I talk to the agents?”

The shift was surprisingly smooth. Engineers became agent wranglers. They learned to write better specs, craft better prompts, and interpret AI output. Instead of wasting mental energy on syntax, they focused on architecture and integration.

Our junior devs became QA strategists. Our lead backend dev now leads agent prompt design. Our product people got better at writing constraints. We didn’t lay anyone off. We up-leveled the team.

And yes — we still write code when it matters. But never twice.

Architecture Overview

You can see this architecture in action through our internal visualization dashboard, which we plan to open-source soon. We run everything through a private dev orchestration layer, containerized in Docker. Each agent runs in its own service context. We use LLM APIs (Anthropic, OpenAI, DeepSeek), and a small router layer picks the model based on latency, context size, or task type.
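A router like this can be quite small. The following sketch shows one possible selection rule under stated assumptions: the model names, context limits, and latency figures are invented placeholders (not measurements of any real provider), and `pick_model` is a hypothetical function, not our actual router.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ModelProfile:
    name: str
    max_context_tokens: int
    typical_latency_ms: int
    good_at: FrozenSet[str]


# Illustrative placeholder profiles; the numbers are made up for the example.
PROFILES: List[ModelProfile] = [
    ModelProfile("fast-small", 16_000, 400, frozenset({"docs"})),
    ModelProfile("long-context", 200_000, 2_500, frozenset({"backend"})),
    ModelProfile("code-specialist", 64_000, 1_200, frozenset({"backend", "tests"})),
]


def pick_model(profiles: List[ModelProfile], task_type: str, prompt_tokens: int,
               latency_budget_ms: Optional[int] = None) -> ModelProfile:
    # Hard constraints first: the prompt must fit, and latency must be in budget.
    candidates = [p for p in profiles if p.max_context_tokens >= prompt_tokens]
    if latency_budget_ms is not None:
        candidates = [p for p in candidates
                      if p.typical_latency_ms <= latency_budget_ms]
    if not candidates:
        raise ValueError("no model satisfies the context and latency constraints")
    # Soft preference: models suited to the task type win, then the fastest.
    return min(candidates,
               key=lambda p: (task_type not in p.good_at, p.typical_latency_ms))
```

Treating context size and latency as hard filters and task type as a preference means the router always returns something usable when any model fits, and fails explicitly when none does.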

Agents are stateless, but project context is stored and injected per task. We also built a UI dashboard to:

- Watch agent logs

- Reassign tasks

- Retry steps with new prompts

- Flag weird outputs

We use PostHog to monitor agent response quality and to measure how often humans override agent output.
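Human override frequency is simple to compute once each agent output is recorded together with whether a human replaced it. The sketch below assumes a hypothetical event shape of `(agent_name, was_overridden)` pairs and deliberately shows no analytics API calls; how those events are captured and exported is left out.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def override_rate(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return, per agent, the fraction of outputs a human overrode."""
    totals: Counter = Counter()
    overrides: Counter = Counter()
    for agent, was_overridden in events:
        totals[agent] += 1
        if was_overridden:
            overrides[agent] += 1
    return {agent: overrides[agent] / totals[agent] for agent in totals}
```

A rising rate for one agent is a useful early signal that its prompt has drifted or that its task has outgrown its context.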

Results (And Challenges)

We measured several things over three months:

- Time to first usable feature: down 65%

- Internal bugs per release: down 40%

- Number of lines written manually: down 80%

- Time spent arguing about code style: zero

But we also hit challenges. Prompt drift is real. Hallucinations still happen. Agents sometimes forget state if context is too large. And when something goes wrong, debugging multi-agent logic is very different from debugging procedural code.

We’ve had to build internal tools just to track agent behavior. And training the team on prompt architecture took time. But none of that outweighed the speed and focus we gained.

What Comes Next

Easylab AI isn’t a dev agency anymore. We’re an AI orchestration company. We help others do what we did: replace repetitive workflows with structured AI agents that execute, adapt, and learn.

And we keep improving our own system. Next up: memory-based agents that self-improve across projects. Context chaining. Long-term reasoning. And eventually, meta-agents that compose sub-teams.

This isn’t the future. It’s working now. We didn’t replace developers. We made them 10x more valuable.

And yes, I still get messages like:

“Did you really fire your devs for AI?”

My answer? Only the ones who wanted to keep writing the same button logic for the fifth time.