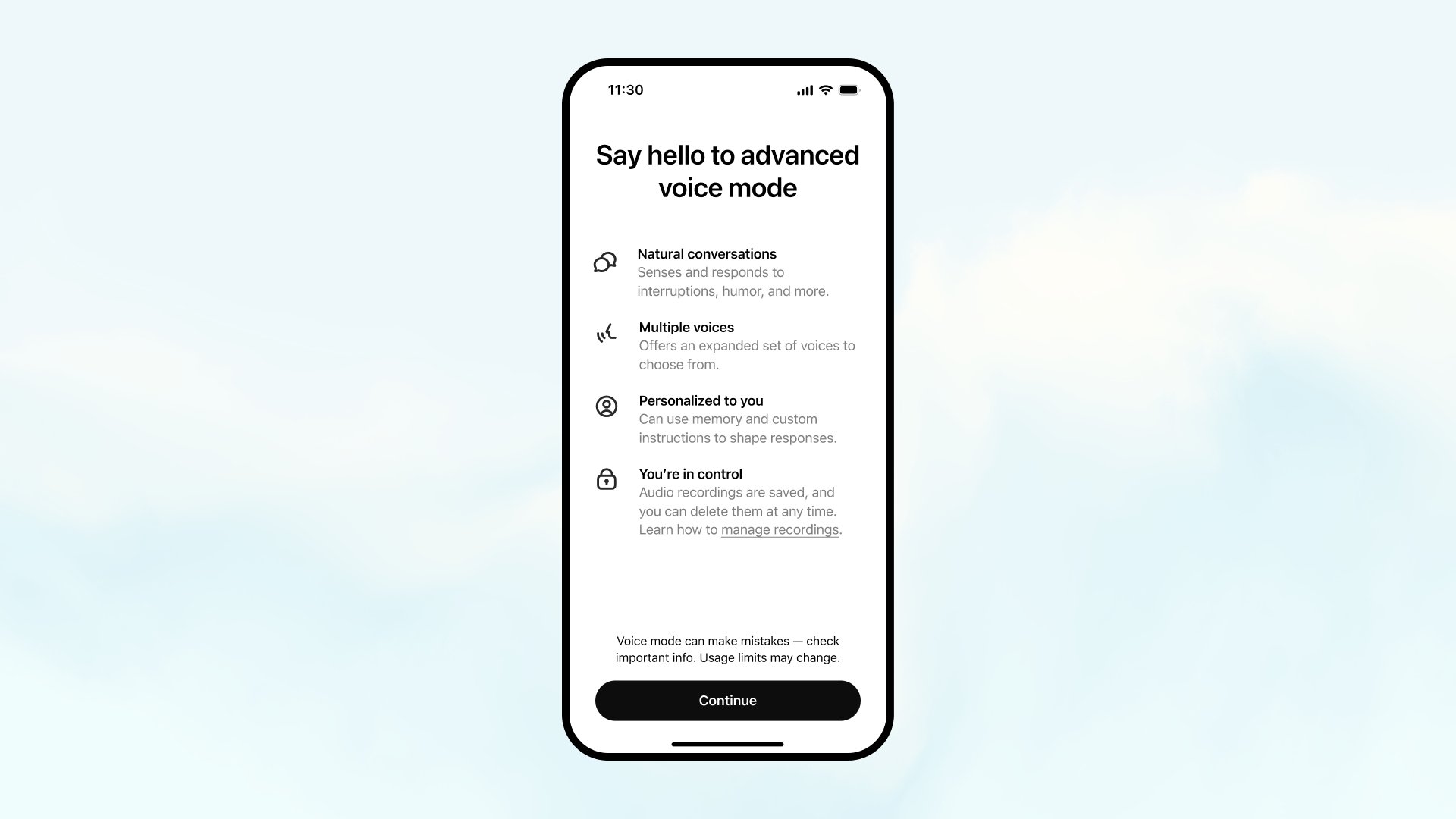

OpenAI recently continued its hot streak with the release of Advanced Voice Mode. It's ChatGPT's most impressive voice mode so far, one that makes the chatbot sound like a human engaged in a normal, real conversation. ChatGPT (GPT-4o) can mimic the tonality of everyday speech, giving the impression that it has feelings, too.

With Advanced Voice Mode enabled, you can interrupt ChatGPT mid-answer to adjust the conversation (the prompt) so the AI can better answer your queries.

Advanced Voice Mode was first available on iPhone and Android, where voice is the most natural way to interact with AI, whether ChatGPT or another chatbot. But after finally being able to test it a few days ago, I think Advanced Voice Mode should be an option everywhere you can access GPT-4o. In the future, AI products like Advanced Voice Mode will change how I use all my computers.

OpenAI apparently agrees, as it just made Advanced Voice Mode available in the Mac and Windows apps. That's the only way to get Advanced Voice Mode on the desktop right now. The web version of ChatGPT still doesn't support it.

First announced in early May, Advanced Voice Mode started rolling out only to ChatGPT Plus users a few weeks ago. The release was limited to the US and non-EU international markets. Despite paying for the Plus subscription, I could not use Advanced Voice Mode until last weekend.

Then, ChatGPT Free users got Advanced Voice Mode in those markets, while paid ChatGPT users in the EU waited.

Now that Advanced Voice Mode is available in Europe, adding the feature to the Mac and the newly released ChatGPT app for Windows makes perfect sense. It’ll allow you to interact with the chatbot even faster while you’re doing something else on the computer.

I finally tested Advanced Voice Mode on my iPhone 16 Plus using ChatGPT as an improvised museum tour guide. I wanted the chatbot to be on standby in the background, always listening for my questions.

Unfortunately, some issues I didn't account for got in the way. The internet connection wasn't great, so the chatbot wasn't always available. It wasn't always accurate, either. I also can't rule out performance issues on OpenAI's end. But I know the setting I chose for testing the feature did not offer the best conditions.

Overall, the test wasn’t as successful as I had hoped, but it certainly opened my eyes to new possibilities.

Having an AI assistant waiting in the background to answer my questions will change how I multitask on the iPhone and the Mac. I might talk to ChatGPT about the topic at hand while doing something else inside an app.

Eventually, these AI assistants will be able to control the computer on my behalf when instructed. Those instructions will come by voice in natural language. I’ll tell the chatbot to download files, change settings, open websites, and perform internet searches.

It all has to start somewhere, and Advanced Voice Mode represents that start. It only makes sense to see it come to the desktop, even if performance and accuracy are still cause for concern. With OpenAI delivering the feature, its competitors will also strive to offer similar capabilities.

Not to mention the trust issues that inherently come with such AI advancements. I'm not sure I would trust ChatGPT or Gemini to access and control parts of my computers, but that's a story for another day, perhaps one when Siri catches up to ChatGPT and Advanced Voice Mode and can replace them across my devices.

Meanwhile, if you have a Mac or Windows PC that can run the ChatGPT desktop app, you can download the latest update to get Advanced Voice Mode support. You don't need a ChatGPT Plus subscription to try Advanced Voice Mode, but if you stick with the Free tier, you'll be limited to just a few interactions each month.