The journey from a promising AI model to a successful AI product is rarely linear. It’s an iterative process, constantly refined by real-world interaction. While model metrics like accuracy, precision, and F1-score are crucial during development, they often tell only half the story. The true litmus test for any AI product lies in its user signals: how real people interact with it, what value they derive, and what frustrations they encounter.

For AI product managers and ML engineers, a common disconnect emerges: a model might perform excellently on internal benchmarks, yet the product struggles with adoption, retention, or user satisfaction. This gap highlights a critical need for an integrated, comprehensive feedback loop for AI that seamlessly bridges technical model performance with practical user experience. This blog post will explore why this integrated approach is paramount for AI product success, how to design such a system, and how to leverage it for continuous improvement.

The Dual Nature of AI Product Performance: Model Metrics vs. User Signals

To build truly effective AI systems, we must understand the distinct yet complementary roles of technical metrics and human-centric feedback.

Understanding Model Metrics (The “Internal View”)

Model metrics are the bedrock of machine learning development. They quantify the performance of an AI model against a defined dataset and objective. These include:

- Classification: Accuracy, precision, recall, F1-score, AUC-ROC.

- Regression: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), R-squared.

- Other: Latency, throughput, model size.

These metrics are indispensable for:

- Initial Development & Benchmarking: Comparing different algorithms, hyperparameter tuning, and ensuring the model learns the intended patterns.

- Internal Quality Checks: Monitoring the model’s health in a controlled environment.

- Technical Optimization: Identifying bottlenecks or areas for algorithmic improvement.

However, relying solely on these metrics can create a siloed view. A model with 95% accuracy might still fail to deliver value if its 5% error rate occurs in critical user journeys or impacts a significant segment of users disproportionately.
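To make the 95% example concrete, here is a minimal sketch that breaks accuracy down by user segment. The segment names and the data are hypothetical, chosen only so that the overall number looks healthy while one segment does poorly.

```python
from collections import defaultdict

def accuracy_by_segment(records):
    """Return (overall accuracy, accuracy per segment).

    Each record is a (segment, y_true, y_pred) tuple.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for segment, y_true, y_pred in records:
        totals[segment] += 1
        correct[segment] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(totals.values())
    per_segment = {s: correct[s] / totals[s] for s in totals}
    return overall, per_segment

# Hypothetical data: 90 "returning" users all predicted correctly,
# 10 "new" users with only 5 correct predictions.
records = (
    [("returning", 1, 1)] * 90
    + [("new", 1, 1)] * 5
    + [("new", 1, 0)] * 5
)
overall, per_segment = accuracy_by_segment(records)
print(overall)      # 0.95
print(per_segment)  # {'returning': 1.0, 'new': 0.5}
```

The headline accuracy is 95%, yet new users, who are often the ones deciding whether to adopt the product, see a coin flip.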

Capturing Real User Signals (The “External View”)

User signals are the pulse of an AI product in the wild. They represent direct and indirect indicators of how users interact with the product, their satisfaction levels, and the actual value they derive. Capturing these signals provides insights that no technical metric can.

Types of User Signals:

- Explicit Feedback:

  - Surveys & Ratings: In-app “Was this helpful?” prompts, NPS (Net Promoter Score), CSAT (Customer Satisfaction Score) surveys.

  - Direct Feedback Channels: Feature requests, bug reports, support tickets, user interviews, focus groups.

  - A/B Test Results: User preferences for different AI-driven features or outputs.

- Implicit Feedback:

  - Usage Patterns: Click-through rates, session duration, feature adoption/abandonment rates, navigation paths, search queries.

  - Conversion Rates: For AI-driven recommendations or predictions that lead to a business outcome (e.g., purchase, sign-up).

  - Error Rates: How often users encounter system errors or receive obviously incorrect AI outputs.

  - Retention & Churn: Long-term user engagement and attrition rates.

  - Re-engagement: How often users return after an initial interaction.

Why user signals are crucial:

They reveal the true product value, expose real-world performance gaps, identify emerging user needs, validate or invalidate product assumptions, and highlight areas for improvement that model metrics simply cannot. They are the feedback loops for AI that close the gap between theoretical performance and practical utility.
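As a rough illustration of how two of the implicit signals above (click-through rate and re-engagement) could be derived from raw events, consider the sketch below. The event fields `user_id`, `day`, and `type` are assumptions made for this example, not a standard schema.

```python
from collections import Counter

def click_through_rate(events):
    """CTR = clicks / impressions on AI-generated items."""
    counts = Counter(e["type"] for e in events)
    if counts["impression"] == 0:
        return 0.0
    return counts["click"] / counts["impression"]

def returning_user_rate(events):
    """Share of users who were active on more than one distinct day."""
    days_by_user = {}
    for e in events:
        days_by_user.setdefault(e["user_id"], set()).add(e["day"])
    if not days_by_user:
        return 0.0
    returning = sum(1 for days in days_by_user.values() if len(days) > 1)
    return returning / len(days_by_user)

# Hypothetical event log.
events = [
    {"user_id": "u1", "day": "2024-01-01", "type": "impression"},
    {"user_id": "u1", "day": "2024-01-01", "type": "click"},
    {"user_id": "u1", "day": "2024-01-02", "type": "impression"},
    {"user_id": "u2", "day": "2024-01-01", "type": "impression"},
    {"user_id": "u2", "day": "2024-01-01", "type": "impression"},
]
print(click_through_rate(events))   # 0.25
print(returning_user_rate(events))  # 0.5
```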

The Gap: Why Model Metrics Alone Aren’t Enough for Product Success

The chasm between stellar model metrics and disappointing AI product success is a common challenge for AI product managers and ML engineers.

- The “Good on Paper, Bad in Practice” Phenomenon: A model trained on a clean, static dataset might perform admirably in a lab environment. However, once deployed, it faces the messiness of real-world data, concept drift (where the relationship between input and output changes over time), and data drift (where the characteristics of the input data change). This leads to performance degradation that model metrics alone, calculated on static test sets, won’t immediately reveal.

- Subjective vs. Objective: Model metrics are objective and quantifiable, focusing on the model’s internal workings. User experience, however, is inherently subjective, encompassing emotions, usability, and perceived value. A technically “accurate” AI recommendation might still feel irrelevant or intrusive to a user, leading to a poor experience.

- The Black Box Challenge: Users don’t care about the intricate algorithms within the “black box” of an AI model; they care if it solves their problem efficiently and reliably. If the AI output is not intuitive, trustworthy, or helpful, users will disengage, regardless of the underlying model’s precision.

- Unforeseen Behaviors & Edge Cases: No training dataset can perfectly capture the infinite variations of human behavior or real-world scenarios. User signals are essential for identifying previously unseen edge cases, biases, or unexpected interactions that can severely impact the product’s utility or even lead to harmful outcomes.
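One common way to check for the data drift mentioned above is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is one possible implementation using only the standard library; the bin count and the `eps` floor for empty bins are arbitrary choices made for this example.

```python
import math

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample and a production sample.

    Bins are equal-width over the range of `expected`; values in
    `actual` that fall outside that range are clamped to the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: production inputs shifted upward by 30.
training = list(range(100))
production = [v + 30 for v in training]
print(population_stability_index(training, training))        # 0.0
print(population_stability_index(training, production) > 0.25)  # True
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the right threshold depends on the feature and should be validated against your own data.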

Designing a Comprehensive AI Feedback Loop

Building an effective feedback loop for AI products requires a thoughtful, integrated approach that combines the rigor of ML engineering with the empathy of AI product management.

A. Defining Success Metrics (Product + ML Alignment)

The first step is to establish a shared definition of “success” that bridges the technical and business worlds. This means mapping user signals to specific model improvement goals.

- Example 1: If user feedback indicates low engagement with search results (signal), it might point to a need to improve search result relevance or diversity (model objective).

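- Example 2: High user satisfaction with a personalized content feed (signal) usually shows up as higher click-through rates and longer sessions. Tying those signals to the recommendation engine’s offline evaluation, such as ranking precision (model metric), shows whether the offline number actually predicts the behavior you care about.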

Key Performance Indicators (KPIs) should integrate both. Instead of just “model accuracy,” consider “successful recommendation click-through rate” or “AI-assisted task completion rate.” This ensures both teams are rowing in the same direction.
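A blended KPI such as “AI-assisted task completion rate” can be computed directly from session data. The sketch below assumes a hypothetical `Session` record with two boolean fields; real products will need their own definition of what counts as “used the AI feature” and “completed the task.”

```python
from dataclasses import dataclass

@dataclass
class Session:
    used_ai_feature: bool
    task_completed: bool

def ai_assisted_task_completion_rate(sessions):
    """Share of AI-assisted sessions in which the user finished the task.

    Returns None when no session used the AI feature, so that
    "no data" is not confused with a rate of zero.
    """
    assisted = [s for s in sessions if s.used_ai_feature]
    if not assisted:
        return None
    return sum(s.task_completed for s in assisted) / len(assisted)

# Hypothetical sessions: 4 used the AI feature, 3 of those completed.
sessions = [
    Session(True, True),
    Session(True, True),
    Session(True, True),
    Session(True, False),
    Session(False, True),
]
print(ai_assisted_task_completion_rate(sessions))  # 0.75
```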

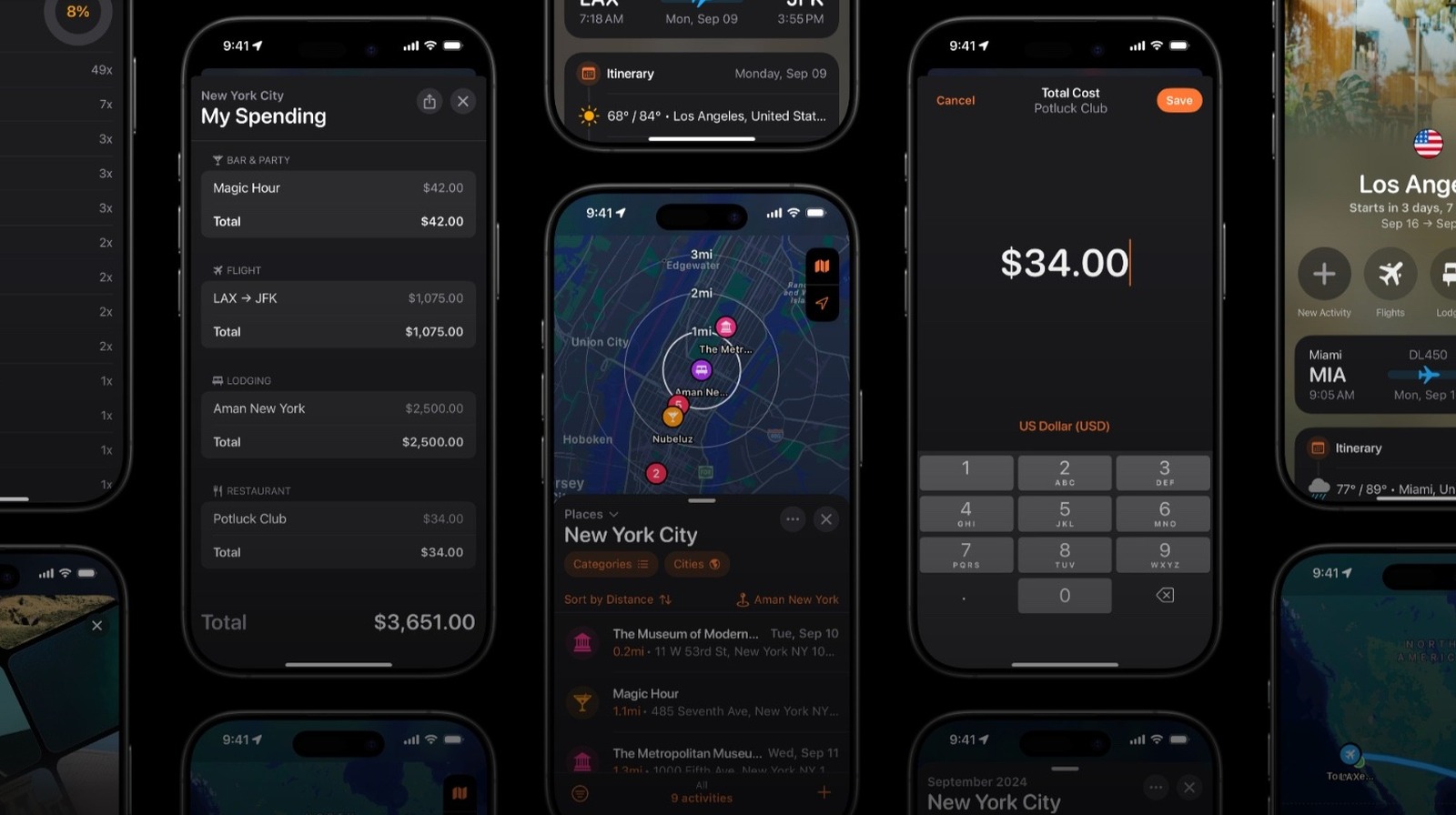

B. Data Collection Strategies for User Signals

Effective feedback loops depend on robust data collection.

- Instrumentation: Implement comprehensive event tracking and in-app analytics to record user interactions with AI features. This includes clicks, views, hovers, dismissals, edits, and any other relevant actions.

- Feedback Mechanisms: Strategically place explicit feedback opportunities within the product UI (e.g., “Rate this translation,” “Thumbs up/down for this recommendation”). These should be lightweight and non-intrusive.

- Observability Tools: Beyond standard analytics, leverage specialized AI observability platforms that can log model predictions alongside user actions, allowing for direct correlation between AI output and user response.
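The key to correlating AI output with user response is a shared identifier written at prediction time and echoed by every later user event. The sketch below shows the idea with an in-memory list standing in for a real log sink; the function names and event fields are hypothetical.

```python
import json
import time
import uuid

def log_prediction(log, model_version, features, output):
    """Record a model prediction and return its unique id."""
    prediction_id = str(uuid.uuid4())
    log.append(json.dumps({
        "event": "prediction",
        "prediction_id": prediction_id,
        "model_version": model_version,
        "features": features,
        "output": output,
        "ts": time.time(),
    }))
    return prediction_id

def log_user_action(log, prediction_id, action):
    """Record what the user did with a specific prediction."""
    log.append(json.dumps({
        "event": "user_action",
        "prediction_id": prediction_id,
        "action": action,  # e.g. "click", "dismiss", "thumbs_up"
        "ts": time.time(),
    }))

# Usage: the UI receives prediction_id with the model output and
# sends it back with every interaction.
log = []
pid = log_prediction(log, "v1", {"query": "red shoes"}, ["item_42"])
log_user_action(log, pid, "thumbs_up")
```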

C. Integrating Data Streams

To make sense of the vast amount of data, it must be centralized and accessible.

- Centralized Data Platform: Utilize data lakes or warehouses (e.g., Snowflake, Databricks, BigQuery) to store both model performance logs and detailed user interaction data. This provides a single source of truth.

- Data Pipelines: Establish robust ETL (Extract, Transform, Load) or ELT pipelines to ensure data from various sources (application logs, model inference logs, user databases, feedback forms) is collected, cleaned, and made available for analysis in near real-time or regular batches.
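Once both streams land in one place, the central transformation is a join between inference logs and user events. In a warehouse this would normally be a SQL left join; the sketch below expresses the same logic in plain Python, assuming both streams carry a hypothetical `prediction_id` field.

```python
def join_predictions_with_feedback(predictions, feedback):
    """Left-join predictions to user actions on prediction_id.

    Predictions with no recorded action are kept with an empty list,
    because "the user did nothing" is itself a signal.
    """
    actions_by_id = {}
    for f in feedback:
        actions_by_id.setdefault(f["prediction_id"], []).append(f["action"])
    return [
        {**p, "actions": actions_by_id.get(p["prediction_id"], [])}
        for p in predictions
    ]

# Hypothetical rows from the two streams.
predictions = [
    {"prediction_id": "p1", "model_version": "v1", "output": "item_42"},
    {"prediction_id": "p2", "model_version": "v1", "output": "item_7"},
]
feedback = [
    {"prediction_id": "p1", "action": "click"},
    {"prediction_id": "p1", "action": "thumbs_up"},
]
joined = join_predictions_with_feedback(predictions, feedback)
print(joined[0]["actions"])  # ['click', 'thumbs_up']
print(joined[1]["actions"])  # []
```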

D. Analysis and Interpretation

Raw data is useless without intelligent analysis.

- Dashboards & Visualizations: Create integrated dashboards that display model health metrics alongside key user engagement and satisfaction metrics. Visualize trends, correlations, and anomalies.

- Anomaly Detection: Implement automated systems to flag sudden drops or spikes in either model performance or critical user signals, indicating a potential issue or opportunity.

- Qualitative Analysis: Don’t neglect the “why.” Regularly review explicit feedback, conduct user interviews, and analyze support tickets to understand the underlying reasons behind quantitative trends.
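For the anomaly detection step, a simple starting point is a rolling z-score: compare each new value with the mean and standard deviation of a trailing window. This is only one of many possible detectors, and the window size and threshold below are arbitrary assumptions that would need tuning on real data.

```python
from statistics import mean, stdev

def flag_anomalies(values, window=7, threshold=3.0):
    """Return indices whose value deviates from the trailing window
    by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all is worth a look.
            if values[i] != mu:
                flagged.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Hypothetical daily click-through rates with a sudden drop on day 8.
daily_ctr = [0.30, 0.31, 0.29, 0.30, 0.32, 0.31, 0.30, 0.31, 0.12, 0.30]
print(flag_anomalies(daily_ctr))  # [8]
```

The same function can run over a model metric (for example, daily precision) and a user signal side by side, which makes it easier to see whether the two move together.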

Acting on Feedback: The Iterative Improvement Cycle

A feedback loop is only valuable if it drives action. This involves a continuous cycle of identification, analysis, translation, and iteration.

A. Identify & Prioritize Issues

When a discrepancy arises between model metrics and user signals, a root cause analysis is crucial. Is it:

- A data issue (e.g., training-serving skew, data drift)?

- A model flaw (e.g., bias, underfitting, incorrect objective function)?

- A product design problem (e.g., bad UI, misleading prompts)?

- Concept drift (the underlying problem itself has changed)?

Prioritize issues based on their user impact, business value, and feasibility of resolution.
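One lightweight way to apply these three criteria is to score each issue and sort. The sketch below multiplies three 1-to-5 ratings; both the scale and the multiplicative formula are assumptions made for illustration, and teams may prefer a different weighting.

```python
def prioritize(issues):
    """Sort issues by user_impact * business_value * feasibility,
    highest score first. Each rating is assumed to be on a 1-5 scale."""
    def score(issue):
        return (
            issue["user_impact"]
            * issue["business_value"]
            * issue["feasibility"]
        )
    return sorted(issues, key=score, reverse=True)

# Hypothetical backlog.
issues = [
    {"name": "training-serving skew", "user_impact": 5, "business_value": 4, "feasibility": 2},
    {"name": "misleading prompt copy", "user_impact": 3, "business_value": 3, "feasibility": 5},
    {"name": "rare-category errors", "user_impact": 2, "business_value": 2, "feasibility": 2},
]
for issue in prioritize(issues):
    print(issue["name"])
# misleading prompt copy
# training-serving skew
# rare-category errors
```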

B. Translating User Signals into Model Improvements

This is where AI product management meets ML engineering to close the loop.

- Retraining Data Augmentation: Use implicit feedback (e.g., user corrections to AI output, ignored recommendations, search queries) to enrich and diversify training datasets. If a user consistently ignores a certain type of recommendation, that implicitly tells the model it’s not relevant.

- Feature Engineering: User behavior can reveal new, powerful features. For example, if users consistently refine AI-generated content by adding a specific keyword, that keyword could become a new feature.

- Model Architecture Refinement: If feedback reveals a specific type of error (e.g., model struggling with rare categories), it might necessitate exploring different model architectures or fine-tuning existing ones.

- Human-in-the-Loop (HITL): For complex or critical use cases, human reviewers can annotate user-generated content or model outputs, providing high-quality labels for subsequent model retraining. This is particularly valuable for addressing AI bias or ensuring fairness.
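The retraining idea above, treating consistently ignored recommendations as negative examples, can be sketched as a small labeling function. The event fields and the `min_impressions` cutoff are hypothetical; the cutoff exists so that a single unseen impression is not mistaken for disinterest.

```python
from collections import defaultdict

def implicit_labels(events, min_impressions=3):
    """Derive training labels per (user_id, item_id) pair.

    1    -> the user clicked the item at least once
    0    -> the item was shown min_impressions or more times, never clicked
    none -> too little evidence; the pair is left out
    """
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for e in events:
        key = (e["user_id"], e["item_id"])
        if e["type"] == "impression":
            shown[key] += 1
        elif e["type"] == "click":
            clicked[key] += 1

    labels = {}
    for key, n in shown.items():
        if clicked[key] > 0:
            labels[key] = 1
        elif n >= min_impressions:
            labels[key] = 0
    return labels

# Hypothetical events for one user and three items.
events = (
    [{"user_id": "u1", "item_id": "a", "type": "impression"},
     {"user_id": "u1", "item_id": "a", "type": "click"}]
    + [{"user_id": "u1", "item_id": "b", "type": "impression"}] * 3
    + [{"user_id": "u1", "item_id": "c", "type": "impression"}]
)
print(implicit_labels(events))
# {('u1', 'a'): 1, ('u1', 'b'): 0}
```

Labels derived this way are noisy, so it is worth spot-checking a sample with human reviewers before adding them to a training set.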

C. Product Iteration & A/B Testing

Once improvements are made, they need to be validated.

- Deployment Strategies: Employ gradual rollouts (e.g., canary deployments) or A/B testing to compare the new model/feature’s performance against the old one.

- Monitoring Post-Deployment: Immediately after deployment, intensely monitor both user signals and model metrics to observe the real-world impact of the changes.
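When comparing an old and a new model on a rate such as click-through or task completion, a two-proportion z-test is one standard way to judge whether the difference is larger than chance. The sketch below uses only the standard library; the counts are hypothetical, and it assumes independent users and a fixed sample size decided before the test.

```python
import math

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference B - A
    between two conversion rates, using the pooled standard error."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both groups all-success or all-failure
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical experiment: control converts 100/1000, new model 130/1000.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(z > 0, p < 0.05)  # True True
```

A statistically significant lift on one signal is not the whole picture; check the other user signals and the model metrics for regressions before a full rollout.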

D. The Culture of Continuous Learning

A truly effective feedback loop is not just a technical system; it’s a cultural commitment.

- Cross-functional Collaboration: Foster strong ties between product managers, ML engineers, data scientists, and UX researchers. Regular sync-ups, shared goals, and mutual understanding are vital.

- Regular Reviews: Conduct frequent “AI product reviews” where both sets of metrics are discussed, insights are shared, and action items are assigned.

Best Practices and Common Pitfalls

Implementing an effective AI product feedback loop is an ongoing journey.

A. Best Practices:

- Start Simple, Iterate: Don’t try to build the perfect, all-encompassing system overnight. Start with a few key metrics and signals, then expand.

- Define Clear Metrics Upfront: Before collecting data, know what success looks like from both a model and a product perspective.

- Automate Data Collection & Dashboards: Reduce manual effort to ensure timely insights.

- Foster Cross-functional Ownership: Ensure PMs, ML engineers, data scientists, and UX researchers are all invested in and understand the feedback loop.

- Prioritize User Privacy and Data Security: Design your system with privacy-by-design principles and adhere to all relevant regulations.

B. Common Pitfalls:

- Ignoring One Set of Metrics: Over-relying on model metrics while neglecting user signals, or vice versa, leads to a skewed perspective.

- Too Much Data, Not Enough Insight: Collecting vast amounts of data without a clear strategy for analysis and action can be overwhelming and unproductive.

- Lack of Clear Ownership: Without a designated owner for managing and acting on the feedback loop, insights can get lost.

- Failure to Act on Insights (Analysis Paralysis): Data is only valuable if it leads to decisions and iterations.

- Designing Overly Complex Systems Too Early: This can lead to delays, technical debt, and a system that’s difficult to adapt.

Conclusion

The pursuit of AI product success is not solely about building the most technically advanced models. It’s about creating AI products that genuinely solve user problems, adapt to changing needs, and deliver continuous value. This critical transformation happens when AI product managers and ML engineers collaborate to establish and leverage a robust feedback loop for AI.

By strategically integrating granular model metrics with invaluable real user signals, organizations can gain a holistic understanding of their AI product’s performance, quickly identify areas for improvement, and drive agile, user-centric iterations. In the dynamic world of AI, continuous learning through comprehensive feedback is not just a best practice; it’s the fundamental engine for building resilient, effective, and truly successful AI systems. Start building your integrated feedback loop today, and transform your AI products from static models into dynamic, continuously improving solutions.