Cloudflare has recently redesigned Workers KV around a hybrid storage architecture that automatically routes objects between a distributed database and object storage based on their size, while also running two independent storage backends in parallel. The change reduced p99 read latencies for the global key-value store from 200ms to under 5ms, and the service continues to handle hundreds of billions of key-value pairs.

Cloudflare performed this rearchitecture in response to a June 12, 2025, outage that affected Workers KV when Google Cloud Platform experienced a global service disruption. Earlier in the year, Cloudflare moved from a dual-backend setup to using GCP exclusively to reduce operational complexity. However, the GCP outage, which lasted over two hours, demonstrated the risks of external dependencies for critical edge infrastructure.

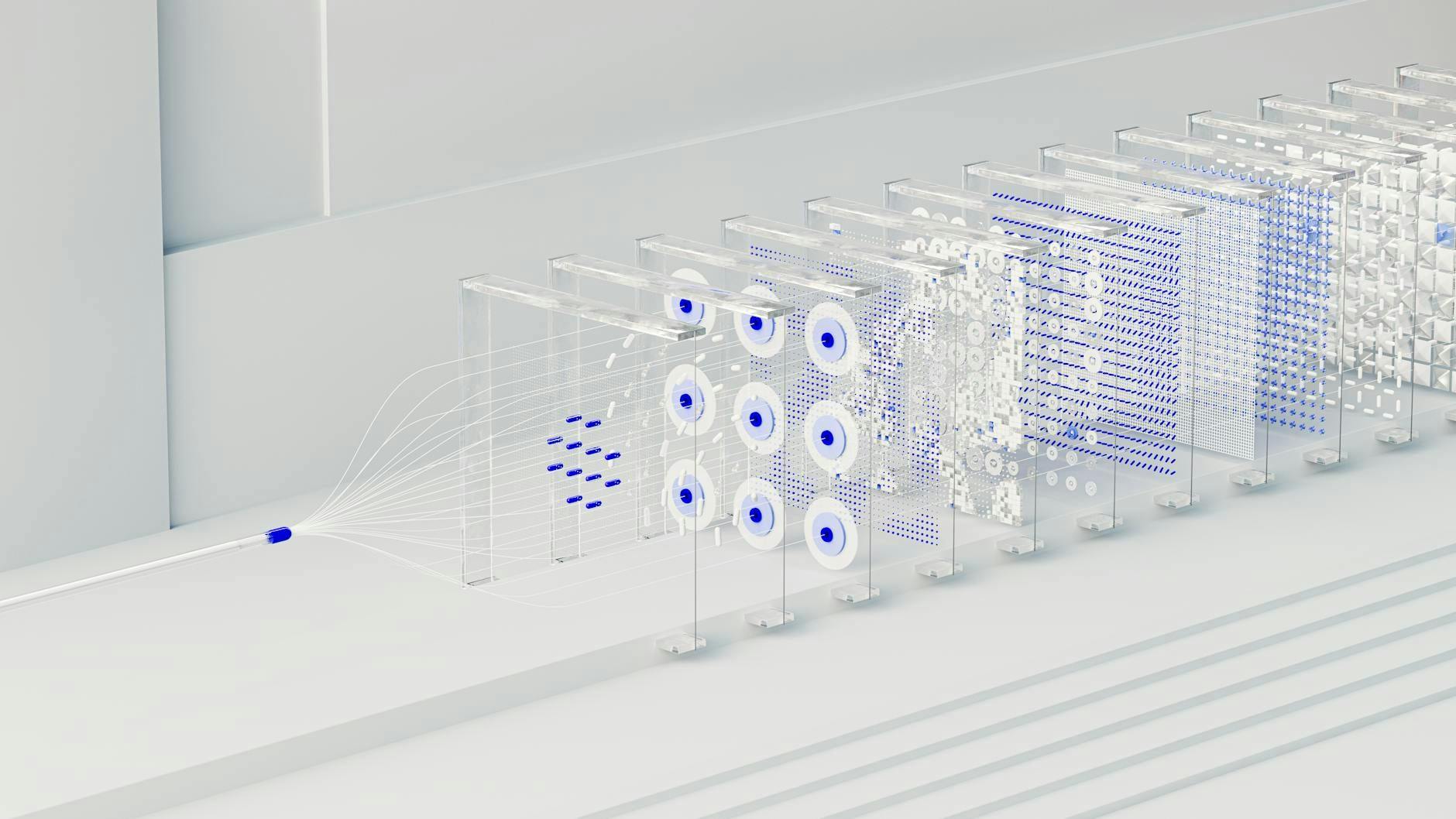

The new system combines Cloudflare’s own distributed database with R2 object storage through size-based routing. Small objects, which make up the majority of Workers KV traffic (the median object is 288 bytes), are stored in Cloudflare’s distributed database, the same system that powers R2 and Durable Objects. Objects larger than a configurable threshold are automatically routed to R2 object storage.
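The size-based routing can be pictured with a minimal Python sketch. It is not Cloudflare’s code: `HybridStore`, the two in-memory dicts standing in for the distributed database and R2, and the 1,024-byte default threshold are all hypothetical, since the article only says the threshold is configurable.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical default; the article only says the threshold is configurable.
DEFAULT_THRESHOLD_BYTES = 1024


@dataclass
class HybridStore:
    """Toy model of size-based routing between two storage backends."""

    threshold_bytes: int = DEFAULT_THRESHOLD_BYTES
    # Stand-in for Cloudflare's distributed database (small objects).
    database: Dict[str, bytes] = field(default_factory=dict)
    # Stand-in for R2 object storage (objects above the threshold).
    object_storage: Dict[str, bytes] = field(default_factory=dict)

    def backend_for(self, value: bytes) -> str:
        """Return the name of the backend a value of this size belongs in."""
        return "database" if len(value) <= self.threshold_bytes else "object_storage"

    def put(self, key: str, value: bytes) -> str:
        target = self.backend_for(value)
        # Drop any existing copy so each key lives in exactly one backend,
        # even when a rewrite moves it across the threshold.
        self.database.pop(key, None)
        self.object_storage.pop(key, None)
        getattr(self, target)[key] = value
        return target

    def get(self, key: str) -> Optional[bytes]:
        # Callers never need to know which backend holds the key.
        if key in self.database:
            return self.database[key]
        return self.object_storage.get(key)


store = HybridStore()
store.put("redirect:/old-path", b"x" * 288)  # -> "database"
store.put("asset:logo", b"x" * 4096)         # -> "object_storage"
```

Keeping the routing decision inside `put`/`get` mirrors the article’s point that routing is transparent to callers: clients see one key space regardless of where a value physically lands.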

Cloudflare engineers explain that “for workloads dominated by sub-1KB objects at this scale, database storage becomes significantly more efficient and cost-effective than traditional object storage.” The routing decision happens transparently through KV Storage Proxy (KVSP), which provides HTTP interfaces to database clusters while managing connectivity, authentication, and shard routing.
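The article does not say how KVSP maps keys to database shards. Purely as an illustration of one common technique, assumed here rather than taken from the source, rendezvous (highest-random-weight) hashing picks a shard deterministically per key and only moves keys whose shard disappears. The function and shard names below are hypothetical.

```python
import hashlib
from typing import Sequence


def _score(shard: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (shard, key) pair."""
    digest = hashlib.sha256(f"{shard}:{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def route_to_shard(key: str, shards: Sequence[str]) -> str:
    """Rendezvous hashing: the shard with the highest score owns the key."""
    if not shards:
        raise ValueError("no shards available")
    return max(shards, key=lambda shard: _score(shard, key))


shards = ["db-cluster-1", "db-cluster-2", "db-cluster-3"]  # hypothetical names
owner = route_to_shard("user:42:session", shards)
# Removing a shard only reassigns the keys that shard owned.
```

Because every proxy instance computes the same scores, any instance can route a key without coordination; the trade-off compared with an explicit lookup table is that placement cannot be tuned per key.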

Workers KV’s revised architecture (source)

To run two storage providers side by side reliably, Cloudflare built several consistency mechanisms. The system writes to both backends simultaneously and returns success as soon as the first backend confirms persistence. Failed writes are queued for background reconciliation, and divergence between the backends is caught through write-time error handling, read-time inconsistency detection, and background crawlers that continuously scan for data divergence. In addition, each key-value pair carries a high-precision timestamp from Cloudflare Time Services, which is used to determine the correct ordering of writes across the independent storage providers.
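The write path described above can be sketched with `asyncio`: write to both backends concurrently, acknowledge on the first confirmed write, record failures for later repair, and let timestamps decide which version wins. The last-writer-wins rule, the in-memory `Backend` and `DualWriter` classes, and `time.time_ns()` standing in for Cloudflare Time Services are assumptions for this sketch, not details from the article; read-time detection and crawlers are folded into a single `reconcile()` pass.

```python
import asyncio
import time
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Entry:
    value: bytes
    timestamp_ns: int  # assumption: local clock stands in for Cloudflare Time Services


class Backend:
    """In-memory stand-in for one storage provider (hypothetical)."""

    def __init__(self, name: str, delay_s: float = 0.0, healthy: bool = True):
        self.name, self.delay_s, self.healthy = name, delay_s, healthy
        self.data: Dict[str, Entry] = {}

    async def write(self, key: str, entry: Entry) -> None:
        await asyncio.sleep(self.delay_s)
        if not self.healthy:
            raise IOError(f"{self.name} unavailable")
        current = self.data.get(key)
        # Last-writer-wins (assumed): an older timestamp never overwrites a newer one.
        if current is None or entry.timestamp_ns > current.timestamp_ns:
            self.data[key] = entry

    def read(self, key: str) -> Optional[Entry]:
        return self.data.get(key)


class DualWriter:
    def __init__(self, backends: List[Backend]):
        self.backends = backends
        self.needs_repair: List[str] = []  # keys with at least one failed write
        self._inflight: List[asyncio.Task] = []

    async def _write_one(self, backend: Backend, key: str, entry: Entry) -> bool:
        try:
            await backend.write(key, entry)
            return True
        except IOError:
            self.needs_repair.append(key)  # write-time error handling
            return False

    async def put(self, key: str, value: bytes) -> Entry:
        entry = Entry(value, time.time_ns())
        tasks = [asyncio.create_task(self._write_one(b, key, entry)) for b in self.backends]
        self._inflight.extend(tasks)
        # Acknowledge on the first confirmed write; slower writes keep running.
        for next_done in asyncio.as_completed(tasks):
            if await next_done:
                return entry
        raise IOError("write failed on every backend")

    async def drain(self) -> None:
        """Wait for background writes to finish."""
        await asyncio.gather(*self._inflight)
        self._inflight.clear()

    async def reconcile(self) -> None:
        """Copy the newest version of each flagged key to any backend behind it."""
        pending, self.needs_repair = self.needs_repair, []
        for key in set(pending):
            versions = [e for e in (b.read(key) for b in self.backends) if e is not None]
            if not versions:
                continue  # the write never persisted anywhere
            newest = max(versions, key=lambda e: e.timestamp_ns)
            for backend in self.backends:
                current = backend.read(key)
                if current is None or current.timestamp_ns < newest.timestamp_ns:
                    try:
                        await backend.write(key, newest)
                    except IOError:
                        self.needs_repair.append(key)  # retry on a later pass
```

Acknowledging on the first write keeps client latency close to the faster provider; the cost is a window in which the providers disagree, which the repair queue and timestamp comparison exist to close.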

During the initial rollout to internal customers, Cloudflare discovered it had inadvertently regressed read-your-own-write (RYOW) consistency: the guarantee that a read issued from the same point of presence immediately after a write returns the updated value rather than stale data.

To address this, engineers developed an “adversarial test framework designed to maximize the likelihood of hitting consistency edge cases by rapidly interspersing reads and writes to a small set of keys from a handful of locations around the world.” This testing approach allowed them to measure and fix RYOW violations while preserving the performance characteristics that make Workers KV effective for read-heavy workloads.
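A toy version of such an adversarial test follows. It models each point of presence as a local read cache in front of a shared origin, fires random reads and writes at a handful of keys from a few hypothetical locations, and counts reads that return something older than that location’s own last write. The `ToyKV` model and its caching behavior are assumptions chosen to reproduce a RYOW regression; this is not Cloudflare’s framework.

```python
import random
from typing import Dict, List, Optional, Tuple


class ToyKV:
    """Shared origin plus one read cache per point of presence (hypothetical)."""

    def __init__(self, update_local_cache_on_write: bool):
        self.update_local_cache_on_write = update_local_cache_on_write
        self.origin: Dict[str, int] = {}
        self.caches: Dict[str, Dict[str, int]] = {}

    def write(self, pop: str, key: str, version: int) -> None:
        self.origin[key] = version
        if self.update_local_cache_on_write:
            # Fix: the writing location's cache reflects its own write immediately.
            self.caches.setdefault(pop, {})[key] = version

    def read(self, pop: str, key: str) -> Optional[int]:
        cache = self.caches.setdefault(pop, {})
        if key not in cache and key in self.origin:
            cache[key] = self.origin[key]  # cached with no expiry in this toy model
        return cache.get(key)


def count_ryow_violations(store: ToyKV, pops: List[str], keys: List[str],
                          ops: int, seed: int = 0) -> int:
    """Randomly interleave reads and writes; count reads older than the reader's own last write."""
    rng = random.Random(seed)
    last_written: Dict[Tuple[str, str], int] = {}
    version = 0  # globally increasing, so a larger number is always newer
    violations = 0
    for _ in range(ops):
        pop, key = rng.choice(pops), rng.choice(keys)
        if rng.random() < 0.5:
            version += 1
            store.write(pop, key, version)
            last_written[(pop, key)] = version
        else:
            seen = store.read(pop, key)
            expected = last_written.get((pop, key))
            if expected is not None and (seen is None or seen < expected):
                violations += 1
    return violations


pops = ["lhr", "sin", "iad"]  # hypothetical locations
keys = ["a", "b", "c", "d"]   # deliberately small key set to force collisions
buggy = count_ryow_violations(ToyKV(update_local_cache_on_write=False), pops, keys, 2000)
fixed = count_ryow_violations(ToyKV(update_local_cache_on_write=True), pops, keys, 2000)
```

With `update_local_cache_on_write=False`, a location that cached a key before writing it keeps serving the stale copy, and the checker flags every such read; updating the local cache on write removes those violations. Concentrating traffic on a few keys is what makes these collisions frequent enough to measure.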

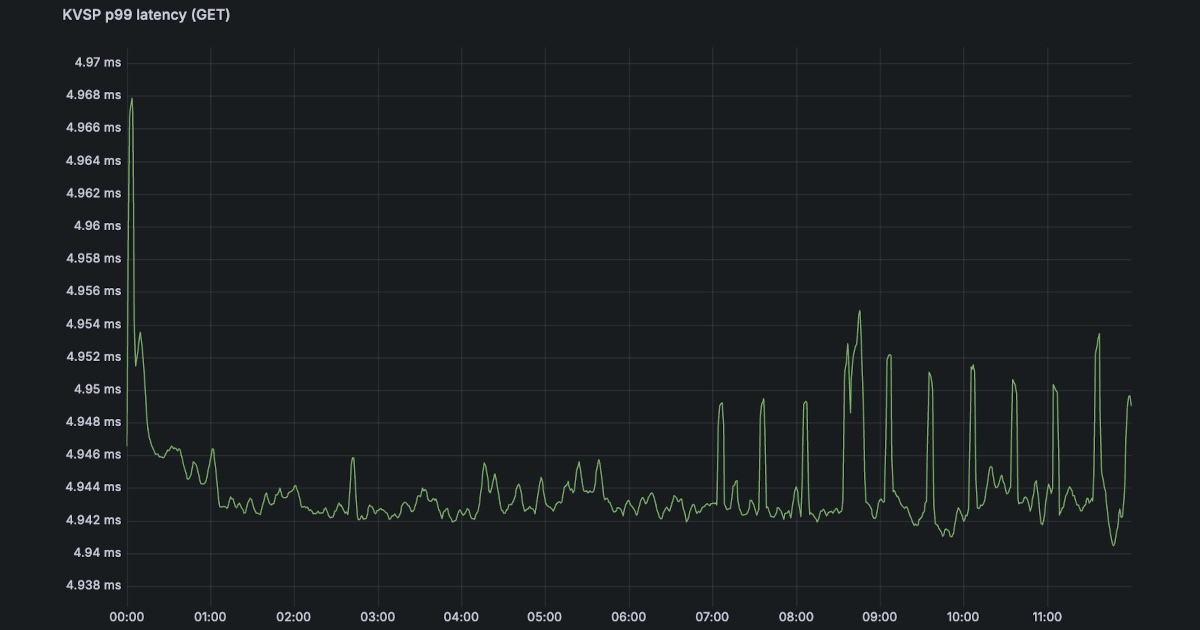

The architectural changes delivered significant performance improvements alongside increased redundancy. Internal p99 latencies for reads from the new Cloudflare backend dropped to under 5 milliseconds, compared to 200ms at p99 for the third-party object storage provider. These improvements were “particularly pronounced in Europe, where our new storage backend is located, but the benefits extended far beyond what geographic locality alone could explain.”

KVSP p99 latency (source)

Third-party cold read p99 latency (source)

Workers KV is Cloudflare’s eventually-consistent key-value store that operates at a global scale across its edge network. Initially launched in 2018, the service provides fast, distributed storage for configuration data, session information, and static assets that need to be available instantly around the globe. Companies use Workers KV for diverse applications, including mass redirects handling billions of HTTP requests, API gateway implementations, A/B testing configurations, user authentication systems, and dynamic content personalization based on geographic location or device type.