Cloudflare Workflows is a durable execution engine for building multi-step application orchestration. It initially supported only TypeScript and now also supports Python in beta; according to the company, Python is the de facto language of choice for data pipelines, artificial intelligence/machine learning, and task automation among data engineers.

Cloudflare Workflows are built on the underlying infrastructure of Workers and Durable Objects. Durable Objects provide the state persistence and coordination necessary for long-running processes, ensuring that workflows maintain their state and can retry individual steps in the event of failure.

The company introduced Workflows a year ago and detailed how they work:

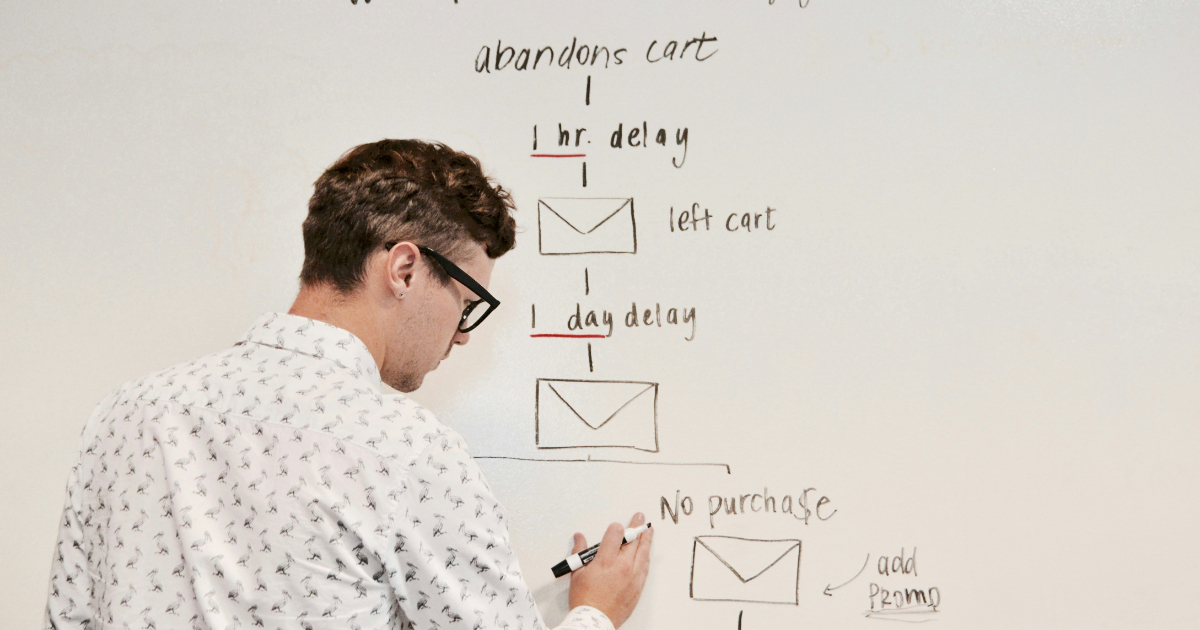

A core building block of every Workflow is the step: an individually retriable component of your application that can optionally emit state. That state then persists, even if subsequent steps fail. This means your application doesn’t have to restart, allowing it to recover more quickly from failure scenarios and avoid redundant work.
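The recovery behavior described above can be illustrated with a toy, in-memory simulation. This is a minimal sketch and not Cloudflare's SDK: `ToyWorkflow`, its `do` method, and the `store` dictionary are hypothetical names invented for illustration, and a plain dict stands in for the durable storage a real engine would use.

```python
class ToyWorkflow:
    """In-memory stand-in for a durable workflow (hypothetical, not Cloudflare's API)."""

    def __init__(self, store=None):
        # A real engine would persist this mapping durably; here it is a dict.
        self.store = {} if store is None else store
        self.calls = []  # names of step bodies that were actually executed

    def do(self, name, fn, retries=3):
        # A step that already completed returns its persisted state.
        if name in self.store:
            return self.store[name]
        last_error = None
        for _ in range(retries):
            try:
                self.calls.append(name)
                result = fn()
            except Exception as exc:
                last_error = exc
                continue
            self.store[name] = result  # persist the emitted state
            return result
        raise RuntimeError(f"step {name!r} failed after {retries} attempts") from last_error


def run(workflow, fail_second_step):
    rows = workflow.do("fetch", lambda: [1, 2, 3])

    def total():
        if fail_second_step:
            raise ValueError("simulated downstream outage")
        return sum(rows)

    return workflow.do("sum", total, retries=2)


store = {}
first = ToyWorkflow(store)
try:
    run(first, fail_second_step=True)
except RuntimeError:
    pass  # "sum" exhausted its retries, but the output of "fetch" is still stored

# A later run over the same store skips "fetch" and only executes "sum".
second = ToyWorkflow(store)
result = run(second, fail_second_step=False)
print(result, first.calls, second.calls)
```

In this sketch the second run never re-executes the `fetch` step, which is the "avoid redundant work" property the quote refers to.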

In a recent blog post on Python support, the authors explain:

Over the years, we’ve been giving developers the tools to build these applications in Python, on Cloudflare. In 2020, we brought Python to Workers via Transcrypt before directly integrating Python into workerd in 2024. Earlier this year, we added support for CPython, along with any packages built in Pyodide, such as matplotlib and pandas, in Workers. Now, Python Workflows are supported as well, so developers can create robust applications using the language they know best.

Python Workflows use the same underlying infrastructure for durable execution while giving Python users an idiomatic way to write their workflows. The company also aimed for complete feature parity between the JavaScript and Python SDKs, which is now possible because Cloudflare Workers supports Python natively.

A significant focus of the company was ensuring the Python SDK feels “Pythonic.” The platform provides built-in support for asynchronous operations and concurrency, enabling developers to manage dependencies across steps, even when tasks can run concurrently.

There are two ways to do this. The first uses Python’s asyncio.gather for concurrent execution, which works because JavaScript promises (thenables) are proxied into Python awaitables. The second, more idiomatic approach uses Python decorators (@step.do) to define steps and their dependencies, allowing a cleaner declaration of a Directed Acyclic Graph (DAG) execution flow. The engine automatically manages state and data flow between steps.
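Both styles can be sketched in plain Python without the Workers runtime. The example below is an illustration under stated assumptions, not the Cloudflare SDK: `ToyStep` and its `depends` parameter are hypothetical stand-ins written for this sketch, and only `asyncio.gather` and `asyncio.create_task` are real standard-library calls.

```python
import asyncio


class ToyStep:
    """Hypothetical stand-in for a workflow step object; not Cloudflare's SDK."""

    def __init__(self):
        self.tasks = {}  # step name -> asyncio.Task, so each step runs once
        self.order = []  # completion order, for inspection

    def do(self, name, depends=()):
        def decorator(fn):
            async def body():
                # Resolve all dependencies concurrently, then pass their
                # results to the step function as positional arguments.
                inputs = await asyncio.gather(*(dep() for dep in depends))
                result = await fn(*inputs)
                self.order.append(name)
                return result

            def run():
                # Reuse the existing task if this step was already started.
                if name not in self.tasks:
                    self.tasks[name] = asyncio.create_task(body())
                return self.tasks[name]

            return run
        return decorator


async def main():
    step = ToyStep()

    @step.do("fetch users")
    async def users():
        await asyncio.sleep(0.02)
        return ["ada", "grace"]

    @step.do("fetch orders")
    async def orders():
        await asyncio.sleep(0.01)
        return [3, 5]

    @step.do("report", depends=[users, orders])
    async def report(names, counts):
        return dict(zip(names, counts))

    # Style 1: asyncio.gather awaits independent steps concurrently.
    pair = await asyncio.gather(users(), orders())
    # Style 2: awaiting the final step resolves its declared dependencies.
    summary = await report()
    return pair, summary, step.order


pair, summary, order = asyncio.run(main())
print(pair, summary, order)
```

Declaring dependencies on the decorator keeps the graph visible at the point where each step is defined, whereas the `asyncio.gather` style leaves the ordering to the calling code.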

(Source: Matt Silverlock on X)

The introduction of Python opens up Workflows to complex, long-running applications that benefit from orchestration:

- AI/ML Model Training: Orchestrating sequences such as dataset labeling, feeding data to the model, awaiting model run completion, evaluating loss, and notifying a human for manual adjustment before continuing the loop.

- Data Pipelines: Automating complex ingest and processing pipelines via a defined set of idempotent steps, ensuring reliable data transformation.

- AI Agents: Building multi-step agents (e.g., a grocery agent that compiles lists, checks inventory, and places an order) where state persistence and retries are vital for reaching a successful conclusion.
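Idempotency matters in these use cases because a retried step replays its work from the start. The following is a minimal sketch, assuming a hypothetical `load` step and an in-memory dict in place of a real table; it shows a keyed upsert that can be retried after a partial failure without duplicating rows.

```python
def load(records, table, fail_after=None):
    """Upsert records keyed by id; optionally fail partway to mimic a crash."""
    for i, rec in enumerate(records):
        if fail_after is not None and i == fail_after:
            raise ConnectionError("simulated crash mid-load")
        table[rec["id"]] = rec  # writing the same id twice leaves one row


records = [
    {"id": "a", "qty": 1},
    {"id": "b", "qty": 2},
    {"id": "c", "qty": 3},
]
table = {}

try:
    load(records, table, fail_after=2)  # writes "a" and "b", then fails
except ConnectionError:
    load(records, table)                # the retry replays the whole step

print(sorted(table))
```

Had the step appended rows to a list instead of upserting by key, the retry would have left duplicates of the first two records.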

Lastly, sample Python workflows are available on GitHub.