Table of Links

Abstract and 1 Introduction

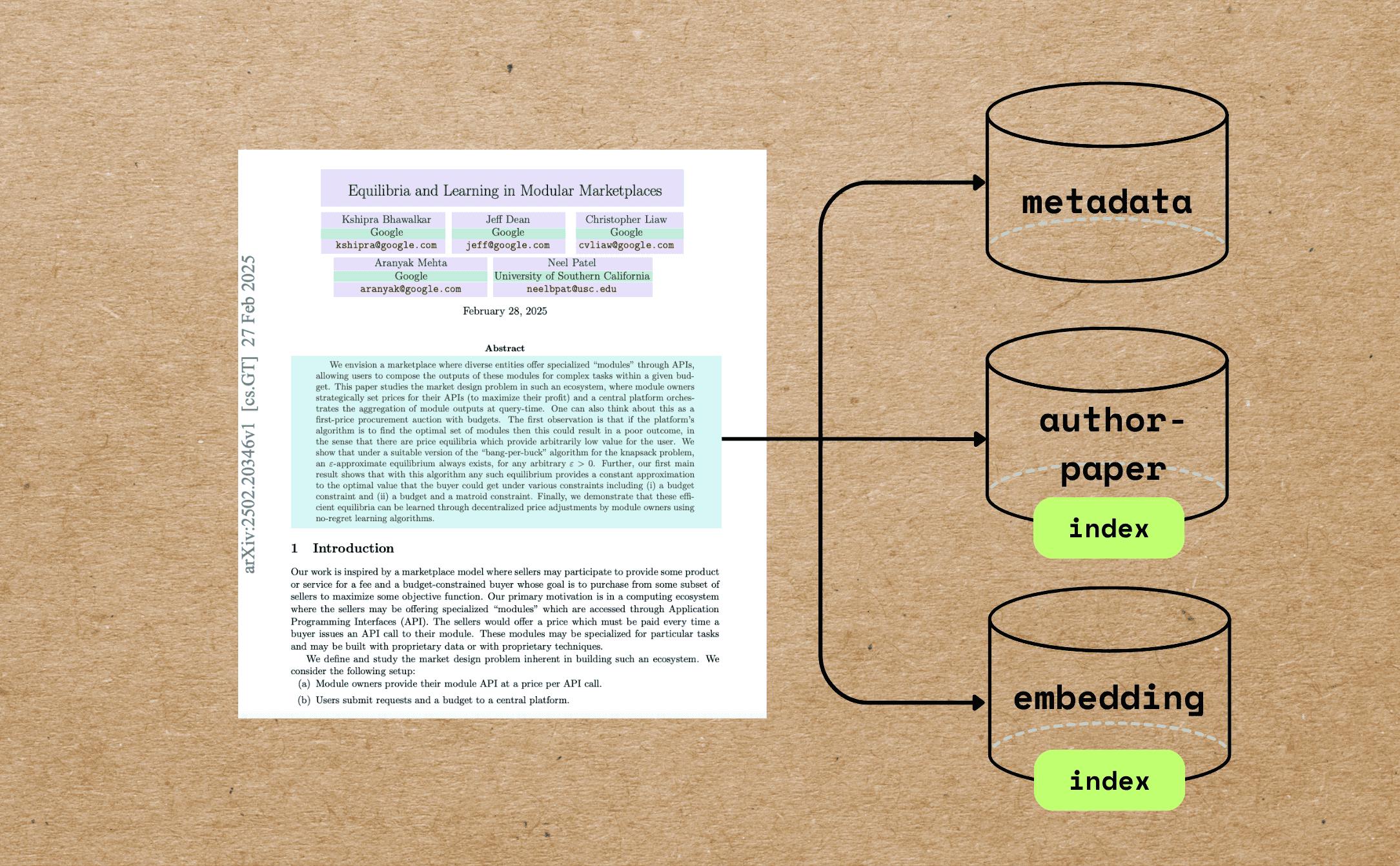

2 COCOGEN: Representing Commonsense structures with code and 2.1 Converting (T,G) into Python code

2.2 Few-shot prompting for generating G

3 Evaluation and 3.1 Experimental setup

3.2 Script generation: PROSCRIPT

3.3 Entity state tracking: PROPARA

3.4 Argument graph generation: EXPLAGRAPHS

4 Analysis

5 Related work

6 Conclusion, Acknowledgments, Limitations, and References

A Few-shot models size estimates

B Dynamic prompt Creation

C Human Evaluation

D Dataset statistics

E Sample outputs

F Prompts

G Designing Python class for a structured task

H Impact of Model size

I Variation in prompts

3.3 Entity state tracking: PROPARA

The text input T for entity state tracking is a sequence of natural-language actions about a particular topic (e.g., photosynthesis), together with a collection of entities (e.g., water). The goal is to predict the state of each entity after the execution of each action. We use the PROPARA dataset (Dalvi et al., 2018) as the test-bed for this task.

We construct the Python code Gc as follows; an example is shown in Figure 3. First, we define the main function and list all n actions as comments inside it. Second, we create k variables named state_i, one per participant, where k is the number of participants in the topic. The semantics of each variable are described in the comments as well. Finally, to represent the state change after each step, we define n functions, each corresponding to one action. We additionally define an init function to represent the initialization of entity states. Inside each function, the value of each variable gives the state of the corresponding entity after the execution of that action. Given a new test example where only the actions and the entities are given, we construct the input string up to the init function and append it to the few-shot prompt for prediction.
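The construction above can be sketched as a small string builder. This is a minimal illustration rather than the authors' implementation: the helper names `action_to_name` and `build_propara_code`, the zero-based `state_i` numbering, and the wording of the comments are all assumptions, and the exact layout in Figure 3 may differ.

```python
import re


def action_to_name(action: str) -> str:
    """Turn an action sentence into a Python-style function name (hypothetical helper)."""
    return re.sub(r"[^a-z0-9]+", "_", action.lower()).strip("_")


def build_propara_code(actions, entities, states=None):
    """Render a PROPARA example as Python source text.

    actions  -- list of n action sentences
    entities -- list of k participant names
    states   -- optional list of n + 1 rows (init plus one per action),
                each row holding k state values; None renders a test prompt
    """
    lines = ["def main():"]
    # List all n actions as comments inside main.
    for action in actions:
        lines.append(f"    # {action}")
    # Describe what each state variable tracks (assumed comment wording).
    for i, entity in enumerate(entities):
        lines.append(f"    # state_{i} tracks the state of {entity}")
    lines.append("    def init():")
    if states is None:
        # Test example: stop at the init function and let the model continue.
        return "\n".join(lines) + "\n"
    step_names = ["init"] + [action_to_name(a) for a in actions]
    for step, (name, row) in enumerate(zip(step_names, states)):
        if step > 0:
            lines.append(f"    def {name}():")
        for i, value in enumerate(row):
            lines.append(f"        state_{i} = {value!r}")
    return "\n".join(lines) + "\n"


actions = ["Roots absorb water", "Water flows to the leaf"]
entities = ["water"]
test_prompt = build_propara_code(actions, entities)
few_shot_example = build_propara_code(
    actions, entities, states=[["soil"], ["roots"], ["leaf"]]
)
print(few_shot_example)
```

In this sketch a few-shot example is rendered with all states filled in, while a test example is cut off right after `def init():`, so the model's completion supplies the state assignments.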

Metrics We follow Dalvi et al. (2018) and measure precision, recall and F1 score of the predicted entity states. We randomly sampled three examples from the training set as the few-shot prompt.
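As a rough illustration of how precision, recall, and F1 relate, the sketch below scores predictions as sets of (entity, step, state) tuples. This is a simplification under stated assumptions, not the official PROPARA evaluator of Dalvi et al. (2018); the tuple format and the function name `precision_recall_f1` are hypothetical.

```python
def precision_recall_f1(predicted: set, gold: set):
    """Set-based precision, recall, and F1 (simplified; not the official scorer)."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical (entity, step, state) tuples for illustration only.
predicted = {("water", 1, "roots"), ("water", 2, "stem")}
gold = {("water", 1, "roots"), ("water", 2, "leaf"), ("water", 3, "air")}
precision, recall, f1 = precision_recall_f1(predicted, gold)
```

A model that emits few but mostly correct predictions gets high precision and low recall, which is the pattern footnote 3 describes for CURIE.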

Results As shown in Table 3, COCOGEN achieves a significantly better F1 score than DAVINCI. Across the five prompts, COCOGEN achieves 5.0 higher F1 than DAVINCI on average. In addition, COCOGEN yields stronger performance than CURIE, achieving F1 of 63.0, which is 74% higher than CURIE (36.1).[3]

On the PROPARA leaderboard, COCOGEN would rank 6th.[4] However, all the methods ranked above COCOGEN require fine-tuning on the entire training corpus. In contrast, COCOGEN uses only 3 examples in the prompt and is within 10 F1 points of the current state-of-the-art (Ma et al., 2022). In the few-shot setting, COCOGEN is state-of-the-art on PROPARA.

3.4 Argument graph generation: EXPLAGRAPHS

Given a belief (e.g., factory farming should not be banned) and an argument (e.g., factory farming feeds millions), the goal of this task is to generate a graph that uses the argument to either support or counter the belief (Saha et al., 2021). The text input to the task is thus a tuple of (belief, argument, “supports”/“counters”), and the structured output is an explanation graph (Figure 4).

We use the EXPLAGRAPHS dataset for this task (Saha et al., 2021). Since we focus on generating the argument graph, we take the stance as given and use the stance predicted by a stance prediction model released by Saha et al. (2021).

To convert an EXPLAGRAPHS example to Python, the belief, argument, and stance are instantiated as string variables. Next, we define the graph structure by specifying the edges. Unlike PROSCRIPT, the edges in EXPLAGRAPHS are typed. Thus, each edge is added as an add_edge(source, edge_type, destination) function call. We also list the starting nodes in a list assigned to a begin variable (Figure 4). Given a test example, we construct the input up to the # Edges line and let the model complete the remainder.
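The conversion described above can be sketched as follows. This is an illustrative builder, not the authors' code: the function name `build_explagraph_code`, the flat layout of the generated text, and the choice to treat nodes with no incoming edge as the starting nodes are assumptions, and Figure 4 may arrange things differently. The edge types in the usage example are illustrative only.

```python
def build_explagraph_code(belief, argument, stance, edges=None):
    """Render an EXPLAGRAPHS example as Python source text.

    edges -- optional list of (source, edge_type, destination) triples;
             None renders a test prompt that stops at the '# Edges' line
    """
    lines = [
        f"belief = {belief!r}",
        f"argument = {argument!r}",
        f"stance = {stance!r}",
        "# Edges",
    ]
    if edges is None:
        # Test example: the model is expected to complete everything after this line.
        return "\n".join(lines) + "\n"
    # Assumption: starting nodes are the nodes that no edge points to.
    destinations = {dst for _, _, dst in edges}
    begin = []
    for src, _, _ in edges:
        if src not in destinations and src not in begin:
            begin.append(src)
    lines.append(f"begin = {begin!r}")
    # Edges are typed, so each one becomes a three-argument add_edge call.
    for src, edge_type, dst in edges:
        lines.append(f"add_edge({src!r}, {edge_type!r}, {dst!r})")
    return "\n".join(lines) + "\n"


belief = "factory farming should not be banned"
argument = "factory farming feeds millions"
test_prompt = build_explagraph_code(belief, argument, "supports")
few_shot_example = build_explagraph_code(
    belief,
    argument,
    "supports",
    edges=[
        ("factory farming", "causes", "food"),
        ("food", "has context", "millions"),
    ],
)
print(few_shot_example)
```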

Metrics We use the metrics defined by Saha et al. (2021) (see Section 6 of Saha et al. (2021) for a detailed description of the mechanisms used to calculate these metrics):

• Structural accuracy (StCA): the fraction of graphs that are connected DAGs with two concepts each from the belief and the argument.

• Semantic correctness (SeCA): a learned metric that evaluates if the correct stance is inferred from a (belief, graph) pair.

• G-BERTScore (G-BS): measures BERTScore-based (Zhang et al., 2020) overlap between generated and reference edges.

• Graph edit distance (GED): the average number of edits required to transform the generated graph into the reference graph.

• Edge importance accuracy (EA): measures the importance of each edge in predicting the target stance. A high EA implies that each edge in the generated output contains unique semantic information, and removing any edge will hurt.
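To make the structural part of these metrics concrete, the sketch below checks whether a set of typed edges forms a connected DAG and reports the fraction of graphs that pass. It is a partial illustration only: it omits the StCA condition on concepts drawn from the belief and the argument, it is not the evaluation code of Saha et al. (2021), and the names `is_connected_dag` and `connected_dag_fraction` are hypothetical.

```python
from collections import defaultdict, deque


def is_connected_dag(edges):
    """Return True if the (source, edge_type, destination) triples form a connected DAG."""
    nodes = {node for src, _, dst in edges for node in (src, dst)}
    if not nodes:
        return False
    successors = defaultdict(set)
    neighbors = defaultdict(set)
    in_degree = {node: 0 for node in nodes}
    for src, _, dst in edges:
        if dst not in successors[src]:
            successors[src].add(dst)
            in_degree[dst] += 1
        neighbors[src].add(dst)
        neighbors[dst].add(src)
    # Connectivity: breadth-first search that ignores edge direction.
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for other in neighbors[node]:
            if other not in seen:
                seen.add(other)
                queue.append(other)
    if seen != nodes:
        return False
    # Acyclicity: Kahn's algorithm must be able to remove every node.
    queue = deque(node for node in nodes if in_degree[node] == 0)
    removed = 0
    while queue:
        node = queue.popleft()
        removed += 1
        for nxt in successors[node]:
            in_degree[nxt] -= 1
            if in_degree[nxt] == 0:
                queue.append(nxt)
    return removed == len(nodes)


def connected_dag_fraction(graphs):
    """Fraction of graphs (each a list of edge triples) that are connected DAGs."""
    return sum(is_connected_dag(g) for g in graphs) / len(graphs) if graphs else 0.0


chain = [("a", "causes", "b"), ("b", "causes", "c")]
cycle = [("a", "causes", "b"), ("b", "causes", "a")]
split = [("a", "causes", "b"), ("c", "causes", "d")]
```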

Results Table 4 shows that COCOGEN with only 30 examples outperforms the T5 model that was fine-tuned on 1500 examples, across all metrics. Further, COCOGEN outperforms the NL-LLMs DAVINCI and CURIE with a text prompt across all metrics by about 50%-100%.

[3] CURIE often failed to produce output in the desired format, hence its high precision and low recall.

[4] As of 10/11/2022, https://leaderboard.allenai.org/propara/submissions/public

Authors:

(1) Aman Madaan, Language Technologies Institute, Carnegie Mellon University, USA;

(2) Shuyan Zhou, Language Technologies Institute, Carnegie Mellon University, USA;

(3) Uri Alon, Language Technologies Institute, Carnegie Mellon University, USA;

(4) Yiming Yang, Language Technologies Institute, Carnegie Mellon University, USA;

(5) Graham Neubig, Language Technologies Institute, Carnegie Mellon University, USA.