Table of Links

Abstract and 1 Introduction

2 Related Works

3 Method and 3.1 Proxy-Guided 3D Conditioning for Diffusion

3.2 Interactive Generation Workflow and 3.3 Volume Conditioned Reconstruction

4 Experiment and 4.1 Comparison on Proxy-based and Image-based 3D Generation

4.2 Comparison on Controllable 3D Object Generation, 4.3 Interactive Generation with Part Editing & 4.4 Ablation Studies

5 Conclusions, Acknowledgments, and References

SUPPLEMENTARY MATERIAL

A. Implementation Details

B. More Discussions

C. More Experiments

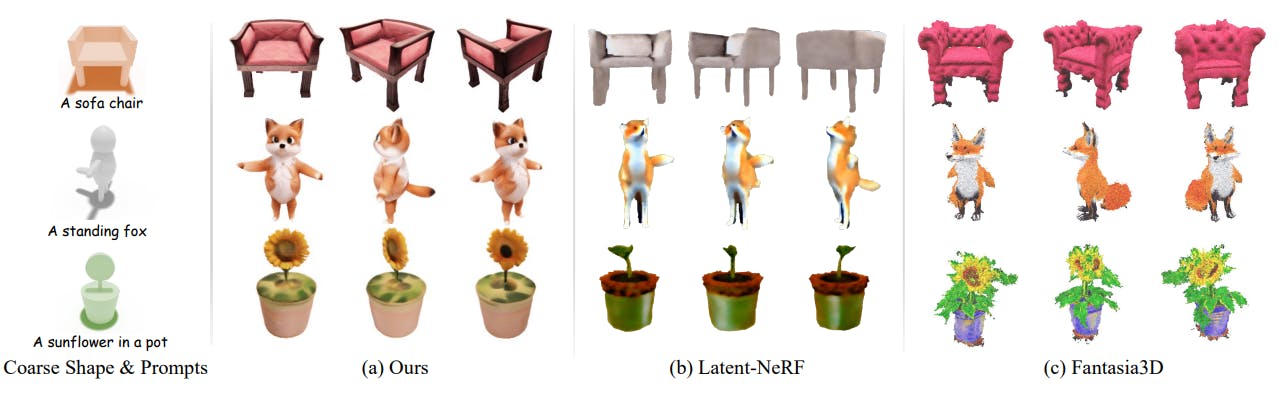

4.2 Comparison on Controllable 3D Object Generation

We compare our method with Controllable 3D object generation methods, including Latent-NeRF [Metzer et al. 2023] and Fantasia3D [Chen et al. 2023a]. Latent-NeRF introduces a sketch-shape guided loss, which constrains the density field close to the surface of the shape proxy, while Fantasia3D uses coarse shapes as the geometry initialization of DMTet [Shen et al. 2021]. In the experiment, we give all the methods the same coarse shape and the text prompts as a guidance and output the neural reconstructions’ rendered views for comparison (since Latent-NeRF does not officially provide mesh extraction). As shown in Fig. 4, Latent-NeRF generally obtains plausible results but fails to produce tiny shape and appearance details (e.g., blurry textured sofa, fox missing clear eyes and right arms and missing sunflower in Fig. 5 (b)), which indicates that directly applying 3D control to the 3D representation is sub-optimal since it might not work smoothly with the generic SDS loss. For Fantasia3D, since it only uses the 3D shape for initialization rather than supervision, it often generates overgrowth results that do not

follow the given shape (e.g., inflate sofa, the fox with incomplete downward arms, pot with many leaves and a broken sunflower in Fig. 5 (c)) and also slightly suffers from “multi-face Janus problem” (e.g., multi-face fox in Fig. 5 (c)). Since both Latent-NeRF and Fantasia3D use vanilla 2D diffusion model as prior while being agnostic to the multi-view correlations, their results are sensitive to the initialization and hyperparameter settings. In contrast, our method directly adds 3D-aware control to the diffusion process, which essentially controls the supervisory of reconstruction’s 2D diffusion prior and consistently achieves high fidelity generation following users’ shape guidance. It is also noteworthy that, both Latent-NeRF and Fantasia3D require a long period of reconstruction (e.g., dozens of minutes) to give an impression of what the object might look like, making it unusable for interactive modeling, while our framework bypasses the reconstruction stage and allows to preview the 3D object in only a few seconds.

4.3 Interactive Generation with Part Editing

We now present examples of interactive generation with progressive part editing. As shown in Fig. 6, users can first generate a basic instance (e.g., a base of cake, a teddy bear, or a penguin) with shape proxy, and then progressively add new shape blocks with changed text prompts (e.g., adding a small cake with candles, a green hat and red scarf, or even progressively add a torch and a red backpack from the back view), which seamlessly enrich the content of the instance while maintaining other parts unchanged. Notably, all these editing operations can be finished in roughly 5∼10 seconds, which then allows interactive previewing of the edited 3D results. Please refer to the supplementary video for the demonstration.

4.4 Ablation Studies

Volume-conditioned reconstruction. We then inspect the efficacy of the volume-SDS loss by ablating during the shape reconstruction. As shown in Fig. 7, by adding volume-SDS loss, we achieve better geometry reconstruction (e.g., less floater and more reasonable chair bottom) than naïve training on fixed multiview images [Liu et al. 2023a; Long et al. 2023].

Proxy-bounded part editing. We finally analyze the proxy-bounded part editing by ablating proxy conditioning and mask dilation strategy in Fig. 8. Specifically, we choose a multi-step editing example,

where the backpack should be edited from the back view. We merge the front and edited back image conditions using mixed denoising [Bar-Tal et al. 2023]. As shown in Fig. 8, the editing without the proxy condition would result in broken shapes (e.g., dangling flames and distorted body), while disabling mask dilation would also make the editing less natural (e.g., tightly fused red bag and broken hands). By equipping with the full strategies, we achieve a seamless editing effect while preserving other content unchanged.

More ablation studies can be found in the supplementary material.

Authors:

(1) Wenqi Dong, from Zhejiang University, and conducted this work during his internship at PICO, ByteDance;

(2) Bangbang Yang, from ByteDance contributed equally to this work together with Wenqi Dong;

(3) Lin Ma, ByteDance;

(4) Xiao Liu, ByteDance;

(5) Liyuan Cui, Zhejiang University;

(6) Hujun Bao, Zhejiang University;

(7) Yuewen Ma, ByteDance;

(8) Zhaopeng Cui, a Corresponding author from Zhejiang University.

![Figure 5: We compare the Controllable 3D generation with Latent-NeRF [Metzer et al. 2023] and Fantasia3D [Chen et al. 2023a].](https://hackernoon.imgix.net/images/fWZa4tUiBGemnqQfBGgCPf9594N2-74834ch.png?auto=format&fit=max&w=3840)