Authors:

(1) Wenqi Dong, from Zhejiang University, and conducted this work during his internship at PICO, ByteDance;

(2) Bangbang Yang, from ByteDance contributed equally to this work together with Wenqi Dong;

(3) Lin Ma, ByteDance;

(4) Xiao Liu, ByteDance;

(5) Liyuan Cui, Zhejiang University;

(6) Hujun Bao, Zhejiang University;

(7) Yuewen Ma, ByteDance;

(8) Zhaopeng Cui, a Corresponding author from Zhejiang University.

Table of Links

Abstract and 1 Introduction

2 Related Works

3 Method and 3.1 Proxy-Guided 3D Conditioning for Diffusion

3.2 Interactive Generation Workflow and 3.3 Volume Conditioned Reconstruction

4 Experiment and 4.1 Comparison on Proxy-based and Image-based 3D Generation

4.2 Comparison on Controllable 3D Object Generation, 4.3 Interactive Generation with Part Editing & 4.4 Ablation Studies

5 Conclusions, Acknowledgments, and References

SUPPLEMENTARY MATERIAL

A. Implementation Details

B. More Discussions

C. More Experiments

ABSTRACT

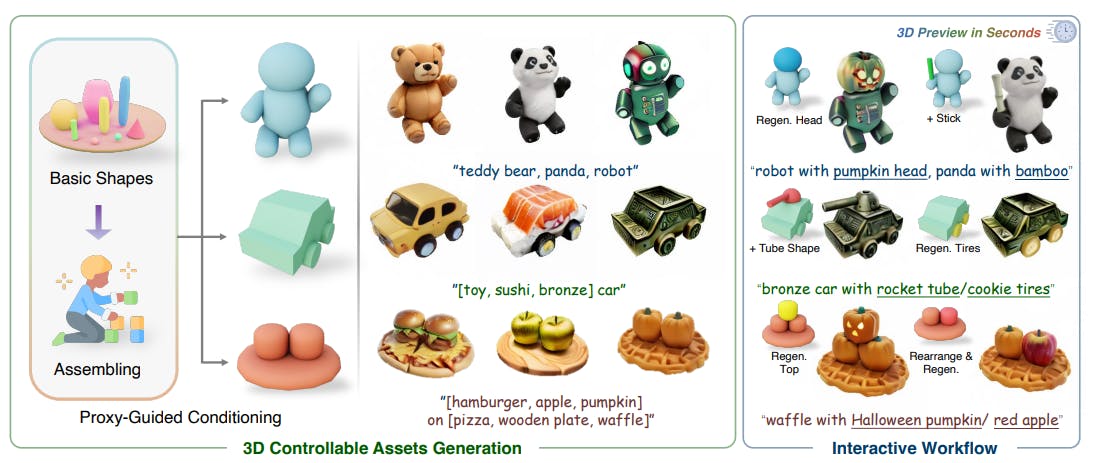

As humans, we aspire to create media content that is both freely willed and readily controlled. Thanks to the prominent development of generative techniques, we now can easily utilize 2D diffusion methods to synthesize images controlled by raw sketch or designated human poses, and even progressively edit/regenerate local regions with masked inpainting. However, similar workflows in 3D modeling tasks are still unavailable due to the lack of controllability and efficiency in 3D generation. In this paper, we present a novel controllable and interactive 3D assets modeling framework, named Coin3D. Coin3D allows users to control the 3D generation using a coarse geometry proxy assembled from basic shapes, and introduces an interactive generation workflow to support seamless local part editing while delivering responsive 3D object previewing within a few seconds. To this end, we develop several techniques, including the 3D adapter that applies volumetric coarse shape control to the diffusion model, proxy-bounded editing strategy for precise part editing, progressive volume cache to support responsive preview, and volume-SDS to ensure consistent mesh reconstruction. Extensive experiments of interactive generation and editing on diverse shape proxies demonstrate that our method achieves superior controllability and flexibility in the 3D assets generation task. Code and data are available on the project webpage: https://zju3dv.github.io/coin3d/.

1. INTRODUCTION

As a child, we are born with the instinct to create things with our imagination, i.e., building houses or vehicles using different Lego bricks, or doodling pictures with pencils [Nath and Szücs 2014]. Yet only a few people learn drawing or modeling skills, which eventually develop the ability to create qualified artworks. Fortunately, the rapid development of generative techniques grants everyone a chance to create fantasy content, i.e., using LLM for automatic manuscripting [Radford et al. 2018; Wei et al. 2022] or 2D diffusion methods for text-to-image/video generation [Guo et al. 2023; Lugmayr et al. 2022; Rombach et al. 2022]. To enable the controllability of the generative model, recent advances in 2D diffusion (such as ControlNet [Zhang et al. 2023], T2I-Adapter [Mou et al. 2023], SDEdit [Meng et al. 2021], and etc.) allow users to take depth, sketches or human poses to control the generation process, enables iteratively editing designated region with inpainting and re-generating mechanism. However, in the field of 3D asset generation [Poole et al. 2022], existing 3D generative methods still lack controls for artistic creation. First, they are usually conditioned with text prompts [Poole et al. 2022] or perspective images [Liu et al. 2023d,c,a; Long et al. 2023; Qian et al. 2023], which is not sufficient to express 3D objects accurately. Second, when performing high-level tasks such as generative editing [Cheng et al. 2023b; Haque et al. 2023; Kamata et al. 2023; Li et al. 2023a] or inpainting [Zhou et al. 2023], existing approaches usually require a significant amount of time for reconstruction before previewing the editing operation.

Given this observation, we believe that a controllable and userfriendly 3D assets generation framework should have these properties. (1) 3D-aware controllable: similar to a child stacking Lego bricks and picturing the vivid appearance in its mind, a controllable 3D generation can be started by assembling basic shapes (e.g., cuboids, spheres, or cylinders), which serves as coarse shape guidance for the detailed generation. Therefore, it reduces the difficulty of 3D modeling for common users and also provides sufficient control over the generation. (2) flexible: the framework should allow users to interactively composite or adjust local regions in a 3Daware manner, ideally as easy as image inpainting tools [Meng et al. 2021]. (3) responsive: once the user’s editing is temporarily finished, the framework should instantly deliver preview images of the generated object from the desired viewpoints, rather than waiting for a long reconstruction period. In this paper, we propose a novel COntrollable and INteractive 3D assets generation framework, named Coin3D. Instead of using text prompts or images as conditions, Coin3D allows users to add 3D-aware conditions into a typical multiview diffusion process in the 3D generation task, i.e., using a coarse 3D proxy assembled from basic shapes to guide the object generation, as illustrated in Fig. 1. Based on proxy-guided conditioning, Coin3D introduces a novel generative and interactive 3D modeling workflow. Specifically, users can depict the desired object by typing in text prompts and assembling basic shapes with their familiar modeling software (such as Tinkercad, Blender, and SketchUp). Then, Coin3D would construct the on-the-fly feature volume in a few seconds, which enables the preview of the result from arbitrary viewpoints or even progressively adjust/regenerate the designated local part of the object. For example, we can generate a bronze car by assembling basic shapes and incrementally adding tubes or changing tires as shown in Fig. 1. However, even though adding 3D-aware conditions is technically plausible, there are still some challenges to an interactive 3D modeling workflow, which will be addressed in this work:

Coarse Shape Guidance. Since we only use simple basic shapes (e.g., stacked spheres or cuboids) instead of intricate CAD models for 3D guidance, the proxy-guided conditioning should allow some freedom during the generation rather than being strict to the given basic shapes, e.g., growing animal ears from the sphere head as shown in Fig. 1. To achieve this goal, we design a novel 3D adapter to process the 3D control, where the 3D proxies (basic shapes) are first voxelized and extracted to 3D features, and then integrated into the spatial features of a multiview generation pipeline [Liu et al. 2023a]. In this way, users can manipulate the control strength by changing the plug-in weights, enabling controlling the generated object more or less close to the given proxy.

Interactive Modeling. An interactive and productive 3D generation workflow should support progressive modeling operations and responsive preview, i.e. seamlessly adding/adjusting shape primitives or precisely regenerating local parts without touching others, while all the operated results should be previewed as quickly as possible without time-consuming reconstruction. To fulfill the demands, we first develop a novel proxy-bounded editing strategy, which ensures precise bounded control and natural style blending when modifying part of the object, and then utilize a progressive volume caching mechanism by memorizing stepwise 3D features to enable responsive preview.

Consistent Reconstruction. To facilitate the standard CG workflow, one might need to export the generated assets into textured mesh with reconstruction. However, even with 3D-aware conditioning, there might still be poor reconstructions when naïvely reconstructing objects using synthesized multiview images due to the limited viewpoints (see Sec. 4.4). To tackle this issue, we propose to leverage the proxy-guided feature volume during reconstruction with a novel volume-SDS loss. This strategy effectively exploits the controlled 3D context during the score distillation sampling [Poole et al. 2022] and faithfully improves the reconstruction quality.

Our contributions can be summarized as follows. 1) We propose a novel controllable and interactive 3D assets generation framework, named Coin3D. Our method designs a 3D-aware adapter to take simple 3D shape proxies as guidance to control the object generation, which supports interactive generation operations such as altering prompts, adjusting shapes, or fine-grained local part regeneration. 2) To ensure an interactive and consistent experience of generative 3D modeling, we develop several techniques, including proxy-bounded editing for precise and seamless part editing, progressive volume cache to support responsive preview from arbitrary views, and a conditioned volume-SDS to improve the mesh reconstruction quality. 3) Extensive experiments of interactive generation with various shape proxies and the interactive workflow deployed on the 3D modeling software (e.g., Blender) demonstrate the controllability and productivity of our method on generative 3D modeling.