Table of Links

Abstract and 1 Introduction

2 Related Works

3 Method and 3.1 Proxy-Guided 3D Conditioning for Diffusion

3.2 Interactive Generation Workflow and 3.3 Volume Conditioned Reconstruction

4 Experiment and 4.1 Comparison on Proxy-based and Image-based 3D Generation

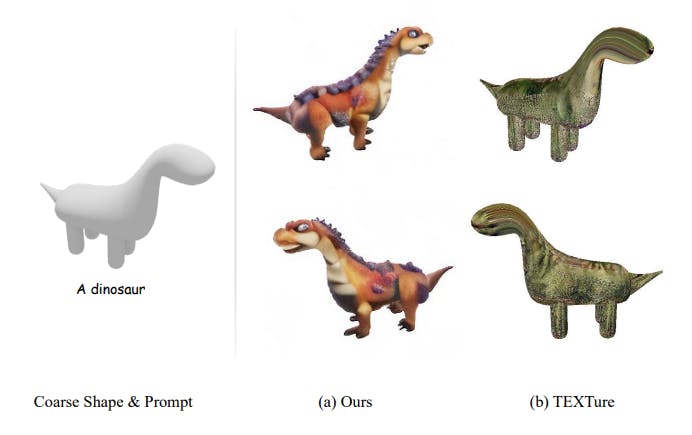

4.2 Comparison on Controllable 3D Object Generation, 4.3 Interactive Generation with Part Editing & 4.4 Ablation Studies

5 Conclusions, Acknowledgments, and References

SUPPLEMENTARY MATERIAL

A. Implementation Details

B. More Discussions

C. More Experiments

Supplementary Material

In this supplementary material, we describe further details of our method in Sec. A, provide more discussions in Sec. B, and present additional experiments in Sec. C. More qualitative results can be found in our supplementary video, and the source code will be released upon acceptance of this paper.

A IMPLEMENTATION DETAILS

A.1 Dataset Preparation

In our experiments, we train the model on the LVIS subset of Objaverse [Deitke et al. 2023], which contains 28,000+ objects after a heuristic cleanup process following [Long et al. 2023]. To render training views, we place 16 cameras at a -30° pitch, evenly spaced in azimuth over 360° and all facing the object.
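The camera layout described above can be sketched as follows. This is a minimal illustration, not the authors' rendering code: it assumes the object is centered at the origin, that +z is the up axis, and that a -30° pitch means each camera looks 30° downward (i.e., it sits 30° above the horizontal plane). The function name `orbit_cameras` and the `radius` value are hypothetical.

```python
import math


def orbit_cameras(num_views=16, pitch_deg=-30.0, radius=2.0):
    """Return (position, forward) pairs for cameras orbiting the origin.

    Hypothetical sketch: the origin-centered object, the +z up axis, and the
    camera distance `radius` are assumptions, not values from the paper.
    A negative pitch tilts the camera downward, so the camera is placed
    at an elevation of -pitch_deg above the horizontal plane.
    """
    elevation = math.radians(-pitch_deg)  # camera height angle above the ground plane
    cams = []
    for i in range(num_views):
        azimuth = 2.0 * math.pi * i / num_views  # evenly spaced over 360 degrees
        pos = (
            radius * math.cos(elevation) * math.cos(azimuth),
            radius * math.cos(elevation) * math.sin(azimuth),
            radius * math.sin(elevation),
        )
        # Unit vector pointing from the camera toward the object center (origin).
        forward = tuple(-c / radius for c in pos)
        cams.append((pos, forward))
    return cams
```

With the defaults, consecutive cameras are 22.5° apart in azimuth, and every viewing direction has a pitch of -30°; a renderer would turn each (position, forward) pair into a look-at extrinsic matrix.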

A.2 Evaluation Data Preparation and User Study

A.3 Training and Network Details

Authors:

(1) Wenqi Dong, Zhejiang University; this work was conducted during his internship at PICO, ByteDance;

(2) Bangbang Yang, ByteDance, contributed equally to this work with Wenqi Dong;

(3) Lin Ma, ByteDance;

(4) Xiao Liu, ByteDance;

(5) Liyuan Cui, Zhejiang University;

(6) Hujun Bao, Zhejiang University;

(7) Yuewen Ma, ByteDance;

(8) Zhaopeng Cui, Zhejiang University (corresponding author).

![Figure I: We compare our method with the texture synthesis method TEXTure [Richardson et al. 2023].](https://hackernoon.imgix.net/images/fWZa4tUiBGemnqQfBGgCPf9594N2-w3a348s.png?auto=format&fit=max&w=1920)

![Figure J: We compare our proxy-bounded part editing with Fantasia3D [Chen et al. 2023a] fine-tuning.](https://hackernoon.imgix.net/images/fWZa4tUiBGemnqQfBGgCPf9594N2-d8b34mf.png?auto=format&fit=max&w=1920)