Ruin is the destination toward which all men rush, each pursuing his own best interest in a society that believes in the freedom of the commons. – Garrett Hardin

Some time ago, I worked at a company that self-hosted almost everything. Whether that was a good decision deserves a separate discussion.

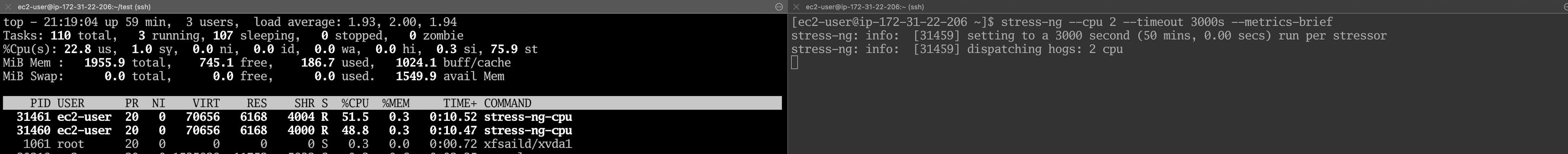

On Boxing Day morning, an incident happened. We had a huge backlog of messages in the RabbitMQ queue, and the cluster had lost quorum. Metrics looked right, yet nodes were failing heartbeats. When I connected to the machine to diagnose, one glance at top told the real story: %st was consistently high, hovering between 70% and 80%. We were experiencing the noisy neighbor effect.

What Contention Really Is

Inside a Linux VM, the CFS scheduler sees only virtual CPUs. The hypervisor, however, is juggling those vCPUs onto a finite set of cores. When everyone wants to run at once, somebody waits. In the VM, that wait time surfaces as steal (%st).

Simulation

Let’s simulate a CPU contention scenario: spin up a t3.small (2 vCPU, burstable) instance on AWS and run stress-ng on both vCPUs.

stress-ng --cpu 2 --timeout 3000s --metrics-brief

Burstable instances are designed to have a baseline CPU level but can burst higher using CPU credits. It is important to run stress-ng long enough to expend all CPU credits before continuing.

Then create and run a simple web app that responds with a sizeable payload.

package main

import (
	"fmt"
	"net/http"
	"strings"
)

// handler builds the payload on every request so each call costs some CPU.
func handler(w http.ResponseWriter, r *http.Request) {
	response := strings.Repeat("Hello, world! ", 8000) // roughly 110 KB (14 bytes x 8000)
	fmt.Fprintln(w, response)
}

func main() {
	http.HandleFunc("/", handler)
	fmt.Println("Starting server on :8080...")
	// ListenAndServe blocks until the server fails.
	if err := http.ListenAndServe(":8080", nil); err != nil {
		panic(err)
	}
}

Generate load and record latency.

hey -n 10000 -c 200 http://$PUBLIC_IP:8080/

Detecting Contention

The key is to monitor how long a runnable thread waits for a physical core it cannot see. In practice, you assemble three layers of evidence: VM-level counters, hypervisor or cloud telemetry, and workload symptoms, then correlate them.

VM-level Counters

Inside any modern Linux AMI, you have two first-class indicators:

- %st (steal time) in mpstat -P ALL 1 or top. It is the percentage of a one-second interval during which a runnable vCPU was involuntarily descheduled by the hypervisor. Sustained values above 5% on Nitro hardware or above 2% on burstable T-classes indicate measurable contention.

- schedstat wait time, captured with perf sched record and displayed with perf sched timehist. This traces every context switch, capturing at microsecond resolution the delay the task spent queued while ready. A workload that normally shows sub-millisecond delay will display tens of milliseconds when the host is oversubscribed.

Example 30-second snapshot:

# per-CPU steal
mpstat -P ALL 1 30 | awk '/^Average:/ && $2 ~ /^[0-9]+$/ { printf "cpu%-2s %.1f%% steal\n", $2, $9 }'

# scheduler wait histogram
sudo perf sched record -a -- sleep 30
sudo perf sched timehist --summary --state

Look for steal spikes aligning with a right shift of the delay distribution.

Hypervisor and Cloud Telemetry

AWS publishes two derived metrics that map directly to CPU contention:

- CPUCreditBalance (burstable instances). When the balance reaches zero on an instance in standard mode, the hypervisor throttles its vCPUs to the baseline percentage, producing an immediate surge in steal. In unlimited mode the instance keeps bursting and spends surplus credits instead, which CPUSurplusCreditBalance tracks.

- CPUCreditUsage with a flat line on CPUUtilization. If credit usage pins yet VM-reported utilization does not, you are hitting the scheduler ceiling rather than running out of work.

On compute-optimized families, enable the detailed monitoring option and ingest %steal with the InstanceId dimension via the CloudWatch Agent.

Correlating With Workload Symptoms

Latency-sensitive services often expose a sharp inflection: average latency holds steady while p95 and p99 diverge. Overlay those percentiles with %steal and ready-time to confirm causality. During true contention, throughput typically plateaus rather than collapses, because each request still completes but waits longer for compute slices. If throughput also collapses, the bottleneck may be elsewhere (I/O or memory).

Advanced Tracing

For periodic incidents that last seconds, attach an eBPF probe:

sudo timeout 60 /usr/share/bpftrace/tools/runqlen.bt

A widening histogram of run-queue length across CPUs at the moment service latency spikes is strong evidence of CPU oversubscription, especially when %st rises at the same time.

Alert Thresholds

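Empirically, alert when sustained steal exceeds the thresholds above and user-visible latency (p95 or p99) degrades over the same window.

Use the AND condition to avoid false positives; steal without user-visible latency can be tolerable for batch nodes.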
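The AND rule can be sketched as a small predicate over monitoring samples. This is an illustration, not a drop-in alerting rule: the Sample type, the shouldAlert helper, and the numbers in main (5% steal, 200 ms p99, three consecutive intervals) are all assumptions to tune for your own fleet.

```go
package main

import "fmt"

// Sample is one monitoring interval. The field names are illustrative.
type Sample struct {
	StealPct float64 // steal time in percent
	P99Ms    float64 // p99 request latency in milliseconds
}

// shouldAlert reports whether steal AND p99 latency both exceeded their
// thresholds for at least minConsecutive samples in a row.
func shouldAlert(samples []Sample, stealThreshold, p99Threshold float64, minConsecutive int) bool {
	run := 0
	for _, s := range samples {
		if s.StealPct > stealThreshold && s.P99Ms > p99Threshold {
			run++
			if run >= minConsecutive {
				return true
			}
		} else {
			run = 0 // a healthy interval resets the streak
		}
	}
	return false
}

func main() {
	// A batch node: heavy steal, but nobody is waiting on latency.
	batch := []Sample{{12, 80}, {15, 90}, {14, 85}}
	// A web node: steal and p99 degrade together.
	web := []Sample{{8, 450}, {9, 520}, {11, 610}}

	fmt.Println("batch alert:", shouldAlert(batch, 5, 200, 3))
	fmt.Println("web alert:", shouldAlert(web, 5, 200, 3))
}
```

Requiring consecutive samples keeps a single noisy interval from paging anyone, at the cost of reacting a little later.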

Mitigation

The first decision is the instance family. Compute-optimized or memory-optimized generations reserve a higher scheduler weight per vCPU than burstable classes.

Where predictable latency is a requirement, avoid oversubscribed families entirely or use a Dedicated Instance to remove the multi-tenant variability.

How instances are placed matters too. Spread placement groups reduce the probability of two heavy tenants landing on the same host; cluster groups improve east-west bandwidth but can increase the risk of sharing a box with a CPU-intensive neighbor. For latency-sensitive fleets, the spread policy is usually safer.

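Build an automated response for outliers. A lightweight Lambda can poll CloudWatch for elevated %steal and recycle the instance with a stop and start. A plain reboot keeps the instance on the same host, so it does not escape a noisy neighbor, whereas stopping and starting an EBS-backed instance usually moves it to a different host. Because contention tends to be host-local, recycling often resolves the incident within minutes.

if metric("CPUStealPercent") > 10 and age(instance) > 5min:
    cordon_from_alb(instance)   # drain traffic first
    instance.stop()             # stop, then start, to land on a new host
    instance.start()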

Inside managed Kubernetes, the same ideas apply. Allocate critical pods with identical requests and limits so the scheduler assigns them the Guaranteed quality-of-service class, pin them with a topology spread to avoid stacking noisy neighbors, and constrain opportunistic batch jobs with CPU quotas to prevent self-inflicted contention.
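The request and limit rule is easy to get subtly wrong, so here is a simplified sketch of how the quality-of-service class follows from them. It handles a single container and CPU and memory only, as documented for Kubernetes. It is not the kubelet's implementation, and the Resources type and qosClass helper are hypothetical.

```go
package main

import "fmt"

// Resources holds CPU in millicores and memory in MiB; zero means "not set".
type Resources struct {
	CPUMillis int64
	MemoryMiB int64
}

// qosClass classifies a single-container pod from its requests and limits.
func qosClass(requests, limits Resources) string {
	// Kubernetes defaults a missing request to the limit when a limit is set.
	if requests.CPUMillis == 0 {
		requests.CPUMillis = limits.CPUMillis
	}
	if requests.MemoryMiB == 0 {
		requests.MemoryMiB = limits.MemoryMiB
	}
	switch {
	case requests == (Resources{}):
		// Nothing requested and, given the defaulting above, no limits either.
		return "BestEffort"
	case limits.CPUMillis > 0 && limits.MemoryMiB > 0 && requests == limits:
		return "Guaranteed"
	default:
		return "Burstable"
	}
}

func main() {
	critical := Resources{CPUMillis: 500, MemoryMiB: 256}
	fmt.Println(qosClass(critical, critical))                                    // requests equal limits
	fmt.Println(qosClass(Resources{CPUMillis: 250, MemoryMiB: 256}, critical))   // CPU request below limit
	fmt.Println(qosClass(Resources{}, Resources{}))                              // nothing set
}
```

Only the first pod is classed as Guaranteed. Lowering the CPU request below the limit, a common way to pack nodes tighter, is enough to drop a pod to Burstable.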

Where policy controls are insufficient, vertical headroom is the remaining lever. Over-provision vCPUs slightly for latency-critical services; the additional spend can be lower than the opportunity cost of degraded latency.

If convenient, go bare-metal.

Conclusion

CPU contention remains one of the most common and least visible performance risks in multi-tenant cloud computing. Because the hypervisor arbitrates access to physical cores, every guest sees only a projection of reality; by the time application latency shifts, the root cause is already upstream in the scheduler. To recap:

- Instrument first-class contention metrics (steal time and scheduler wait) alongside service-level indicators.

- Select isolation levels that match the SLA, from compute-optimized families to dedicated tenancy, and revisit those choices as traffic patterns evolve.

- Automate remediation, whether recycling instances, rebalancing pods, or scaling capacity, so that corrective action completes faster than customers can perceive degradation.

When these practices are built into the platform, CPU contention becomes another monitored variable rather than an operational surprise. The result is predictable latency, fewer escalations, and infrastructure that degrades gracefully under load instead of failing unpredictably because of a noisy neighbor. The remaining unpredictability becomes a business decision: pay for stronger isolation, or design software that tolerates residual variance.