Decathlon, one of the world’s leading sports retailers, recently shared why it adopted the open source library Polars to optimize its data pipelines. The Decathlon Digital team found that migrating from Apache Spark to Polars for small input datasets delivers significant speed gains and cost savings.

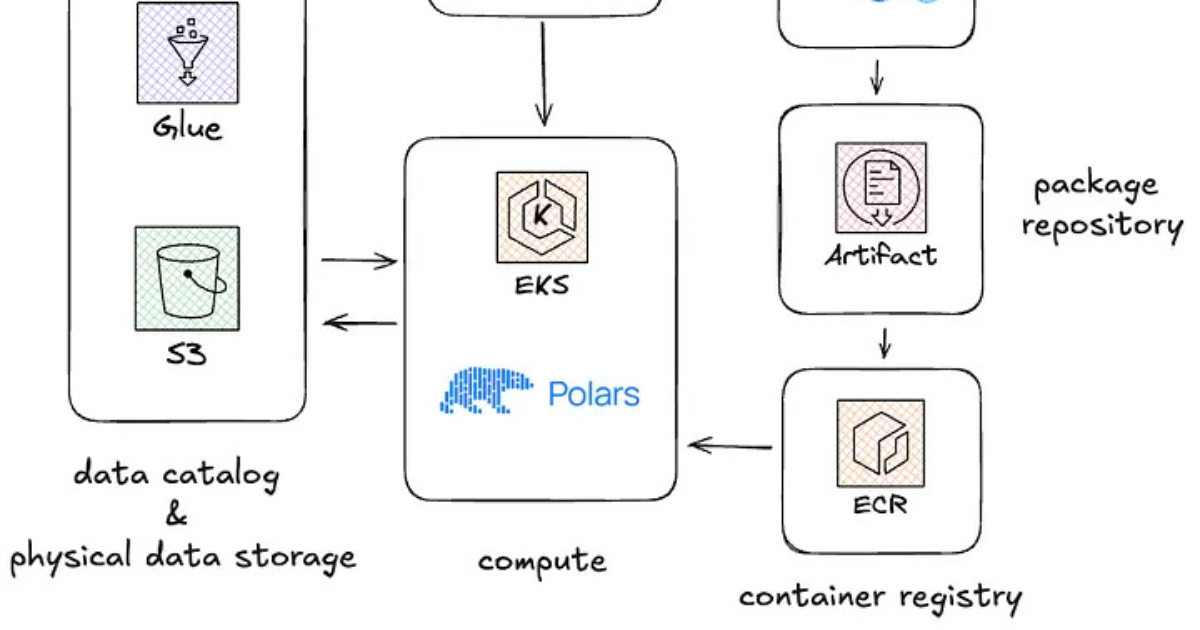

Decathlon’s data platform runs PySpark workflows on cloud clusters, each with approximately 180 GiB of RAM and 24 cores across six workers. Data is stored as Delta tables in an AWS S3 data lake, with AWS Glue serving as the technical metastore.

While the solution was optimized for large data jobs, it was considered suboptimal for much smaller datasets (gigabytes or megabytes). Arnaud Vennin, Tech Lead Data Engineer at Decathlon, writes:

> For data engineers in the data department, the primary tool is Apache Spark, which excels at processing terabytes of data. However, it turns out that not every workflow has terabytes of data as input; some involve gigabytes or even megabytes.

The platform uses a Medallion-style architecture (Bronze, Silver, Gold, Insight) to refine and organize data for quality and governance. Workflows are orchestrated with MWAA, a managed Apache Airflow service on AWS, and CI/CD is automated through GitHub Actions for testing and deploying code.

The data team began experimenting with Polars for lighter or mid-size workloads, initially as a replacement for existing tools like pandas that were experiencing scaling issues. Polars is an open source library for data manipulation built around an OLAP query engine implemented in Rust, using the Apache Arrow columnar format as its memory model.

Decathlon data platform architecture running Polars. Source: Decathlon Digital Blog

As Polars’ syntax is similar to Spark’s, the team decided to migrate a Spark job to Polars, starting with a Parquet table of approximately 50 GiB, equivalent to at least a 100 GiB CSV table. Moving from a Spark cloud-hosted cluster to a single-node Kubernetes pod reduced the compute launch time from 8 to 2 minutes.

The results were even more promising after enabling Polars’ new streaming engine, which allows processing datasets larger than available memory. On a single Kubernetes pod, Decathlon reports that jobs that once required large clusters now run efficiently with modest CPU and memory, often completing before a full Spark cluster could even be cold-started.

Source: Decathlon blog

Eric Cheminot, principal architect at Schneider Electric, comments:

> It’s more of an indication that deploying those jobs on Spark clusters was not a good choice. But… we see this so often that it’s representative!

Based on the experiments, the team decided to adopt Polars for all new pipelines whose input tables are smaller than 50 GiB, have a stable size over time, and do not involve multiple joins, dozens of aggregations, or exotic functions. Vennin shares some warnings, too:

> Running Polars on Kubernetes presents challenges. It adds a new tool to the stack, so teams need to learn how to run the container service. It may also slow down data pipeline hopping between teams. Additionally, Kubernetes requires to be managed by Data Ops and carries specific security policies. These considerations affect how Polars is rolled out within Decathlon.

Michel Hua agrees:

> Polars has Spark-compatible syntax, which facilitates code migration. The pain comes mostly from managing Resilient Distributed Dataset and clusters.
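The adoption criteria the team settled on (inputs under 50 GiB, stable size, no multiple joins, dozens of aggregations, or exotic functions) can be read as a simple decision rule. The sketch below is purely illustrative and not taken from Decathlon: `PipelineProfile`, `choose_engine`, and the numeric limits for joins and aggregations are assumptions, since the article only gives the 50 GiB threshold.

```python
from dataclasses import dataclass

GIB = 1024 ** 3
MAX_INPUT_BYTES = 50 * GIB  # size threshold quoted in the article


@dataclass(frozen=True)
class PipelineProfile:
    """Hypothetical description of a new pipeline (illustrative fields)."""
    input_bytes: int             # total size of the input tables
    size_is_stable: bool         # input size stays roughly constant over time
    join_count: int              # number of joins in the job
    aggregation_count: int       # number of aggregations in the job
    uses_exotic_functions: bool  # relies on unusual, engine-specific functions


def choose_engine(
    profile: PipelineProfile,
    max_joins: int = 1,          # assumption: "multiple joins" means more than one
    max_aggregations: int = 20,  # assumption: stand-in for "dozens of aggregations"
) -> str:
    """Return "polars" when every criterion is met, otherwise "spark"."""
    fits_polars = (
        profile.input_bytes < MAX_INPUT_BYTES
        and profile.size_is_stable
        and profile.join_count <= max_joins
        and profile.aggregation_count <= max_aggregations
        and not profile.uses_exotic_functions
    )
    return "polars" if fits_polars else "spark"
```

For example, a stable 10 GiB input with a single join would be routed to Polars under these assumptions, while an 80 GiB input would stay on Spark.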

In response to practitioners asking why Decathlon did not extend the solution to more jobs, Vennin points to additional constraints: in some cases Polars cannot read the data, for example datasets written with the Liquid Clustering or Column Mapping features.