If you follow the discourse surrounding AI security today, you’d be forgiven for thinking we’re standing on the edge of an existential cliff. The headlines and security warnings are relentless, painting a picture of an immediate and unprecedented threat landscape.

Every week brings a new, dire headline that seems to amplify the sense of impending crisis:

- “AI agents can be tricked into attacking systems”

This often refers to research demonstrating how autonomous AI systems, designed to perform helpful tasks, can be manipulated via subtle inputs known as adversarial examples or jailbreaking techniques to perform malicious actions, bypass safety guardrails, or even launch sophisticated attacks against underlying infrastructure. The core worry here is the loss of control over powerful, autonomous agents.

- “LLMs enable novel cyberattacks”

This covers the dual threat posed by Large Language Models (LLMs). The first concern is that LLMs can lower the bar for entry into cybercrime by helping novices draft highly convincing phishing emails, generate exploit code, or perform reconnaissance. The second is that attackers can use LLMs as a component in their attacks, leveraging them to automate complex, adaptive social engineering campaigns or to dynamically discover and craft zero-day payloads.

- “Prompt injection is the new SQL injection”

This analogy highlights the new class of vulnerability specific to AI systems. Just as SQL injection exploits a failure to sanitize user input destined for a database, prompt injection exploits a failure to properly isolate a user’s instructions (the ‘prompt’) from the model’s internal operating instructions. This allows an attacker to seize control of the model’s behavior, leading to data exfiltration, unauthorized actions, or policy violation.

The cumulative implication of this constant stream of negative news and fear-driven analysis is often the same: AI itself is the problem. The narrative tends to focus on the inherent instability, unpredictability, and risk associated with the core technology, leading to the belief that the technology must be contained, strictly regulated, or fundamentally redesigned to be safe. It frames AI as an inherently insecure and destabilizing force.

As someone who has spent years analyzing real-world attacker behavior, I have always found this framing incomplete. Most claims rely on hypotheticals, toy examples, or intentionally unsafe demonstrations. Very few ask a simpler question: What attack surface is actually exposed by today’s real AI integrations? So, instead of adding another opinion, I decided to measure it.

Why MCP Is a Useful Reality Check

The rise of sophisticated language models has necessitated the development of robust and standardized mechanisms for interaction with external resources. To this end, the Model Context Protocol (MCP) has been introduced, establishing a crucial, standardized framework that enables language models to securely and predictably invoke external tools and services.

This protocol acts as a secure intermediary, allowing the language model to extend its capabilities beyond its internal knowledge and computational boundaries. In essence, the MCP is vital for transforming language models from sophisticated text processors into capable and interactive agents that can perform real-world tasks by securely and contextually integrating with the broader digital ecosystem. MCP is often cited as evidence that “AI systems are dangerous,” yet it is also concrete, open source, widely replicated, and measurable. That makes it an ideal test case for separating facts from fear.

Research Methodology

This analysis deliberately avoided speculative or adversarial setups. The study’s design employed a conservative methodology involving five key steps:

- Repository Identification: MCP server repositories were located by analyzing SDK import patterns found on GitHub.

- Execution Verification: Repositories that failed to start successfully were excluded from the analysis.

- Tool Analysis: Successfully running servers had their exposed tools and schemas extracted. These were then scanned using both open-source and proprietary in-house tools.

- Capability Mapping: Tools were classified based on their potential actions (i.e., what they can do), independent of whether they contained known vulnerabilities.

- Server Ranking: Servers were ultimately aggregated and ranked according to the density and type of exposed capabilities they contained.

Only MCP servers that actually ran and exposed tools were included, as an attack surface that doesn’t execute is not an attack surface.
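The capability-mapping and ranking steps can be illustrated with a minimal sketch. The study's actual classifier and scoring are not described here, so the keyword sets, the weights, and the function names `classify_tool` and `rank_servers` below are all hypothetical; the only assumption about the data is that each extracted tool has a `name` and a `description`.

```python
import re

# Hypothetical keyword heuristics; the study's real classifier is not described.
CAPABILITY_KEYWORDS = {
    "filesystem": {"file", "files", "directory", "folder"},
    "http": {"http", "https", "url", "fetch", "scrape"},
    "database": {"sql", "database", "table"},
    "process_execution": {"exec", "shell", "command", "subprocess"},
    "orchestration": {"agent", "plan", "workflow", "chain"},
    "search": {"search", "lookup"},
}

# Hypothetical weights: higher means a more powerful primitive.
WEIGHTS = {
    "process_execution": 5,
    "orchestration": 4,
    "filesystem": 3,
    "http": 3,
    "database": 3,
    "search": 1,
}


def classify_tool(tool: dict) -> set[str]:
    """Map one tool to capability classes based on what it says it can do."""
    text = f"{tool.get('name', '')} {tool.get('description', '')}".lower()
    tokens = set(re.split(r"[^a-z0-9]+", text))
    return {cap for cap, words in CAPABILITY_KEYWORDS.items() if tokens & words}


def rank_servers(servers: dict[str, list[dict]]) -> list[tuple[str, int, list[str]]]:
    """Aggregate capabilities per server and sort by total weight, highest first."""
    ranked = []
    for name, tools in servers.items():
        caps: set[str] = set()
        for tool in tools:
            caps |= classify_tool(tool)
        ranked.append((name, sum(WEIGHTS[c] for c in caps), sorted(caps)))
    return sorted(ranked, key=lambda row: row[1], reverse=True)


servers = {
    "notes-server": [
        {"name": "read_file", "description": "Read a file from the notes directory"},
    ],
    "ops-server": [
        {"name": "run_command", "description": "Execute a shell command"},
        {"name": "fetch_url", "description": "Fetch a remote URL over HTTP"},
    ],
}
ranking = rank_servers(servers)
```

Note that the classification looks only at what a tool can do, not at whether it contains a known vulnerability, which mirrors the capability-mapping step above.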

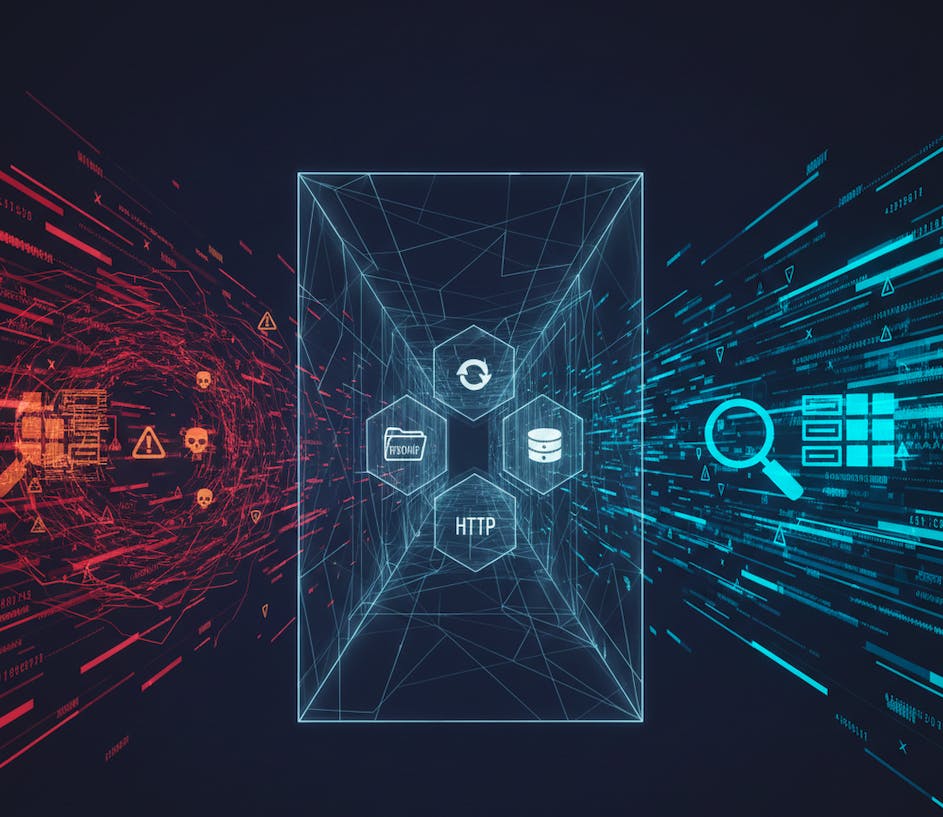

What MCP Servers Actually Expose

Once runnable servers were isolated, a clear pattern emerged: MCP servers expose familiar security primitives, not exotic AI-only capabilities.

TABLE 1 — Observed MCP Capability Classes:

| Capability Class | Description |
|----|----|
| Filesystem access | Read/write operations on files or documents |
| HTTP requests | Fetching or scraping remote URLs |
| Database queries | Structured data retrieval |
| Process execution | Local command or script execution |
| Orchestration | Tool chaining, planning, or agent control |
| Read-only search | Constrained data or API lookups |

The key point here is that these primitives already exist throughout modern software systems. MCP simply exposes them in a standardized way.

Capability Distribution Across Real MCP Servers

Despite widespread claims that AI tooling routinely enables dangerous behavior, the observed distribution was far more restrained.

TABLE 2 — Capability Frequency (Runnable MCP Servers):

| Capability | Relative Frequency |
|----|----|
| Filesystem access | Medium |
| HTTP requests | Medium |
| Database queries | Low |
| Process execution | Rare |
| Orchestration | Occasional |

Observation: The initial findings suggest a significant divergence from the prevailing, often alarmist, narratives concerning the inherent insecurity of advanced AI systems. Specifically, arbitrary command execution, a capability that could lead to catastrophic system compromise or data breach, was the least common high-impact capability among the runnable servers analyzed.

This counter-intuitive result directly challenges the widespread assertion that current AI models are fundamentally “reckless” or prone to easily exploitable, high-severity flaws that grant attackers deep control over the underlying infrastructure. While other vulnerabilities undoubtedly exist, the low frequency of command execution flaws implies that the robust input sanitization, sandboxing, and architectural defenses in place may be more effective than commonly acknowledged. It urges a more nuanced discussion in the cybersecurity community, shifting the focus from generalized panic to a targeted understanding of the actual, statistically significant risks posed by modern AI deployments.

The data suggests that security efforts might be more effectively spent addressing common, lower-impact vulnerabilities that occur with higher frequency, rather than focusing predominantly on the rarest, albeit most sensational, potential exploit.

The Real Risk: Capability Composition

Individually, many servers appear harmless. A single, narrowly scoped MCP server might perform a necessary function, such as managing a simple configuration change or performing a basic logging operation, and its security risk is often minimal when viewed in isolation. However, the potential for exploitation escalates dramatically when multiple primitives coexist within the same server or operational environment, especially when orchestration is present.

This compounding risk is rooted in the combinatorial possibilities that arise when distinct primitives can be chained or leveraged in sequence. For example, one MCP server might be capable of reading sensitive configuration data, while another might be capable of altering firewall rules, and a third might be able to restart a critical service. Individually, these actions are limited. Together, they form a potent attack vector, enabling an attacker to escalate privileges, achieve persistence, and exfiltrate data.

The true inflection point for this risk is the introduction of orchestration. Orchestration, whether provided by automated security tools, container platforms (like Kubernetes), cloud-native frameworks, or sophisticated scripting, is designed to efficiently coordinate and manage these distributed capabilities. While intended for benign purposes, such as scaling, self-healing, and automated deployment, it simultaneously provides the ideal mechanism for an adversary.

An attacker who gains control over the orchestration layer can effectively direct a symphony of malicious actions, turning a collection of harmless primitives into a powerful, automated weapon system. This transforms a localized threat into a systemic one, where the environment’s own automation is used against it to achieve complex, multi-stage attacks at speed and scale.

TABLE 3 — Example Composition Patterns:

| Composition | Security Implication |
|----|----|
| HTTP fetch + filesystem write | Content injection / persistence |
| Database query + orchestration | Data exfiltration pipelines |
| HTTP fetch + planning | Prompt-injection amplification |
| Filesystem write + orchestration | Supply-chain style poisoning |

MCP isn’t inventing these chains. It’s just making it far easier to put them together.
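A composition check of this kind can be sketched as a simple subset test over a server's capability set. The capability labels and the `find_compositions` helper are hypothetical names chosen for illustration; the pairings themselves are taken from Table 3.

```python
# Pairings from Table 3; the label strings are illustrative, not a standard vocabulary.
RISKY_COMPOSITIONS = [
    ({"http_fetch", "filesystem_write"}, "Content injection / persistence"),
    ({"database_query", "orchestration"}, "Data exfiltration pipelines"),
    ({"http_fetch", "planning"}, "Prompt-injection amplification"),
    ({"filesystem_write", "orchestration"}, "Supply-chain style poisoning"),
]


def find_compositions(capabilities: set[str]) -> list[str]:
    """Return the implications of every risky pairing fully present on one server."""
    return [
        implication
        for required, implication in RISKY_COMPOSITIONS
        if required <= capabilities  # subset test: all required primitives exist
    ]


# A server with a single primitive triggers nothing.
alone = find_compositions({"http_fetch"})

# The same primitive combined with others matches two patterns.
combined = find_compositions({"http_fetch", "filesystem_write", "orchestration"})
```

The point of the sketch is that no individual capability is flagged; only the co-occurrence is.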

Schemas, Not Prompts, Define the Attack Surface

A recurring theme in AI security discussions, particularly in the realm of LLMs, is the vulnerability to prompt manipulation, often sensationalized as “prompt injection.” This focus, while understandable given its directness, often overshadows more systemic and impactful security flaws. In practice, our research and the broader industry’s experience have consistently shown that schemas matter far more than simple prompt-based attacks.

The schema in this context refers to the entire operational framework: the architecture, the underlying data models, the access controls, the orchestration logic, and the pre- and post-processing steps that wrap around the core LLM. A sophisticated attacker will not focus on coaxing a slight deviation from the model’s output via a clever prompt. Instead, they will exploit weaknesses in the way the AI system interacts with external data sources, executes code, or leverages integrated tools.

For instance, an insecure schema might:

- Allow uncontrolled function calling: If the schema permits the LLM to call external APIs or functions without robust input validation and least-privilege principles, a successful injection attack allows for lateral movement, data exfiltration, or arbitrary command execution. The prompt is merely the trigger; the insecure schema is the actual vulnerability.

- Lack separation of context and instruction: Blending user input (the prompt) with the system instructions or operational context in a way that allows the user’s input to hijack system directives is a schema flaw.

- Use insufficiently validated inputs/outputs in Retrieval-Augmented Generation (RAG) systems: If retrieved documents are not properly sanitized before being fed back into the prompt context, or if the system blindly executes post-processing steps based on the output, the attack vector bypasses the core model’s defenses.

In essence, while prompt injection proves that the LLM is a complex, non-deterministic input validator, the severe, business-critical risks are rooted in the brittle scaffolding around the model: the schema. Securing AI systems requires shifting the security focus from merely defensive prompting techniques to applying sound, traditional software security principles across the entire AI application stack, and treating the LLM as just one component of a larger, interconnected, and potentially vulnerable system.
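As one example of applying a traditional control at the tool boundary, a file-handling tool can refuse any path that resolves outside its own working directory, regardless of what the prompt asked for. This is a minimal sketch, not taken from any particular MCP server; the `resolve_inside` helper and the `/srv/notes` directory are hypothetical.

```python
from pathlib import Path


def resolve_inside(base_dir: str, requested: str) -> Path:
    """Resolve a model-supplied path and reject anything outside base_dir."""
    base = Path(base_dir).resolve()
    # Joining with an absolute `requested` discards `base`, so absolute
    # paths are caught by the containment check below as well.
    candidate = (base / requested).resolve()
    if not candidate.is_relative_to(base):  # Python 3.9+
        raise ValueError(f"path escapes {base}: {requested!r}")
    return candidate


# Accepted: stays under the hypothetical base directory.
ok = resolve_inside("/srv/notes", "drafts/todo.txt")

# Rejected: traversal out of the base directory.
try:
    resolve_inside("/srv/notes", "../../etc/passwd")
    escaped = True
except ValueError:
    escaped = False
```

The check runs in ordinary code after the model has produced its arguments, so it holds even if the prompt was successfully injected.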

TABLE 4 — Schema Constraint Impact:

| Schema Pattern | Risk Implication |
|----|----|
| url: string | High (arbitrary network access) |
| path: string | High (potential traversal / overwrite) |
| query: string | Medium (depends on backend) |
| enum(values) | Low (constrained intent) |
| Hard-coded parameters | Minimal |

In MCP servers, the schema is the trust boundary. If a tool accepts unconstrained input and passes it to a powerful sink, the risk is architectural, not AI-driven.
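The ratings in Table 4 can be approximated mechanically. The sketch below assumes each tool's input schema is a JSON Schema object with a `properties` map, and it treats a `const` value as the closest schema-level equivalent of a hard-coded parameter. The function names and the `"unrated"` fallback are hypothetical, and matching on parameter names is a crude heuristic rather than a complete analysis.

```python
# Ratings follow Table 4; name matching is a deliberately simple heuristic.
NAME_RISK = {"url": "high", "path": "high", "query": "medium"}


def rate_parameter(name: str, spec: dict) -> str:
    """Rate one schema property by how tightly it constrains the caller."""
    if "const" in spec:
        return "minimal"  # value is fixed by the schema
    if "enum" in spec:
        return "low"  # caller can only pick from a closed set
    if spec.get("type") == "string":
        return NAME_RISK.get(name.lower(), "unrated")
    return "unrated"  # outside the patterns listed in Table 4


def rate_tool(input_schema: dict) -> dict[str, str]:
    """Rate every parameter a tool accepts."""
    properties = input_schema.get("properties", {})
    return {name: rate_parameter(name, spec) for name, spec in properties.items()}


fetch_tool_schema = {
    "type": "object",
    "properties": {
        "url": {"type": "string"},
        "mode": {"type": "string", "enum": ["summary", "full"]},
        "timeout_s": {"const": 10},
    },
}
ratings = rate_tool(fetch_tool_schema)
```

A rating of this kind says nothing about the model or the prompt; it depends only on what the schema allows a caller to pass.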

Why This Isn’t an “AI Is Dangerous” Story

The widespread discussion proclaiming that AI introduces an “unprecedented risk” often misses the more nuanced and architecturally significant reality. The most critical takeaway from current security research and incident analysis is not that AI represents a fundamentally new or unmanageable level of danger. Instead, the profound consequence of integrating AI, particularly in the form of LLMs and intelligent agents, is that AI fundamentally shifts the location where essential security decisions are made and enforced.

This is not a mere incremental update to existing security models; it represents a deep architectural shift in how applications are constructed and defended. Security focus is being moved:

| FROM: The Traditional Application Security Focus | TO: The Modern AI-Driven System Security Focus |
|----|----|
| UI Flows (User Interface) | Schemas (Data and Interaction) |
| Security was heavily focused on validating inputs and protecting against manipulation of front-end components and presentation layers. | The emphasis moves to strictly defining and validating the structure and content of data exchanged between models, tools, and the application, ensuring input and output types are rigorously adhered to. |
| Human Intent and Manual Vetting | Tool Composition and Orchestration |
| Logic was often designed around what a human intended to do, relying on role-based access control (RBAC) and explicit permissions tied to individual actions or user profiles. | Security now centers on how the AI agent selects, chains, and uses various external tools (e.g., APIs, databases, services). The integrity and safety of the entire sequence of actions must be guaranteed, focusing on preventing prompt injection from driving unintended tool use. |
| Application Logic (Hard-Coded Business Rules) | Execution Context Isolation (Sandboxing) |
| Security resided within monolithic or tightly coupled business logic, where rules were explicitly coded and easy to audit within the application’s source code. | The focus shifts to isolating the AI model’s runtime environment, ensuring that the consequences of any erroneous or malicious model output (e.g., code generation or external calls) are strictly contained and cannot impact critical infrastructure or bypass perimeter defenses. |

This displacement of the security locus is an architectural shift, not merely a reflection of failure in existing security practices. It demands that organizations adapt their defensive strategies, security tooling, and developer training to address these new points of control, recognizing that the primary security boundary is no longer the application’s front door but the configuration and management of the intelligence pipeline itself.

Conclusion: Measure First, Panic

The pervasive discourse surrounding AI security is often dominated by fear-mongering and hypothetical worst-case scenarios. However, for practitioners, improving the state of AI security requires a shift in perspective: we must replace fear with measurement and pragmatic discipline.

LLMs and other complex AI/machine-controlled processing systems are not inherently insecure; they are simply capability-rich systems. This abundance of functionality means they require the same rigorous, disciplined approach to threat modeling, secure configuration, and operational oversight that we already apply to other critical infrastructure like automation pipelines and cloud APIs.

The uncomfortable but ultimately empowering truth is that a significant number of so-called AI “risks” are, in fact, old security problems wearing new language. Concepts like insecure defaults, supply chain vulnerability, injection flaws, and misconfigured access controls have been fundamental concerns for decades.

And that is excellent news.

It is good news because it means that we are not starting from scratch. We already know how to solve these problems. The established security principles, frameworks, and methodologies, such as the Principle of Least Privilege (PoLP) and defense-in-depth architecture, remain perfectly valid. The challenge is not inventing a new science, but systematically adapting existing security expertise to the new attack surfaces and system characteristics introduced by AI.

By framing AI security as an extension of established enterprise security rather than an entirely new, insurmountable problem, organizations can move from paralyzing anxiety to effective, measurable risk reduction.
