Smarter AI is great — but AI you can actually trust? That’s the real game-changer.

AI is now making decisions that affect everything from your bank loan to your job application. But there’s one big catch — most of the time, it doesn’t tell us why it made those decisions.

That’s where Explainable AI (XAI) steps in. It’s not a single technology, but a growing field focused on making machine learning models more transparent, interpretable, and — crucially — trustworthy.

Why Explainability Matters

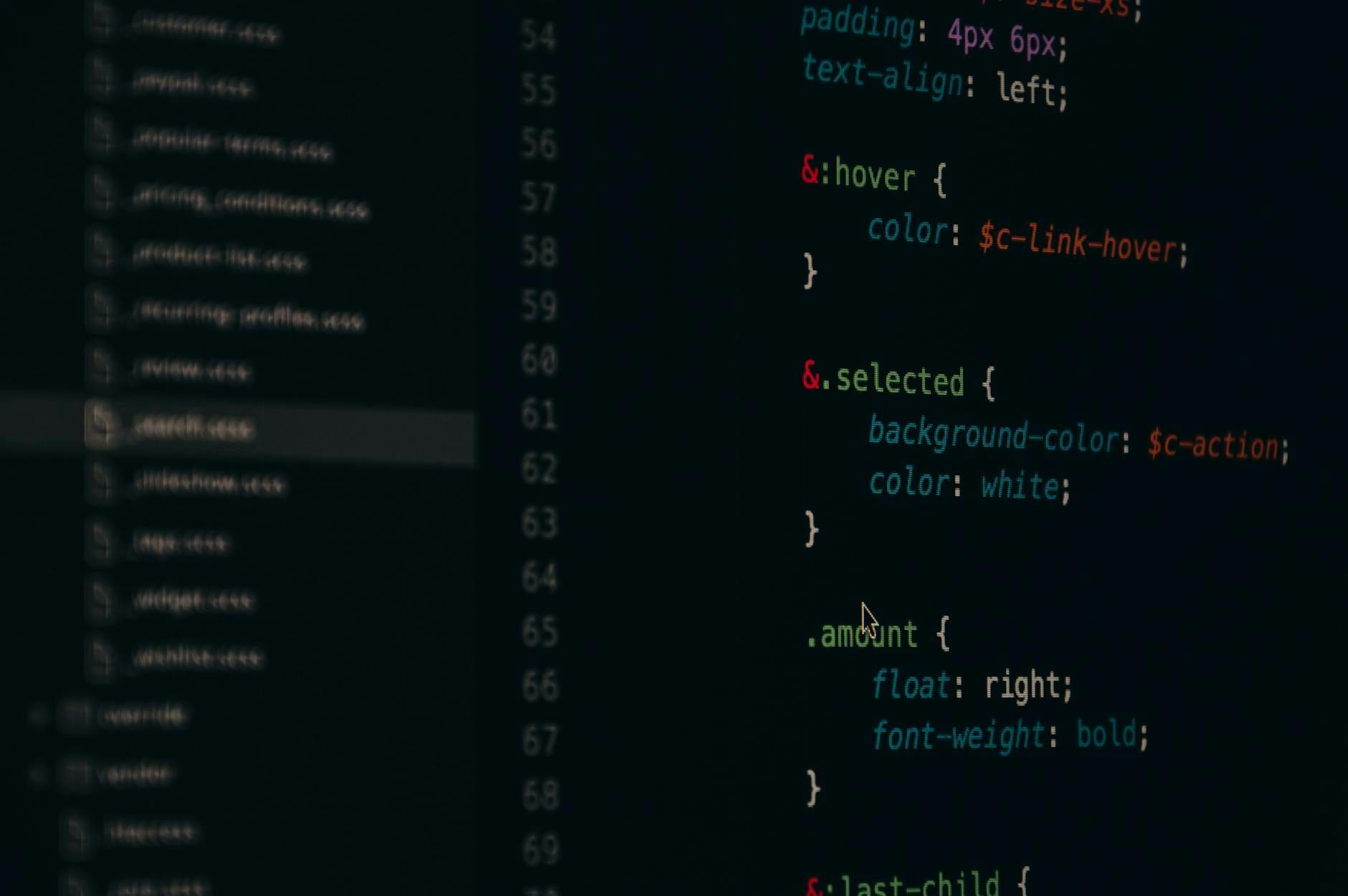

Modern AI models, especially deep learning systems, are incredibly powerful. But they’re also incredibly opaque. We get results, but we often have no clue how the model arrived at them.

And that’s a real problem — especially in high-stakes areas like:

- 🏥 Healthcare

- 💳 Finance

- 🚗 Autonomous driving

- ⚖️ Legal systems

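Without visibility into AI reasoning, it’s hard to catch mistakes, challenge unfair outcomes, or build user trust. In some cases, it might even run afoul of regulations: the EU’s GDPR gives people rights around automated decisions that significantly affect them, often summarized (and still debated) as a “right to explanation.”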

XAI offers several strategies to make model decisions more understandable. Here’s a breakdown of the most widely used ones:

1. Feature Attribution

“Which input features had the biggest impact on the model’s decision?”

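SHAP (Shapley Additive Explanations)

Based on Shapley values from cooperative game theory, SHAP assigns each input feature a score showing how much it contributed to the prediction, relative to a baseline such as the model’s average output. The scores add up to the difference between the prediction and that baseline.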
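To make the game-theory idea concrete, here is a minimal brute-force sketch in Python. It is not the `shap` library’s API: it assumes a toy model with three hypothetical features (`income`, `debt`, `years`) and treats a feature as “absent” by swapping in a baseline value of zero. Real SHAP implementations use smarter approximations and background datasets instead of enumerating every coalition.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x), measured against a baseline input.

    Assumption: a feature that is 'absent' from a coalition takes its baseline
    value. Brute force needs roughly n * 2**n model calls, so it is only
    practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Coalition members keep their real values; everyone else uses the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition S.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_credit_model(z):
    # Hypothetical model: linear terms plus an income x years interaction.
    income, debt, years = z
    return 2.0 * income - 3.0 * debt + income * years


x = [5.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_credit_model, x, baseline)
print([round(p, 6) for p in phi])  # [17.5, -6.0, 7.5]
```

The scores add up to f(x) - f(baseline) = 19, and the income × years interaction (worth 15) is split evenly between the two features that create it.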

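LIME (Local Interpretable Model-Agnostic Explanations)

Builds a simple model around one specific prediction to explain what’s happening locally. LIME perturbs the input, asks the black-box model to score those perturbed samples, and fits an interpretable model (typically a weighted linear one) that mimics the black box near that point.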
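The LIME recipe can be sketched in a few dozen lines of plain Python. This is a simplified illustration, not the `lime` package: it assumes Gaussian perturbations around the instance, an exponential proximity kernel, and a weighted least-squares fit, and `black_box` is a made-up stand-in for a real model.

```python
import math
import random


def solve(a, b):
    """Solve the square linear system a w = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(model, x, num_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate to `model` around the point `x`."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the normal equations (X^T W X) coef = X^T W y, with an intercept column.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]      # 1. perturb around x
        y = model(z)                                      # 2. query the black box
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)     # 3. nearby samples count more
        row = [1.0] + [zi - xi for zi, xi in zip(z, x)]   # features centred on x
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coef = solve(xtwx, xtwy)                              # 4. weighted least squares
    return coef[0], coef[1:]  # local intercept, per-feature local weights


def black_box(z):
    # Hypothetical nonlinear model; its true slopes at (2, 1) are 4 and 3.
    return z[0] ** 2 + 3.0 * z[1]


intercept, weights = lime_explain(black_box, x=[2.0, 1.0])
print(weights)  # close to the local slopes [4, 3]
```

Because the black box is nonlinear, the surrogate’s weights only describe the model near `x = [2, 1]`; explaining a different point means fitting a new surrogate.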

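Grad-CAM / Grad-CAM++

Highlights the regions of an image that a CNN focused on when making a prediction, by weighting a convolutional layer’s feature maps with the gradients of the target class score and combining them into a heatmap. Super useful for tasks like medical imaging or object detection.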
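Once a framework has produced a convolutional layer’s feature maps and the gradients of the class score with respect to them, the Grad-CAM combination step itself is short. The sketch below assumes those two arrays are already available. Here they come from a hypothetical global-average-pool head (`toy_head_gradients`), so they can be written by hand, and the final upsampling to image resolution is skipped.

```python
def grad_cam(feature_maps, gradients):
    """Grad-CAM heatmap from one conv layer.

    feature_maps[k][i][j]: activation of channel k at position (i, j).
    gradients[k][i][j]:    d(class score) / d(feature_maps[k][i][j]).
    """
    channels = len(feature_maps)
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # 1. Channel importance: global-average-pool each channel's gradients.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # 2. Weighted sum of the feature maps, then ReLU to keep only positive evidence.
    cam = [[max(0.0, sum(alphas[k] * feature_maps[k][i][j] for k in range(channels)))
            for j in range(w)]
           for i in range(h)]
    # 3. Scale to [0, 1] so the map can be upsampled and overlaid on the image.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


def toy_head_gradients(feature_maps, class_weights):
    """Hypothetical head: score = sum_k class_weights[k] * mean(feature_maps[k]).

    Its gradient is class_weights[k] / (H * W) at every position of channel k.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    return [[[cw / (h * w)] * w for _ in range(h)] for cw in class_weights]


maps = [
    [[4, 1, 0], [1, 0, 0], [0, 0, 0]],  # channel 0 fires in the top-left
    [[0, 0, 0], [0, 0, 1], [0, 1, 4]],  # channel 1 fires in the bottom-right
]
grads = toy_head_gradients(maps, class_weights=[1.0, -0.5])
heatmap = grad_cam(maps, grads)
for row in heatmap:
    print(row)
# [1.0, 0.25, 0.0]
# [0.25, 0.0, 0.0]
# [0.0, 0.0, 0.0]
```

Channel 0 supports the class and channel 1 counts against it, so the heatmap lights up only the top-left region where channel 0 fires.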

2. Concept-Based Explanations

“What high-level idea did the model detect?”

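CAVs (Concept Activation Vectors)

Link a neural network’s internal layers to human-understandable concepts, like “striped texture” or “urban scene.” A CAV is a direction in a layer’s activation space, found by training a linear classifier to separate examples of the concept from random examples; TCAV (Testing with CAVs) then measures how sensitive a class prediction is to that direction.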
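A rough sketch of the CAV and TCAV steps, under heavy assumptions: the “layer activations” are hypothetical 2-D vectors drawn at random, the class head (`logit(a) = a0 - 0.5 * a1**2`) is invented so its gradient can be written by hand, and the linear classifier is a bare-bones logistic regression. A real setup would take activations and gradients from an actual network.

```python
import math
import random


def train_cav(concept_acts, random_acts, lr=0.1, epochs=500):
    """Logistic regression separating concept (1) from random (0) activations.

    The CAV is the unit vector normal to the learned decision boundary,
    pointing towards the concept examples.
    """
    data = [(a, 1.0) for a in concept_acts] + [(a, 0.0) for a in random_acts]
    d = len(data[0][0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for a, label in data:
            logit = sum(wi * ai for wi, ai in zip(w, a)) + b
            err = 1.0 / (1.0 + math.exp(-logit)) - label  # sigmoid(logit) - label
            grad_w = [g + err * ai for g, ai in zip(grad_w, a)]
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [wi / norm for wi in w]


def tcav_score(cav, class_acts, logit_grad):
    """Fraction of class examples whose class logit rises when moved along the CAV."""
    positive = 0
    for a in class_acts:
        # Directional derivative of the class logit in the CAV direction.
        directional = sum(g * c for g, c in zip(logit_grad(a), cav))
        positive += directional > 0
    return positive / len(class_acts)


rng = random.Random(0)
# Hypothetical 2-D layer activations: "striped texture" images vs random images.
striped = [[rng.gauss(2.0, 0.7), rng.gauss(0.0, 0.7)] for _ in range(100)]
random_imgs = [[rng.gauss(0.0, 0.7), rng.gauss(0.0, 0.7)] for _ in range(100)]
cav = train_cav(striped, random_imgs)

# Hypothetical "zebra" head on the same layer: logit(a) = a0 - 0.5 * a1**2,
# whose gradient with respect to the activations is [1, -a1].
zebra_acts = [[rng.gauss(1.5, 0.5), rng.gauss(0.0, 0.5)] for _ in range(50)]
score = tcav_score(cav, zebra_acts, lambda a: [1.0, -a[1]])
print(cav, score)
```

A TCAV score near 1 suggests the class prediction is consistently sensitive to the concept direction; the original method also compares against CAVs trained on random splits to check that the result is not due to chance.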

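Concept Relevance Propagation (CRP)

Traces decisions back to abstract concepts instead of just low-level input data. CRP builds on Layer-wise Relevance Propagation (LRP), which passes a prediction’s “relevance” backwards through the network layer by layer; by restricting that flow to individual hidden units or channels, CRP shows both which learned concept mattered and where in the input it was found.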
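The conditioning idea can be illustrated on a tiny network. This sketch assumes a made-up two-layer ReLU network with no biases, uses the LRP-epsilon rule, and treats each hidden unit as a stand-in “concept”. Real CRP works on deep networks, where concepts are usually channels, and offers a wider choice of propagation rules.

```python
def lrp_dense(a, weights, relevance_out, eps=1e-9):
    """LRP-epsilon rule for a bias-free dense layer z_k = sum_j a_j * weights[j][k].

    Each output's relevance is shared among inputs in proportion to a_j * w_jk.
    """
    n_in, n_out = len(a), len(relevance_out)
    z = [sum(a[j] * weights[j][k] for j in range(n_in)) for k in range(n_out)]
    z = [zk + (eps if zk >= 0 else -eps) for zk in z]  # stabiliser avoids division by 0
    return [sum(a[j] * weights[j][k] / z[k] * relevance_out[k] for k in range(n_out))
            for j in range(n_in)]


def forward(x, w1, w2):
    hidden = [max(0.0, sum(x[j] * w1[j][k] for j in range(len(x))))
              for k in range(len(w1[0]))]
    out = [sum(hidden[j] * w2[j][k] for j in range(len(hidden)))
           for k in range(len(w2[0]))]
    return hidden, out


def concept_relevance(x, w1, w2, concept=None):
    """Input relevance for the output score, optionally conditioned on one hidden 'concept'."""
    hidden, out = forward(x, w1, w2)
    r_hidden = lrp_dense(hidden, w2, out)  # start from the output score itself
    if concept is not None:
        # CRP-style conditioning: keep only the chosen concept's relevance.
        r_hidden = [r if k == concept else 0.0 for k, r in enumerate(r_hidden)]
    return r_hidden, lrp_dense(x, w1, r_hidden)


# Hypothetical two-layer network: 3 inputs -> 2 hidden "concept" units -> 1 output.
W1 = [[1.0, 0.0], [0.5, 1.0], [0.0, -1.0]]
W2 = [[1.0], [2.0]]
x = [1.0, 2.0, 0.5]

_, full = concept_relevance(x, W1, W2)
_, concept0 = concept_relevance(x, W1, W2, concept=0)
_, concept1 = concept_relevance(x, W1, W2, concept=1)
print([round(r, 6) for r in full])      # [1.0, 5.0, -1.0]
print([round(r, 6) for r in concept0])  # [1.0, 1.0, 0.0]
print([round(r, 6) for r in concept1])  # [0.0, 4.0, -1.0]
```

The two concept-conditional maps add up to the full explanation, which in turn sums to the output score of 5. Concept 0 relies on the first two inputs, while concept 1 draws on the second input and is pulled down by the third.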

These approaches aim to bridge the gap between human thinking and machine reasoning.

3. Counterfactuals

“What would have changed the outcome?”

Imagine your loan application is rejected. A counterfactual explanation might say: “If your annual income had been £5,000 higher, it would’ve been approved.”

That’s not just helpful — it’s actionable. Counterfactuals give users a way to understand and potentially influence model outcomes.
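A counterfactual search can be as simple as nudging one feature until the decision flips. The sketch below assumes a hypothetical rule-based loan model (`toy_loan_model`), with its threshold chosen so the first applicant reproduces the £5,000 example above, and a plain grid search over a single feature. Practical counterfactual methods search over several features at once and add constraints for plausibility and minimal change.

```python
def toy_loan_model(applicant):
    """Hypothetical rule: approve when income - 2 * debt is at least £40,000."""
    return applicant["income"] - 2 * applicant["debt"] >= 40_000


def find_counterfactual(model, applicant, feature, step, max_steps=200):
    """Smallest change to one feature (in multiples of `step`) that flips a rejection.

    Returns (modified_applicant, change), or None if the applicant is already
    approved or no flip is found within max_steps increments.
    """
    if model(applicant):
        return None  # already approved: nothing to explain
    for k in range(1, max_steps + 1):
        candidate = dict(applicant)
        candidate[feature] = applicant[feature] + k * step
        if model(candidate):
            return candidate, k * step
    return None


applicant = {"income": 35_000, "debt": 0}
print(find_counterfactual(toy_loan_model, applicant, "income", step=500))
# ({'income': 40000, 'debt': 0}, 5000)

other = {"income": 45_000, "debt": 4_000}
print(find_counterfactual(toy_loan_model, other, "debt", step=-500))
# ({'income': 45000, 'debt': 2500}, -1500)
```

Nothing in this toy search stops debt from going negative given a large enough step budget; a real implementation would encode constraints like that explicitly.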

4. Human-Centered Design

XAI isn’t just about clever algorithms — it’s about people. Human-centered XAI focuses on:

- Designing explanations that non-technical users can understand

- Using visuals, natural language, or story-like outputs

- Adapting explanations to the audience (data scientists ≠ patients ≠ regulators)

Good explainability meets people where they are.
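As a small illustration of adapting explanations to the audience, the sketch below renders one set of attribution scores in two ways. The audience labels, feature names, and scores are all hypothetical; in practice the scores could come from any attribution method, such as SHAP.

```python
def explain(attributions, audience, top_k=2):
    """Render the same feature attributions differently for different audiences.

    attributions: mapping of feature name -> signed contribution score.
    """
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "data_scientist":
        # Full detail: every feature with its signed score, largest first.
        return "; ".join(f"{name}: {score:+.2f}" for name, score in ranked)
    # Plain language for everyone else: only the biggest factors, no raw numbers.
    phrases = [
        f"your {name} {'helped' if score > 0 else 'counted against'} you"
        for name, score in ranked[:top_k]
    ]
    return "The main factors: " + " and ".join(phrases) + "."


scores = {"income": 1.8, "existing debt": -2.4, "account age": 0.3}
print(explain(scores, "data_scientist"))
# existing debt: -2.40; income: +1.80; account age: +0.30
print(explain(scores, "applicant"))
# The main factors: your existing debt counted against you and your income helped you.
```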

What Makes XAI So Challenging?

Even with all these tools, XAI still isn’t easy to get right.

| Challenge | Why It Matters |
|---|---|
| Black-box complexity | Deep models are huge and nonlinear, which makes them hard to summarize cleanly |
| Simplicity vs. detail | Too simple, and you lose nuance. Too detailed, and nobody understands it |
| Privacy risks | More transparency can expose sensitive information about the training data or the model itself |
| User trust | If explanations feel artificial or inconsistent, trust breaks down |

Where It’s All Going

The next generation of XAI is blending cognitive science, UX design, and ethics. The goal isn’t just to explain what models are doing — it’s to align them more closely with how humans think and decide.

In the future, we won’t just expect AI to be accurate. We’ll expect it to be accountable.

Because “the algorithm said so” just doesn’t cut it anymore.