One of the highlights of the CES event last week was the Nvidia Corp. keynote delivered by Chief Executive Jensen Huang. Though there are many focal points to an event such as CES, the one pervasive theme is artificial intelligence, and no company has become more synonymous with AI than Nvidia.

That’s why thousands of people queued up for hours in advance to hear the latest vision and product news from the company’s leader. While there was plenty of product news, several important takeaways stood out above and beyond the announcements themselves.

Agentic will be the new interface for applications

During his keynote, Huang painted a picture of a world where the primary interface for many of the work tasks we do will shift to agentic agents. He cited several examples where this is happening today, including ServiceNow Inc., Palantir Technologies Inc. and Snowflake Inc. Historical interfaces, such as spreadsheets, command lines and even graphical user interfaces, are manually intensive and generally require the human to be the integration point between products.

Agentic agents, such as Nvidia Nemotron, are not only simpler but can reason, use tools, plan and search, removing much of the heavy lifting involved in work today. My research has found that workers spend up to 40% of their time managing work instead of doing the actual work, and agentic agents can take that time to zero. There is so much fear around AI taking jobs, but much of the value is in allowing us to grow productivity by an order of magnitude because we no longer have to do the things that are low value.

Agentic agents are multi-everything

One of the more interesting parts of Huang’s talk track was when he discussed his “a-ha” moment around multimodel AI. He talked about how Perplexity uses multiple large language models to get the most accurate results. “I thought it was completely genius,” Huang said. “Of course, in AI, we would call upon all the world’s greatest AI models to answer different questions at various points in the reasoning chain. This is why AI needs to be multimodel in nature.” He added that this allows the agentic agent to use the best model for the specific task.
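As a rough illustration of the multimodel idea, here is a minimal Python sketch of an agent assigning a different model to each step of a reasoning chain. The model names, task labels and the `pick_model` and `plan_chain` helpers are hypothetical; this does not reflect how Perplexity or Nvidia actually route requests.

```python
# Hypothetical model names and task labels, for illustration only.
ROUTES = {
    "code": "model-a-coder",
    "search": "model-b-retrieval",
    "vision": "model-c-multimodal",
}
DEFAULT_MODEL = "model-d-general"


def pick_model(task_type: str) -> str:
    """Return the model assumed to be best for a task, or a general fallback."""
    return ROUTES.get(task_type, DEFAULT_MODEL)


def plan_chain(steps):
    """Assign a model to each (task_type, prompt) step in a reasoning chain."""
    return [(pick_model(task_type), prompt) for task_type, prompt in steps]


chain = plan_chain([
    ("search", "Find the latest GPU specs"),
    ("code", "Write a script to compare them"),
    ("summarize", "Explain the result"),  # no dedicated route, uses the fallback
])

for model, prompt in chain:
    print(f"{model}: {prompt}")
```

A static lookup table is the simplest possible router. A real system would more likely choose models dynamically based on cost, latency and measured accuracy.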

Huang went on to explain that in addition to multimodel, agentic AI will be multimodal to understand speech, images, text, videos, 3D graphics and other forms of communications. The “multi” continues with deployment models, as AI needs to be multicloud to enable models to reside in the optimal location. It’s important to understand that, in this case, multicloud is inclusive of hybrid cloud. This becomes increasingly important with physical AI, as robots, edge servers and other connected devices require access to the data and the models in real time, and that requires localized services.

There have been several comparisons made between AI and the internet, and I think the biggest similarity is that AI, like the internet, will eventually be embedded into everything we do. That requires AI to be multimodal, multimodel and multicloud.

Nvidia continues to redefine the network

Big companies make acquisitions all the time but there has been perhaps no more important acquisition to Nvidia than Mellanox. The company paid just under $7 billion to gain networking capabilities and that business now generates more than $7 billion every quarter. While the primary infrastructure announcement at CES for Nvidia was the Vera Rubin platform, the network is what enables the various components to work together. Vera is the CPU and Rubin the GPU with Vera Rubin NVL72 being the AI supercomputer where the 72 Rubin GPUs and 36 Vera CPUs are connected using NVLink, one of Nvidia’s networking products.

In fact, of the six Vera Rubin platform announcements as part of the CES payload, four were networking. These include:

- ConnectX-9 SuperNIC – Nvidia’s next-generation NIC, designed to handle the massive throughput from Rubin GPUs. The 200G SerDes enables a total bandwidth of 1.6Tb/sec per GPU, double the previous version. This is designed for the rigors of scale-out.

- NVLink 6 Switch – The interconnect that allows multiple GPUs within a single rack to act as one processor. This is optimized for scale-up networking and provides 260 TB/s of bandwidth per rack.

- Spectrum-X Ethernet Photonics – This integrates silicon photonics to reduce power consumption and increase data center resilience compared with traditional optical cabling. The co-packaged optics provide significant power reduction and increased uptime. The flagship SN6800 offers a whopping 409.6 Tb/sec of aggregate bandwidth, supporting 512 800G Ethernet ports.

- BlueField-4 DPUs – This offloads many networking functions from the server. The new DPU features 64 Arm Neoverse V2 cores and has 6x the processing capacity of BlueField-3.
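The bandwidth figures in the list above can be sanity-checked with simple arithmetic. The short Python sketch below uses only numbers quoted in this article. The lane count and the per-GPU NVLink share are derived values that rest on simplifying assumptions (total bandwidth equals lanes times lane speed, and rack bandwidth is split evenly across 72 GPUs), not on published specifications.

```python
# Figures quoted in the article.
PORTS = 512
PORT_SPEED_GBPS = 800        # 800G Ethernet ports on the SN6800
NIC_BANDWIDTH_GBPS = 1600    # ConnectX-9: 1.6 Tb/sec per GPU
SERDES_LANE_GBPS = 200       # 200G SerDes
RACK_NVLINK_TBYTES = 260     # NVLink 6: 260 TB/s per rack (terabytes, not terabits)
GPUS_PER_RACK = 72           # Rubin GPUs in a Vera Rubin NVL72

# Aggregate switch bandwidth: ports x per-port speed, converted from Gb/s to Tb/s.
switch_tbps = PORTS * PORT_SPEED_GBPS / 1000

# Assumption: NIC bandwidth is simply lane count x lane speed.
lanes_per_nic = NIC_BANDWIDTH_GBPS // SERDES_LANE_GBPS

# Assumption: rack-level NVLink bandwidth is shared evenly across all GPUs.
nvlink_tbytes_per_gpu = RACK_NVLINK_TBYTES / GPUS_PER_RACK

print(f"Switch aggregate: {switch_tbps:.1f} Tb/sec")
print(f"Implied SerDes lanes per NIC: {lanes_per_nic}")
print(f"Implied NVLink share per GPU: {nvlink_tbytes_per_gpu:.2f} TB/s")
```

The switch figure works out to the quoted 409.6 Tb/sec, the NIC figure implies eight 200G lanes, and the rack-level NVLink number implies roughly 3.6 TB/s per GPU.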

Nvidia is making storage AI-native

Nvidia announced something called “Context Memory Storage,” which the company is positioning as the right storage architecture for the AI era. With the rise of AI, Nvidia has rethought processing and the network, and is now doing the same for storage.

At CES, I sat down with Senior Vice President Gilad Shainer, who came to the company via the Mellanox acquisition. During our conversation, we discussed how important it is that compute, network and storage be in lockstep with one another to provide the best possible performance. Shainer made an interesting point that the traditional tiers of storage are not necessarily optimized for inference, and that’s the gap Context Memory Storage is meant to fill.

It fundamentally redefines the storage industry by transitioning it from a general-purpose utility to a purpose-built, “AI-native” infrastructure layer designed specifically for the era of agentic reasoning. By introducing a new tier of storage, this platform bridges the critical gap between the capacity-limited GPU server storage and the traditional, general-purpose shared storage, effectively turning key-value cache into a first-class, shareable platform resource.

Instead of forcing GPUs to recompute expensive context for every turn in a conversation or multistep reasoning task, the platform leverages the BlueField-4 DPU to offload metadata management and orchestrate the high-speed sharing of context across entire compute pods. This architectural shift eliminates the “context wall” that previously stalled GPU performance, delivering up to five times higher token throughput and five times better power efficiency compared to traditional storage methods.
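To make the recompute problem concrete, here is a toy Python sketch of context reuse across conversation turns. It is not Nvidia’s implementation: `SharedContextCache` and `tokens_to_compute` are hypothetical names, the cached “state” is a placeholder string, and a real key-value cache holds per-layer attention tensors rather than a dictionary entry.

```python
from dataclasses import dataclass, field


@dataclass
class SharedContextCache:
    """Toy stand-in for a shared key-value cache tier (hypothetical)."""
    store: dict = field(default_factory=dict)

    def longest_cached_prefix(self, tokens):
        """Return the length of the longest prefix of `tokens` already cached."""
        for end in range(len(tokens), 0, -1):
            if tuple(tokens[:end]) in self.store:
                return end
        return 0

    def put(self, tokens):
        """Record that context for this exact token sequence is now available."""
        self.store[tuple(tokens)] = f"kv-state-for-{len(tokens)}-tokens"


def tokens_to_compute(cache, tokens):
    """Count tokens that still need processing after reusing cached context."""
    reused = cache.longest_cached_prefix(tokens)
    cache.put(tokens)
    return len(tokens) - reused


cache = SharedContextCache()
turn_1 = ["system", "prompt", "hello"]
turn_2 = turn_1 + ["how", "are", "you"]

first = tokens_to_compute(cache, turn_1)   # nothing cached yet
second = tokens_to_compute(cache, turn_2)  # reuses the turn_1 prefix
print(first, second)
```

In this toy run the second turn processes only its three new tokens instead of all six, which is the kind of saving a shared context tier is meant to deliver at much larger scale.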

Auto innovation continues to accelerate

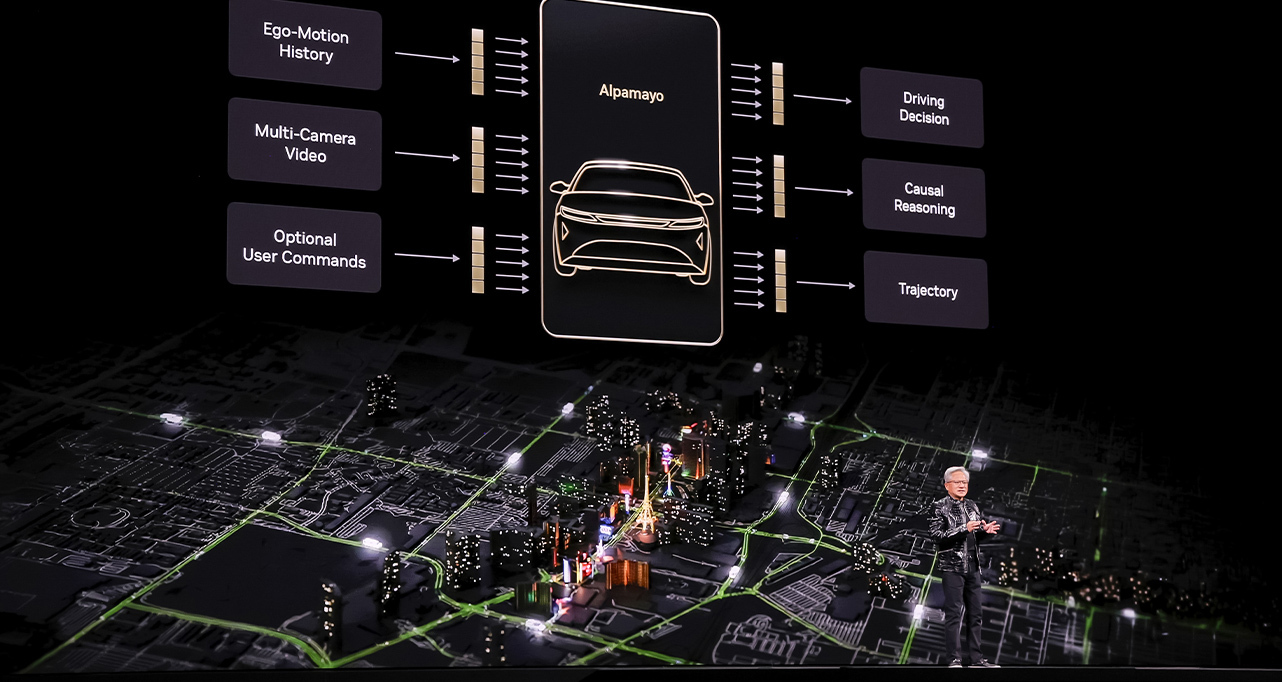

Many casual observers of the auto industry believe innovation has slowed down. This perception comes from the fact that about a half-decade ago, the industry started talking about Level 5 self-driving and we still seem to be far from that. However, along the way, the rise of digital twins, cloud-to-car development, camera vision and AI has allowed cars to be much safer and smarter than ever before. During his keynote, Huang highlighted that the new Mercedes-Benz CLA, built on the newly announced Alpamayo open model, achieved a five-star European New Car Assessment Program (EuroNCAP) safety rating.

Alpamayo (pictured) will be a boon to the auto industry as it introduces the world’s first thinking and reasoning-based AV model. Unlike traditional self-driving stacks that rely on pattern recognition and often struggle with unpredictable “long-tail” scenarios, Alpamayo employs a Vision-Language-Action architecture to perform step-by-step reasoning, allowing a vehicle to solve complex problems — such as navigating a traffic light outage or an unusual construction zone — by explaining its logic through “reasoning traces.”

By open-sourcing the Alpamayo 1 model along with the AlpaSim simulation framework and 1,700 hours of real-world driving data, Nvidia provides automakers like Mercedes-Benz, Jaguar Land Rover, and Lucid with a powerful “teacher model” that can be distilled into smaller, production-ready stacks. This ecosystem significantly lowers the barrier to achieving Level 4 autonomy by replacing “black-box” decision-making with transparent, human-like judgment, ultimately accelerating safety certification and building the public trust necessary for mass-market autonomous deployment.

Final thoughts

Nvidia’s CES keynote was certainly packed with AI-based innovation. My only nitpick with the presentation was that I’d like to see the company lead with the impact and then roll into the tech. As an example, Huang went into great detail on how Nvidia has contributed more models than anyone, then introduced Alpamayo and finally talked about the Mercedes safety score. He should have led with the Mercedes data point, since that’s the societal impact, and then later brought the tech in.

Across CES, one could see the impact that AI is having on changing the way we work and live, and no vendor has done more to bring that to life than Nvidia. Its keynote has become the marquee event within a show filled with high-profile keynotes, and I don’t expect the momentum it has to slow down anytime soon.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for News.

Photo: Nvidia
