We believe the artificial intelligence center of gravity for enterprise value creation is shifting from large language models to small language models, where the S not only stands for small but encompasses a system of small, specialized, secure and sovereign models.

Moreover, we see LLMs and SLMs evolving to become agentic, hence SAM – small action models. In our view, it’s the collection of these “S-models,” combined with an emerging data harmonization layer, that will enable systems of agents to work in concert and create high-impact business outcomes. These multi-agent systems will completely reshape the software industry generally and, more specifically, unleash a new productivity paradigm for organizations globally.

In this Breaking Analysis, we’ll update you on the state of generative AI and LLMs with some spending data from our partner Enterprise Technology Research. We’ll also revisit our premise that the long tail of SLMs will emerge with a new, high-value component in the form of multiple agents that work together guided by business objectives and key metrics.

Let’s first review the premise we put forth over a year ago with the Power Law of Generative AI. The concept is that, similar to other power laws, the gen AI market will evolve with a long tail of specialized models. In this example, size of model is on the Y axis and model specificity is the long tail. The difference here from classical power laws is that the open-source movement and third-party models will pull that torso shown in red up and to the right. We highlight Meta Platforms Inc. in red for reasons that will become clear in a moment. This picture is playing out as we expected, albeit slowly: Enterprises are in search of return on investment, they’re tempering payback expectations, and they’re realizing AI excellence isn’t as easy as making a bunch of application programming interface calls to OpenAI models.

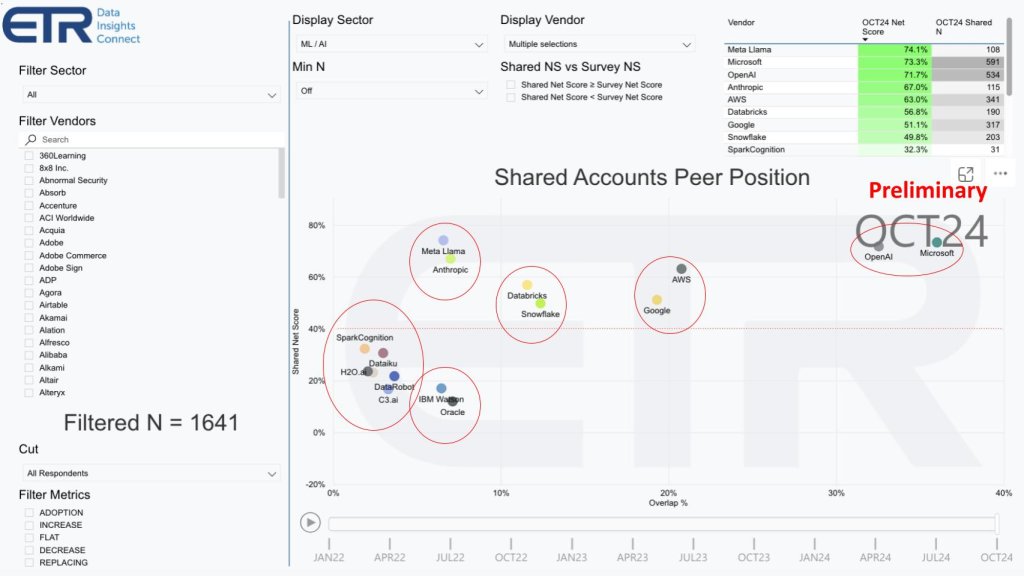

Survey data shows Meta’s Llama leads in adoption velocity

The reason we highlighted Meta in the previous slide is that, as we predicted, the open-source momentum is having a big impact on the market. The data below from ETR shows Net Score or spending momentum on the vertical axis and account Overlap in the dataset of more than 1,600 information technology decision makers on the X axis. Overlap is a proxy for market penetration. That red line at 40% indicates a highly elevated spending velocity.
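
For readers who want the vertical axis made concrete, here is a minimal Python sketch of how a Net Score-style metric can be computed. It assumes the commonly described approach of subtracting the share of accounts decreasing or replacing spend from the share adopting or increasing spend. The bucket names, the `is_elevated` helper and the sample percentages are hypothetical illustrations, not ETR data or ETR’s code.

```python
from dataclasses import dataclass

ELEVATED_THRESHOLD = 40.0  # the red line described in the text


@dataclass
class SpendingResponses:
    """Share of surveyed accounts in each spending-intent bucket, in percent.

    Bucket names are assumptions made for this sketch.
    """
    adopting: float
    increasing: float
    flat: float
    decreasing: float
    replacing: float


def net_score(r: SpendingResponses) -> float:
    """Positive-intent share minus negative-intent share; flat spend is ignored."""
    total = r.adopting + r.increasing + r.flat + r.decreasing + r.replacing
    if abs(total - 100.0) > 1e-6:
        raise ValueError(f"bucket shares must sum to 100, got {total}")
    return (r.adopting + r.increasing) - (r.decreasing + r.replacing)


def is_elevated(score: float) -> bool:
    """True when the score sits above the 40% line."""
    return score > ELEVATED_THRESHOLD


# Hypothetical sample, not survey data.
sample = SpendingResponses(adopting=30, increasing=40, flat=24, decreasing=4, replacing=2)
print(net_score(sample), is_elevated(net_score(sample)))
```

In this made-up sample, 70% of accounts are adding or increasing spend and 6% are cutting or replacing, which nets out to 64 and sits above the 40% line.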

Let’s start with Llama. Notice the table insert in the upper left. This is preliminary data from the ETR survey that is in the field now and closes in October. We got special permission from ETR to use the data. Look at Meta. With a 74% Net Score, it now has surpassed OpenAI and Microsoft Corp. as the LLM with leading spending momentum. In the case of an open-source offering such as Llama, which is ostensibly “free,” the survey is measuring adoption, although we note that organizations will spend in the form of labor (skills), infrastructure and services to deploy open-source models.

We’ve grouped these companies and cohorts in the diagram with the red circles. So let’s start with Meta and Anthropic. You’ve got the open-source and third-party representatives here, which as we said earlier, pull the torso of the power law up to the right.

In the left-most circle, we show the early AI and machine learning innovators such as SparkCognition Inc., DataRobot Inc., C3 AI Inc., Dataiku Inc. and H2O.ai Inc. Below that on the Y axis, but with deeper market penetration, we show the big legacy companies represented here by IBM Corp. with Watson and Oracle Corp., both players in AI.

Then we show the two proxies for the modern data stack, Databricks Inc. and Snowflake Inc., both of which are also in the game. Next are AWS and Google, which are battling it out for second place in mindshare and market share, going up against Microsoft and OpenAI in the upper right, the two firms that got the gen AI movement started. Those two are literally off the charts.

The following additional points are noteworthy:

Our research indicates a significant surge in enterprise investment in artificial intelligence and machine learning. Key highlights include:

- Investment growth: Based on ETR survey data, enterprises have increased their investment in AI and ML from 34% to 50% over the past year, marking a substantial 16-point growth.

- High velocity of spend: As we’ve reported previously, AI and ML are experiencing the highest velocity of spend across all technology categories, surpassing even container technologies and robotic automation.

- Enterprise commitment: In our opinion, this trend underscores the considerable commitment enterprises are making to build or source their own AI models.
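
As a quick worked check of the investment figures above, the short Python sketch below separates the percentage-point change from the relative change. The function names are ours, chosen for illustration; the 34% and 50% inputs are the figures cited in the list.

```python
def point_change(before_pct: float, after_pct: float) -> float:
    """Difference in percentage points."""
    return after_pct - before_pct


def relative_change(before_pct: float, after_pct: float) -> float:
    """Growth relative to the starting value, in percent."""
    return (after_pct - before_pct) / before_pct * 100


before, after = 34.0, 50.0
print(point_change(before, after))                 # 16.0 points
print(round(relative_change(before, after), 1))    # 47.1 percent relative growth
```

The distinction matters when comparing categories: a 16-point move from a base of 34% is a far larger relative jump than the same 16 points would be from a base of 70%.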

We believe that models like Meta’s Llama 3.1, particularly the 405B model, have reached a frontier class comparable to GPT-4. Additional observations include:

- Primary use cases: Over the last 12 months, the most tangible use cases for generative AI have emerged in customer service and software development.

- Adoption challenges: Many organizations are struggling to transition from pilot projects to full-scale production because of the complexity of integrating multiple components into a cohesive system, a challenge that mainstream companies are often not equipped to handle.

- Role of ISVs: We expect independent software vendors to bridge this gap by incorporating these components into their systems, leading to increased adoption through AI agents.

In summary, the accelerated investment in AI and ML reflects a strategic shift among enterprises toward advanced AI capabilities, with ISVs poised to facilitate widespread adoption through integrated solutions.

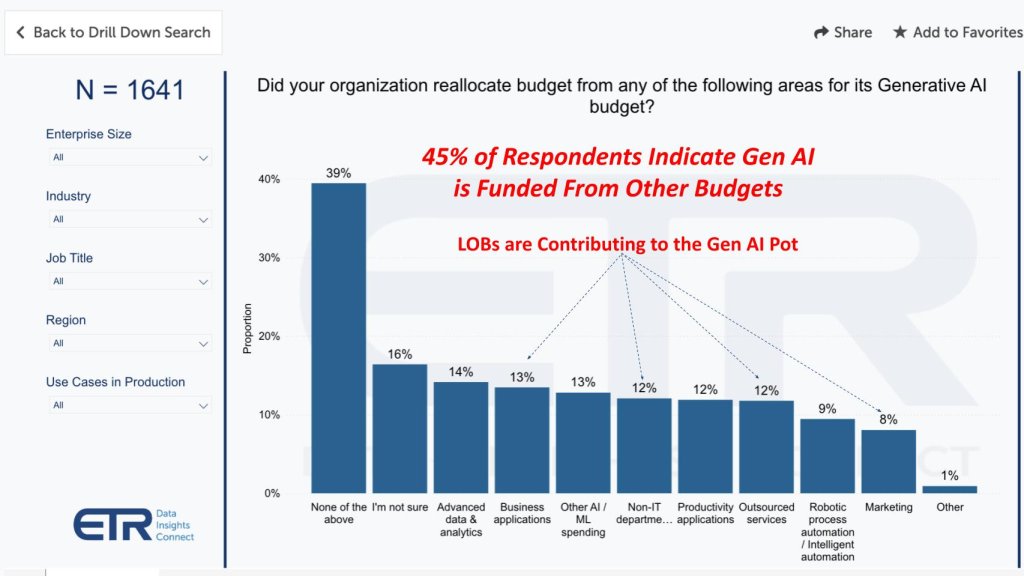

Gen AI funding continues to steal from other budgets

Let’s shift gears and talk about the state of gen AI spending. The chart below shows the latest data from the most recent ETR drill-down survey on gen AI. We’ve previously shared that roughly 40% to 42% of accounts are funding gen AI initiatives by stealing from other budgets. This figure is now up to 45%. And the new dimension of this latest data is that lines of business are major contributors to the funding, as shown below. The money is coming from business apps, non-IT departments, outsourced services and marketing budgets.

The point is that business lines have major skin in the game and that’s where the real value will be recognized.

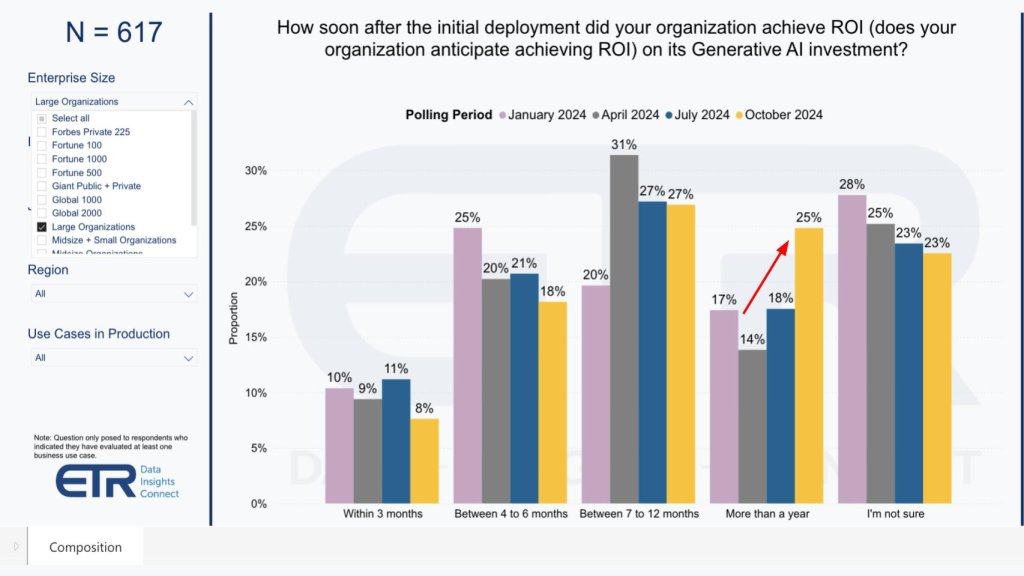

Payback expectations are less optimistic

The other vector that we want to explore is ROI expectations. Initially when ETR started surveying around gen AI ROI, the expectations were much more optimistic for faster returns, as shown below.

Notice how the yellow bar in the “more than a year” category has jumped from a low of 14% in April of this year (gray bars), when folks were more optimistic and the most common response was under 12 months. Today, that 14% has nearly doubled to 25% of the survey base. As well, the percentage of customers expecting fast ROI of under 12 months has dropped notably. We expect this to continue as organizations seek greater returns and higher net present values.

We note the following additional points:

Our takeaway is that the current phase of technology transformation is experiencing a typical period of disillusionment, where hype has slightly outpaced reality and expectations are becoming more balanced. We believe this temporary slowdown will lead to a renewed surge in growth and adoption as the market adjusts expectations and strategies.

Key observations include:

- Open-source momentum: The increasing adoption and velocity of models such as Llama are driven by the momentum behind open source. The ability to customize, greater transparency and better integration across models provide more visible advantages relative to proprietary offerings from OpenAI and Microsoft, which led with first-mover advantage.

- Need for transparency and customization: In our opinion, enterprises are realizing the necessity for higher levels of transparency and customization in AI models. This has prompted greater interest in solutions that offer such capabilities and is challenging first movers.

- Shifting ROI expectations: We are observing a shift in ROI timelines. Initially, organizations were optimistic about quick ROI within three to six months. However, expectations are now extending beyond a year, indicating a more realistic approach to AI implementation.

We believe that as generative AI becomes integrated as a feature within existing products, it will serve as a sustaining innovation. To invoke Clay Christensen, this benefits incumbent companies by enhancing their current offerings without requiring a complete transformation of their business models.

Additional insights:

- Incremental value through integration: In our view, organizations can derive substantial incremental value by adopting generative AI features embedded in their existing products, rather than investing in building bespoke generative AI systems from scratch. Current research, however, continues to indicate high levels of experimentation with bespoke systems. We believe this trend will decline over time and embedded AI will become the dominant model.

- AI as a universal enhancer: In our view, this era of AI resembles the impact of the internet and has the potential to be a tide that lifts all ships, offering widespread benefits across industries.

In summary, the current conservatism around AI ROI is a natural part of the technology adoption cycle. We anticipate continued strong AI investment and innovation as organizations leverage open-source models and integrate generative AI features into existing products, leading to significant value creation and industry-wide advancement.

Future applications will feature a system of multiple agents

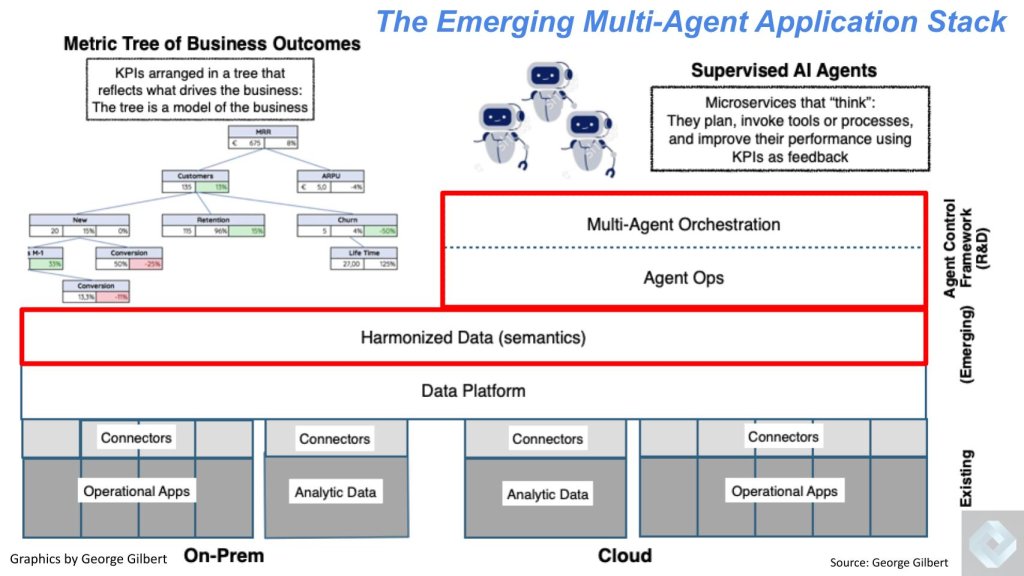

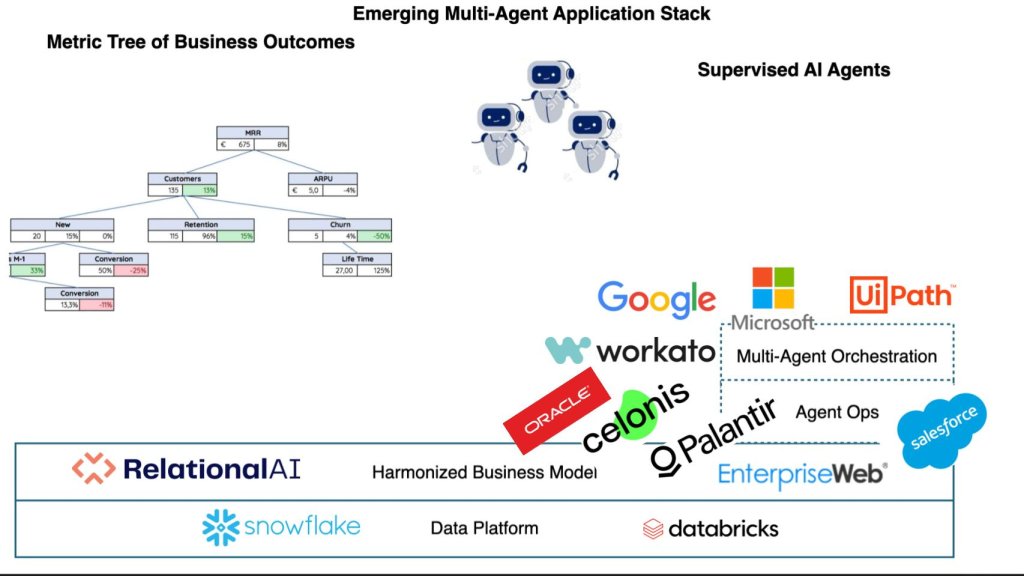

Let’s build on our previous research around agentic systems and get a glimpse into the future. Below we revisit our block diagram of the new, multi-agent application stack that we see emerging.

In the diagram, let’s work our way to the top. Starting at the bottom, we show two-way connections to the operational and analytic apps. Moving up the stack, we show the data platform layer, which has been popularized by the likes of Snowflake and Databricks. Above that, we see a new, emerging harmonization layer, sometimes called the semantic layer, which we’ve talked about a lot. Then we show multiple agents and an agentic operation and orchestration module.

Many people talk about single agents. We’re talking here about multiple agents that can work together that are guided by top-down key performance indicators and organizational goals. You can see those in a tree formation on the upper left in the diagram. The whole idea here is the agents are working in concert, they’re guided by those top-down objectives, but they’re executing a bottom-up plan to meet those objectives.
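
To illustrate the idea of top-down objectives paired with a bottom-up plan, here is a minimal Python sketch under our own assumptions. The `Goal` and `Agent` classes, the goal names and the numeric targets are all hypothetical; real agents would reason with models rather than carry a fixed estimate.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    """Hypothetical agent that estimates how much it can move its assigned metric."""
    name: str
    expected_gain: float  # illustrative units, e.g. points of churn reduction


@dataclass
class Goal:
    """A node in the top-down objective tree; only leaf goals are assigned an agent."""
    name: str
    target: float
    children: list["Goal"] = field(default_factory=list)
    agent: Optional[Agent] = None


def collect_plans(goal: Goal) -> list[tuple[str, str, float]]:
    """Walk the tree and gather each leaf agent's proposal (the bottom-up plan)."""
    if goal.agent is not None:
        return [(goal.agent.name, goal.name, goal.agent.expected_gain)]
    plans = []
    for child in goal.children:
        plans.extend(collect_plans(child))
    return plans


def rolled_up_gain(goal: Goal) -> float:
    """Sum the leaf estimates back up so they can be compared with the top-level target."""
    if goal.agent is not None:
        return goal.agent.expected_gain
    return sum(rolled_up_gain(child) for child in goal.children)


# Hypothetical objective tree with two leaf agents.
root = Goal(
    name="Reduce customer churn",
    target=5.0,
    children=[
        Goal("Resolve returns faster", 3.0, agent=Agent("returns_agent", 2.5)),
        Goal("Improve on-time delivery", 2.0, agent=Agent("logistics_agent", 2.0)),
    ],
)

plans = collect_plans(root)
gap = root.target - rolled_up_gain(root)
print(plans)
print(f"Gap to top-level target: {gap}")
```

The design choice worth noting is that the tree carries the objectives downward while the estimates flow upward, so a shortfall against the top-level target is visible before any agent acts.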

The other point is, unlike hard-coded microservices, these swarms of agents can observe human behavior, which can’t necessarily be hard-coded. Over time, agents learn and then respond to create novel and even more productive workflows to become a real-time representation of a business.

One final point is that we believe every application company and every data company is going to be introducing its own agents. Our expectation is customers will be able to deploy these agents and tap incremental value, whether it’s talking to their enterprise data through natural language or more easily building workflows in their applications.

But what they’ll quickly find is that they’re just reinforcing the existing islands of applications, automation and analytic data. The broader framework we’re putting forth will need to evolve gradually over time to deliver the full productivity value that comes from end-to-end integration and automation.

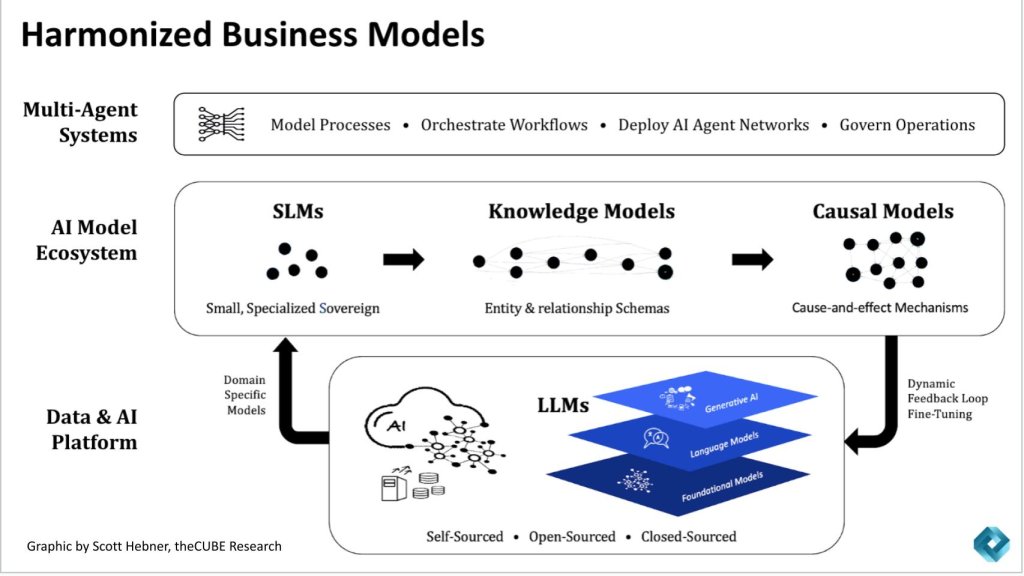

Double-clicking on the harmonization layer

In the previous diagram we highlighted the harmonization layer in red. Let’s describe that layer more fully using the diagram below. We believe the development of intelligent, adaptive systems resembles an iceberg, where agents represent the visible tip above water, but the substantial complexity lies beneath the surface. We believe that transitioning from semantic design to intelligent, adaptive, governed design is crucial for empowering these agents effectively.

Key components of this ecosystem include:

- Data and AI platform: This foundational layer consists of a data fabric combined with the capabilities to build, manage, and govern AI models.

- Large language models:

  - Central role: In our opinion, LLMs serve as the heart and soul of the system, housing generally applicable data and information.

  - Customization and optimization: As described in the Gen AI Power Law, enterprises are increasingly customizing LLMs for specific business needs. These models can be:

    - Self-sourced within an organization.

    - Open-sourced and tailored.

    - Public or closed source accessed via APIs from various providers.

- Small language models:

  - Specialized functions: More than just smaller versions, SLMs are specialized, sovereign and secure.

  - Ecosystem collaboration: We believe there is a need for an ecosystem where SLMs partner and collaborate with LLMs to enhance the system’s overall functionality.

  - Derivative works: SLMs are developed from LLMs and incorporate:

    - Knowledge graphs: Representing entities and relationships within the business.

    - Causal models: Understanding the mechanics of cause and effect.

  - Self-education cycle: As these models interact, they self-educate, leading to continuous improvement. LLMs become smarter through input from SLMs, creating an architected ecosystem of models.

- Agent orchestration layer:

  - This layer enables the orchestration and construction of multi-network agents, which operate based on the intelligence gathered from both LLMs and SLMs.
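
To make the knowledge graph component above more tangible, the following Python sketch stores business entities and relationships as simple triples and follows a chain of relationships. It is a toy under our own assumptions: the `KnowledgeGraph` class, the entity identifiers and the relation names are hypothetical and do not reflect any vendor’s implementation.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy triple store: (subject, relation, object) facts about the business."""

    def __init__(self) -> None:
        self._edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        """Record one fact."""
        self._edges[subject].append((relation, obj))

    def objects(self, subject: str, relation: str) -> list[str]:
        """Entities reachable from `subject` through a single `relation` edge."""
        return [o for r, o in self._edges.get(subject, []) if r == relation]

    def follow(self, start: str, relations: list[str]) -> set[str]:
        """Follow a chain of relations from `start` and return the entities reached."""
        frontier = {start}
        for relation in relations:
            frontier = {o for s in frontier for o in self.objects(s, relation)}
        return frontier


# Hypothetical facts that an agent could reason over.
kg = KnowledgeGraph()
kg.add("customer:alice", "placed", "order:1001")
kg.add("order:1001", "contains", "product:blender")
kg.add("product:blender", "stocked_at", "warehouse:east")

# Which warehouses hold the products this customer ordered?
print(kg.follow("customer:alice", ["placed", "contains", "stocked_at"]))
```

The point of the sketch is that relationships are first-class data, so a question that spans customers, orders and warehouses becomes a path traversal rather than logic buried in one application.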

We believe this entire system becomes autodidactic and self-improving. We note the significant importance of this harmonization layer as a key enabler of agentic systems. This is new intellectual property that we see existing ISVs (e.g., Salesforce Inc. and Palantir Technologies Inc.) building into their platforms, and third parties (e.g., RelationalAI and EnterpriseWeb LLC) building across application platforms.

Additional insights include:

- Synergistic collaboration:

  - Historically, application development involved separate personas for data modeling and application logic, operating in different realms.

  - In our view, a similar scenario exists today, where the causal layer (a digital twin of the business) and agents (task-specific applications) must work in unison.

  - Agents need a synchronized view of the business state; otherwise, unsynchronized actions could disrupt system coherence.

- Capturing tribal knowledge:

  - The system, in theory, addresses the loss of organizational process expertise when key personnel depart.

  - Expertise is captured within the system, allowing domain experts to build agents or metrics without relying on centralized data engineering teams.

  - Agents can be created by expressing rules in natural language, connected directly to business goals (e.g., reducing customer churn), enabling continuous learning and optimization.

- Shift from correlation to causality:

  - Traditional models rely on statistical correlation based on historical data, suitable for static environments.

  - We believe incorporating causality accounts for dynamic changes in the business world, as causality considers how probabilities evolve with changing conditions.

  - Focusing on causal AI elevates knowledge models and enhances both SLMs and LLMs through bidirectional learning, providing a more accurate reflection of real-world dynamics.

- Organic system mimicry:

  - The entire ecosystem mimics an organic system, where learning and adaptation occur naturally.

  - By uncovering complex causal drivers and relationships, the system enables agents to collaborate in a more organized and intelligent manner.
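
The shift from correlation to causality described above can be illustrated with a small simulation. The Python sketch below is built entirely on assumptions we made up for illustration: a hypothetical business that targets retention discounts at at-risk customers, with invented churn probabilities. It shows how historical data can make a helpful action look harmful, while an intervention (a simple “what-if”) reveals the actual effect.

```python
import random


def simulate(n, rng, policy):
    """Simulate n customers; policy(at_risk, rng) decides who receives a discount.

    Returns a list of (discount, churned) pairs. All probabilities are invented.
    """
    rows = []
    for _ in range(n):
        at_risk = rng.random() < 0.5
        discount = policy(at_risk, rng)
        # At-risk customers churn more often; a discount lowers churn by 10 points.
        p_churn = (0.6 if at_risk else 0.1) - (0.1 if discount else 0.0)
        rows.append((discount, rng.random() < p_churn))
    return rows


def churn_rate(rows, with_discount):
    """Share of customers who churned among those with the given discount status."""
    outcomes = [churned for discount, churned in rows if discount == with_discount]
    return sum(outcomes) / len(outcomes)


def targeted(at_risk, rng):
    """Historical policy: discounts go mostly to customers already at risk."""
    return rng.random() < (0.9 if at_risk else 0.1)


def always(at_risk, rng):
    """Intervention: everyone gets the discount."""
    return True


def never(at_risk, rng):
    """Intervention: nobody gets the discount."""
    return False


rng = random.Random(7)

# Correlation: compare churn by discount status in historical (confounded) data.
observed = simulate(50_000, rng, targeted)
observed_gap = churn_rate(observed, True) - churn_rate(observed, False)

# Causation: force the action on or off and compare outcomes.
forced_on = simulate(50_000, rng, always)
forced_off = simulate(50_000, rng, never)
causal_gap = churn_rate(forced_on, True) - churn_rate(forced_off, False)

print(f"observed gap: {observed_gap:+.3f}")
print(f"causal gap:   {causal_gap:+.3f}")
```

Under these assumptions the observed gap is positive, because discounted customers were riskier to begin with, while the causal gap is negative, because the discount itself reduces churn. A model that only learns the historical correlation would recommend the wrong action.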

In summary, transitioning to an intelligent, adaptive design supported by a coordinated ecosystem of LLMs and SLMs is essential to maximize enterprise value. By integrating causal AI and fostering organic, self-learning systems, organizations will be able to unlock deeper insights, retain critical expertise and build more effective agentic systems that accurately reflect and adapt to the dynamic nature of modern businesses.

Digging into the agent control framework

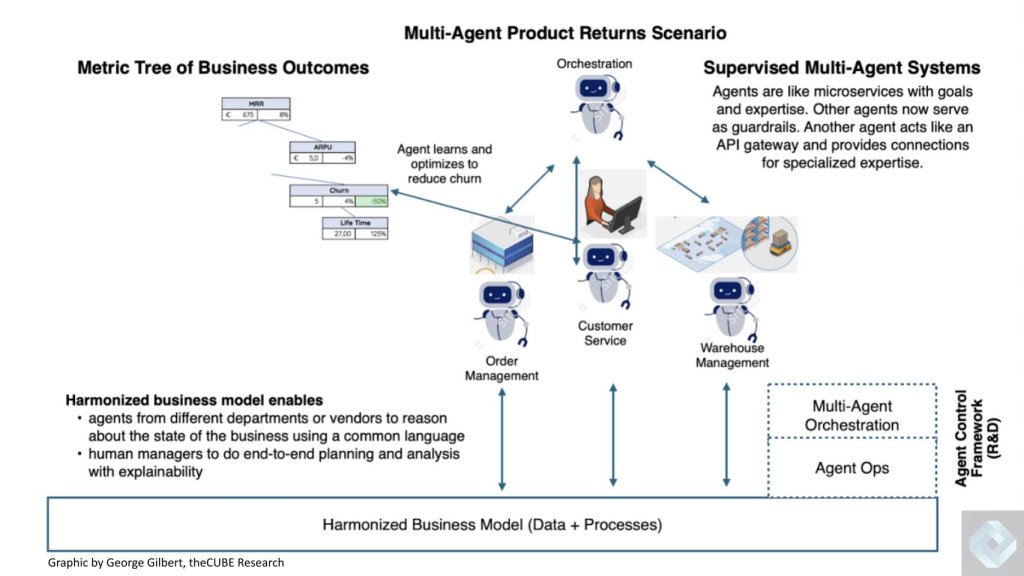

A high value piece of real estate in this emerging stack is what we refer to as the agent control framework. In the following section, we’ll explain that in detail, using a product return use case.

Our previous research indicates that the evolution of LLMs and intelligent agents is bringing us closer to realizing a real-time digital representation of organizations — a vision we’ve likened to “Uber for all.” We believe that to support this vision, an agent control framework is essential for organizing and managing AI agents effectively.

Key additional analysis and insights follow:

- Necessity of an agent control framework:

  - Organizing agents: Similar to an API gateway, an agent control framework organizes agents in a hierarchy of functional specialization.

  - Use case example: A consumer interacts with a customer service AI agent regarding a product return. The agent aims to resolve routine interactions without human escalation by communicating with an orchestration agent. This orchestration agent connects with order management and warehouse system agents to manage inventory and replacement processes.

  - Harmonized business layer: Specialized agents utilize a harmonized business layer to reason about inventory, order status and logistics using common assumptions.

  - Enhanced reliability and performance: In our opinion, operating a multi-agent system across multiple vendors requires agents to work with a common language about the state of the business. This harmonization enhances reliability, precision and performance, distinguishing probabilistic agents from traditional symbolic code.

- Transition from correlation to causality in AI:

  - Limitations of current AI: Today’s AI relies on probabilistic statistics, identifying patterns and making predictions based on historical data, a static world view.

  - Need for dynamic understanding: We believe AI must adapt to understand cause and effect to reflect the dynamic nature of businesses and human reasoning.

  - Causality in practice: Incorporating causality allows AI to determine not just that a problem exists (e.g., customer churn) but why it exists and what actions can best address it.

  - Architectural integration: Combining LLMs, SLMs, knowledge graphs and causal models under agent systems will incrementally improve ROI over time.

- Synergy between agents and business models:

  - Rich business metrics: The relationships between business measures become richer and more dynamic when incorporating probabilistic and causal relationships.

  - Learning systems: In our view, AI systems should function as learning systems where plans are made with causal assumptions, outcomes are reviewed, and learnings are incorporated back into the business model for continuous improvement.

  - Root-cause analysis example: Understanding the root cause of issues like customer churn enables AI to recommend the best course of action through “what-if” modeling, helping businesses decide among various interventions.

- Collaborative agent ecosystems:

  - Dynamic underpinnings: A continuously learning and dynamic foundation is necessary to support the synergy between agents and the harmonized business layer.

  - Iterative human-AI interaction: Agents run “what-if” scenarios and propose next-best actions or plans, which are then evaluated by humans in an iterative process to refine strategies.
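
The product return use case above can be sketched in a few lines of Python. This is a deliberately simplified illustration under our own assumptions: every class, method and status name is hypothetical, the agents are deterministic stand-ins for what would be model-backed components, and `BusinessState` is only a placeholder for the harmonized business layer.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class BusinessState:
    """Stand-in for the harmonized layer: one shared view of orders and stock."""
    orders: dict[str, dict] = field(default_factory=dict)
    inventory: dict[str, int] = field(default_factory=dict)


class OrderAgent:
    """Specialized agent for order management."""

    def __init__(self, state: BusinessState) -> None:
        self.state = state

    def open_return(self, order_id: str) -> Optional[str]:
        """Open a return for a delivered order and report its SKU, else None."""
        order = self.state.orders.get(order_id)
        if order is None or order["status"] != "delivered":
            return None
        order["status"] = "return_opened"
        return order["sku"]


class WarehouseAgent:
    """Specialized agent for inventory."""

    def __init__(self, state: BusinessState) -> None:
        self.state = state

    def reserve_replacement(self, sku: str) -> bool:
        """Reserve one unit if stock is available in the shared state."""
        if self.state.inventory.get(sku, 0) > 0:
            self.state.inventory[sku] -= 1
            return True
        return False


class OrchestrationAgent:
    """Coordinates the specialized agents for a single return."""

    def __init__(self, orders: OrderAgent, warehouse: WarehouseAgent) -> None:
        self.orders = orders
        self.warehouse = warehouse

    def handle_return(self, order_id: str) -> str:
        sku = self.orders.open_return(order_id)
        if sku is None:
            return "escalate_to_human"  # not a routine case
        if self.warehouse.reserve_replacement(sku):
            return "replacement_reserved"
        return "refund_issued"


class CustomerServiceAgent:
    """Front-line agent: routes routine returns, escalates everything else."""

    def __init__(self, orchestrator: OrchestrationAgent) -> None:
        self.orchestrator = orchestrator

    def handle(self, request: dict) -> str:
        if request.get("intent") != "return":
            return "escalate_to_human"
        return self.orchestrator.handle_return(request["order_id"])


def build_demo() -> tuple[CustomerServiceAgent, BusinessState]:
    """Wire the hierarchy around one shared, hypothetical business state."""
    state = BusinessState(
        orders={
            "A100": {"sku": "blender", "status": "delivered"},
            "A101": {"sku": "blender", "status": "delivered"},
        },
        inventory={"blender": 1},
    )
    orchestrator = OrchestrationAgent(OrderAgent(state), WarehouseAgent(state))
    return CustomerServiceAgent(orchestrator), state


service, state = build_demo()
print(service.handle({"intent": "return", "order_id": "A100"}))
print(service.handle({"intent": "return", "order_id": "A101"}))
print(service.handle({"intent": "return", "order_id": "Z999"}))
```

Because both specialized agents read and write the same `BusinessState`, the second return sees that the last blender is already reserved and falls back to a refund. That shared view is the role the text assigns to the harmonized business layer.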

In summary, we believe that developing an agent control framework and integrating causality into AI systems are crucial steps toward creating dynamic, self-learning ecosystems. By harmonizing business models and fostering synergy between various AI components, organizations can enhance reliability, precision and performance, ultimately achieving deeper insights and more effective decision-making.

Ecosystem participants and implications for future innovation

We’ll close with a discussion of the ecosystem and some examples of firms we see investing to advance this vision. Note this is not an all-encompassing list of firms, but rather a sample of companies within the harmonization layer and the agent control framework. Below, we took the agentic stack from the previous chart, simplified it and superimposed some of the players we see evolving in this direction.

Today, business logic, data and metadata are “locked” inside of application domains. We see two innovation vectors emerging in this future system, specifically: 1) existing ISVs adding harmonization and agent control framework capabilities; and 2) tech firms with a vision to create horizontal capabilities that transcend a single application domain. Importantly, these vectors are not mutually exclusive, as we see some firms trying to do both, which we describe in more detail below.

Key analysis and insights include:

- Emergence of application vendors in multiple layers:

  - Harmonization and agent layers: Companies like Microsoft, Oracle, Palantir, Salesforce and Celonis are actively building both the harmonization layer and the multi-agent orchestration and operations layer within their application domains.

  - Foundation on existing data platforms: These developments are built upon today’s modern data platforms such as Snowflake, Databricks, Amazon Web Services, Google BigQuery and others.

- Horizontal enablement by specialized firms:

  - Broad applicability: Firms such as RelationalAI and EnterpriseWeb (harmonization layer); and UiPath Inc., Workato Inc. and others (agent control layer) are working to enable these systems to scale horizontally across any application domain through integration and new intellectual property (“Uber for all”).

  - Ecosystem evolution: We believe these companies are instrumental in evolving the ecosystem by providing tools and platforms that are accessible to a wider range of businesses.

- Fundamental changes in database systems:

  - From relational to graph databases: The harmonized business model represents a fundamental technical shift from traditional data platforms. It moves from relational databases to graph databases, marrying application logic with database persistence and transactions.

  - A new layer in enterprise software: In our opinion, this is the most significant addition to enterprise software since the emergence of the relational database in the early 1970s.

  - Addition of AI agents: On top of this new database layer, the integration of AI agents adds another layer of complexity and capability, marking a powerful industry transition.

- Inclusion of major players:

  - Signals from recent announcements by Oracle and Salesforce: Participation from major vendors such as Oracle (see our analysis from Oracle Cloud World) and Salesforce (see our analysis of Dreamforce/Agentforce) demonstrates how existing platform companies are pursuing these developments, delivering on the harmonization layer by combining the expressiveness of knowledge graphs with the query simplicity and flexibility of SQL. These leaders are unifying data and metadata and setting up the future enablement for agents to take action in a governed manner.

- Importance of the harmonization layer:

  - Three critical attributes for enterprises:

    - Unique business language: It must understand the unique language of each business, including industry-specific terminology, regional nuances and customer segments.

    - Unique workflows and automation: Every business has distinct automation needs and processes that require customization.

    - Trust and transparency: Trust and transparency have different meanings across organizations, influenced by corporate policies and regulatory requirements.

  - Driving open-source growth: We believe these requirements are fueling rapid growth in open-source models, as they offer the customization and flexibility needed to address unique business needs.

- Open source as a catalyst:

  - Dominance in the AI marketplace: Open-source models are growing faster than closed-source alternatives. Platforms such as Hugging Face have surpassed 1 million models, with approximately 80% to 90% being open source.

  - Advantages of open source:

    - Customization: Allows businesses to build unique language models, automation systems and trust mechanisms.

    - Private cloud deployment: Enables running models on private clouds for enhanced sovereignty and control.

    - Flexibility and standards: Open-source models offer greater flexibility and are likely to become the industry standard, as seen in previous technological transformations.

We believe that this shift mirrors past industry trends where open source and open standards became the norm. The integration of harmonized business models and AI agents represents a transformative period in enterprise software, with open-source solutions playing a pivotal role in meeting the unique needs of modern businesses.

In summary, the industry is undergoing a significant transformation driven by the emergence of new foundational layers in enterprise software. The harmonization of business models and the addition of AI agents will enable unprecedented capabilities and productivity gains in our view – perhaps 10 times relative to today’s partially automated enterprises. This evolution is further accelerated by the adoption of open-source models, which provide the customization, flexibility and trust required by enterprises today. We anticipate that open source and open standards will once again become the industry reality, shaping the future landscape of AI and enterprise technology.

Image: theCUBE Research

Disclaimer: All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by News Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of Wikibon. None of these firms or other companies have any editorial control over or advanced viewing of what’s published in Breaking Analysis.
