Table of Links

Abstract and 1 Introduction

2.1 Software Testing

2.2 Gamification of Software Testing

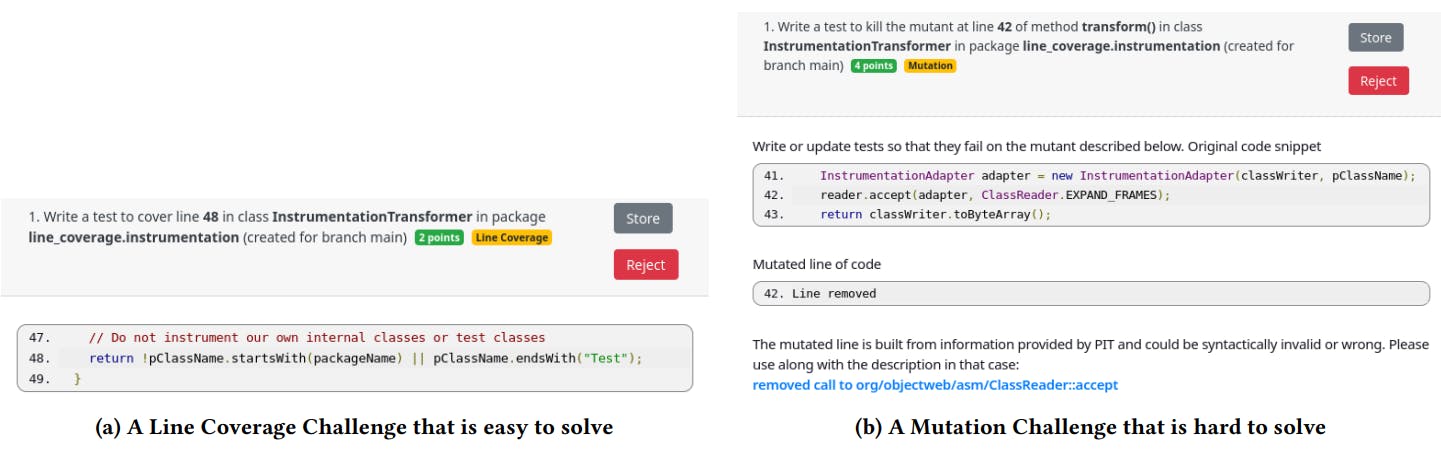

3 Gamifying Continuous Integration and 3.1 Challenges in Teaching Software Testing

3.2 Gamification Elements of Gamekins

3.3 Gamified Elements and the Testing Curriculum

4 Experiment Setup and 4.1 Software Testing Course

4.2 Integration of Gamekins and 4.3 Participants

4.4 Data Analysis

4.5 Threats to Validity

5.1 RQ1: How did the students use Gamekins during the course?

5.2 RQ2: What testing behavior did the students exhibit?

5.3 RQ3: How did the students perceive the integration of Gamekins into their projects?

6 Related Work

7 Conclusions, Acknowledgments, and References

5.2 RQ2: What testing behavior did the students exhibit?

On average, the students wrote 14.8 tests for the Analyzer project and 21.8 for the Fuzzer (Fig. 7c). One goal of the assignments was to achieve 100 % line coverage (Fig. 7a), which the students came close to in both projects, with mean coverage of 91.9 % and 95.5 %, respectively. In terms of mutation scores (Fig. 7b), the students scored higher on the Analyzer (mean 78.2 %) than on the Fuzzer (mean 65.2 %). This may suggest that implementing and testing the Fuzzer was more challenging, for example, because it required mocking techniques and special handling of edge cases. Despite this, the students received good grades overall for both projects (Fig. 7e), with mean grades of 87.4 % and 89.6 %, respectively. The students executed their projects in the CI more than 1300 times, averaging almost 26 runs per student, and the majority of these runs were successful (93 %). The high number of runs can be attributed to the number of commits in both projects, as depicted in Fig. 7d (15 for the Analyzer and 30 for the Fuzzer).
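The coverage and mutation-score figures above are both ratios, and the gap between them (high coverage, lower mutation score) is easier to see with the definitions written out. The sketch below assumes the usual textbook definitions: line coverage as covered over executable lines, and mutation score as killed over generated mutants. Some tools count only mutants reached by tests in the denominator, so real reports may differ. The class `ProjectMetrics`, the function names, and the example counts are hypothetical and not taken from Gamekins or the course data.

```python
from dataclasses import dataclass


@dataclass
class ProjectMetrics:
    """Hypothetical raw counts for one student project."""
    covered_lines: int
    executable_lines: int
    killed_mutants: int
    total_mutants: int


def line_coverage(m: ProjectMetrics) -> float:
    """Percentage of executable lines executed by at least one test."""
    if m.executable_lines == 0:
        return 0.0
    return 100.0 * m.covered_lines / m.executable_lines


def mutation_score(m: ProjectMetrics) -> float:
    """Percentage of generated mutants detected (killed) by the test suite."""
    if m.total_mutants == 0:
        return 0.0
    return 100.0 * m.killed_mutants / m.total_mutants


# Illustrative numbers only: executing a line is not the same as checking
# its result, so coverage can be high while many mutants survive.
example = ProjectMetrics(covered_lines=190, executable_lines=200,
                         killed_mutants=65, total_mutants=100)
print(f"coverage {line_coverage(example):.1f} %, "
      f"mutation score {mutation_score(example):.1f} %")
```

Returning 0.0 when there is nothing to measure is a convention chosen for this sketch; a report could just as well show the metric as undefined.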

For both projects combined, there are significant correlations between the points participants accumulated in Gamekins and several other measures: coverage (Fig. 8a and Fig. 8b), mutation score (Fig. 8c), number of tests (Fig. 8d), grade (Fig. 8e), and number of commits (Fig. 8f). These positive correlations show that engagement with Gamekins and positive testing behavior are indeed related, which is important because (1) it confirms that the gamification elements are meaningful and do not distract students, and (2) it suggests that challenges and quests are effective metrics for comparing and grading students in a software testing course.
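This excerpt does not say which correlation coefficient was used. As an assumption, the sketch below uses Spearman's rank correlation, a common choice for non-normal data such as game points and grades. It computes ρ as the Pearson correlation of average ranks, so tied values are handled. The function names and the sample data are hypothetical and only illustrate the calculation.

```python
from math import sqrt


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of equal values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho: the Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least 2 values")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: Gamekins points and mutation scores of five students.
points = [120, 340, 90, 410, 250]
mutation = [61.0, 72.5, 58.0, 84.0, 80.5]
print(round(spearman(points, mutation), 3))
```

The sketch does not guard against a constant sample, for which ρ is undefined and the division fails. A significance test for ρ would be a separate step not shown here.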

Compared to the class of 2019, which did not use Gamekins, both classes performed similarly in terms of the number of tests (Fig. 7c) and line coverage (Fig. 7a). Mutation scores were higher in 2022 (Fig. 7b), although the difference is not statistically significant (𝑝 = 0.11). The slight increase may be because students in 2019 stopped testing their projects once they reached 100 % coverage, whereas students in 2022 continued to solve Mutation Challenges even after reaching high coverage. There is a significant difference (𝑝 < 0.001) in the correctness of the Analyzer's outputs (Fig. 7f): in 2022, almost all students produced correct outputs (mean 10.6), whereas students in 2019 had a lower mean of 8.3. This suggests that using Gamekins helped students identify bugs that led to incorrect output. This conjecture is supported by the survey responses (G15), in which two-thirds of the students stated that they discovered bugs while using Gamekins.
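The p-values above compare two independent cohorts, but this excerpt does not name the test. Assuming a two-sided Mann-Whitney U test, a common non-parametric choice for such comparisons, the sketch below computes U from rank sums and a p-value from the normal approximation without a tie correction of the variance. That approximation is rough for small samples; in practice a library routine such as SciPy's `scipy.stats.mannwhitneyu` would be used. The function names and the commit counts are hypothetical, not the study's data.

```python
from math import erf, sqrt


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return (1 + erf(z / sqrt(2))) / 2


def mann_whitney_u(a: list[float], b: list[float]) -> tuple[float, float]:
    """Two-sided Mann-Whitney U test, normal approximation, no tie correction.

    Returns (U, p), where U is the smaller of the two U statistics.
    """
    n1, n2 = len(a), len(b)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    ranks = average_ranks(a + b)  # rank the pooled sample
    r1 = sum(ranks[:n1])  # rank sum of sample a
    u1 = r1 - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u  # z <= 0 because u is the smaller statistic
    return u, 2 * normal_cdf(z)


# Hypothetical commit counts per student for two cohorts.
commits_2019 = [5, 7, 6, 9, 4, 8, 7]
commits_2022 = [14, 12, 18, 15, 11, 16, 19]
u, p = mann_whitney_u(commits_2019, commits_2022)
print(f"U = {u}, p = {p:.4f}")
```

Taking the smaller U makes the result independent of which cohort is passed first, so the two-sided p-value is simply twice the lower-tail probability.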

The commit histories also provide evidence of bugs being found, with individual commits referring to bug fixes. More generally, students in the 2022 course committed their code in a more fine-grained manner (Fig. 7d), which reflects their interaction with Gamekins. The mean number of commits in 2022 was 15, compared to 7 in 2019; this difference is statistically significant (𝑝 < 0.001). Figure 10 shows an example commit history (git log) of a 2022 student who committed tests individually, whereas Fig. 9 shows the behavior common in 2019 without Gamekins, where students committed their code in only a few commits. Overall, these findings suggest that the students in 2022 were more diligent in committing their code and addressing bugs.

Authors:

(1) Philipp Straubinger, University of Passau, Germany;

(2) Gordon Fraser, University of Passau, Germany.
