Prakhar Khanna / Android Authority

TL;DR

- Google’s working to bring its Labs experiments to Gemini.

- Ahead of that availability, we’ve spotted a few Labs options referenced in the Google app.

- Among the experiments in development, we could get some big Gemini Live upgrades and new agentic options.

Keeping up with AI developments can feel like watching a race where the participants are spread far apart along the course. Far off in the distance, we can see new ideas taking shape, but it’s often anyone’s guess how long it will be before they’re ready for prime time. Last year, one of the big steps Google shared was Project Astra, a “universal AI assistant” able to work with apps across your phone. After getting our first taste last summer, we’re taking a look at where some of those Astra ambitions may be headed next.

Google’s been working to bring Gemini users a preview of some new in-development features, and last month we uncovered signs of a new Gemini Labs section of the app that would enable this access. Today we’re building upon that with the identification of a few new Labs options.


As you may recall, “robin” is a codename we’ve associated with Gemini before, and in version 17.2.51.sa.arm64 of the Google app for Android, we’re seeing it pop up in several new strings:

```
Live Experimental Features
Try our cutting-edge features: multimodal memory, better noise handling, responding when it sees something, and personalized results based on your Google apps.

Live Thinking Mode
Try a version of Gemini Live that takes time to think and provide more detailed responses.

Deep Research
Delegate complex research tasks

UI Control
Agent controls phone to complete tasks
```

First up we’ve got Live Experimental Features, which looks like it’s going to cover quite a few improvements. Beyond practical tweaks like that improved noise rejection for Gemini Live, the ability to respond to what it sees feels like it could be in Astra territory.

Then we have Live Thinking Mode, teasing deeper analysis even when using Gemini Live. Last year, we saw Gemini offer a split between a Fast model using Gemini 2.5 and a Thinking model based on Gemini 3 Pro. Presumably, this mode brings that Thinking option to Gemini Live.

Next, there’s Deep Research. Gemini has had a Deep Research mode for a while now, and we’ve already seen it get better, so we’re very curious what further improvements may await in this Labs preview.

Finally, UI Control promises an agentic mode that can interact with apps on your phone to get work done. Sensing a theme across some of these? We got Gemini Agent with Gemini 3, but this Labs option may end up being far more versatile than an agent tied to your Chrome browser.

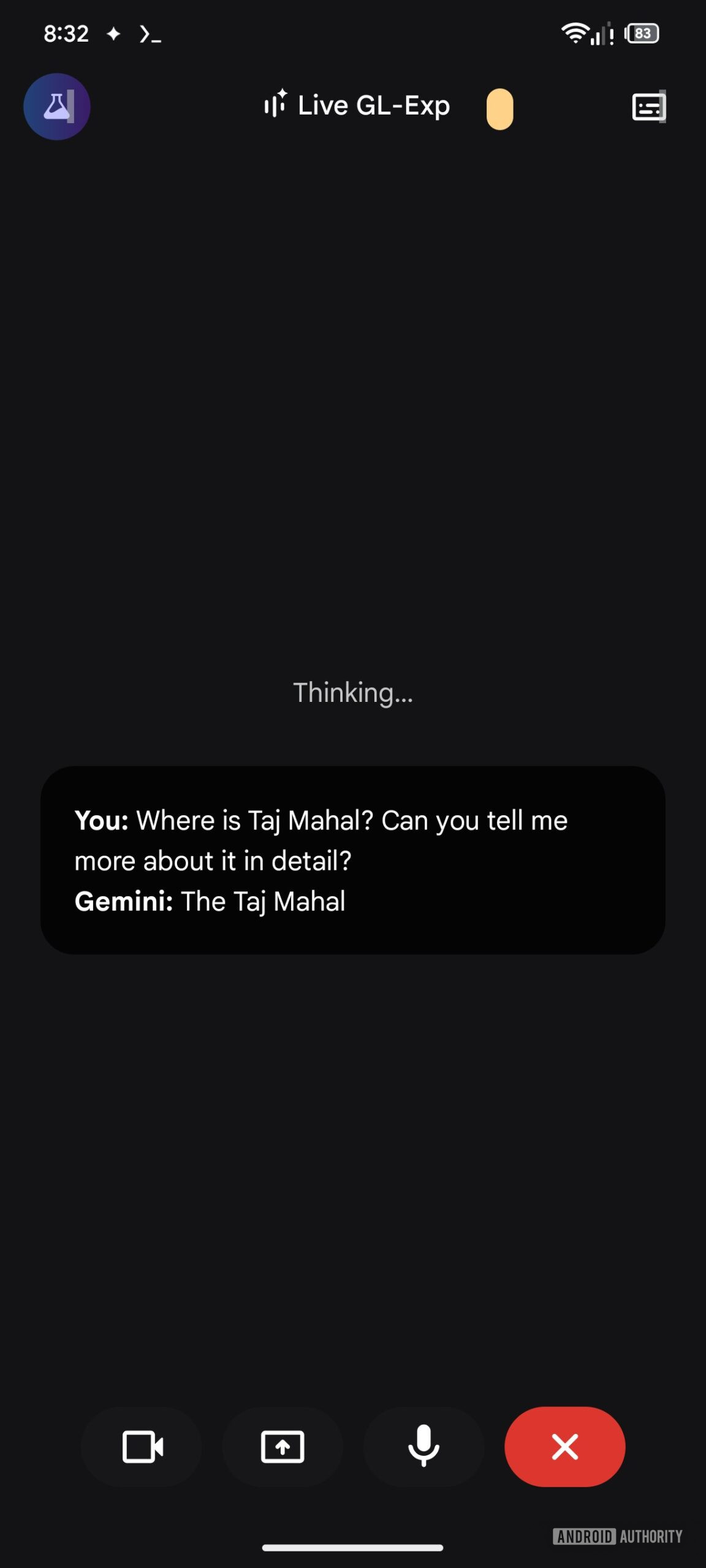

While we’re not yet able to interact with most of these in a useful way, we can see that Google intends to make them all individually selectable, exposing you to only the experiments you want. We have been able to coax a small UI preview out of the app, even with the Labs icon so far unresponsive:

AssembleDebug / Android Authority

There’s that “Thinking…” message that presumably applies to Live Thinking Mode, plus we see that “GL – Exp” label up top, which sure seems to indicate that Live Experimental Features are enabled.

Right now, none of this is public-facing, but with these strings now present under the hood, we wonder how long it will be before Google’s ready to start giving us access.

⚠️ An APK teardown helps predict features that may arrive on a service in the future based on work-in-progress code. However, it is possible that such predicted features may not make it to a public release.
