Earlier this week, Google announced a wave of new AI features coming to the Chrome browser. As someone who spends nearly his entire day living inside a browser, I was eager to get my hands on them. I’ve been putting these tools through their paces on my laptop, and while some of them feel like a genuine win, others left me scratching my head — or just waiting for the progress bar to move.

A quick heads-up, though: Many of these features are currently in early access. They are primarily available to those with Google One AI Pro or AI Ultra subscriptions, and for now, you’ll need to be in the US and set your browser to English to give them a whirl.

Gemini in the sidebar: The context king

Rita El Khoury / Android Authority

Screenshot

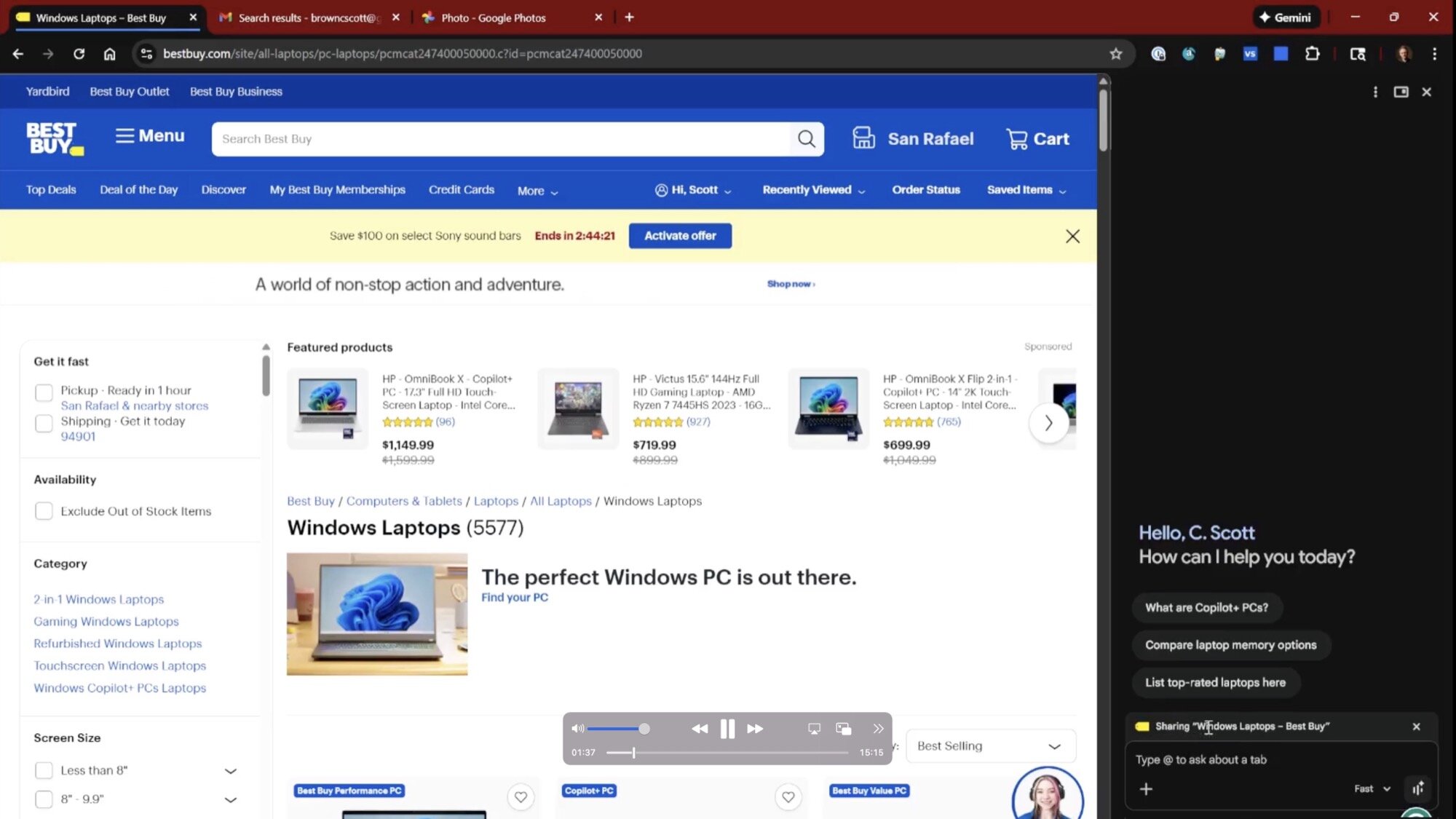

The first thing I tested was the new Gemini sidebar. Previously, if you wanted to chat with Gemini, it would pop up in its own separate window, effectively pulling you away from whatever you were doing. You could install shady third-party extensions for Gemini, but that wasn’t ideal. Now, Gemini lives in a convenient flyout sidebar that can “see” the tab you are currently viewing — exactly what my colleague Adamya asked for more than a year ago. This is a massive workflow improvement because it allows you to stay grounded in your current task while using AI as a research assistant.

To test its contextual awareness, I pulled up a page on Best Buy filled with Windows laptops. I asked Gemini a simple question: “Are there any laptops here that run Panther Lake?”

Gemini correctly identified that while Panther Lake chips (Intel’s latest and greatest) had recently hit the market, the specific Best Buy page I was looking at mostly featured previous-gen hardware. It even gave me a list of where I could find those newer chips. It was fast, accurate, and incredibly helpful for shopping. This is exactly what I want from a browser-integrated AI: the ability to sift through the overwhelming amount of information on a retail site and give me a straight answer without my having to Ctrl+F through every listing.

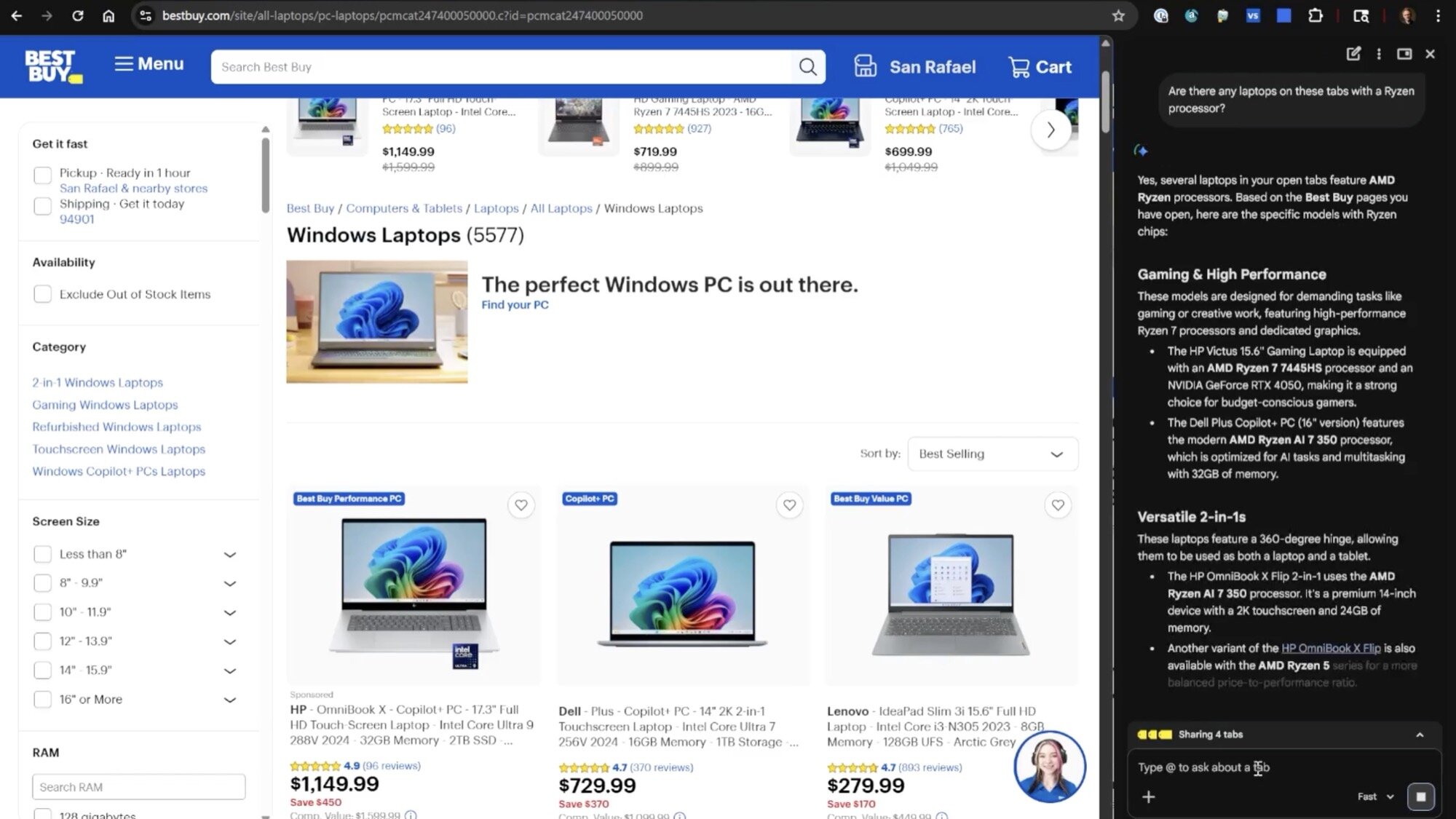

Managing multiple tabs with AI

Rita El Khoury / Android Authority

Screenshot

One of the more frustrating parts of using AI in a browser is keeping track of which information belongs to which tab. Chrome’s new system handles this quite well by allowing you to have different conversations for different tabs simultaneously. This effectively gives you a “memory” for each individual task you’re working on.

I opened a separate tab for an HP OmniBook X. When I switched back and forth between my Best Buy search results and the specific product page, the Gemini sidebar updated to show me the relevant conversation for that tab. This helps guard against “hallucination crossover,” where the AI might confuse the specs of one laptop with another.

Even cooler? You can now have one conversation that pulls data from multiple tabs at once. I opened three different laptop product pages in addition to the main search page and asked, “Are there any laptops on these tabs with a Ryzen processor?” Gemini scanned all four tabs (note the “Sharing 4 tabs” indicator above the text entry box) and correctly identified which models featured AMD silicon. This is a game-changer for comparison shopping or research. Instead of copy-pasting text from several different sources into a chat window, you let the browser understand the relationship between your open tabs.

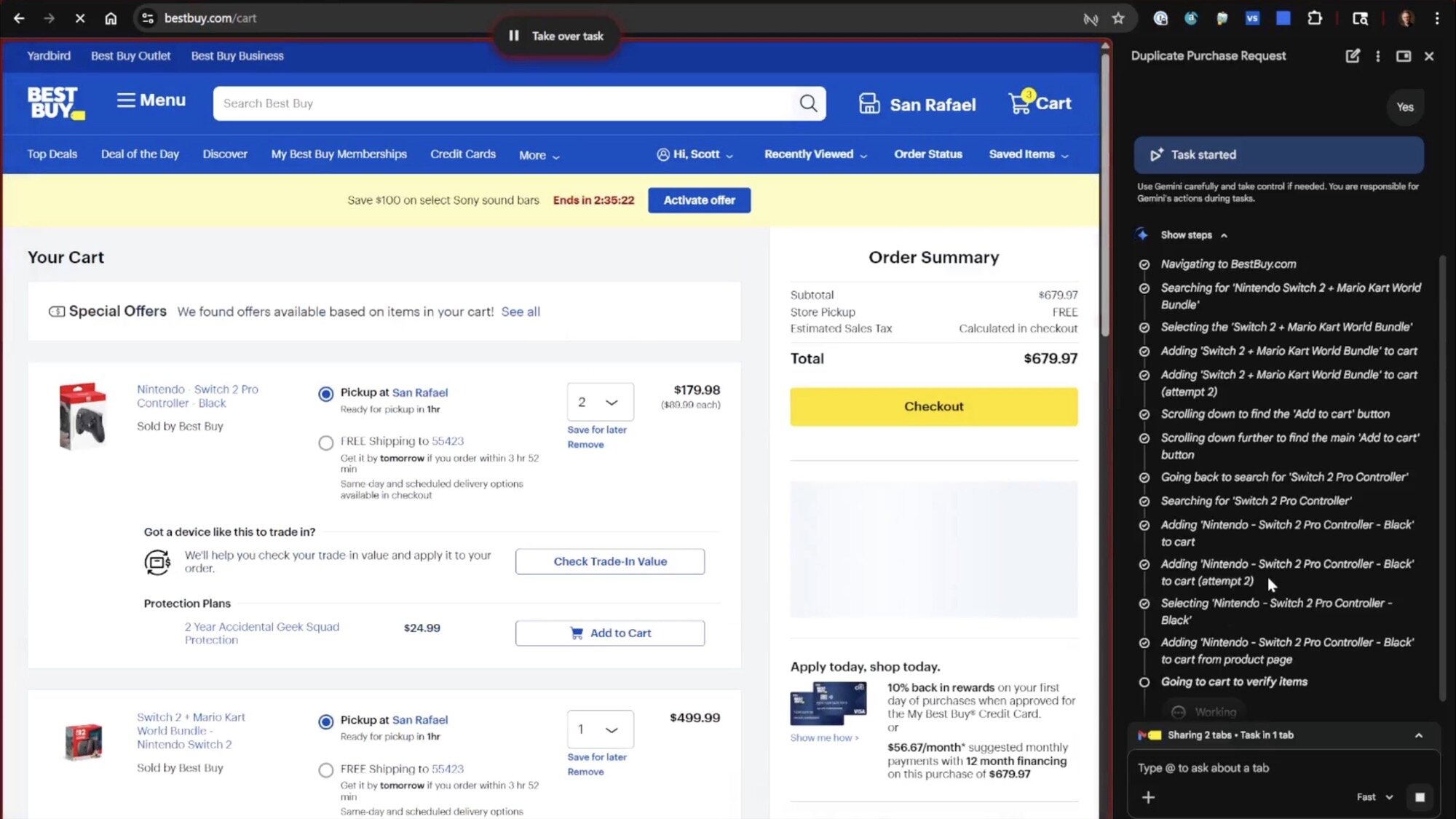

The AI Agent: Buying a Nintendo Switch (sort of)

Rita El Khoury / Android Authority

Screenshot

Google is heavily promoting the idea of an “agentic” AI experience: basically, an AI that can take actions for you. The big example it has used is the AI actually buying something on your behalf based on information found in your emails.

I decided to give this a real-world test. I recently bought a Nintendo Switch 2 bundle, so I asked Gemini to buy me the same thing using this query: “I recently bought a Nintendo console. Please go into my email and buy that exact console again.”

The process was a bit of a rollercoaster:

- The Handoff: Gemini successfully accessed my Gmail, found the receipt for the “Nintendo Switch 2 + Mario Kart World Bundle,” and even remembered that I had purchased a Pro Controller alongside it.

- The Navigation: It opened a new tab, navigated to Best Buy, and began searching for the items. You can actually see the agent at work, typing in search bars and clicking “Add to Cart” while a red tint covers the screen to warn you not to interfere.

- The Limitation: As a safety measure, Google will not let the AI actually hit the final “Place Order” button. It gets you all the way to the final checkout screen, and then asks you to take over to finish the transaction.

While impressive, it was undeniably slower than if I had just done it myself. I could have manually navigated to my order history and clicked “reorder” in half the time. However, the technical feat shouldn’t be ignored: the AI had to understand the context of a past purchase from a plain-text email, find the corresponding live products on a third-party retail site, and manage the shopping cart.

Assuming this process gets faster and smoother (which it undoubtedly will), it could be a big productivity boost. Instead of performing the clicks and scrolls of some mundane task yourself, you could just ask Gemini to do it for you while you make yourself a cup of tea.

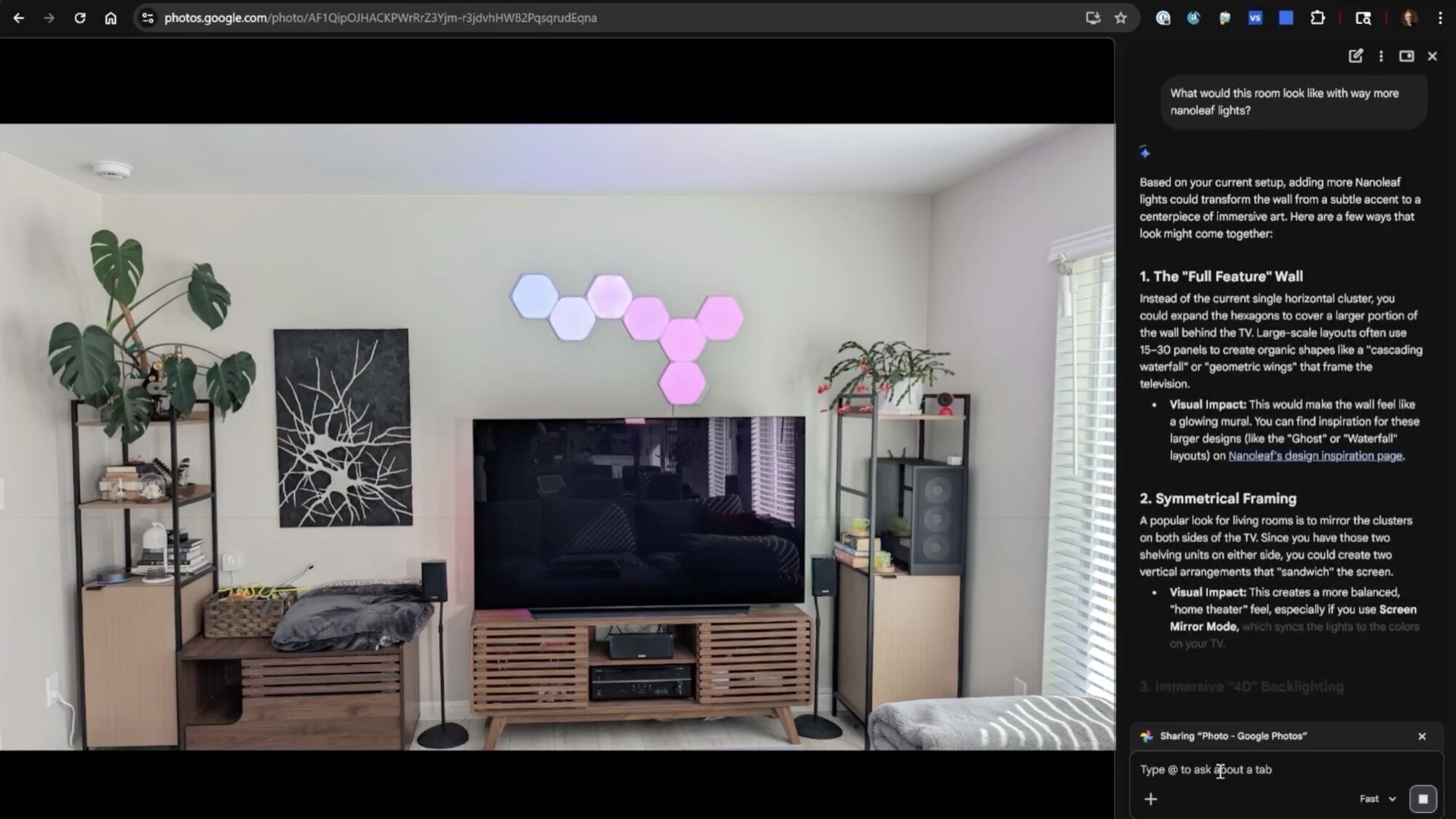

Image generation with Nano Banana

Rita El Khoury / Android Authority

Screenshot

Finally, I wanted to test the built-in image generation powered by Nano Banana. I took a photo of my living room — complete with some Nanoleaf lights on the wall — and asked Gemini: “What would this room look like with way more Nanoleaf lights?”

This is where the “beta” nature of these features really showed. Instead of generating a reimagined version of my room covered in Nanoleaf lights, Gemini just spit out a text list of design ideas, like “Symmetrical Framing” and “Immersive 4D Backlighting.” I tried a second time with a more specific prompt, asking it to actually render the image, but the result was the same.

It’s weird that this didn’t work. The AI could see that I had Nanoleaf lights, and it knew how to talk about lighting design, but it couldn’t yet perform the style transfer or generative fill required to show me the result. Maybe I needed to explicitly ask it to generate an image, so I tried again with a much more specific prompt: “Use Nano Banana to generate an image based on this tab of what the room would look like with way more Nanoleaf lights.” That worked, but it’s not the kind of natural-language interaction Google is gunning for with this system.

Verdict: Is Chrome still the king of browsers?

Rita El Khoury / Android Authority

Screenshot

The Chrome browser is facing more competition than ever, especially from innovative newcomers like Comet. Google’s response is a bit of a mixed bag right now — the sidebar integration and multi-tab context are great, but the agentic features are slow, and the image generation didn’t quite land for me today.

However, we have to look at where this is going. Chrome’s new AI features feel like the next step in an evolution: an assistant that doesn’t just wait for your prompts, but lives in your browser and actually does things.

For now, these tools are for the early adopters. If you have the patience for a slightly slower workflow in exchange for a much smarter assistant, the new Chrome features are worth a look. As they move out of beta and get faster, they could genuinely change how we interact with the web.