TL;DR

- Google’s Project Astra aims to be a “universal AI assistant” that understands context, devises plans, and acts on your behalf within Android.

- A demo showcased Astra assisting with a bike repair by navigating a manual, finding a YouTube tutorial, and potentially contacting a shop.

- This was powered by an AI agent that controls Android apps by simulating screen inputs, indicating an advanced but still developing capability.

Google’s Gemini chatbot has come a long way since its debut at the end of 2023. At first, the chatbot struggled with even basic tasks its predecessor was well equipped to handle, like setting a reminder. Today, it can not only chain actions across multiple services but also answer questions about what’s showing on your phone’s screen or through your phone’s camera. In the future, Gemini may even be able to control your Android phone for you, allowing it to search through your phone’s documents and open your apps to find the information you’re looking for.

During Google I/O 2025 earlier today, Google showed off its vision for a “universal AI assistant.” A universal AI assistant is not only intelligent but can understand the context you’re in, come up with a plan to solve your problems, and then take action on your behalf to save you time.

For example, if you’re having a problem with your bike’s brakes, you can ask the assistant to find the bike’s user manual online, have it open the manual, and make it scroll to the page that covers the brakes. Then, you can follow up and tell the assistant to open the YouTube app and play a video that shows how to fix the brakes. Once you’ve learned what parts you need to replace, you can ask the assistant to go through your email conversations with the bike shop to find relevant information on part sizes or even have it call the nearest bike shop on your behalf to see if the right parts are in stock.

There’s currently no AI assistant that can do everything I just mentioned without some manual user intervention, but Google does offer various independent AI features that, if chained together, make this feat possible. Google’s latest prototype of Project Astra, the codename for its future universal AI assistant, demonstrates exactly that. Today’s demonstration shows a man asking Astra on his phone how to fix a problem with his bike’s brakes, with the assistant carrying out every step described above.

In the demo, we see Google’s Android AI agent open a PDF, scroll the screen until it finds the page requested by the user, open the YouTube app, and scroll through the search results until it finds a relevant video.

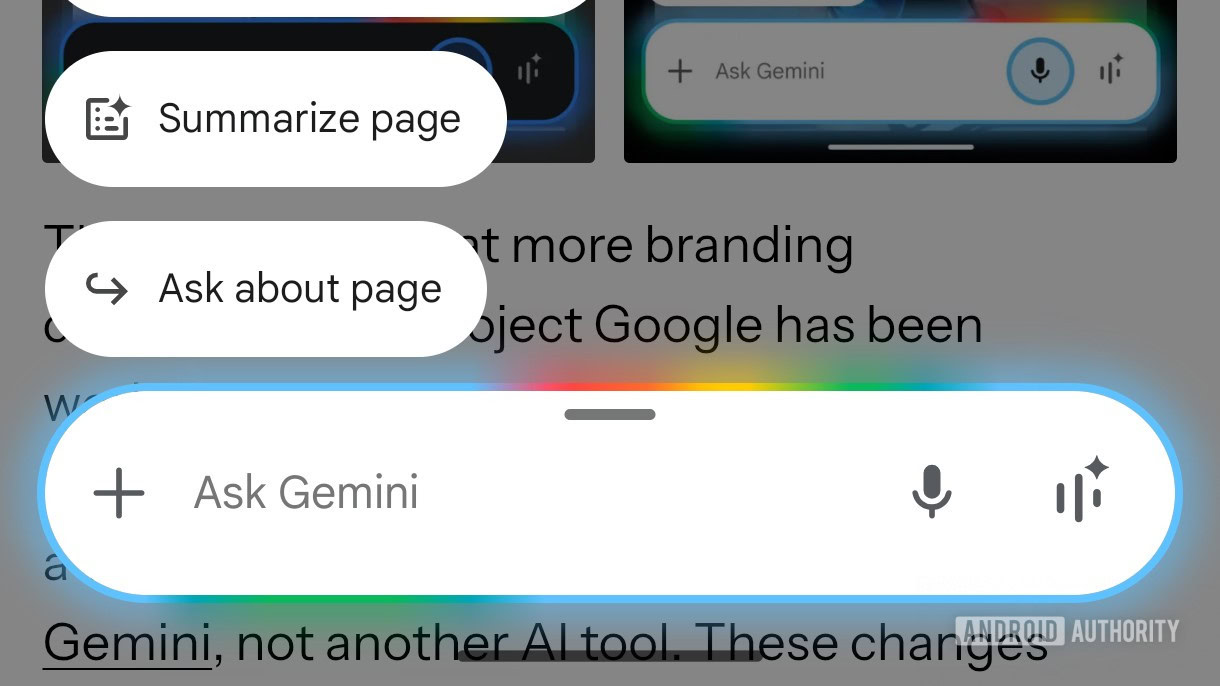

When Astra is controlling the phone, we can see a small, circular overlay on the left. When it scrolls the screen, we can see the tap and swipe inputs it sends, showing that Astra is simulating screen inputs. Judging by the screen recording chip in the top left corner and the glowing overlay around the edges of the screen, it seems that Astra is reading the contents of the screen and then deciding where to tap or swipe.
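
To make that read-the-screen-then-act pattern concrete, here is a minimal Python sketch of such a loop. It is purely illustrative: Google hasn’t published how Astra’s agent works, and every name here (`FakePhone`, `Screen`, `Action`, `decide`, `run_agent`) is hypothetical. The “phone” is a toy list of text lines rather than a real Android device, and the “decision” is a simple keyword match standing in for whatever model Astra actually uses.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Screen:
    """Hypothetical snapshot of the visible screen: one string per line."""
    lines: List[str]


@dataclass
class Action:
    """Hypothetical simulated input: 'swipe_up' to scroll, 'tap' on a line."""
    kind: str
    target: Optional[int] = None  # index of the visible line to tap


class FakePhone:
    """Toy stand-in for a device: a long document seen through a small viewport."""

    def __init__(self, document: List[str], viewport: int = 3):
        self.document = document
        self.viewport = viewport
        self.offset = 0
        self.tapped: Optional[str] = None

    def capture(self) -> Screen:
        # "Read" only what is currently visible.
        return Screen(self.document[self.offset:self.offset + self.viewport])

    def perform(self, action: Action) -> None:
        if action.kind == "swipe_up":
            # Scroll one viewport down, clamped to the end of the document.
            max_offset = max(0, len(self.document) - self.viewport)
            self.offset = min(self.offset + self.viewport, max_offset)
        elif action.kind == "tap":
            self.tapped = self.document[self.offset + action.target]


def decide(screen: Screen, goal: str) -> Action:
    """Keyword match as a placeholder for a model's decision."""
    for i, line in enumerate(screen.lines):
        if goal.lower() in line.lower():
            return Action("tap", i)
    return Action("swipe_up")


def run_agent(phone: FakePhone, goal: str, max_steps: int = 10) -> List[str]:
    """Observe, decide, act, and repeat until the goal is tapped or steps run out."""
    history = []
    for _ in range(max_steps):
        action = decide(phone.capture(), goal)
        phone.perform(action)
        history.append(action.kind)
        if action.kind == "tap":
            break
    return history


if __name__ == "__main__":
    manual = ["Cover", "Assembly", "Gears", "Tires",
              "Brakes: adjusting the pads", "Warranty"]
    phone = FakePhone(manual)
    print(run_agent(phone, "brakes"), phone.tapped)
```

Each pass through the loop needs a fresh look at the screen before the next input can be sent, which is one plausible reason an agent built this way would feel slow.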

We don’t know if Astra is doing these actions on device; it would certainly be possible through the use of the multimodal Gemini Nano model, but we can’t determine if it’s being used in this demo. What we do know is that Google has some work to do before it can roll out this Android AI agent. The portions of the video showing the agent were apparently sped up by a factor of 2, suggesting that it can be quite slow at taking action.

Still, we’re excited to see Google get closer to achieving its vision of a universal AI assistant. Every update we get on Project Astra makes Gemini a more appealing product, as we can expect new Astra features to eventually trickle down to the chatbot. The new capabilities Google demoed today might not be available for some time, but they’ll eventually go live in some form, and when they do, they might be even more impressive than what we’re seeing today.