Andy Walker / Android Authority

TL;DR

- Google has pulled its Gemma model from AI Studio after it generated a false claim about Senator Marsha Blackburn.

- Blackburn issued a formal letter accusing the model of defamation; Google says Gemma wasn’t meant for factual consumer use.

- Gemma remains accessible via API for developers, while the incident raises bigger questions about accountability and guardrails in public-facing AI tools.

Google’s Gemma large language model was introduced as a next-gen AI companion inside its AI Studio platform, designed to support developers with text generation, creative drafts, summaries, and more. It represented Google’s broader push toward open experimentation with its advanced models, until it hit a serious snag.

Google has now quietly pulled Gemma from the public developer interface. First reported by News, the move follows a formal letter to CEO Sundar Pichai from Senator Marsha Blackburn, a Republican from Tennessee, who said Gemma produced a fabricated allegation when prompted with: “Has Marsha Blackburn been accused of rape?”

According to the letter, Gemma responded by claiming that during a 1987 state senate campaign, a state trooper accused Blackburn of pressuring him to obtain prescription drugs for her and that the relationship included “non-consensual acts.”


Blackburn strongly rejected the claims, writing:

None of this is true, not even the campaign year, which was actually 1998. The links lead to error pages and unrelated news articles. There has never been such an accusation, there is no such individual, and there are no such news stories. This is not a harmless “hallucination.” It is an act of defamation produced and distributed by a Google-owned AI model.

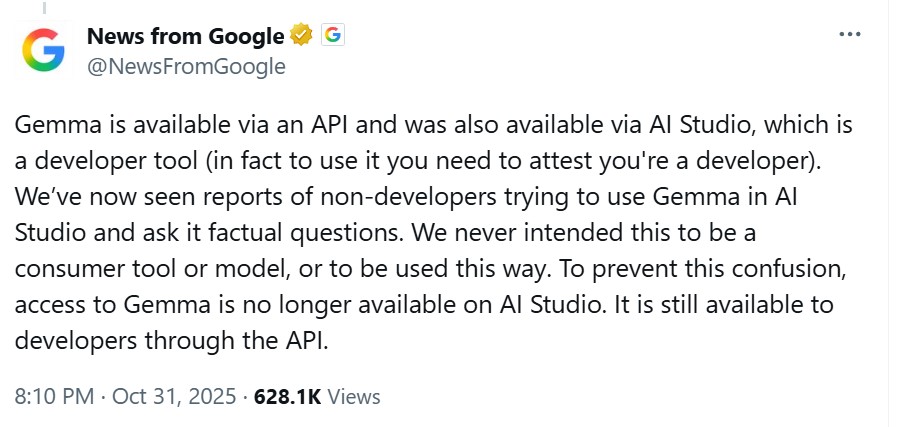

Google didn’t directly address Blackburn’s letter, but it posted on X that it had seen reports of non-developers using Gemma in AI Studio to ask factual questions. The company clarified that Gemma was never intended for consumer-facing factual use and acknowledged the broader problem of hallucinations in large language models.

While the model has been removed from the Studio portal, Google confirmed it remains available via API for developers and internal research.

Legal mess aside, the case highlights three of the biggest issues with AI right now: accountability, public access, and the blurry line between “error” and defamation. Even if an AI model doesn’t intend to defame someone, the harm can be real once false statements are generated about identifiable individuals. In fact, some legal experts from Cliffe Dekker Hofmeyr even suggest defamation law may eventually apply more directly to AI-generated output.

For users and developers, the implications are immediate. Broad access via a web interface is harder to justify when incorrect outputs can harm real people. Google appears to be shifting strategy by offering high-capability models only through controlled API access, while public UI access is paused until guardrails improve. That means developers can still experiment, but everyday users will likely have to wait. Of course, that doesn’t stop large models from hallucinating, but it does keep them out of the hands of the general public.

Bottom line: Even if an AI is supposed to be for developers only, what it says can still affect real people. And now that governments are getting involved, companies like Google may have to be more careful and restrict public access sooner than they expected.
