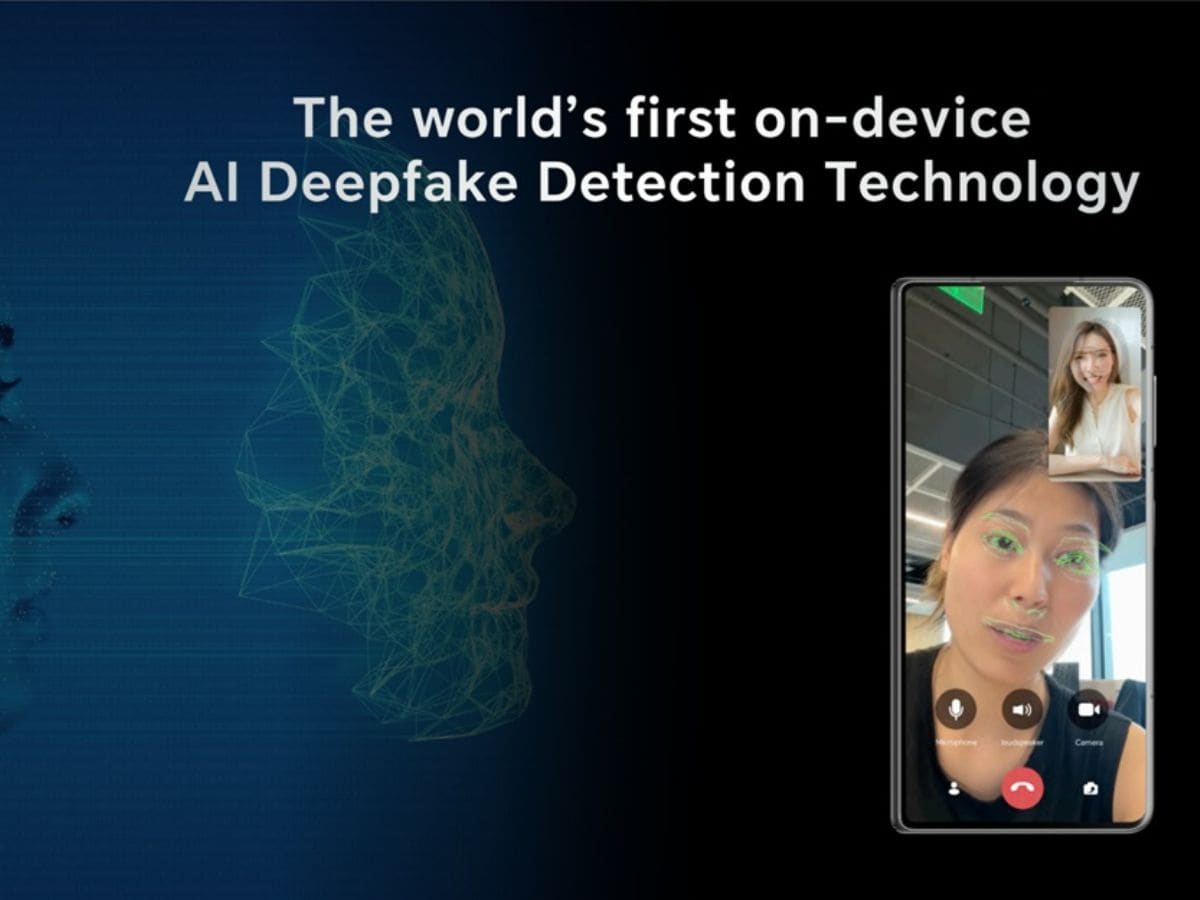

Honor announced today that it will roll out its AI Deepfake Detection technology globally by April 2025. The feature is designed to help users identify deepfake content in real time, so they can guard against manipulated images and videos and have a safer digital experience.

Honor first introduced the feature at IFA 2024. The tool analyzes media for inconsistencies such as border compositing artifacts, pixel-level synthetic imperfections, inter-frame continuity issues, and facial anomalies like face-to-ear ratio and hairstyle. Once detection is complete, the tool gives users an instant warning so that they can decide how to treat the content.

The importance of Honor’s AI Deepfake Detection tech

As the use of artificial intelligence has grown, deepfake attacks and related cyber threats have risen sharply. A report published by the Entrust Cybersecurity Institute suggested that in 2024, a deepfake attack occurred every five minutes. Moreover, Deloitte's 2024 Connected Consumer Study suggested that around 59% of users find it difficult to identify AI-generated content.

Around 84% of AI users also say that content labeling should be standard practice. Criminals have used deepfakes to impersonate executives, and AI-generated content has been used to damage the reputations of brands and individuals. Honor's tool is the company's attempt to keep users from being manipulated or scammed online by deepfakes. Major players such as Instagram have already introduced labeling for AI content, and more tech giants are expected to join the initiative in the future.
