Agoda recently described how it consolidated multiple independent data pipelines into a centralized, Apache Spark-based platform to eliminate inconsistencies in financial data. The company implemented a multi-layered quality framework that combines automated validations, machine-learning-based anomaly detection, and data contracts with upstream teams. The framework is meant to ensure the accuracy of the metrics used in financial statements and strategic planning while the platform processes millions of daily booking transactions.

The problem emerged from a typical enterprise pattern: Agoda’s Data Engineering, Business Intelligence, and Data Analysis teams had each developed separate financial data pipelines with independent logic and definitions. While this offered simplicity and clear ownership, it created duplicate processing and inconsistent metrics across the organization. As Warot Jongboondee from Agoda’s engineering team explains, these discrepancies “could potentially impact Agoda’s financial statements.”

Separate financial data pipelines (source)

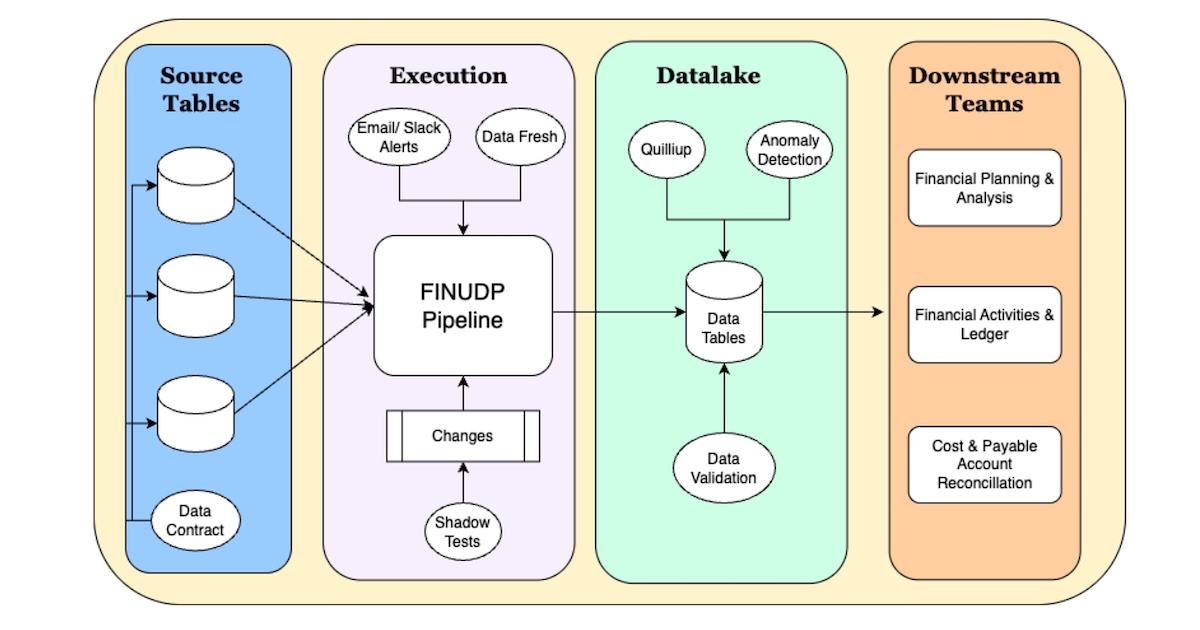

The solution, called the Financial Unified Data Pipeline (FINUDP), establishes a single source of truth for financial data, including sales, cost, revenue, and margin calculations. Built on Apache Spark, the system delivers hourly updates to downstream teams for reconciliation and financial planning. The consolidation required significant effort. Aligning stakeholders across product, finance, and engineering on shared data definitions was time-intensive, and the initial five-hour runtime had to be cut to approximately 30 minutes through query tuning and infrastructure adjustments.

Financial Unified Data Pipeline (FINUDP) architecture (source)

Agoda’s quality framework implements multiple defensive layers. Automated validations check data tables for null values, range constraints, and data integrity. When business-critical rules fail, the pipeline automatically halts rather than processing potentially incorrect data. The team uses Quilliup to compare target and source tables. Data contracts with upstream teams define required rules; violations trigger immediate alerts. Machine learning models monitor patterns to identify anomalies. A three-tier alerting system ensures rapid response via email, Slack notifications, and an internal tool that escalates to Agoda’s 24/7 Network Operations Center when updates lag.
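The halt-on-critical-failure behavior can be illustrated with a minimal sketch. It uses plain Python rather than Spark so that it stays self-contained, and every name in it (`Rule`, `validate`, `CriticalRuleFailure`, the `booking_id` and `margin` fields, and the margin bounds) is a hypothetical chosen for illustration, not taken from Agoda's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]


class CriticalRuleFailure(Exception):
    """Raised to stop the run when a business-critical rule fails."""


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[Row], bool]  # returns True when the row passes
    critical: bool = False        # critical rules halt the pipeline


def validate(rows: Iterable[Row], rules: List[Rule]) -> List[str]:
    """Apply every rule to every row.

    Non-critical failures are collected and returned as warnings.
    The first critical failure raises, so nothing downstream runs.
    """
    warnings: List[str] = []
    for index, row in enumerate(rows):
        for rule in rules:
            if rule.check(row):
                continue
            message = f"row {index}: rule '{rule.name}' failed"
            if rule.critical:
                raise CriticalRuleFailure(message)
            warnings.append(message)
    return warnings


# Hypothetical rules: a null check (critical) and a range check (warning only).
RULES = [
    Rule("booking_id_not_null",
         lambda r: r.get("booking_id") is not None,
         critical=True),
    Rule("margin_in_range",
         lambda r: r.get("margin") is not None and -1.0 <= r["margin"] <= 1.0),
]
```

In this sketch, a row with an out-of-range margin only produces a warning, while a row with a missing `booking_id` raises `CriticalRuleFailure` and stops processing. Which rules count as business-critical is a design decision the sketch leaves to the caller.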

The approach aligns with broader industry trends. According to recent industry research, 64% of organizations cite poor data quality as their biggest challenge, with data contracts emerging as what Gartner calls an “increasingly popular way to manage, deliver, and govern data products.” These formal agreements between producers and consumers define expectations for schemas and quality requirements.
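As a rough illustration of what such an agreement can look like in code, the sketch below expresses a contract as a mapping from field names to expected types and nullability, then checks one record against it. The contract contents and all names (`FieldSpec`, `BOOKING_CONTRACT`, `contract_violations`) are assumptions made for this example; the article does not describe the format Agoda uses.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class FieldSpec:
    dtype: type
    nullable: bool = False


# Hypothetical contract a producer and a consumer might agree on.
BOOKING_CONTRACT: Dict[str, FieldSpec] = {
    "booking_id": FieldSpec(str),
    "currency": FieldSpec(str),
    "amount": FieldSpec(float),
    "promo_code": FieldSpec(str, nullable=True),
}


def contract_violations(record: dict, contract: Dict[str, FieldSpec]) -> List[str]:
    """Return a human-readable list of contract violations for one record."""
    violations: List[str] = []
    for name, spec in contract.items():
        if name not in record:
            violations.append(f"missing field: {name}")
            continue
        value = record[name]
        if value is None:
            if not spec.nullable:
                violations.append(f"null not allowed: {name}")
        elif not isinstance(value, spec.dtype):
            violations.append(
                f"wrong type for {name}: expected {spec.dtype.__name__}, "
                f"got {type(value).__name__}"
            )
    return violations
```

A non-empty result is the point at which an alert to the producing team would be raised. Real contracts usually also cover freshness and value-level quality rules, which this sketch omits.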

The consolidation came with explicit trade-offs. Development velocity decreased because changes now require testing the entire pipeline. Data dependencies mean the full pipeline waits for all upstream datasets before proceeding. Thorough documentation and stakeholder consensus slowed implementation but built trust. Jongboondee noted that centralization “demands tighter coordination and careful change management at every step.”

The system currently achieves 95.6% uptime and targets 99.5% availability. All changes undergo shadow testing, in which queries run against both the proposed and the previous version and the results are compared within the merge request. A dedicated staging environment mirrors production, allowing teams to test before release.
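The comparison step of shadow testing can be sketched as follows: run the current and the proposed logic on the same sample and report which keys were added, dropped, or changed. This is a simplified, in-memory illustration. The function names, the `booking_id` key, and the `fee` field are hypothetical, and a production comparison would run against Spark tables rather than Python lists.

```python
from typing import Callable, Dict, List

Rows = List[dict]


def shadow_diff(
    current: Callable[[Rows], Rows],
    proposed: Callable[[Rows], Rows],
    sample: Rows,
    key: str,
) -> Dict[str, list]:
    """Run both versions on the same input and summarize the differences."""
    before = {row[key]: row for row in current(sample)}
    after = {row[key]: row for row in proposed(sample)}
    return {
        "only_in_current": sorted(before.keys() - after.keys()),
        "only_in_proposed": sorted(after.keys() - before.keys()),
        "changed": sorted(
            k for k in before.keys() & after.keys() if before[k] != after[k]
        ),
    }


# Hypothetical versions of a margin calculation.
def margin_v1(rows: Rows) -> Rows:
    return [
        {"booking_id": r["booking_id"], "margin": r["revenue"] - r["cost"]}
        for r in rows
    ]


def margin_v2(rows: Rows) -> Rows:
    # Proposed change: also subtract an optional fee.
    return [
        {
            "booking_id": r["booking_id"],
            "margin": r["revenue"] - r["cost"] - r.get("fee", 0.0),
        }
        for r in rows
    ]
```

A summary like this can be attached to a merge request so that reviewers see exactly which bookings a change affects before it reaches production.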

The FINUDP initiative demonstrates how organizations handling critical business data at scale are moving beyond ad-hoc quality checks toward comprehensive, architecturally enforced reliability systems. These systems prioritize consistency and auditability over development speed, characteristics that are increasingly essential as financial data feeds reporting, machine learning models, and regulatory compliance processes.