In the previous case, I described how we optimized the art pipeline and produced a full set of production artifacts, accelerating art development by approximately 70% (you can read more about it here). However, the majority of these results would not have been achievable without addressing another critical factor—one that is often overlooked, yet fully capable of derailing even the best plans.

That factor is the quality of the working day and cross-department interaction. Let’s walk step by step through how we optimized our daily routine.

The Case

About six months after the pipeline optimization, we began noticing a pattern. Art production itself moved at a steady pace: assets flowed smoothly from sketches to art review. However, the process consistently stalled during implementation, at the first point of interaction with other departments, and especially during testing.

“Noticed,” “felt,” and “it seems like” mean nothing in the life of a producer or PM. We needed data.

To isolate the problem, I selected a version far from release and treated it as if we were already live: marketing in full swing, hard delivery commitments in place, and a requirement to ship nine content branches at launch and be prepared to scale post-release.

We deliberately balanced the scope:

- 30% complex skins (G5)

- 30% medium-complexity skins (G4)

- 30% recolors (G3)

- 10% near-final skins, requiring only a merge

After three sprints, the results were alarming.

Only the 10% near-final skins made it into the master build. From the remaining 90%, just one branch was close to being ready for the next version. That was it.

This was indefensible in front of stakeholders. Even allowing for the fact that the department was still being formed, it required immediate correction.

Work

We began with a retrospective to identify root causes. Two themes surfaced repeatedly:

- Documentation gaps

- Miscommunication issues

The second was easy to observe firsthand. Over three sprints, tasks and files were routinely lost at handover points. Chat messages and calls didn’t help: some people stored files “where it made sense to me,” while others searched for them “where they were supposed to be.”

Every time a task crossed departments (FBX files moving to rigging, rigs moving to gameplay setup, skins moving to narrative for descriptions), we lost at least one full day to communication, and two or three days when someone was unavailable. In roughly half the cases, a single missing person in the chain blocked the entire process.

The clearest symptom was an average of five bugs per branch, an unacceptable number for cosmetic content meant to be produced at scale. These bugs had no single root cause; they appeared at every stage of development and slowed us down. Our objectives were:

- Enable delivery of nine branches per version, starting with the most complex geometry

- Reduce average bugs per branch to 0.5

- Fit the entire test phase into two weeks, with the second week reserved for master queueing

The Solution

Task Structure & Documentation

I’ve already described how we restructured Epics and art tasks (link here). We now needed the same clarity for departments involved only intermittently. Tasks had to be readable at a glance. I wrote them as if a child might read them: everything had to be obvious without additional explanation. A Game Design task looked like this:

Mandatory Task Checklists

Art tasks received a new mandatory checklist. In addition to stage-specific deliverables, artists were required to verify key conditions before moving forward. PMs were not allowed to close tasks unless all checklist items were completed.
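The closing rule is simple enough to express as a check. Below is a minimal Python sketch, assuming a task stores its checklist as item-to-done flags; `ArtTask`, `can_close`, and the checklist item names are hypothetical illustrations, not our actual Jira configuration.

```python
from dataclasses import dataclass, field


@dataclass
class ArtTask:
    """Hypothetical art task carrying a mandatory checklist."""
    key: str
    # Checklist item -> True once the artist has verified it.
    checklist: dict[str, bool] = field(default_factory=dict)

    def open_items(self) -> list[str]:
        """Return checklist items that are still unchecked."""
        return [item for item, done in self.checklist.items() if not done]

    def can_close(self) -> bool:
        """The PM may close the task only if a checklist exists and every item is done."""
        return bool(self.checklist) and not self.open_items()


task = ArtTask(
    key="ART-101",
    checklist={
        "Stage deliverables attached": True,
        "Naming convention followed": True,
        "Verified in engine": False,
    },
)
print(task.can_close())   # False
print(task.open_items())  # ['Verified in engine']
```

Treating an empty checklist as "not closable" mirrors the idea that the checklist is mandatory rather than optional.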

Production Documentation

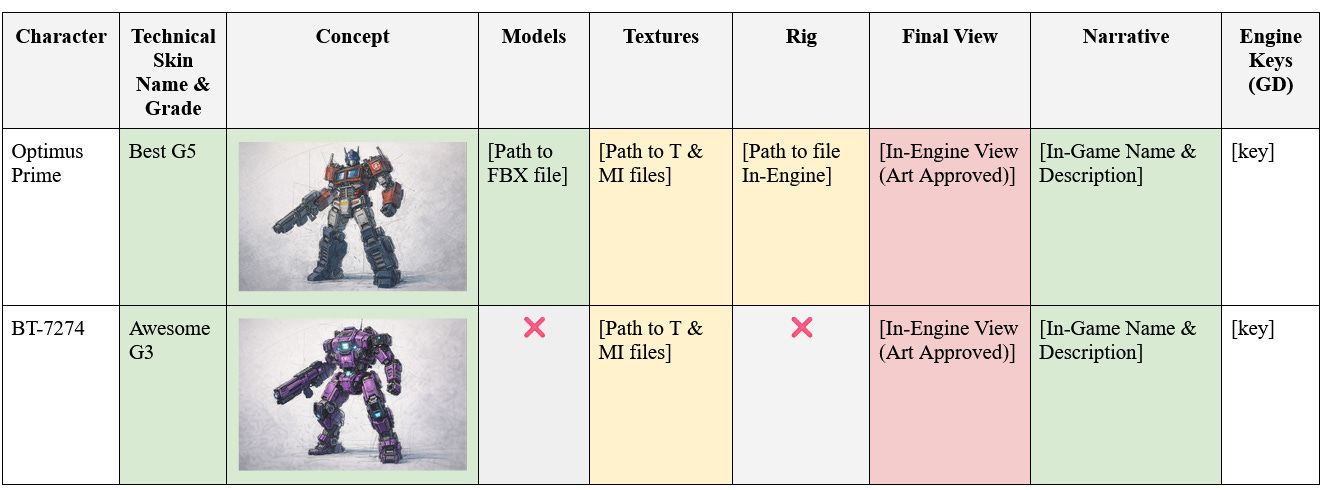

In Confluence, we introduced a table breaking each skin into stages. Each contributor updated their section independently.

The PM tracked progress via background colors:

- White: not started

- Yellow: in progress

- Red: stopped, blocked, or cancelled

- Green: completed, ready for next stage

- Grey: not applicable
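The colour legend is effectively a small state model. As a hedged Python sketch (the `StageStatus` enum, the helper functions, and the stage names are all hypothetical), a PM scanning a skin's row is asking two questions: which stage is next, and what is blocked?

```python
from enum import Enum
from typing import Optional


class StageStatus(Enum):
    """Background colours from the Confluence stage table."""
    WHITE = "not started"
    YELLOW = "in progress"
    RED = "stopped, blocked, or cancelled"
    GREEN = "completed, ready for next stage"
    GREY = "not applicable"


# Statuses that no longer need attention from the PM.
DONE_OR_SKIPPED = {StageStatus.GREEN, StageStatus.GREY}


def next_open_stage(stages: dict[str, StageStatus]) -> Optional[str]:
    """Return the first stage that is neither completed nor skipped.

    Relies on the dict preserving pipeline order (insertion order).
    """
    for name, status in stages.items():
        if status not in DONE_OR_SKIPPED:
            return name
    return None


def blocked_stages(stages: dict[str, StageStatus]) -> list[str]:
    """Return every stage currently marked Red."""
    return [name for name, status in stages.items() if status is StageStatus.RED]


skin = {
    "Concept": StageStatus.GREEN,
    "Model / FBX export": StageStatus.GREEN,
    "Custom VFX": StageStatus.GREY,
    "Rigging": StageStatus.RED,
    "Gameplay setup": StageStatus.WHITE,
    "Narrative description": StageStatus.YELLOW,
}
print(next_open_stage(skin))  # Rigging
print(blocked_stages(skin))   # ['Rigging']
```

Treating Grey the same as Green lets a simpler skin skip a stage without it showing up as unfinished work.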

Checkpoint Tasks

Each Epic now included checkpoint tasks at known communication failure points. Examples:

- Export FBX File — artist provides a single link for the rigger

- Post-GD Setup Validation — rigger verifies animation after gameplay setup

Each checkpoint required a concrete artifact attached to the final comment. Developers now had a single, clearly defined place where all files required for the task were stored. Tasks were linked to one another, making it impossible to miss or lose the necessary files.
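The checkpoint idea can be sketched as a dependency check: a downstream task is ready only when the checkpoint feeding it carries an artifact link. This is a minimal Python illustration under that assumption; `CheckpointTask`, `ready_to_start`, the task keys, and the URL are hypothetical, and in our process the link itself was attached to the task's final comment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckpointTask:
    """Hypothetical checkpoint task, e.g. "Export FBX File"."""
    key: str
    title: str
    # Link to the artifact posted in the task's final comment, if any.
    artifact_url: Optional[str] = None
    # Key of the task that consumes the artifact (e.g. the rigging task).
    downstream_key: Optional[str] = None

    def is_complete(self) -> bool:
        """A checkpoint counts as done only once a non-empty artifact link exists."""
        return bool(self.artifact_url)


def ready_to_start(task_key: str, checkpoints: list[CheckpointTask]) -> bool:
    """True when every checkpoint feeding `task_key` has its artifact.

    A task with no upstream checkpoints is treated as ready.
    """
    upstream = [c for c in checkpoints if c.downstream_key == task_key]
    return all(c.is_complete() for c in upstream)


export_fbx = CheckpointTask("ART-201", "Export FBX File", downstream_key="RIG-202")
print(ready_to_start("RIG-202", [export_fbx]))  # False
export_fbx.artifact_url = "https://example.com/builds/skin_g5.fbx"
print(ready_to_start("RIG-202", [export_fbx]))  # True
```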

Art Review

We introduced a formal Art Review task, which previously did not exist. Each review specified:

- Build number

- In-game verification

- Art Lead or Principal approval

If changes were required, the reason and the responsible person had to be stated explicitly. To reduce waiting time, I learned to make and distribute builds myself.

Jira Rules

This was an early version of the Jira rules we now use daily. I’ve already written about them here.

Only PMs Create Tasks

We also declared war on “urgent-do-or-we-die” tasks arriving from outside teams. All tasks had to be created by the PM using a unified format. Each task required:

- What needs to be done

- How much time it should take

- Attached technical documentation

The PM also became the single intake point for client requests.
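The three required fields amount to an intake check the PM runs before a request becomes a task. A minimal Python sketch, assuming estimates are recorded in hours; `TaskRequest` and `missing_fields` are hypothetical names:

```python
from dataclasses import dataclass, field


@dataclass
class TaskRequest:
    """Hypothetical request as it arrives at the PM, the single intake point."""
    summary: str = ""                              # what needs to be done
    estimate_hours: float = 0.0                    # how much time it should take
    docs: list[str] = field(default_factory=list)  # attached technical documentation


def missing_fields(req: TaskRequest) -> list[str]:
    """Return the required fields the request still lacks (empty list = acceptable)."""
    missing = []
    if not req.summary.strip():
        missing.append("what needs to be done")
    if req.estimate_hours <= 0:
        missing.append("time estimate")
    if not req.docs:
        missing.append("technical documentation")
    return missing


urgent = TaskRequest(summary="Fix the skin glow ASAP")
print(missing_fields(urgent))  # ['time estimate', 'technical documentation']
```

Anything with a non-empty result goes back to its sender rather than onto an artist's board.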

No task — no work

If a request came directly to an artist, bypassing official channels, the artist was required to notify the PM and could ignore it until the task was formalized.

Meetings & Communication

We cut unnecessary communication; I genuinely dislike redundant calls. We kept only three meetings:

- Daily Morphing Stand-up (30 minutes max): results only. Problems were moved to separate meetings.

- Integration Daily (15 minutes max, before the morning stand-up): participants were the PM, QA, Art Lead, and Principal Artist. Developers were removed from testing entirely.

- Art Director Feedback Sessions (Monday and Wednesday, 1 hour max): optional, but with guaranteed access to the Art Director’s time.

Each meeting had its own chat, plus one additional Concept Art chat. Nothing else.

Results

Within three months, we were merging around 8.5 branches per version. Over six months, the bug count dropped to 0.75 per branch. The average test time was about 1.1 weeks.
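For a quick comparison against the objectives set earlier (nine branches per version, 0.5 bugs per branch, a two-week test phase) and the five-bug baseline, the arithmetic is short enough to sketch in Python; the variable names are illustrative only.

```python
# Objectives and baseline as stated in the article; "actual" holds the reported results.
baseline_bugs_per_branch = 5.0
target = {"branches": 9, "bugs_per_branch": 0.5, "test_weeks": 2.0}
actual = {"branches": 8.5, "bugs_per_branch": 0.75, "test_weeks": 1.1}

# Share of the nine-branch target actually merged per version.
branch_share = actual["branches"] / target["branches"]
# Relative drop in bugs per branch versus the five-bug baseline.
bug_reduction = 1 - actual["bugs_per_branch"] / baseline_bugs_per_branch
bug_target_met = actual["bugs_per_branch"] <= target["bugs_per_branch"]
test_within_budget = actual["test_weeks"] <= target["test_weeks"]

print(f"Branches: {branch_share:.0%} of target")          # Branches: 94% of target
print(f"Bug reduction vs baseline: {bug_reduction:.0%}")  # Bug reduction vs baseline: 85%
print(f"Bug target met: {bug_target_met}")                # Bug target met: False
print(f"Test phase within budget: {test_within_budget}")  # Test phase within budget: True
```

On these figures, branch delivery reached about 94% of the target and bugs per branch fell by 85%, although the average stayed above the 0.5 goal.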