Artificial intelligence shows up in more places than most people realise. It helps your phone finish your sentences, decides which posts you see online, organises your email, and even supports doctors reading scans. It works fast, handles huge amounts of data, and can offer answers in seconds. But no matter how polished it looks, AI is always predicting. It’s not recalling facts it personally knows — it has no memory of truth. It’s working from patterns it has learned and trying to map what it sees now to what it’s seen before.

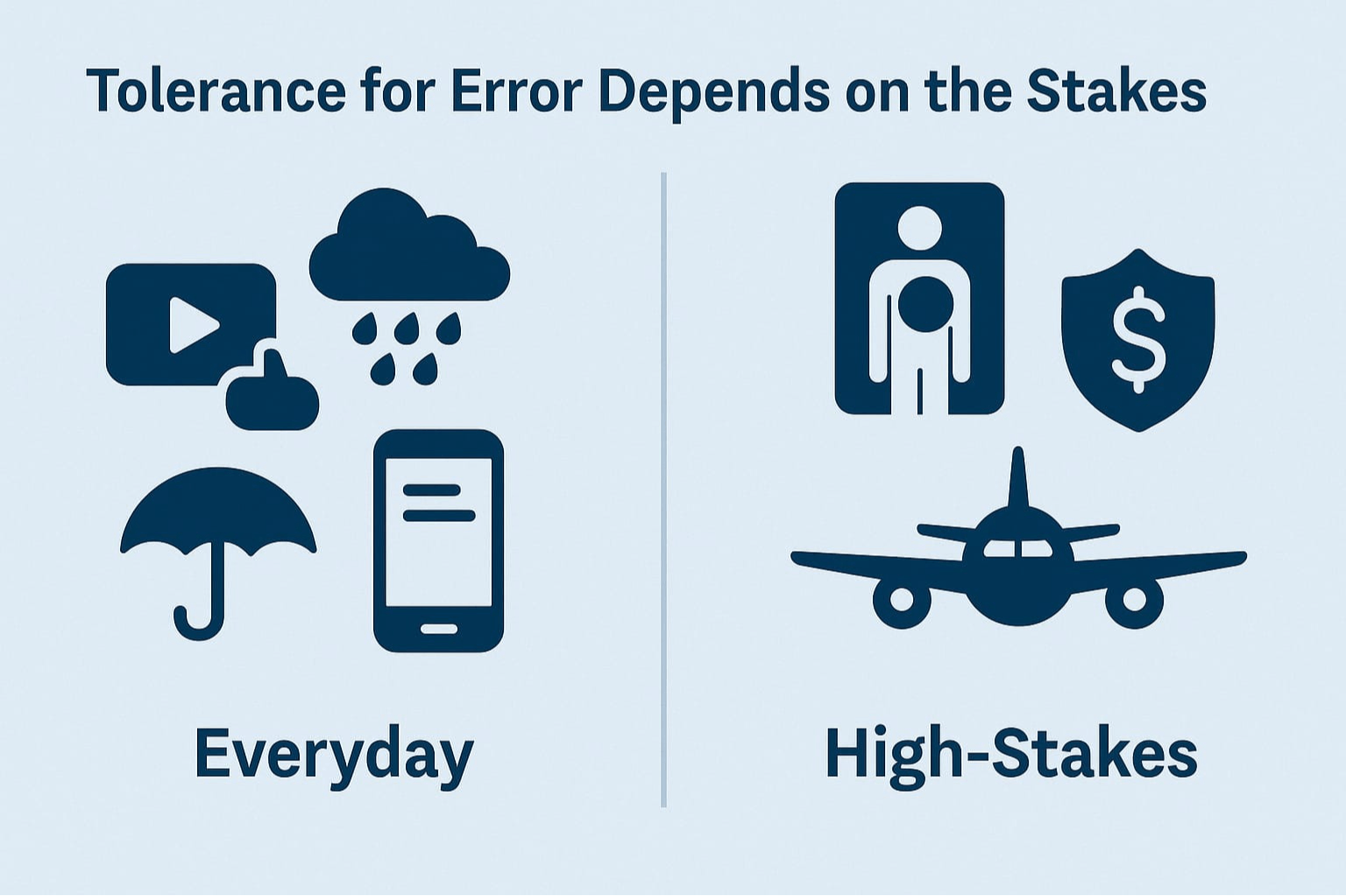

Those predictions can land spot‑on, be slightly off, or sometimes miss the mark completely. The tricky part is knowing when “almost right” is good enough and when it’s a problem. A small error in a movie suggestion is harmless and easily ignored. A small error in a medical scan, a financial decision, or a safety‑critical system isn’t — the consequences there can be much larger than the margin of error suggests.

What Guessing Really Means

When people say AI is “guessing,” they don’t mean random guessing. It’s closer to an informed estimate built from patterns the system has seen before. Feed it a million examples of cats and dogs and it will learn to tell them apart in new photos. Still, it’s never certain. If the photo is blurry, taken from an odd angle, or simply unlike what it has seen before, it will make its best call anyway, whether or not that call deserves confidence.

The closer the new example is to something the system already knows well, the better the odds it’s right. The further it drifts from familiar ground, the more you need to consider how much that guess is actually worth.

Accuracy and Usefulness Aren’t the Same Thing

Accuracy tells you how close the answer is to being correct. Usefulness is about whether that answer actually helps you do what you need to do.

A map that shows a shop a few steps away from its real location is slightly wrong but still gets you there. That’s useful. But a medical tool that calls something harmless when it’s not could be accurate most of the time yet dangerously wrong in that moment. The difference matters because accuracy alone doesn’t measure risk. In some contexts, being “mostly right” is good enough. In others, like healthcare, safety systems, or financial decisions, a rare mistake can outweigh a thousand correct answers. Usefulness is tied to context — what’s at stake, what decisions are being made, and what happens if the system is wrong.
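One way to see why accuracy alone doesn’t measure risk is to weight each mistake by what it costs. The sketch below is only an illustration: the function name `expected_cost`, the error rates, and the cost figures are all made up for the example and do not come from any real system.

```python
def expected_cost(error_rate: float, cost_per_error: float, n_decisions: int) -> float:
    """Average total cost of mistakes over n_decisions, in arbitrary cost units."""
    return error_rate * cost_per_error * n_decisions


# Hypothetical numbers, chosen only for illustration.
# A recommender that is wrong 10% of the time, where each miss costs almost nothing.
movie_recs = expected_cost(error_rate=0.10, cost_per_error=1, n_decisions=1_000)

# A scan reader that is wrong only 1% of the time, where each miss is very costly.
scan_reads = expected_cost(error_rate=0.01, cost_per_error=10_000, n_decisions=1_000)

print(f"movie recommendations: {movie_recs:,.0f} cost units")
print(f"scan readings:         {scan_reads:,.0f} cost units")
```

Under these assumed numbers, the tool that is ten times more accurate still carries far more total risk, because each of its rare mistakes is so much more expensive.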

When “Close” Works Just Fine

Plenty of everyday uses don’t need perfect precision.

A weather forecast might be off by a few percentage points on the chance of rain and you’ll still know whether to grab an umbrella. A streaming app might get your taste right most of the time and still be worth using. Your phone might guess the wrong next word and you just ignore it and keep typing. Even search engines sometimes get it slightly wrong, but if the right link shows up in the top few results, it’s still useful.

These are low‑stakes situations. The cost of being wrong is so small you barely notice — or you adapt without thinking about it. In contexts like this, “close enough” is exactly that. Precision isn’t the goal; usefulness is.

When “Close” Isn’t Good Enough

Some decisions leave no room for “almost.”

A wrong medical reading can lead to the wrong treatment. A self‑driving car that mistakes a person for a shadow has made an error no one can afford. A fraud detection system that only “almost” spots fraud can cost people money or trust. In these contexts, the tolerance for error drops to nearly zero because the consequences of being wrong aren’t just inconvenient; they’re potentially harmful.

Speed and convenience don’t matter if the system makes a critical mistake. The value of an AI tool in these situations isn’t judged by how quickly it responds or how often it’s right on average, but by how rarely it fails when failure carries real costs. When lives, safety, or financial integrity are on the line, “close enough” simply isn’t.

Using Confidence Levels

Many AI tools provide a built‑in measure of how sure they are. It might show up as a number, a percentage, or a label like “high,” “medium,” or “low” confidence. This score can guide how much weight you give the result, but it isn’t the whole story.

A system claiming 99% certainty sounds reassuring, yet that missing 1% can still matter — sometimes a lot. If the tool is suggesting a movie, the risk is negligible. But if a facial recognition system is “99% sure” it has the right match, that remaining 1% could be the difference between solving a case and misidentifying someone entirely. Confidence scores don’t replace judgment; they just help you measure risk.

How People Judge “Close Enough”

People already make these calls all the time, often without thinking about them. A teacher might overlook minor grammar slips if the argument in an essay is solid. A builder might accept a slightly uneven cut of wood if it still fits and holds. But with medication doses, “close” is never acceptable — because the cost of getting it wrong is too high.

The same logic applies to AI. Your tolerance for error should depend on the stakes, not the technology. Match how much you trust an AI system to how severe the consequences of being wrong would be. The higher the stakes, the narrower your margin for “close enough.”
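The idea of a narrower margin at higher stakes can be written down as a simple rule. This is a minimal sketch, assuming the tool reports a confidence between 0 and 1. The `MIN_CONFIDENCE` thresholds and the `decide` function are hypothetical values picked for illustration, not recommendations.

```python
# Hypothetical minimum confidence required before acting without a second check.
# The higher the stakes, the higher the bar.
MIN_CONFIDENCE = {"low": 0.60, "medium": 0.90, "high": 0.99}


def decide(confidence: float, stakes: str) -> str:
    """Return "act" if the confidence clears the bar for these stakes, else "verify"."""
    if stakes not in MIN_CONFIDENCE:
        raise ValueError(f"unknown stakes level: {stakes!r}")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "act" if confidence >= MIN_CONFIDENCE[stakes] else "verify"


# The same 95% confidence leads to different decisions at different stakes.
print(decide(0.95, "low"))   # a movie suggestion
print(decide(0.95, "high"))  # a medical reading
```

The point of the sketch is that the score stays the same while the decision changes. In a genuinely high‑stakes setting, even a result that clears the bar would still be treated as a lead to confirm, not as proof.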

The Misleading Nature of Average Accuracy

You often see claims like “Our model is 92% accurate.” It sounds impressive, but that number hides a lot. It’s an average — it tells you nothing about where the system struggles. A model can be flawless in common, well‑represented cases and still fail often in rare or edge scenarios.

Take a translation tool: it might handle everyday conversations perfectly but stumble over legal terms, technical jargon, or cultural nuance. If you only look at the headline number, you won’t see these blind spots. Understanding accuracy isn’t just about the percentage; it’s about knowing when and where the system’s predictions are reliable — and when they aren’t.
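A short sketch makes the hidden blind spot concrete. The data here is invented for the example: 90 everyday sentences that are all translated correctly and 10 legal sentences of which only 2 are correct. The helper `accuracy_by_group` is a hypothetical name, not part of any library.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Take (group, is_correct) pairs and return a dict of accuracy per group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


# Made-up evaluation results for a translation tool.
records = (
    [("everyday", True)] * 90
    + [("legal", True)] * 2
    + [("legal", False)] * 8
)

overall = sum(is_correct for _, is_correct in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall:  {overall:.0%}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.0%}")
```

With this invented data the headline figure is 92%, while the breakdown shows perfect results on everyday sentences and only 20% on legal ones. The average is true, but it says nothing about where the failures cluster.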

Deciding in Practice

When you get an AI result, think about the impact if it’s wrong. Can you cross‑check it somewhere else? Does the tool tell you how confident it is? Has it seen enough similar situations to be trustworthy here? You don’t need deep technical knowledge to ask these questions. Simple checks like these help you decide when to lean on the output and when to pause. The goal isn’t to second‑guess every result, but to know when a result needs more scrutiny before acting on it.

Different Fields, Different Lines

“Close enough” depends entirely on context. In healthcare, an AI prediction can be a useful second opinion but never a replacement for actual tests. In retail, an inventory forecast that’s slightly off can still guide ordering effectively. In security, a facial match might be treated as a lead, not proof. The threshold shifts across fields. In creative work like art or music, near misses can still be valuable: an AI‑generated sketch can spark an idea even if it’s imperfect. But in engineering or surgery, small mistakes can cascade into serious consequences. The stakes define the standard.

Don’t Let Trust Get Lazy

The more often an AI tool is right, the easier it is to stop checking. That’s when mistakes sneak through. Even when a system has a good record, spot‑checking its outputs keeps you sharp and aware of its limits. Trusting a system blindly because it’s “usually right” is how subtle errors build into big problems. Staying alert to its boundaries keeps you in control.
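Spot‑checking can be as simple as pulling a small random sample of outputs for a person to review. The sketch below assumes the outputs are available as a list. The function name `pick_spot_checks` and the 5% default rate are illustrative choices, not an established standard.

```python
import random


def pick_spot_checks(outputs, rate=0.05, seed=None):
    """Return a random subset of outputs for manual review (at least one item)."""
    if not 0.0 < rate <= 1.0:
        raise ValueError("rate must be in (0, 1]")
    items = list(outputs)
    if not items:
        return []
    sample_size = max(1, round(len(items) * rate))
    rng = random.Random(seed)  # a fixed seed makes the selection repeatable
    return rng.sample(items, sample_size)


# Hypothetical batch of 200 AI results, identified by number.
batch = list(range(200))
to_review = pick_spot_checks(batch, rate=0.05, seed=42)
print(f"reviewing {len(to_review)} of {len(batch)} results")
```

Sampling at random, rather than reviewing only the results that look odd, matters because the errors that slip through are usually the ones that look fine.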

Making AI Easier to Judge

AI designers can make all of this easier by surfacing uncertainty in plain language instead of burying it in technical metrics. Tools should flag when a result is shaky, explain why, and give clear examples of where the system works well — and where it doesn’t. Adding easy ways for users to verify results independently is just as important. Good design doesn’t just give you answers; it helps you decide how much to trust them.

The Takeaway

“Close enough” is never a fixed rule. It depends on how accurate the result is, how useful it is for your goal, and what happens if it’s wrong. In low‑stakes situations, being nearly right can save time and effort. In high‑stakes scenarios, the exact same level of accuracy might be far too risky. The safest way to use AI is to pair its speed and reach with your own judgment. Always ask what the cost is if the system gets it wrong. Look for signs of how sure it is. Cross‑check when it matters. That way, you get the best of both worlds — AI’s power and efficiency, without being caught off guard by its blind spots.
