Table of Links

Abstract and 1 Introduction

2 Background and 2.1 Blockchain

2.2 Transactions

3 Motivating Example

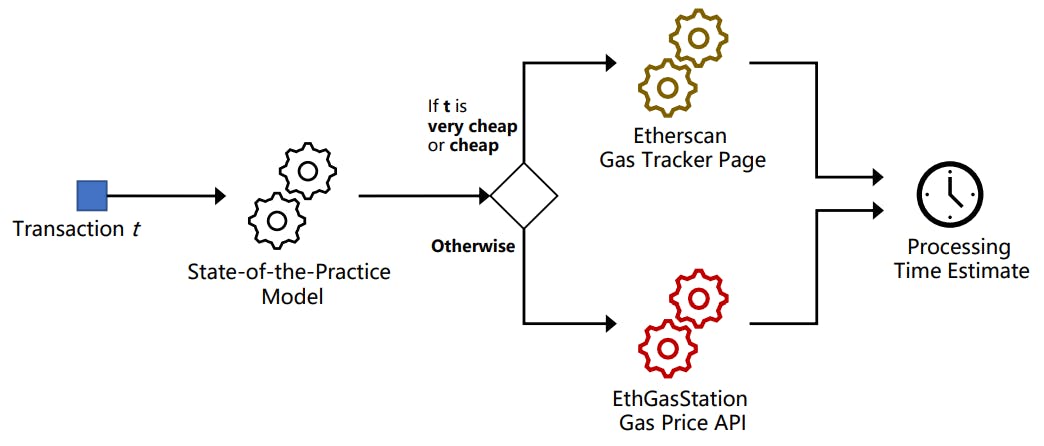

4 Computing Transaction Processing Times

5 Data Collection and 5.1 Data Sources

5.2 Approach

6 Results

6.1 RQ1: How long does it take to process a transaction in Ethereum?

6.2 RQ2: How accurate are the estimates for transaction processing time provided by Etherscan and EthGasStation?

7 Can a simpler model be derived? A post-hoc study

8 Implications

8.1 How about end-users?

9 Related Work

10 Threats to Validity

11 Conclusion, Disclaimer, and References

A. COMPUTING TRANSACTION PROCESSING TIMES

A.1 Pending timestamp

A.2 Processed timestamp

B. RQ1: GAS PRICE DISTRIBUTION FOR EACH GAS PRICE CATEGORY

B.1 Sensitivity Analysis on Block Lookback

C. RQ2: SUMMARY OF ACCURACY STATISTICS FOR THE PREDICTION MODELS

D. POST-HOC STUDY: SUMMARY OF ACCURACY STATISTICS FOR THE PREDICTION MODELS

Transaction processing within blockchains. Hu et al. [21] propose a private blockchain to facilitate banking processes and also examine the extent to which several factors impact transaction processing times. As the authors focus on a private blockchain built for a single application, a few assumptions about their blockchain precede their analysis. These assumptions include: “transaction rates are slower than the real ETH blockchain”, and “block size is sufficient enough to include all transactions”. Additionally, all experiments are performed using 10 miner nodes and 10 light nodes. By performing experiments within said blockchain, the authors conclude that transaction times are correlated with block generation times. They also find that network congestion does not affect processing times due to the dynamic adjustment of the block difficulty. Also due to this feature, the average block generation time, and ultimately transaction processing times, are found to be stable regardless of the number of miners. As the assumptions stated above do not currently apply to the Ethereum main net, these results cannot be generalized to it.

Kasahara and Kawahara [24] focus primarily on the effect of Bitcoin fees on transaction processing times using queuing theory. They conclude that transactions with small fees take much longer to be processed than those with high fees, a result obtained by deriving and analyzing the mean processing times of transactions. The authors also separate transactions by their set fee as part of the analysis, though they segregate them into only two groups: high and low. The study also explores network congestion and reveals that both high and low fee transactions are heavily impacted as usage of the Bitcoin network increases. Since one possible reason for this is the 1 MB size of blocks in the Bitcoin blockchain, the authors also discuss the implications of increasing the block size. Because high fee transactions continue to experience slow confirmation times under high levels of congestion even after the block size is increased, the authors conclude that increasing the block size is not a solution to this issue.
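The fee effect described above can be illustrated with a toy simulation. This is a minimal sketch under our own assumptions, not the queuing model of Kasahara and Kawahara: a hypothetical miner fills each block with the highest-fee pending transactions, and the function name `simulate_fee_priority`, the block capacity, the fee values, and the arrival pattern are all invented for illustration.

```python
def simulate_fee_priority(arrivals, block_capacity):
    """Return {tx_id: blocks waited} for a toy fee-priority miner.

    arrivals[b] lists the (tx_id, fee) pairs that become pending just before
    block b is built. Each block takes the `block_capacity` highest-fee
    pending transactions; ties go to the earlier arrival.
    """
    if block_capacity < 1:
        raise ValueError("block_capacity must be at least 1")
    pending = []  # entries are (fee, arrival_block, tx_id)
    waits = {}
    block = 0
    while block < len(arrivals) or pending:
        if block < len(arrivals):
            pending.extend((fee, block, tx_id) for tx_id, fee in arrivals[block])
        # Highest fee first; among equal fees, the oldest transaction first.
        pending.sort(key=lambda tx: (-tx[0], tx[1]))
        included, pending = pending[:block_capacity], pending[block_capacity:]
        for _fee, arrived, tx_id in included:
            waits[tx_id] = block - arrived + 1
        block += 1
    return waits


def mean_wait(waits, prefix):
    """Average wait (in blocks) of the transactions whose id starts with prefix."""
    selected = [w for tx_id, w in waits.items() if tx_id.startswith(prefix)]
    return sum(selected) / len(selected)


# Hypothetical workload: 2 high-fee and 2 low-fee transactions arrive before
# each of 4 blocks, while a block only fits 3 transactions (congestion).
arrivals = [
    [(f"high-{b}-{i}", 10) for i in range(2)]
    + [(f"low-{b}-{i}", 1) for i in range(2)]
    for b in range(4)
]
waits = simulate_fee_priority(arrivals, block_capacity=3)
print(mean_wait(waits, "high"), mean_wait(waits, "low"))
```

In this toy setting every high-fee transaction is included in the first block after it arrives, while the low-fee backlog grows for as long as arrivals exceed the block capacity.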

Rather than focusing on variables from transactions and the network, Rouhani and Deters [38] focus on the differences in transaction processing times within two of the most used Ethereum clients: Geth and Parity. Using a private blockchain, the authors conclude that using the Parity client with the same system configuration as found within the Geth client, transaction processing times experience an 89.8% increase. Unfortunately, the type of client that was used to execute a transaction is not available within transaction metadata. Additionally, most mining nodes are part of a mining pool, which increases the difficulty of studying the potential impacts of a single mining node. As a result, when conducting analyses on the transactions executed on the blockchain as a whole, this information cannot be inferred.

Transaction fees. Instead of analyzing the impact of transaction fees or gas prices on other factors, the work of Pierro and Rocha [35] explores the effect of several different factors on transaction fees within the Ethereum blockchain. The main factors analyzed by the authors include: electricity price, total number of miners, USD to ETH conversion rates, and total pending transactions. The authors discover that both the total number of miners and pending transactions have a large impact on transaction fees.

Chen et al. [11] explain how extremely low gas prices can lead to security vulnerabilities and attacks such as denial of service. The authors state that Ethereum has updated its platform to defend against such attacks; the paper explores whether those changes are adequate to minimize disruption within the network. They conclude that the alternative settings are not enough, and that allowing users to set such low gas prices will continue to give malicious users the opportunity to attempt to disrupt the network. The authors propose a solution that dynamically alters the cost of sending transactions as the number of transaction executions increases. The experimental results of their proposal reveal that it is effective in preventing potential denial of service attacks.

More generally, transaction fees vary depending on the gas price chosen by transaction issuers. Several tools, such as Etherscan’s Gas Tracker, EthGasStation, and geth, provide recommendations for gas prices. Determining whether transaction issuers adopt the exact suggestions issued by those tools is a fruitful research endeavor, as it helps to understand the driving forces behind gas price choices. Gas price choices impact the economics of Ethereum (e.g., the meanings of cheap and expensive gas prices depend on which gas prices are being chosen by transaction issuers at a given point in time), as well as its behavior (e.g., gas prices influence the likelihood that a given transaction will be selected by a miner to be processed).

Measurements of the Ethereum network. Kim et al. [25] argue that, while application-level features of blockchain platforms have been extensively studied, little is known about the underlying peer-to-peer network that is responsible for information propagation and that enables the implementation of consensus protocols. The authors develop an open-source tool called NodeFinder, which can scan and monitor Ethereum’s P2P network. The authors use NodeFinder to perform an exploratory study with the goal of characterizing the network. From data collected throughout the second trimester of 2018, the authors observe that: (a) 48.2% of the nodes with which NodeFinder was able to negotiate an application session were considered non-productive peers, as these peers either did not run the Ethereum subprotocol or did not operate on the main Ethereum blockchain (or both), (b) 76.6% of all Mainnet Ethereum peers use Geth and 17% use Parity, (c) peers using Geth are more likely to adopt the client’s latest version compared to Parity, (d) Geth and Parity implementations of a log distance metric yield different results, which calls for an improved standardization and documentation of operations in the protocols (RLPx, DEVp2p, and the Ethereum subprotocol), (e) Ethereum’s P2P network size is considerably smaller than that of the Gnutella P2P network, (f) US (43.2%) and China (12.9%) host the largest portions of Ethereum Mainnet nodes, and (g) Ethereum nodes operate primarily in cloud environments (as opposed to residential or commercial networks).

In a similar vein, Silva et al. [41] implement and deploy a distributed measurement infrastructure to determine the impact of geo-distribution and mining pools on the efficiency and security of Ethereum. Data was collected from April 1st 2019 to May 2nd 2019 (~1 month). Some of their key findings include: (a) nodes located in Eastern Asia are more likely to observe new blocks first as several of the prominent pools operate in Asia (nodes in North America are around four times less likely to observe new blocks first), (b) 1.45% of the mined blocks were empty, (c) a single miner sometimes produces several blocks at the same height, possibly to exploit the uncle block reward system[17], and (d) 12 block confirmations might not be enough to deem a given block as final. The authors classify (a) and (b) as selfish mining behavior.

Oliva et al. [34] analyze transactional activity in Ethereum. They report that, within the time period of their analysis (July 30th 2015 until September 15th 2018), 80% of the contract transactions targeted 0.05% of all smart contracts. This result shows that a minuscule proportion of popular contracts has a large influence over the network. For instance, if transaction issuers happen to submit transactions with higher gas prices for these contracts, then it is likely that the ranges for all price categories will increase (please refer to Section 6.1 for an explanation of how we define price categories). This has happened before. For instance, the sudden success of a game called CryptoKitties in late 2017 led to a severe congestion of the entire network and a significant increase in gas prices [5].
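A concentration statistic of this kind can be computed as sketched below. This is a minimal illustration rather than the procedure used by Oliva et al.: the function name `smallest_share_covering` and the contract labels are hypothetical, and the input is assumed to be a flat list holding the target contract of each transaction.

```python
from collections import Counter


def smallest_share_covering(tx_targets, coverage=0.80):
    """Fraction of distinct contracts that receive `coverage` of all transactions.

    tx_targets holds one contract identifier per transaction. Contracts are
    taken from most to least targeted until the requested share is reached.
    """
    counts = Counter(tx_targets)
    if not counts:
        raise ValueError("tx_targets must not be empty")
    total = sum(counts.values())
    covered = 0
    for rank, (_contract, n) in enumerate(counts.most_common(), start=1):
        covered += n
        if covered >= coverage * total:
            return rank / len(counts)
    return 1.0


# Hypothetical data: one very popular contract, one minor one, ten rarely used.
tx_targets = ["A"] * 85 + ["B"] * 5 + [f"rare-{i}" for i in range(10)]
share = smallest_share_covering(tx_targets)
print(f"{share:.1%} of contracts receive at least 80% of transactions")
```

In the made-up data, a single contract out of twelve already accounts for more than 80% of the transactions, which mirrors (at a much smaller scale) the skew reported above.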

Gas usage estimation. We differentiate between two types of studies in the field of gas usage estimation: (i) worst-case estimations and (ii) exact estimations.

– Worst-case estimations: Studies of this type aim to derive upper bounds for the gas usage of a given function (i.e., worst-case gas usage). For example, Marescotti et al. [30] conduct a study in which they focus on discovering the worst-case gas usage of a given contract transaction. The authors introduce the notion of gas consumption paths (GCPs), which map gas consumption to execution paths of a function. Worst-case gas estimations are derived by analyzing the GCPs of a function with two candidate symbolic methods. The two symbolic methods build on the theory of symbolic bounded model checking [6] and rely on efficient SMT solvers. The proposed estimation model is only evaluated on a single example contract. Similarly, Albert et al. [2] introduce a tool called Gasol that takes as input (i) a smart contract, (ii) a selection of a cost model, and (iii) a selected public function, and infers an upper bound for the gas usage of the selected function. In contrast to their prior work [3], the cost model of Gasol is highly configurable. Gasol relies on several tools to extract control flow graphs from smart contracts, which are then decompiled into a high-level representation from which upper bounds can be calculated using a combination of static analyzers and solvers [1]. Gasol is implemented as an Eclipse plug-in, making it suitable for use during development time. No evaluation of the proposed tool is conducted.

– Exact estimations: Studies of this type aim to provide exact estimations of gas usage. Das and Qadeer [16] introduce a tool called GasBoX, which takes a smart contract function and an initial gas bound as input (e.g., the gas limit). Next, it determines whether the bound is exact or returns the program location where the virtual machine would run out of gas. The tool is also designed to be efficient, running in time linear in the size of the program. GasBoX operates by instrumenting the smart contract code with specific instructions that keep track of gas consumption. GasBoX applies a Hoare-logic[18] style gas analysis framework to estimate gas usage. Despite the theoretical soundness behind GasBoX, the tool has three key limitations: (i) it does not account for function arguments and the state of contracts, (ii) it operates on contracts written in a simplified version of Move[19], which is a programming language in a prototypal phase, and (iii) it is evaluated on 13 example contracts that are written by the authors. More recently, Zarir et al. [46] proposed a simple historical method for the estimation of gas usage. For a given smart contract function f(), the authors retrieve the gas usage of the prior ten executions of that function and take the mean. The conjecture behind the method is that there are historical patterns in how functions burn gas and thus the gas usage of a given transaction sent to a function f() should not be too different from that of recent transactions sent to f(). The authors argue that these patterns in gas usage emerge either due to (i) how the contracts are implemented (e.g., their business logic and/or the data that they store) or (ii) how transaction issuers interact with a function (e.g., the amount of data sent as input parameters to the function in question).
The proposed estimation method also leverages an empirical discovery: approximately half of the functions that received at least 10 transactions during the Byzantium hard-fork showed an almost constant gas usage. Unlike prior work in the field, the authors conduct a large-scale evaluation of their approach by testing it on all successful contract transactions from the Byzantium hard-fork (~161.6M transactions). The results indicate that gas usage could be predicted with an R-squared of 0.76 and a median absolute percentage error (APE) of 3.3%. Based on their findings, they suggest that (i) smart contract developers should provide gas usage information as part of the API documentation and (ii) Etherscan and Metamask should consider providing per-function historical gas usage information (e.g., statistics for recent gas usage).
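The historical method summarized above (mean of the prior ten executions, evaluated with the absolute percentage error) can be sketched as follows. This is an illustrative reading of that description rather than the code of Zarir et al.: the function names, the sample gas values, and the assumption that a function's history is available as a chronologically ordered list are ours.

```python
from statistics import mean, median


def estimate_gas(history, window=10):
    """Predict the next gas usage as the mean of the last `window` executions."""
    recent = history[-window:]
    if not recent:
        raise ValueError("at least one prior execution is required")
    return mean(recent)


def absolute_percentage_error(actual, predicted):
    """APE in percent, relative to the actual gas usage."""
    return abs(actual - predicted) / actual * 100


def rolling_median_ape(history, window=10):
    """Median APE when each execution is predicted from the ones before it.

    Only executions with at least `window` predecessors are evaluated, so
    `history` must contain more than `window` entries.
    """
    errors = [
        absolute_percentage_error(history[i], estimate_gas(history[:i], window))
        for i in range(window, len(history))
    ]
    return median(errors)


# Hypothetical gas usage of twelve past executions of one function f().
history = [90000, 90000] + [50000] * 8 + [52000, 48000]
prediction = estimate_gas(history)  # uses only the last ten values
error = absolute_percentage_error(actual=51000, predicted=prediction)
print(prediction, round(error, 2))
```

For a function whose gas usage is constant, `rolling_median_ape` returns zero, which is consistent with the observation that such a method works well when many functions show almost constant gas usage.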

Reducing gas consumption (gas optimization). Zou et al. [48] conducted semi-structured interviews and an online survey with smart contract developers to uncover the key challenges and opportunities revolving around their activities. According to the authors, the majority of interviewees mentioned that gas usage deserves special attention. In addition, 86.2% of the survey respondents also declared that they frequently take gas usage into consideration when developing smart contracts. The reason is twofold: “gas is money” and “transaction failure due to insufficient amount of gas”. In terms of challenges, the authors highlight that “there is a need for source-code-level gas-estimation and optimization tools that consider code readability”, since most gas-optimization tools operate at the bytecode level (e.g., Remix).

Chen et al. [10] investigated the efficiency of smart contracts by analyzing the bytecode produced by the Solidity compiler. The authors observed that the compiler fails to optimize several gas-costly programming patterns, resulting in higher gas usage (and consequently, higher transaction fees). The authors introduce a tool called GASPER, which can detect several gas-costly patterns automatically.

Signer [40] studied the gas usage of different parts of a smart contract's code by executing transactions with semi-random data. He deployed and executed the transactions in a local simulation of the Ethereum blockchain based on the Truffle IDE and the solc compiler. With each execution of a transaction, he collected data and mapped it to the corresponding section of an abstract syntax tree (AST) of the Solidity source code. He proposed Visualgas, a tool that provides developers with gas usage insights and that directly links to the source code.

More recently, Brandstätter et al. [8] proposed 25 strategies for code optimization in smart contracts with the goal of reducing gas usage. They developed a prototype, open-source tool called python-solidity-optimizer that implements 10 of these strategies. For 6 of these 10 strategies, the tool can not only detect the code optimization opportunity but also automatically apply it (i.e., change the code). The authors evaluated their tool on 3,018 verified contracts from Etherscan. On average, 1,213 gas units were saved when deploying an optimized contract. Also on average, 123 units were saved for each function invocation. Despite the small savings, the authors argue that hundreds of thousands of dollars are spent on transaction fees daily and thus even small cost savings can sum up to high absolute numbers.
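To put the reported savings into monetary terms, recall that a transaction fee is the gas used multiplied by the gas price. The sketch below converts the average savings quoted above (1,213 gas units per deployment, 123 per invocation) into USD. The gas price, the ETH/USD rate, and the daily number of invocations are hypothetical values chosen only for illustration; they do not come from the study.

```python
GWEI_PER_ETH = 10**9


def fee_saved_usd(gas_saved, gas_price_gwei, eth_price_usd):
    """USD value of `gas_saved` gas units at the given gas price and ETH rate."""
    return gas_saved * gas_price_gwei / GWEI_PER_ETH * eth_price_usd


# Average savings reported for the optimized contracts.
DEPLOY_GAS_SAVED = 1213
CALL_GAS_SAVED = 123

# Hypothetical market conditions and usage (assumptions, not from the study).
GAS_PRICE_GWEI = 50
ETH_PRICE_USD = 2000
CALLS_PER_DAY = 1_000_000

per_deploy = fee_saved_usd(DEPLOY_GAS_SAVED, GAS_PRICE_GWEI, ETH_PRICE_USD)
per_call = fee_saved_usd(CALL_GAS_SAVED, GAS_PRICE_GWEI, ETH_PRICE_USD)
per_day = per_call * CALLS_PER_DAY

print(f"per deployment: ${per_deploy:.4f}")
print(f"per invocation: ${per_call:.4f}")
print(f"per day at {CALLS_PER_DAY:,} invocations: ${per_day:,.2f}")
```

Under these assumed values a single invocation saves roughly a cent, yet the savings scale linearly with the number of invocations, which is the point the authors make about small per-transaction savings.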

Authors:

(1) MICHAEL PACHECO, Software Analysis and Intelligence Lab (SAIL) at Queen’s University, Canada;

(2) GUSTAVO A. OLIVA, Software Analysis and Intelligence Lab (SAIL) at Queen’s University, Canada;

(3) GOPI KRISHNAN RAJBAHADUR, Centre for Software Excellence at Huawei, Canada;

(4) AHMED E. HASSAN, Software Analysis and Intelligence Lab (SAIL) at Queen’s University, Canada.

[17] Uncle blocks are created in Ethereum when two blocks are mined and submitted to the ledger at about the same time. The one that is not validated is deemed an uncle block. Miners are rewarded for uncle blocks. The uncle block reward was designed to help less powerful miners.

[18] Hoare logic is a formal system with a set of logical rules for reasoning rigorously about the correctness of computer programs.

[19] Move is the smart contract programming language under development by Facebook, to be used in their Libra blockchain platform. The language’s definition can be seen at: https://developers.libra.org/docs/assets/papers/libra-move-a-languagewith-programmable-resources/2020-05-26.pdf