I’ve always been curious about how different codecs stack up when it comes to screen sharing over WebRTC. So, I decided to find out for myself by testing the four most common ones: VP8, VP9, H.264, and AV1.

To make sure the results were solid, I ran tests across a variety of content types and network conditions. I didn’t just rely on visual impressions – I used frame-by-frame comparisons, calculated Peak Signal-to-Noise Ratio (PSNR), and pulled detailed WebRTC stats to get a clear, data-driven view.

To go a step further, I even built a custom Chrome extension that tracks CPU usage during both encoding and decoding. That gave me a good look at how each codec affects system performance – because quality’s great, but not if your computer’s on fire trying to keep up.

I looked at key metrics like bitrate, frame rate, resolution, quantization (QP), PSNR, and CPU load. Based on all that, I came up with a few practical recommendations to help anyone trying to optimize WebRTC screen sharing for their own use cases.

About the Experiment

Why Screen Sharing Isn’t as Simple as It Looks

If you’ve ever shared your screen during a video call and noticed the quality dropping – or the video getting choppy – you’re not alone. Keeping screen sharing crisp and smooth is actually trickier than it seems.

The problem? It’s all about variety. Some content is still, like a slide deck or a document. Other times, you’re sharing high-motion video. These different types of content demand very different things from the codec. For example, fast-moving video eats up a ton of bandwidth, while static images are more sensitive to compression artifacts that can make them look blurry or blocky.

Throw in real-world internet issues like packet loss or bandwidth drops, and things get even messier. That’s why choosing the right codec – and fine-tuning how it works – is so important for making screen sharing both high-quality and responsive.

There’s already a lot of research out there on how video codecs perform in general streaming scenarios. But screen sharing has its own quirks. It’s not just about watching a video – it’s about interacting in real time, and the content types are all over the place. That means the usual research doesn’t always apply.

What I Set Out to Do

I wanted to dig deeper into this specific use case. My goal was to see how different codecs perform when it comes to screen sharing, especially under real-world constraints like varying content types and unpredictable network conditions.

To do that, I designed a method for evaluating screen share quality as accurately as possible – not just based on how it looks, but backed by solid metrics and data. Along the way, I learned a lot about which codecs hold up best and how to fine-tune things for better performance in real-time applications.

One thing quickly became clear: screen sharing performance really depends on what you’re sharing. A fast-paced video behaves very differently from a static document or a slow scroll through a webpage.

To keep things fair and consistent, I made sure that every test ran with the exact same conditions – same resolution, same starting point, and same duration. That way, I could be confident the results were all about the codec performance, not some accidental variable.

I created two different test cases to simulate real-life screen sharing situations:

- High-motion video – This was a real clip of motorcyclists speeding through a forest. Lots of rapid movement, detailed scenery, and a constantly changing visual environment – perfect for pushing the limits of compression.

- Auto-scrolling text – I built a simple HTML page with text and images, then used JavaScript to scroll it at a steady speed. It mimics a common use case like sharing a document or reading from a webpage.

These two types of content gave me a good mix to test how each codec handles different challenges – from keeping up with motion to preserving clarity in more static scenes.
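As a rough illustration of the auto-scrolling page, here is a minimal sketch of constant-speed scrolling driven by elapsed time rather than by frame count, so the speed stays the same even if a frame is dropped. The helper name `scrollOffset` and the speed of 60 px/s are assumptions made for this example, not the values used in the experiment.

```typescript
// Hypothetical helper: scroll position (in px) after `elapsedMs` at a fixed speed,
// clamped to the scrollable range [0, maxOffset].
function scrollOffset(elapsedMs: number, pxPerSecond: number, maxOffset: number): number {
  const offset = (elapsedMs / 1000) * pxPerSecond;
  return Math.min(Math.max(offset, 0), maxOffset);
}

// Browser usage (not executed here):
// const start = performance.now();
// const maxOffset = document.documentElement.scrollHeight - window.innerHeight;
// const step = (now: number) => {
//   window.scrollTo(0, scrollOffset(now - start, 60, maxOffset));
//   requestAnimationFrame(step);
// };
// requestAnimationFrame(step);
```

Deriving the position from the clock keeps every run on the same trajectory, which matters when runs are compared against each other.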

How I Set Everything Up

To properly test the codecs, I needed a solid setup that could capture everything – both what was being sent and what was received. I started with a tool called webrtc-sandbox, which is great for messing around with WebRTC internals (you can learn about this tool and many more in my other post, “Learning WebRTC in Practice: Best Tools and Demos”). I ended up tweaking it quite a bit to better handle screen sharing and make sure I could record both the outgoing and incoming video streams. Each test run gave me two video files – one for what was sent and one for what was received – which I used later for side-by-side analysis.

But I didn’t stop at video. I also wanted to track what was happening under the hood. For that, I pulled detailed WebRTC stats directly from the Chrome browser. These stats gave me a window into how each codec performed and how the simulated network behaved during each test.
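To give a feel for what those stats look like, here is a minimal sketch that turns two snapshots of an `outbound-rtp` stats entry into a bitrate and a frame rate. The field names (`timestamp`, `bytesSent`, `framesEncoded`) come from the standard WebRTC statistics; the interface and function names are assumptions for this example, and this is not necessarily how the experiment processed its data.

```typescript
// Subset of the fields found on an "outbound-rtp" video stats entry.
interface OutboundVideoSample {
  timestamp: number;      // milliseconds
  bytesSent: number;      // cumulative
  framesEncoded: number;  // cumulative
}

interface Throughput {
  bitrateKbps: number;
  fps: number;
}

// Counters are cumulative, so rates come from the difference between two snapshots.
function throughputBetween(prev: OutboundVideoSample, curr: OutboundVideoSample): Throughput {
  const seconds = (curr.timestamp - prev.timestamp) / 1000;
  if (seconds <= 0) throw new RangeError("samples must be in chronological order");
  return {
    bitrateKbps: ((curr.bytesSent - prev.bytesSent) * 8) / 1000 / seconds,
    fps: (curr.framesEncoded - prev.framesEncoded) / seconds,
  };
}

// Browser usage (not executed here):
// const report = await peerConnection.getStats();
// report.forEach((s) => {
//   if (s.type === "outbound-rtp" && s.kind === "video") { /* store s as a sample */ }
// });
```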

One big piece of the puzzle was CPU usage – and that turned out to be a bit tricky. Chrome’s regular release doesn’t let extensions monitor CPU load for individual tabs, so I used a development build of the browser and wrote my own extension to get around that.

I specifically focused on measuring CPU usage from the tab that was sending or receiving the screen share. That way, I excluded unrelated rendering tasks from other parts of the browser. Since both sending and receiving happened in the same tab, the numbers I got were a combined view – but still pretty close to what you’d see in a real-world use case. (Spoiler: encoding usually hits the CPU harder than decoding.)

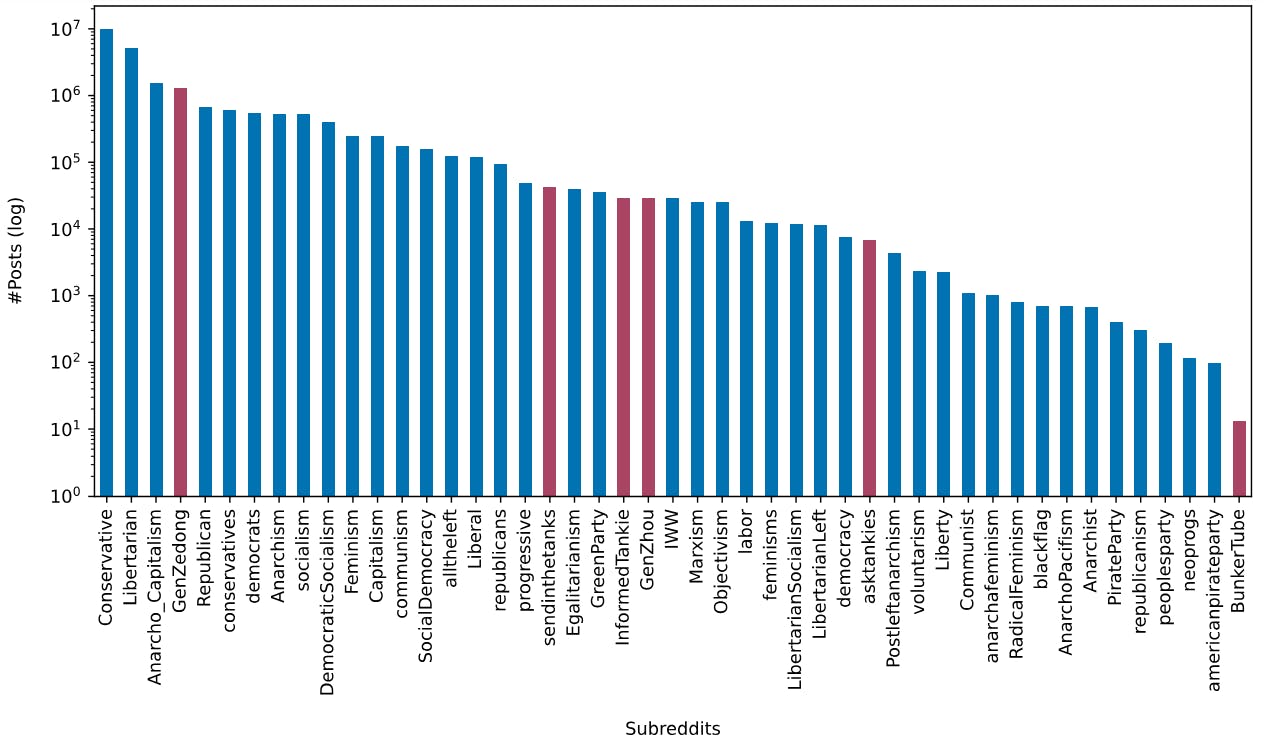

Gathering the Data: 157 Test Runs …

Once the setup was ready, it was time to run the actual experiments – and I ran a lot of them. I repeated the tests on the same machine, over and over, using different network conditions and codec settings to see how things held up. In total, I collected 157 data points, making sure every combination of test conditions was well represented.

This gave me a solid dataset to work with and allowed for some deep-dive analysis into how screen sharing behaves in WebRTC under different scenarios. Here’s what I was specifically testing:

- Video Type:

  I used two types of screen share content to reflect common use cases:

  - High-motion video – Real-world footage with tons of pixel changes every frame.

  - Auto-scrolled text – Mostly static visuals, but with pixels shifting position as the text scrolls.

- Codec:

  I tested the four most popular screen-sharing codecs: VP8, VP9, H.264, and AV1.

- Network Bandwidth:

  Since bandwidth plays a huge role in video quality (especially in video calls), I simulated a variety of network conditions to see how well each codec handled tight or fluctuating bandwidth.

By mixing and matching these variables in a structured way, I was able to mimic real-world screen sharing scenarios – the kind you’d run into on a video call, during a live demo, or in a collaborative remote session.
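One way to force a particular codec for a test run is to reorder the browser's codec list before negotiation. The sketch below shows only the reordering step as a pure function; `preferCodec` and `CodecLike` are names invented for this example, and the post does not say that the experiment selected codecs this way.

```typescript
// Minimal shape shared with the browser's codec capability objects.
interface CodecLike {
  mimeType: string; // e.g. "video/AV1", "video/H264"
}

// Move every entry with the wanted MIME type to the front, keeping the relative
// order of everything else unchanged.
function preferCodec<T extends CodecLike>(codecs: readonly T[], mimeType: string): T[] {
  const wanted = mimeType.toLowerCase();
  const preferred = codecs.filter((c) => c.mimeType.toLowerCase() === wanted);
  const rest = codecs.filter((c) => c.mimeType.toLowerCase() !== wanted);
  return [...preferred, ...rest];
}

// Browser usage (not executed here):
// const caps = RTCRtpReceiver.getCapabilities("video");
// transceiver.setCodecPreferences(preferCodec(caps?.codecs ?? [], "video/AV1"));
```

Keeping the remaining codecs in the list, rather than dropping them, leaves a fallback if the remote side does not support the preferred one.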

Fixing the Glitches: How I Made the Tests More Reliable

As with most experiments, the first few runs didn’t go as smoothly as I hoped. Two major problems came up right away:

- Manual Start = Messy Timing. At first, I was starting the screen sharing manually – literally clicking a button to kick things off. The problem? It was nearly impossible to sync the start of the recording with the start of the video content. That meant every test run had slightly different timing, which threw off the comparisons.

- Sent vs. Received = Out of Sync. Even when the timing was right, real-time video has its own quirks. Thanks to encoding, decoding, and network delays, the sent and received streams never lined up perfectly. This made frame-by-frame quality comparisons nearly impossible.

To solve these issues, I made a couple of key improvements:

- Programmatic Syncing: Instead of manually starting everything, I wrote some code to sync the start of the video or scrolling text with the recording process. That way, every test run began at the exact same moment on both ends – problem solved.

- QR Code Frame Matching: For the desync issue, I added a small QR code overlay to the shared video. This little marker acted like a timestamp – it let me match up frames between the sent and received streams with precision. Suddenly, frame-by-frame analysis became doable (and way more accurate).
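The frame-matching idea can be sketched as follows, assuming each recorded frame has already been run through a QR decoder and reduced to a numeric ID. The `TaggedFrame` shape and the `matchFrames` helper are hypothetical names for this illustration; the actual decoding step and data layout used in the experiment are not described in the post.

```typescript
// A frame from one of the recordings: its position in the file plus the ID
// decoded from the QR overlay (decoding itself is assumed to happen elsewhere).
interface TaggedFrame {
  frameId: number;
  index: number;
}

// Pair each received frame with the sent frame carrying the same QR ID.
// Returns [sentIndex, receivedIndex] tuples; frames without a partner are skipped.
function matchFrames(sent: readonly TaggedFrame[], received: readonly TaggedFrame[]): Array<[number, number]> {
  const sentIndexById = new Map<number, number>();
  for (const frame of sent) {
    // Keep the first occurrence if the same ID was captured more than once.
    if (!sentIndexById.has(frame.frameId)) sentIndexById.set(frame.frameId, frame.index);
  }
  const pairs: Array<[number, number]> = [];
  for (const frame of received) {
    const sentIndex = sentIndexById.get(frame.frameId);
    if (sentIndex !== undefined) pairs.push([sentIndex, frame.index]);
  }
  return pairs;
}
```

Each matched pair can then be fed into a per-frame quality comparison, while dropped frames simply never show up on the received side.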

There was one more thing I had to account for: WebRTC’s adaptive bitrate. One of WebRTC’s cool features is that it automatically adjusts video quality based on available bandwidth. But that adjustment doesn’t happen instantly – it takes a few seconds to stabilize. So, I added a short delay before starting the actual recording. This gave the system time to settle at the target bitrate, so the results would reflect the real quality you’d get after things even out.
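The experiment used a fixed delay. As a variation on that idea, the sketch below checks whether the most recent bitrate samples are all close to the target before recording starts. The function name, the 10% tolerance, and the three-sample window are assumptions chosen for illustration.

```typescript
// Returns true when the last `windowSize` bitrate samples are all within
// `tolerance` (as a fraction of the target) of the target bitrate.
function hasSettled(
  bitratesKbps: readonly number[],
  targetKbps: number,
  tolerance = 0.1,
  windowSize = 3,
): boolean {
  if (bitratesKbps.length < windowSize) return false;
  const recent = bitratesKbps.slice(-windowSize);
  return recent.every((b) => Math.abs(b - targetKbps) <= tolerance * targetKbps);
}
```

A check like this adapts to slow ramp-ups automatically, at the cost of making the start time of each recording slightly less predictable than a fixed delay.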

These changes made the experiment a lot more reliable and gave me confidence that the data I was collecting actually reflected how screen sharing behaves in the real world.

What I Measured (And Why It Matters)

I collected a lot of data during these tests, but to keep things straightforward and easy to compare, I focused on a few core metrics that really tell the story of how each codec performs in a screen sharing scenario.

Here’s what I looked at:

- Frame Rate

  This tells me how many frames per second were actually encoded, sent, and received. It’s a good indicator of how smooth the video stream feels – a higher frame rate usually means a more fluid experience.

- Resolution

  Resolution is all about how much visual detail is preserved. I tracked the number of pixels per frame at each stage (sent and received) to see whether the codecs held onto image quality or dropped it to save bandwidth.

- Video Quality

  I used a couple of key metrics here:

  - Quantization Parameter (QP) – A lower QP generally means better quality.

  - Peak Signal-to-Noise Ratio (PSNR) – This gives a numerical sense of how much the received video differs from the original. Higher = better.

- CPU Usage

  Codec performance isn’t just about what you see – it’s also about what your machine is doing behind the scenes. I measured how much CPU power was used for encoding and decoding during each test, normalized over time, to see which codecs are lightweight and which ones are resource hogs.

By breaking things down with these metrics, I was able to compare the codecs not just on quality, but also on smoothness, efficiency, and how demanding they are. That helped reveal where each codec shines – and where it struggles – in real-world screen sharing conditions.

Finally, the Results

How Much Does Content Type Matter? A Lot More Than You’d Think

One of the most surprising takeaways from my experiments was just how much the type of content you’re sharing affects screen sharing performance – both in terms of video quality and resource usage. And this was true for every codec I tested.

The idea behind it is pretty simple: when more pixels change from frame to frame (like in a fast-moving video), the system has to work harder. That means more bandwidth is needed, and your CPU has to hustle more to keep up.

Take AV1, for example. When I used it to share a 1.5-megapixel screen, the required bandwidth varied a lot depending on what was being shared. In one case, where the content was more dynamic, AV1 had to push significantly more data to keep the stream smooth. I captured this in the following graph, which shows just how drastically content complexity impacts bandwidth usage.

But it’s not just bandwidth – your hardware feels it too.

The next graph shows how CPU usage changes based on the content being shared. Again, using AV1 as the example, you can clearly see that more complex visuals demand much more CPU power to keep things running at the same frame rate and resolution.

This isn’t just an AV1 thing, either. All codecs rely on the same basic principles for encoding and decoding video, so you’d expect them to show similar behavior – but the data tells a different story. The CPU load doesn’t just change with the content, it also changes based on which codec you’re using.

To make this easier to compare, I put together the following table, which shows how much CPU each codec uses when streaming a 1.5-megapixel video at around 24 frames per second – a pretty typical setup for smooth screen sharing. The results highlight some major differences in how efficient each codec is when it comes to using your hardware.

| Content type | AV1 | H.264 | VP8 | VP9 |
|---|---|---|---|---|
| Motion | 287% | 213% | 270% | 364% |
| Text | 175% | 130% | 179% | 198% |

So, if you’re building or optimizing something that relies on WebRTC screen sharing, the takeaway is clear: both your content and your choice of codec matter. A lot.

Codec Showdown: Frame Rates, CPU Load, and the Real Costs of Quality

When it comes to screen sharing, one of the first things you notice is how smooth (or not) the video feels. That’s where frame rate comes in. If you’re sharing high-motion content, like video playback or animations, a higher frame rate is essential to avoid choppy, hard-to-watch streams.

For this part of the experiment, I focused on frame rate performance across different codecs while keeping everything else constant: same resolution (about 1.5 megapixels) and the same content for each test. I used the contentHint WebRTC setting to make sure that resolution stayed locked in across the board.

In the following image, you can see how different codecs handle high-motion content as bandwidth increases. On the x-axis: bitrate in Mbps. On the y-axis: frame rate in frames per second (fps).

Here’s what stood out:

- H.264 and AV1 pulled ahead once bandwidth hit 2 Mbps or more, both delivering 20+ fps – a smooth experience that’s achievable even on a decent 3G connection.

- VP8 and VP9 didn’t keep up as well. They hovered at about half the frame rate under the same conditions, and really needed 4 Mbps or more to feel usable – which isn’t always realistic on lower-end networks.

Then I shifted to low-motion content – a slowly scrolling text page – to see how codecs perform when not much is changing between frames.

Unsurprisingly, both H.264 and AV1 did even better in this scenario, with AV1 coming out on top. A likely reason is AV1’s set of screen-content coding tools, such as Intra Block Copy, which lets the encoder predict a block from an already-coded area of the same frame instead of coding repeated patterns (like text glyphs) from scratch. Tools like this are very effective for static or semi-static screen sharing.

In the next graph, you can see just how efficiently AV1 handles these low-motion situations, keeping bandwidth usage impressively low while maintaining high visual quality.

But… there’s a trade-off.

AV1 might give you better visuals and compression, but it also eats up more CPU. The next image shows this clearly: AV1’s CPU usage climbs steadily as bitrate increases, outpacing H.264 by a long shot. H.264 has a big advantage here thanks to widespread hardware acceleration, which keeps its CPU load low and stable.

VP9, interestingly, uses even more CPU than AV1 – following a similar trend but with higher peaks. Both VP9 and AV1 rely on complex algorithms to deliver good quality, but that comes at a cost: they’re heavy hitters on your processor.

There was a twist when I revisited low-motion content. This time, VP8 and VP9 actually looked pretty efficient – using less CPU than AV1, as shown in the next graph.

AV1, despite being designed with screen sharing in mind, still used the most CPU. Why? All those optimizations that help it compress high-motion video also add overhead – even when there’s not much happening on screen.

A big reason for this? AV1 still lacks widespread hardware encoding support. While decoding is relatively lightweight, encoding is still mostly done in software – and that’s a CPU-intensive task, especially in real-time scenarios like screen sharing where both encoding and decoding happen constantly.

This is where things get tricky for portable devices like laptops and tablets. Without hardware acceleration, codecs like AV1 can quickly drain power and hog resources – not ideal when you’re on the go. Until better hardware support becomes more common, AV1’s advanced features come with a pretty noticeable performance cost.

Codecs and Resolution: What Happens When You Prioritize Frame Rate?

So far, the results I’ve shared came from tests that kept resolution constant. When bandwidth got tight, the system would respond by lowering the frame rate – which makes sense for things like static content or text. But what if the goal is to keep things moving smoothly, even if that means sacrificing resolution?

To explore this, I ran a new set of experiments where WebRTC was configured to prioritize frame rate instead. This was done using the contentHint setting in WebRTC, which lets you tell the browser what’s more important – high resolution or smooth motion.
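Here is a minimal sketch of how that switch can look in code. `contentHint` is a property of a video `MediaStreamTrack`; per the content hints specification, `"motion"` asks the browser to favor frame rate, while `"detail"` (and `"text"`) favor resolution. The `applyPriority` helper and the `Priority` type are names made up for this example.

```typescript
type Priority = "framerate" | "resolution";

// Minimal shape of a MediaStreamTrack needed for this example.
interface HintableTrack {
  kind: string;
  contentHint: string;
}

// Set the content hint that matches the desired trade-off.
function applyPriority(track: HintableTrack, priority: Priority): void {
  if (track.kind !== "video") throw new TypeError("expected a video track");
  track.contentHint = priority === "framerate" ? "motion" : "detail";
}

// Browser usage (not executed here):
// const stream = await navigator.mediaDevices.getDisplayMedia({ video: true });
// applyPriority(stream.getVideoTracks()[0], "framerate");
```

The hint is only a request: the browser still adapts to the available bandwidth, which is why the measured frame rate fluctuates around the target.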

I aimed to hold frame rate at a consistent 30 fps, which is widely recognized as the sweet spot for smooth, comfortable viewing. Getting that consistently was tough – adaptive streaming means there’s always a bit of fluctuation – but the results offered valuable insight into how each codec handles this trade-off.

To help analyze this behavior, I introduced a new metric:

Sent Pixels per Second = Frame Rate × Resolution

This gives a more complete picture than just looking at FPS or resolution alone. It shows how much visual data the codec is actually delivering per second under different conditions.
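As a quick worked example of the metric, the sketch below multiplies frame rate by the pixel count of a frame. The function name and the two sample configurations are illustrative assumptions, not measurements from the dataset.

```typescript
// Sent pixels per second = frame rate × resolution (width × height of each frame).
function sentPixelsPerSecond(fps: number, width: number, height: number): number {
  return fps * width * height;
}

// A 1600×960 (about 1.5 megapixel) stream at 24 fps...
const fullResolution = sentPixelsPerSecond(24, 1600, 960);
// ...versus a stream downscaled to 1280×720 but running at 30 fps.
const downscaled = sentPixelsPerSecond(30, 1280, 720);
console.log(fullResolution, downscaled);
```

In this made-up comparison the full-resolution stream delivers more pixels per second despite the lower frame rate, which is exactly the kind of trade-off the metric makes visible.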

For high-motion video, AV1 once again came out on top – and by a noticeable margin. Even at lower bitrates, it managed to transmit significantly more pixels per second than any other codec. This clearly shows how well AV1 handles dynamic content when the system is under pressure to maintain a high frame rate. Please see the following graph:

When I switched to low-motion content – like a webpage with scrolling text – the playing field got a little more level. The performance of all codecs became more uniform, as you can see in the next image. However, AV1 still retained a lead, especially at higher bitrates. Its compression optimizations helped it maintain strong throughput without significantly increasing resource use.

What does all this mean in practice?

Well, if your use case involves dynamic, high-motion visuals – like video walkthroughs, live animations, or game streaming – prioritizing frame rate can make a big difference, and AV1 proves to be particularly capable in that environment.

Even for slower-moving content, AV1 continues to show strength. While the difference may be smaller, it consistently manages to send more visual data – meaning better quality at the same or lower bandwidth – thanks to its advanced compression strategies.

In both cases, the “sent pixels per second” metric proved useful for understanding how codecs balance resolution and frame rate when bandwidth is limited. And AV1’s performance under these conditions further cements it as the most capable option – provided your system can handle the additional CPU demands.

How Clean Is the Image? Let’s Talk PSNR

Beyond frame rates, resolution, and CPU usage, there’s one more important piece of the screen sharing puzzle: how close does the received image look to the original? That’s where Peak Signal-to-Noise Ratio (PSNR) comes in.

PSNR is a widely used metric for measuring the quality of compressed video. It tells you how much distortion – or “noise” – has been introduced during encoding. It’s measured in decibels (dB), and the rule of thumb is simple: the higher, the better. A high PSNR means the image looks almost exactly like the original; a lower score means there’s more visible degradation.
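For reference, PSNR for 8-bit samples is 10 · log10(255² / MSE), where MSE is the mean squared difference between the original and received samples. The sketch below computes it over two equally sized sample buffers; the function name and the flat `Uint8Array` layout are assumptions for this example, and the post does not specify which color planes or tooling the experiment used.

```typescript
// PSNR in dB between two buffers of 8-bit samples (for example, the luma plane
// of an original frame and of its received counterpart).
function psnr(original: Uint8Array, received: Uint8Array): number {
  if (original.length === 0 || original.length !== received.length) {
    throw new RangeError("buffers must be non-empty and of equal length");
  }
  let squaredError = 0;
  for (let i = 0; i < original.length; i++) {
    const diff = original[i] - received[i];
    squaredError += diff * diff;
  }
  const mse = squaredError / original.length;
  // Identical frames have no error, so PSNR is unbounded.
  return mse === 0 ? Infinity : 10 * Math.log10((255 * 255) / mse);
}
```

Because the scale is logarithmic, a few dB of difference corresponds to a large change in mean squared error.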

To put this into context, I tested PSNR values across codecs in two different scenarios: one where resolution is the priority, and another where frame rate takes the lead. Both tests used the same high-motion video content to keep things consistent.

In the resolution-priority setup, where clarity is the focus (even if the video ends up a bit choppy), H.264 performed particularly well, delivering sharp visuals with minimal distortion. It’s a strong choice when smoothness isn’t as critical.

When the goal shifts to maintaining fluid motion, AV1 comes out ahead, especially at higher bitrates. It manages to preserve image quality even while compressing aggressively to hit frame rate targets.

While the differences in PSNR between codecs aren’t always dramatic, they highlight the trade-offs you make when selecting a codec. Some prioritize sharpness; others aim for smooth motion – and depending on your use case, one may be better suited than the other.

Then I ran the same tests again, this time using scrolled text content – something that really stresses the importance of resolution and clarity.

When motion is prioritized, PSNR values across all codecs look pretty similar. The content isn’t changing much, so the differences in compression strategy don’t have much impact on overall image quality.

This is where things get interesting. With resolution as the priority, AV1 pulls far ahead of the other codecs – especially at higher bitrates. Its performance here is exceptional.

Why? AV1 includes specific optimizations for handling static, repetitive content like text. These allow it to maintain higher image fidelity, avoid artifacts, and compress more efficiently. That makes AV1 a fantastic choice for use cases like document sharing or code walkthroughs – anywhere that crisp, readable detail really matters.

In short, PSNR helps highlight a subtle but important dimension of screen sharing quality. Whether you prioritize motion or sharpness, understanding how codecs behave under different constraints lets you choose the right one for the job.

What’s the Deal with QP? Understanding Compression vs. Quality

Another important factor in screen sharing quality is something called the Quantization Parameter, or QP. If you’ve ever wondered what controls how much detail gets lost during compression, this is it.

In simple terms, QP tells the encoder how aggressively to compress the video.

- A low QP means less compression and better image quality.

- A high QP means more compression – which saves bandwidth, but can make the video look worse.

While PSNR gives us a result – how much image quality was preserved – QP tells us what the encoder was aiming for. So, I looked at both.

In this study:

- QP values were pulled from standard WebRTC metrics.

- PSNR was measured after the fact by comparing each original frame to its received version.
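The standard WebRTC stats expose QP as a running total (`qpSum`) alongside a frame counter (`framesEncoded`), so an average QP for a time window has to be derived from two snapshots. The sketch below shows one way to do that; the interface and function names are assumptions for this example, not the code used in the study.

```typescript
// Cumulative counters taken from an "outbound-rtp" video stats entry.
interface QpSample {
  qpSum: number;
  framesEncoded: number;
}

// Average QP of the frames encoded between two snapshots, or null when no
// new frame was encoded in that interval.
function averageQp(prev: QpSample, curr: QpSample): number | null {
  const frames = curr.framesEncoded - prev.framesEncoded;
  if (frames <= 0) return null;
  return (curr.qpSum - prev.qpSum) / frames;
}
```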

Here’s where things got interesting. AV1 had the best PSNR scores, meaning it preserved image quality the best – but it also had QP values up to four times higher than the other codecs. That seems contradictory at first glance.

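But here’s the catch: each codec defines and handles QP differently, so the values aren’t directly comparable. For example, H.264 QP runs from 0 to 51, while VP8 reports a quantizer index from 0 to 127 and VP9 and AV1 use an index from 0 to 255. A QP of 50 in one codec doesn’t necessarily mean the same level of compression as a QP of 50 in another.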

Still, QP trends tell us something useful. Across all codecs, I observed that as bandwidth increases, QP decreases. That makes sense – with more bandwidth available, the codecs can afford to reduce compression and improve image quality.

So even though we can’t line up QP numbers side by side across codecs, they still show how each codec dynamically adjusts compression based on available network conditions.

Bottom line: QP isn’t a quality score – it’s a setting. But tracking how it shifts with bandwidth gives helpful insight into what the encoder is doing behind the scenes. And combined with PSNR, it gives a more complete picture of codec behavior.

Final Thoughts: What This All Means for WebRTC Screen Sharing

After diving deep into how WebRTC performs under the hood, one thing is clear: not all codecs are created equal – and the best choice really depends on your priorities and environment.

Here are the key takeaways from my experiments:

AV1: Best Quality, Highest Cost

AV1 consistently delivered the best visual quality, whether I was sharing fast-moving video or slowly scrolling text. Its advanced compression and optimizations make it incredibly efficient – but that comes with a price. AV1 is CPU-hungry, and since hardware support is still catching up, it’s not ideal for lower-powered or mobile devices just yet.

H.264: The Solid All-Rounder

If you’re looking for a balance between performance and efficiency, H.264 is still a great choice. It’s widely supported, hardware-accelerated on most platforms, and does a good job in almost every scenario – especially when system resources or battery life are a concern.

Content Matters More Than You Think

The type of content you’re sharing has a huge impact on performance. High-motion video demands way more from your CPU and bandwidth than static content like documents or text. Choosing the right codec – and the right settings – for your content can make a massive difference in quality and resource usage.

Thanks to a custom Chrome extension I built, I was able to measure CPU usage accurately during screen sharing. The results showed big differences in how demanding each codec is, which becomes especially important on mobile devices or in energy-sensitive environments.

What’s Next? Where This Research Could Go

This experiment opened the door to some exciting next steps. Here’s where I think future work could make an even bigger impact:

Testing on Mobile Devices

So far, all tests were done on desktop – but screen sharing is just as common (if not more) on phones and tablets. Testing how these codecs perform on mobile would give a more complete picture, especially in terms of responsiveness and power usage.

Energy Efficiency

Speaking of power – codecs don’t just burn CPU, they burn battery life too. Future research should explore which codecs are most energy-efficient, especially for long screen sharing sessions on portable devices.

AI-Powered Codec Tuning

Imagine if WebRTC could automatically adjust codec settings based on your current content and network speed. AI could make that possible, dynamically finding the perfect balance between quality and performance in real-time.

Wrapping It Up

Codec choice is more than just a technical decision – it directly affects the quality, smoothness, and resource use of your screen sharing experience. Whether you’re building a video conferencing tool, a collaboration platform, or just optimizing your own workflows, understanding how these codecs behave under different conditions can help you make smarter, more effective decisions.

As WebRTC continues to evolve, so will the tools and techniques around it. I hope this deep dive helps others better understand what’s going on behind the scenes – and how to get the most out of their screen sharing stack.

Want to Explore the Data Yourself?

If you’re curious to dig deeper into the results or run your own analysis, I’ve made the full dataset from this study available here:

Download the dataset on Kaggle

It includes raw metrics for frame rate, resolution, PSNR, QP, CPU usage, and more – all organized by codec, content type, and bandwidth condition. Feel free to use it for your own experiments, benchmarking, or just to explore how WebRTC behaves in different scenarios.