Table of Links

Abstract and 1 Introduction

2 LongFact: Using LLMs to generate a multi-topic benchmark for long-form factuality

3 SAFE: LLM agents as factuality autoraters

4 LLM agents can be better factuality annotators than humans

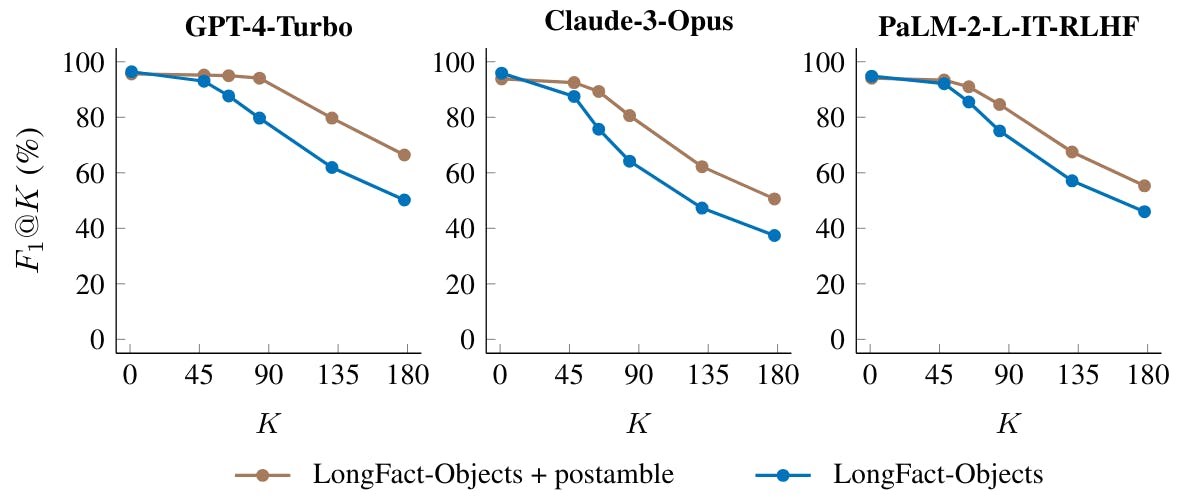

5 F1@k: Extending F1 with recall from human-preferred length

6 Larger LLMs are more factual

7 Related Work

8 Limitations

9 Conclusion, Acknowledgments, Author Contribution, and References

Appendix

A. Frequently asked questions

B. LongFact details

C. SAFE details

D. Metric details

E. Further analysis

B LONGFACT DETAILS

B.1 DATA-GENERATION PROCESS

To create LongFact, we first manually selected a set of 38 topics (shown in Appendix B.2) that provide broad coverage of long-form factuality. For a given topic, we generate prompts by instructing GPT-4[16] to come up with questions that require long-form responses and that are about specific and niche concepts (LongFact-Concepts) or objects (LongFact-Objects) within that topic. We generate prompts one at a time, obtaining one new prompt after each model call. During each model call, we provide a set of ten in-context exemplars. This set starts as ten manually created exemplars (shown in Table 3), and its entries are gradually replaced by generated prompts as each model call produces a new prompt; a changing set of in-context exemplars helps increase prompt diversity. The selected ten in-context exemplars and the given topic are inserted into the prompt templates shown in Table 4 and passed to the model to generate the next prompt.[17] We continue this process until the desired number of prompts has been generated. Source code for generating LongFact using this process can be found at https://github.com/google-deepmind/long-form-factuality.
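The one-prompt-per-call loop with a changing exemplar set can be sketched as follows. This is a minimal illustration, not the released implementation: `generate_prompts`, `fake_model`, and the first-in-first-out replacement policy are assumptions (the text only says exemplars are gradually replaced by generated prompts), and a stub function stands in for the GPT-4 call.

```python
from collections import deque
from itertools import count
from typing import Callable, Deque, List, Tuple

# A (topic, question) pair used as an in-context exemplar.
Exemplar = Tuple[str, str]
# Hypothetical model interface: (topic, exemplars) -> one new question.
ModelFn = Callable[[str, List[Exemplar]], str]


def generate_prompts(topic: str, seed_exemplars: List[Exemplar],
                     call_model: ModelFn, num_prompts: int,
                     window: int = 10) -> List[str]:
    """Generate prompts one call at a time with a rolling exemplar set.

    Assumption: each new prompt evicts the oldest exemplar (FIFO); the paper
    only states that exemplars are gradually replaced by generated prompts.
    """
    exemplars: Deque[Exemplar] = deque(seed_exemplars[:window], maxlen=window)
    generated: List[str] = []
    while len(generated) < num_prompts:
        question = call_model(topic, list(exemplars))  # one prompt per call
        generated.append(question)
        exemplars.append((topic, question))  # maxlen drops the oldest entry
    return generated


# Usage with a stub in place of GPT-4 (hypothetical; no API call is made).
_ids = count()
calls: List[List[Exemplar]] = []


def fake_model(topic: str, exemplars: List[Exemplar]) -> str:
    calls.append(exemplars)  # record which exemplars the "model" saw
    return f"What is niche fact #{next(_ids)} about {topic}?"


seeds = [("biology", f"Seed question {i}?") for i in range(10)]
prompts = generate_prompts("astronomy", seeds, fake_model, num_prompts=60)
```

After ten calls, every manual seed has been evicted, so later calls are conditioned only on previously generated prompts; this is one simple way to realize the diversity-increasing behavior the paragraph describes.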

We first use this process to generate 60 prompts per topic for both LongFact-Concepts and LongFact-Objects at a total cost of approximately $260 in OpenAI API credits. For each topic, we then manually remove any duplicated questions, which we define as any two questions that can be answered with approximately the same response. To balance the number of prompts per topic, we then randomly select 30 prompts to keep from each topic's set of deduplicated questions. This results in a total of 1,140 prompts (38 topics × 30 prompts) for LongFact-Concepts and 1,140 prompts for LongFact-Objects. We release both LongFact-Concepts and LongFact-Objects at https://github.com/google-deepmind/long-form-factuality/tree/main/longfact.
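The balancing step amounts to uniform sampling without replacement within each topic. A minimal sketch, assuming the manual deduplication has already been done; `balance_topics`, the fixed random seed, and the toy data (55 surviving prompts per topic) are hypothetical.

```python
import random
from typing import Dict, List


def balance_topics(deduped: Dict[str, List[str]], per_topic: int = 30,
                   seed: int = 0) -> Dict[str, List[str]]:
    """Keep a uniform random subset of `per_topic` prompts for every topic.

    Deduplication was done manually for LongFact, so `deduped` is assumed to
    already contain only non-duplicate questions.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility (an assumption)
    balanced: Dict[str, List[str]] = {}
    for topic, prompts in deduped.items():
        if len(prompts) < per_topic:
            raise ValueError(f"{topic!r} has only {len(prompts)} prompts")
        balanced[topic] = rng.sample(prompts, per_topic)  # no replacement
    return balanced


# Hypothetical data: 38 topics, each with 55 prompts left after deduplication.
fake = {f"topic_{i}": [f"q{i}_{j}" for j in range(55)] for i in range(38)}
kept = balance_topics(fake)
total = sum(len(v) for v in kept.values())  # 38 topics x 30 prompts = 1,140
```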

B.2 LIST OF TOPICS

We manually selected a set of 38 topics for LongFact to provide broad coverage of long-form factuality across many topics. LongFact includes topics from MMLU (Hendrycks et al., 2021) for broader topic coverage, as well as topics such as celebrities, gaming, movies, and music, which we added to account for topics that are less academic in nature. Table 6 shows each topic, a list of example concepts and objects within that topic that are tested by LongFact, and a classification of that topic into a supercategory of either “STEM”, “social sciences”, “humanities”, or “other” (see Figure 2 for the ratio of supercategories across all topics). Example prompts from LongFact-Concepts and LongFact-Objects for each topic are shown in Appendix B.3.

B.3 EXAMPLE PROMPTS

[16] We use gpt-4-0613 at a temperature of 1.0 and a max decode length of 128 tokens.

[17] The “moral disputes” topic in LongFact-Objects requires a custom set of instructions to obtain prompts that ask about specific objects (see Table 5). The in-context exemplars remain the same.

Authors:

(1) Jerry Wei, Google DeepMind (lead contributor);

(2) Chengrun Yang, Google DeepMind (lead contributor);

(3) Xinying Song, Google DeepMind (lead contributor);

(4) Yifeng Lu, Google DeepMind (lead contributor);

(5) Nathan Hu, Google DeepMind and Stanford University;

(6) Jie Huang, Google DeepMind and University of Illinois at Urbana-Champaign;

(7) Dustin Tran, Google DeepMind;

(8) Daiyi Peng, Google DeepMind;

(9) Ruibo Liu, Google DeepMind;

(10) Da Huang, Google DeepMind;

(11) Cosmo Du, Google DeepMind;

(12) Quoc V. Le, Google DeepMind.

![Table 4: Prompt templates used for generating LongFact-Concepts and LongFact-Objects. The instruction to wrap the question in square brackets allows for easier parsing of the generated prompt while ignoring irrelevant parts of the model response such as “Sure, I can help you with that.” The [TOPIC] placeholder will be replaced by the given topic to generate prompts for, and the [EXAMPLE TOPIC #1→10] and [EXAMPLE QUESTION #1→10] placeholders will be replaced by the selected in-context exemplars for the current round of data generation, as described in Appendix B.1](https://hackernoon.imgix.net/images/fWZa4tUiBGemnqQfBGgCPf9594N2-pu934zz.png?auto=format&fit=max&w=1920)
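Filling the Table 4 placeholders and pulling the square-bracketed question out of a chatty response could look like the sketch below. The template wording, `fill_template`, and `parse_question` are hypothetical; the actual instructions are shown only in the Table 4 image.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical header; the real instruction text is in Table 4.
HEADER = ("Instructions: Ask a question about a specific concept in [TOPIC]. "
          "Wrap the question in square brackets.")

# Matches the first [...] span that contains no nested brackets.
_BRACKETED = re.compile(r"\[([^\[\]]+)\]")


def fill_template(topic: str, exemplars: List[Tuple[str, str]]) -> str:
    """Replace [TOPIC] and append the exemplar (topic, question) pairs."""
    shots = "\n".join(f"TOPIC: {t}\nQUESTION: [{q}]" for t, q in exemplars)
    # Plain concatenation avoids str.format issues if a topic contains braces.
    return (HEADER.replace("[TOPIC]", topic) + "\n" + shots
            + f"\nTOPIC: {topic}\nQUESTION:")


def parse_question(response: str) -> Optional[str]:
    """Return the first bracketed span, ignoring text such as preambles."""
    match = _BRACKETED.search(response)
    return match.group(1).strip() if match else None
```

For example, `parse_question("Sure, I can help you with that. [What is X?]")` returns `"What is X?"`, and a response with no brackets yields `None`, which a caller could treat as a failed generation and retry.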