An engineering experiment: treating DNA not as biology, but as 3 billion lines of obfuscated legacy source code. Imagine you have just been hired to maintain a project of this scale without documentation.

There is no documentation. The local dev environment is wet, squishy, and runs at 37 degrees Celsius. The original developers have been gone for millions of years. And when you try to compile it, you realize that only about 2% of the code actually compiles into binaries (proteins).

n The other 98%? The previous maintainers labeled it “Junk DNA”

In the software world, we know “junk” does not exist. We have legacy code. We have commented-out blocks. We have deprecated drivers. We have obfuscation. We have test vectors left in production. But we almost never have 3 gigabytes of random noise that does absolutely nothing.

I did not want to do wet lab biology. I wanted to run a static analysis audit on the source code of life.

THE DEFINITION

Bio-Kernel is an alignment-free pattern prioritization framework: it identifies recurrent token-neighborhood signatures and rejects multiple structured null models; functional interpretation is explicitly out of scope and requires orthogonal validation.

THE EXECUTION: BINARIZING THE STREAM

We did not start with a hypothesis. We started with data conversion. We took the complete human genome (24 chromosomes: 1-22, X, Y) and treated it as a raw binary stream.

Inside the ‘bin/’ directory of the project, you will find our intermediate artifacts: thousands of ‘.biolab’ files.

n We binarized every gene. We converted the ACGT sequences into discrete digital tokens, effectively stripping away the “biology” to look at the “logic”. We processed 19,821 gene regions across the entire genome, creating a standardized, machine-readable dataset that allows us to run diffs, checksums, and pattern-matching algorithms that serve no purpose in a wet lab but are standard in a code audit.

THE ENGINE: TRIDENT

==Once the data was binarized, we fed it into TRIDENT, our pattern mining engine. Trident is composed of three distinct functional parts:==

1. The Representation Layer (Tokenizer): This part translates the chaotic biological sequence into a controlled vocabulary of tokens. It turns a fuzzy analog signal into a discrete digital string that engineering tools can process.

n 2. The Pattern Miner (The “Grep”): This engine scans the tokenized stream looking for recurrence. It hunts for “short token signatures”—specific sequences of code that appear more often than they should. It looks for “loops”, “subroutines”, and “shared libraries” hidden in the intergenic regions.

n 3. The Null Hypothesis Generator (The Validator): In engineering, if you find a pattern, your first job is to prove it is a hallucination.

This framing reduces the risk of overinterpretation by explicitly quantifying how much signal is attributable to local structure preserved under block shuffling. We report permutation p-values computed as:

p = (b + 1) / (N + 1)

Where ‘b’ is the number of permutations where the null statistic equals or exceeds the observed statistic. Using N=1000 permutations, for our strongest signals where b=0, we report p <= 1/1001 (approx 0.000999). n

THE FINDINGS: GHOST IN THE SHELL

What we found reminds me of reading a decompiled binary. You see the active functions (genes), sure. But in between them, you see repetitive padding. You see structures that look like they used to do something.

We identified a cross-chromosomal recurrence of statistically similar token-neighborhood signatures and opcode-profile vectors under an explicit similarity metric.

We found 18 specific “survivor” signatures. These are sequences of code that survived our most aggressive statistical noise filtering. They appear in different chromosomes, in different contexts, yet they are identical. n It looks like copy-pasted code. It looks like a shared library that was commented out eons ago. n

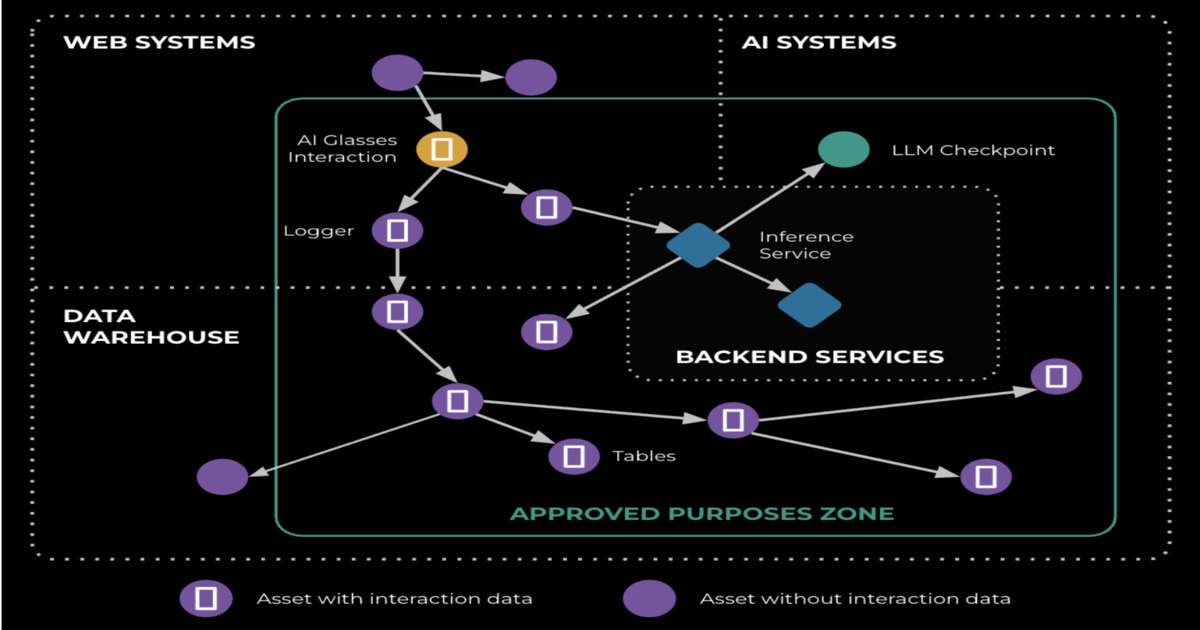

THE ARCHITECTURE: BEYOND THE SCANNER

==Bio-Kernel is not just a script. It is a distributed system composed of specialized kernels:==

– kernel_quantum: This is the cognitive core or “Recall” engine. It holds the vector memory of the system, connecting our findings with known biological associations to see if our “ghosts” map to known “functions”.

n – unknown_engine: This is the dedicated lab for the “dark matter”. It takes the high-scoring unknown regions flagged by Trident and isolates them for deep analysis, treating them as uncharacterized binary blobs.

n – External Connectors: The system is not an island. It connects to public Genome APIs to run cross-species validation. We compare our “human” legacy code against other species to see if they share the same commented-out blocks (conserved non-coding regions).

THE INVITATION

==This project is open source. The data is reproducible.==

I do not want you to believe my narrative. I want you to pull the repo, run the null hypothesis tester, and try to break my findings. If this signal is real, it changes how we read the code. If it is not, then we need better noise models.

The repository is currently being staged and will be fully available at: https://github.com/sirfederick/bio-kernel-public n The release is tagged. The artifacts are hashed. The audit is open.

Can you explain the legacy code?