Key Takeaways

- Selling yourself and your stakeholders on doing architectural experiments is hard, despite the significant benefits of this approach; you like to think that your decisions are good but when it comes to architecture, you don’t know what you don’t know.

- Stakeholders don’t like to spend money on things they see as superfluous, and they usually see running experiments as simply “playing around”. You have to show them that experimentation saves money in the long run by enabling better-informed decisions.

- These better decisions also reduce the overall amount of work you need to do by reducing costly rework.

- You may think that you are already experimenting by doing Proofs of Concept (POCs). Architectural experiments and POCs have different purposes. A POC helps validate that a business opportunity is worth pursuing, while an architectural experiment tests some parts of the solution to validate that it will support business goals.

- Sometimes, architectural experiments need to be run in the customer’s environment because there is no way to simulate real-world conditions. This sounds frightening, but techniques can be used to roll back the experiments quickly if they start to go badly.

As we stated in a previous article, being wrong is sometimes inevitable in software architecting; if you are never wrong, you are not challenging yourself enough, and you are not learning. The essential thing is to test our decisions as much as possible with experiments that challenge our assumptions and to construct the system in such a way that when our decisions are incorrect the system does not fail catastrophically.

Architectural experimentation sounds like a great idea, yet it does not seem to be used very frequently. In this article, we will explore some of the reasons why teams don’t use this powerful tool more often, and what they can do about leveraging that tool for successful outcomes.

First, selling architectural experimentation to yourself is hard

After all, you probably already feel that you don’t have enough time to do the work you need to do, so how are you going to find time to run experiments?

You need to experiment for a simple reason: you don’t know what the solution needs to be because you don’t know what you don’t know. This is an uncomfortable feeling that no one really wants to talk about. Bringing these issues into the open stimulates healthy discussions that shape the architecture, but before you can have those discussions you need data.

One of the forces to overcome in these discussions is confirmation bias, or the belief that you already know what the solution is. Experimentation helps you to challenge your assumptions to reach a better solution. The problem is, as the saying goes, “the truth will set you free, but first it will make you miserable”. Examples of this include:

- Experimentation may expose that solutions that have worked for you in the past may not work for the system you are working on now.

- It may expose you to the fact that some “enterprise standards” won’t work for your problem, forcing you to explain why you aren’t using them.

- It may expose that some assertions made by “experts” or important stakeholders are not true.

Let’s consider a typical situation: you have made a commitment to deliver an MVP, although the scope is usually at least a little “flexible” or “elastic”; the scope is always a compromise. But the scope is also usually optimistic, and you rarely have the resources to achieve it with confidence. From an architectural perspective you have to make decisions, but you don’t have enough information to be completely confident in them; you are making a lot of assumptions.

You could, and usually do, hope that your architectural decisions are correct and simply focus on delivering the MVP. If you are wrong, the failure could be catastrophic. If you are willing to take this risk you may want to keep your resumé updated.

Your alternative is to take out an “insurance policy” of sorts by running experiments that will tell you whether your decisions are correct without resorting to catastrophic failure. Like an insurance policy, you will spend a small amount to protect yourself, but you will prevent a much greater loss.

Next, selling stakeholders on architectural experimentation is a challenge

As we mentioned in an earlier article, getting stakeholder buy-in for architectural decisions is important – they control the money, and if they think you’re not spending it wisely they’ll cut you off. Stakeholders are, typically, averse to having you do work they don’t think has value, so you have to sell them on why you are spending time running architectural experiments.

Architectural experimentation is important for two reasons. First, it does for technical decisions what MVPs do for functional requirements: MVPs are essential to confirm that you understand what customers really need, and architectural experiments confirm that you understand how to satisfy the quality attribute requirements for the MVP.

Architectural experiments are also important because they help to reduce the cost of the system over time. This has two parts: you will reduce the cost of developing the system by finding better solutions, earlier, and by not going down technology paths that won’t yield the results you want. Experimentation also pays for itself by reducing the cost of maintaining the system over time by finding more robust solutions.

Ultimately, running experiments is about saving money: you reduce the cost of development by spending less on solutions that won’t work or that will cost too much to support. You can’t run experiments on every architectural decision and eliminate the cost of all unexpected changes, but you can run experiments to reduce the risk of being wrong about the most critical decisions. While stakeholders may not understand the technical aspects of your experiments, they can understand the monetary value.

Of course, running experiments is not free; they take time and money away from developing things that stakeholders want. But, like an insurance policy that costs you the premiums but protects you from much greater losses, experiments protect you from the effects of costly mistakes.
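The insurance analogy can be made concrete with a rough expected-cost comparison. The sketch below is only an illustration: the function name and every figure in it are made up, and in practice the probabilities are estimates you would have to defend to your stakeholders.

```python
def expected_cost(experiment_cost: float, p_wrong: float, rework_cost: float) -> float:
    """Certain spend on the experiment plus probability-weighted rework."""
    return experiment_cost + p_wrong * rework_cost


# All figures are hypothetical and exist only to show the arithmetic.
REWORK_COST = 400_000      # cost of undoing a wrong architectural decision late
P_WRONG_UNTESTED = 0.30    # estimated chance an untested decision is wrong
P_WRONG_TESTED = 0.05      # estimated residual chance after experimenting
EXPERIMENT_COST = 25_000   # the "premium"

without_experiment = expected_cost(0, P_WRONG_UNTESTED, REWORK_COST)
with_experiment = expected_cost(EXPERIMENT_COST, P_WRONG_TESTED, REWORK_COST)

print(f"Expected cost without experiment: {without_experiment:,.0f}")
print(f"Expected cost with experiment:    {with_experiment:,.0f}")
```

With these invented inputs the expected cost falls from 120,000 to 45,000. The point is not the specific numbers but that the comparison is expressed in money, which is the language stakeholders already use.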

Selling them on the need to do experiments can be especially challenging because it raises questions, in their minds anyway, about whether you know what you are doing. Aren’t you supposed to have all the answers already?

The reality is that you don’t know everything you would like to know; developing software is a field that requires lifelong learning: technology is always changing, creating new opportunities and new trade-offs in solutions. Even when technology is relatively static, the problems you are trying to solve, and therefore their solutions, are always changing as well. No one can know everything and so experimentation is essential. As a result, the value of knowledge and experience is not in knowing everything up-front but in being able to ask the right questions.

You also never have enough time or money to run architectural experiments

Every software development effort we have ever been involved in has struggled to find the time and money to deliver the full scope of the initiative, as envisioned by stakeholders. Assuming this is true for you and your teams, how can you possibly add experimentation to the mix?

The short answer is that not everything the stakeholders “want” is useful or necessary. The challenge is to find out what is useful and necessary before you spend time developing it. Investing in requirements reviews turns out not to be very useful; in many cases, the requirement sounds like a good idea until the stakeholders or customers actually see it.

This is where MVPs can help improve architectural decisions by identifying functionality that doesn’t need to be supported by the architecture, which doubly reduces work. Using MVPs to figure out work that doesn’t need to be done makes room to run experiments about both value and architecture. Identifying scope and architectural work that isn’t necessary “pays” for the experiments that help to identify the work that isn’t needed.

For example, some MVP experiments will reveal that a “must do” requirement isn’t really needed; the architectural decisions related to that work are eliminated along with it.

The same is true for architectural experiments: they may reveal that a complex solution isn’t needed because a simpler one exists, or perhaps that an anticipated problem will never occur. Those experiments reduce the work needed to deliver the solution.

Experiments sometimes reveal unanticipated scope when they uncover a new customer need, or that an anticipated architectural solution needs more work. On the whole, however, we have found that reductions in scope identified by experiments outweigh the time and money increases.

At the start of the development work, of course, you won’t have any experiments to inform your decisions. You’re going to have to take it on faith that experimentation will identify enough unnecessary work to pay for those first experiments; after that, the supporting evidence will be clear.

Then you think you’re already running architectural experiments, but you’re not

You may be running POCs and believe that you are running architectural experiments. POCs can be useful, but they are not the same as architectural experiments or even MVPs. In our experience, POCs are, at their best, interesting demonstrations of an idea, but they lack the rigor needed to test a hypothesis. MVPs and architectural experiments are intensely focused on what they are testing and how.

Some people may feel that because they run integration, system, regression, or load tests, they are running architectural experiments. Testing is important, but it comes too late to avoid over-investing based on potentially incorrect decisions. Testing usually only occurs once the solution is built, whereas experimentation occurs early to inform decisions about whether the team should continue down a particular path. In addition, testing verifies the characteristics of a system, but it is not designed to explicitly test hypotheses, which is a fundamental aspect of experimentation.

Finally, you can’t get the feedback you need without exposing customers to the experiments

Some conditions under which you need to evaluate your decisions can’t be simulated; only real-world conditions will expose potentially flawed assumptions. In these cases, you will need to run experiments directly with customers.

This sounds scary, and it can be, but your alternative is to make a decision and hope for the best. In this case, you are still exposing the customer to a potentially severe risk, but without the careful controls of an experiment. In some sense, people do this all the time without knowing it when they assume that their decisions are correct without testing them, but the consequences can be catastrophic.

Experimentation allows us to be explicit about what hypothesis we are evaluating with our experiment and limits the impact of the experiment by focusing on specific evaluation criteria. Explicit experimentation helps us to devise ways to quickly abort the experiment if it starts to fail. For this, we may use techniques that support reliable, fast releases, with the ability to roll back, or techniques like A/B testing.
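One way to keep an experiment explicit is to write down its hypothesis, its evaluation criterion, and its abort condition before running it. The following is a minimal sketch of that idea, not a prescribed format: the class, its field names, and the latency hypothesis in the usage example are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Literal

Verdict = Literal["abort", "keep_running", "supported", "not_supported"]


@dataclass(frozen=True)
class ArchitecturalExperiment:
    hypothesis: str      # the claim being tested, stated up front
    metric: str          # the single measurement the hypothesis is about
    target: float        # mean at or below this value supports the hypothesis
    abort_above: float   # guardrail: stop at once if the mean exceeds this
    min_samples: int     # do not judge the hypothesis on fewer observations

    def evaluate(self, observations: list[float]) -> Verdict:
        if not observations:
            return "keep_running"
        mean = sum(observations) / len(observations)
        # The guardrail is checked first so a failing experiment stops early.
        if mean > self.abort_above:
            return "abort"
        if len(observations) < self.min_samples:
            return "keep_running"
        return "supported" if mean <= self.target else "not_supported"


# Hypothetical example: the hypothesis and thresholds are invented.
exp = ArchitecturalExperiment(
    hypothesis="The new queue keeps p95 latency under 200 ms at peak load",
    metric="p95_latency_ms",
    target=200.0,
    abort_above=1000.0,
    min_samples=3,
)
print(exp.evaluate([150.0, 180.0]))         # too few samples to judge
print(exp.evaluate([150.0, 180.0, 190.0]))  # enough samples, mean under target
print(exp.evaluate([1500.0]))               # guardrail breached
```

Checking the abort condition before the sample-size check is a deliberate choice in this sketch: a decision to stop should not have to wait until there is enough data to judge the hypothesis.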

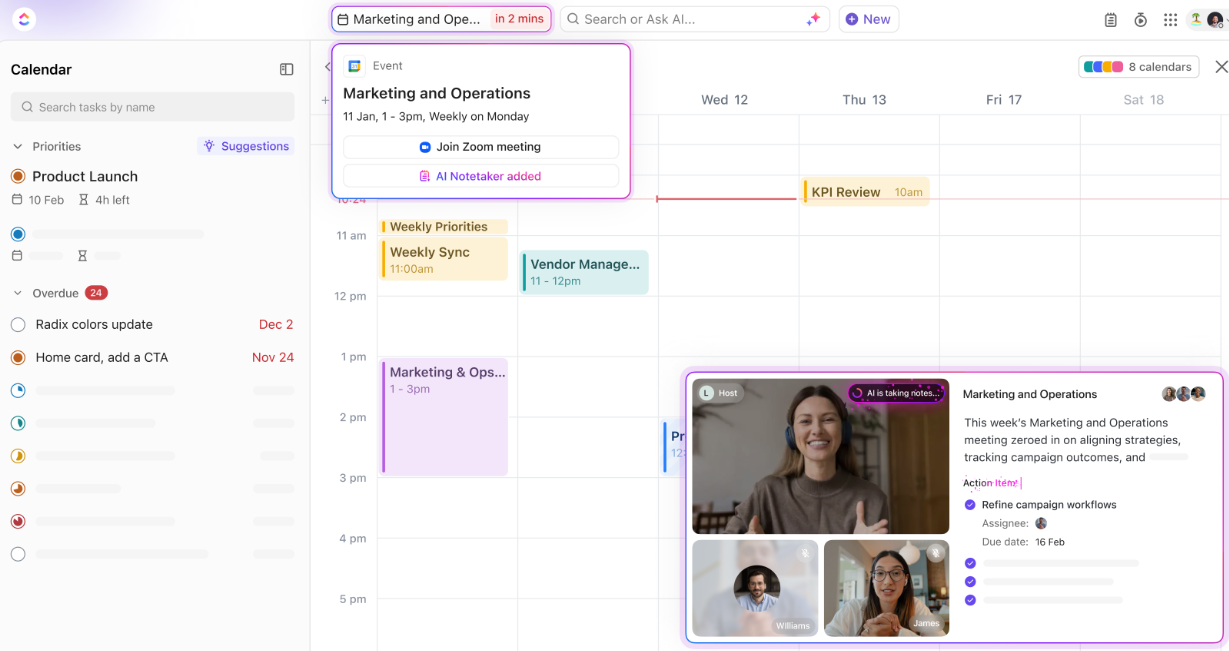

As an example, consider the case where you want to evaluate whether an LLM-based chatbot can reduce the cost of staffing a call center. As an experiment, you could deploy the chatbot to a subset of your customers to see if it can correctly answer their questions. If it does, call center volume should go down, but you should also evaluate customer satisfaction to make sure that they are not simply giving up in frustration and going to a competitor with better support. If the chatbot is not effective, it can be easily turned off while you evaluate your next decision.
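The chatbot experiment could be wired up roughly as follows. This is a sketch under stated assumptions: the function names, the salt string, the satisfaction scores, and the 5-point threshold are all hypothetical, and a real deployment would more likely rely on an existing feature-flag service than on hand-written routing.

```python
import hashlib


def in_experiment(customer_id: str, rollout_percent: int,
                  salt: str = "chatbot-exp-1") -> bool:
    """Deterministically place a customer in the experiment cohort."""
    digest = hashlib.sha256(f"{salt}:{customer_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < rollout_percent


def route(customer_id: str, rollout_percent: int, kill_switch: bool) -> str:
    """Send a support request to the chatbot or to the call center."""
    if kill_switch:  # turning the experiment off sends everyone back
        return "call_center"
    if in_experiment(customer_id, rollout_percent):
        return "chatbot"
    return "call_center"


def should_abort(chatbot_csat: float, control_csat: float,
                 max_drop: float = 0.05) -> bool:
    """Guardrail: abort if satisfaction in the cohort drops too far."""
    return control_csat - chatbot_csat > max_drop


# Hypothetical usage: 10% of customers, invented satisfaction scores.
kill_switch = should_abort(chatbot_csat=0.70, control_csat=0.85)
print(route("customer-42", rollout_percent=10, kill_switch=kill_switch))
```

Hashing the customer identifier keeps each customer in the same group for the whole experiment, so call volume and satisfaction can be compared between the cohort and everyone else. The kill switch is the "easily turned off" part: it needs no redeployment, only a configuration change.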

Conclusion

In a perfect world, we wouldn’t need to experiment; we would have perfect information and all of our decisions would be correct. Unfortunately, that isn’t reality.

Experiments are paid for by reducing the cost, in money and time, of undoing bad decisions. They are an insurance policy that costs a little up-front but reduces the cost of the unforeseeable. In software architecture, the unforeseeable is usually related to unexpected behavior in a system, caused either by unexpected customer behavior, including loads or volumes of transactions, or by interactions between different parts of the system.

Using architectural experimentation isn’t easy despite some very significant benefits. You need to sell yourself first on the idea, then sell it to your stakeholders, and neither of these is an easy sell. Running architectural experiments requires time and probably money, and both of these are usually in short supply when attempting to deliver an MVP. But in the end, experimentation leads to better outcomes overall: lower-cost systems that are more resilient and sustainable.