It’s official: AI-generated content is everywhere. From news blurbs to blog posts, it’s quickly weaving itself into the fabric of online media. To help users navigate this shift, platforms are experimenting with labels—some automated, others manually reviewed.

But what happens when audiences actually see the label? Our recent HackerNoon poll suggests the reaction isn’t monolithic: while nearly a third of readers (31%) say they trust content less when they spot the “AI-generated” tag, others take a far more nuanced view.

What Research Says About Labeling AI-generated Content

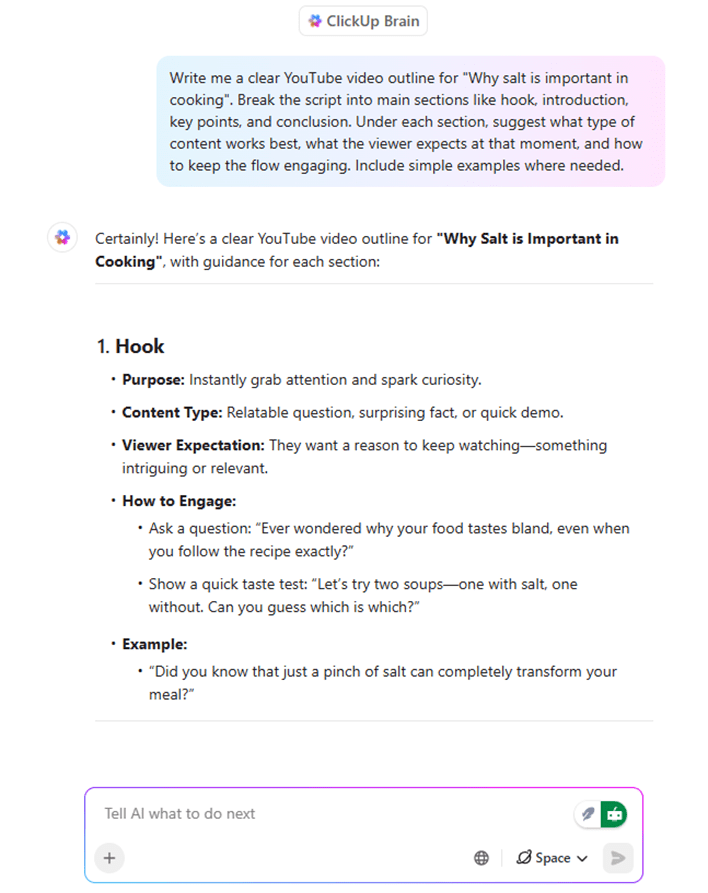

The debate is alive in academia and the newsroom alike. A large-scale experiment with nearly 4,000 participants found that explicitly labeling news articles as AI-generated dampened perceived accuracy and interest, but didn’t sway policy support or trigger alarm over misinformation (arxiv.org, trustingnews.org).

That lines up with the 20% of our poll respondents who said it’s “no big deal” — they notice the label, but it doesn’t really change how they engage. For them, AI authorship is more of a metadata detail than a trust breaker.

HackerNoon Readers Weigh In

Interestingly, labeling isn’t universally damaging. In our survey, 19% said they actually trust AI-labeled work more, reasoning that it signals honesty and gives them the context to make informed decisions. This echoes newsroom research showing that disclosures — especially ones explaining how AI was used and who reviewed the output — can boost credibility.

In other words, for some readers, the “AI-generated” tag is less a warning sign than a trust badge.

On the flip side, another 19% avoid AI-tagged work entirely, preferring content created exclusively by humans. That mirrors a growing subset of internet users who see AI assistance as a dilution of craft — and who argue that the label isn’t just informative, but a red flag.

Meanwhile, 13% of readers go the other way entirely, actively seeking out AI content because they believe it hits the mark faster and better. This “efficiency-first” camp values speed and precision over human nuance — a viewpoint increasingly common in time-sensitive industries like finance, tech, and customer support.

What This Means for Platforms and Publishers

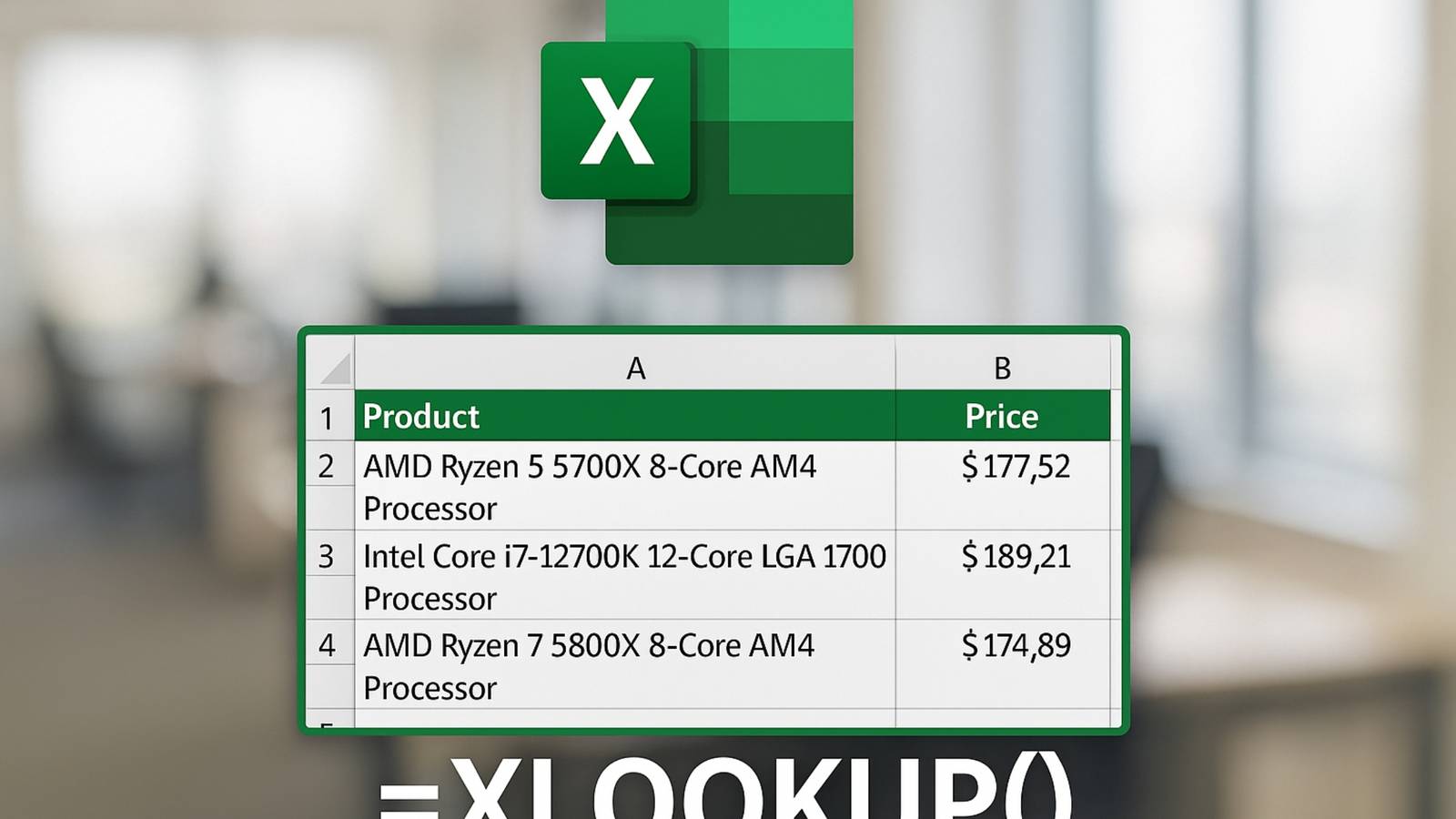

If there’s one throughline in the research — and our own data — it’s that labels matter, but context matters more. When paired with human oversight and thoughtful messaging, they can strengthen trust. When slapped on without explanation, they risk alienating both skeptics and enthusiasts.

| Strategy | Why It Works |
|----|----|
| Add context to labels (how/why AI was used, human editor review) | Readers value transparency and want details (trustingnews.org) |
| Use impactful UI (full-screen alerts when necessary) | Noticeable labels have a real effect on perceptions (dais.ca) |
| Educate audiences through media literacy | Labels alone won’t shift trust or skepticism (hai.stanford.edu) |
| Balance efficiency with integrity | Speed is useful, but authenticity earns loyalty |
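
For publishers wondering what "adding context" might look like in practice, here is a minimal sketch in Python. It is only an illustration: the `AIDisclosure` class, its field names, and the `render_label` function are hypothetical and do not come from any platform's actual labeling system. The idea is simply that a label can state how AI was used and whether a human reviewed the result, rather than showing a bare tag.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIDisclosure:
    """Hypothetical label metadata; the field names are illustrative only."""
    how_ai_was_used: str               # e.g. "drafted the summary"
    reviewed_by: Optional[str] = None  # human reviewer, if there was one


def render_label(disclosure: AIDisclosure) -> str:
    """Build a label that explains AI use instead of showing a bare tag."""
    parts = [f"AI-generated: {disclosure.how_ai_was_used}."]
    if disclosure.reviewed_by:
        parts.append(f"Reviewed by {disclosure.reviewed_by}.")
    else:
        # Say so explicitly when no human review took place.
        parts.append("Not reviewed by a human editor.")
    return " ".join(parts)


print(render_label(AIDisclosure("drafted the summary", "a staff editor")))
```

Stating the absence of review explicitly is a design choice in this sketch, on the assumption that readers who want transparency would rather see that detail than have it left out.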

Whether you’re in the 31% that trusts it less, the 19% that trusts it more, or somewhere in between, the “AI-generated” label is here to stay — and how it’s used will shape the next chapter of online trust.

:::tip

Join the conversation in HackerNoon’s weekly technology polls—where forward-thinking technologists share their vision for the industry’s future.

:::