Key Takeaways

- The next frontier in AI technologies is going to be Physical AI.

- Retrieval Augmented Generation (RAG) has become a commodity, with RAG-based solutions increasingly adopted in enterprise applications.

- A shift is occurring from AI being an assistant to AI being a co-creator of the software. We’re not just writing code faster, we’re entering a phase where the entire application can be developed, tested and shipped with the AI as part of the development team.

- AI-driven DevOps processes and practices are getting a lot of attention this year.

- In the area of human computer interaction (HCI) with emerging technologies, we should map all our research and engineering goals to true human needs and understand how these technologies fit into people’s lives and design for that.

- New protocols like Model Context Protocol (MCP) and Agent-to-Agent (A2A) will continue to offer interoperability between AI client applications, AI agents, and backend systems.

The InfoQ AI ML Trends Reports offer InfoQ readers a comprehensive overview of emerging trends and technologies in the areas of AI, ML, and Data Engineering. This report summarizes the InfoQ editorial team’s podcast with external guests to discuss the trends in AI and ML technologies and what to look out for in the next twelve months. In conjunction with the report and trends graph, our accompanying podcast features insightful discussions of these trends.

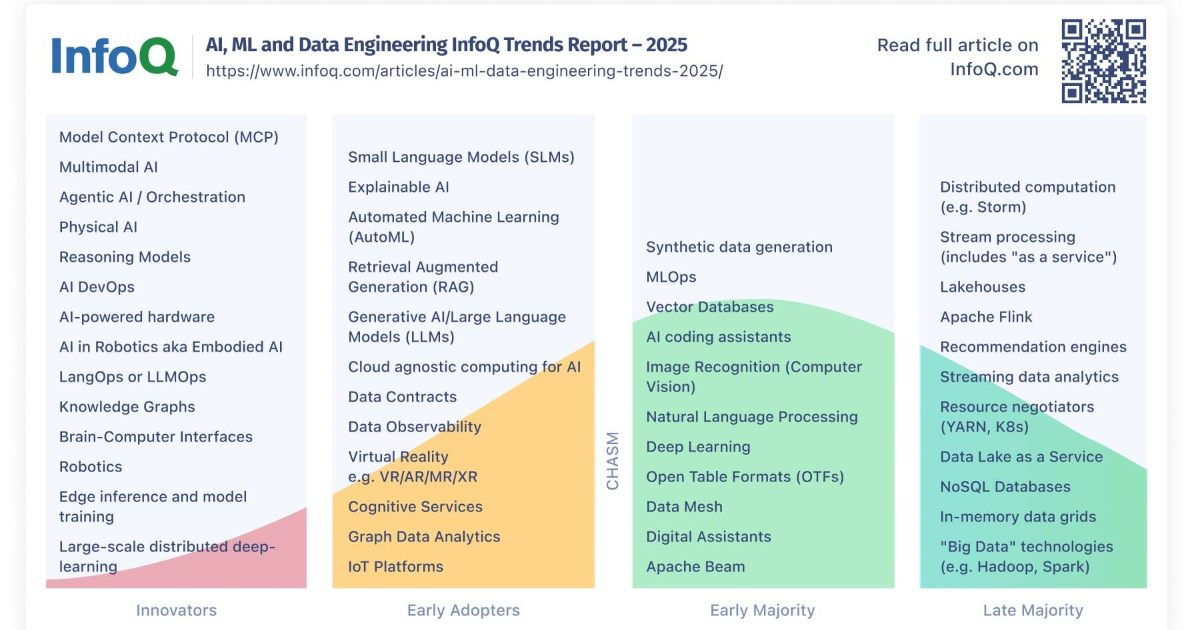

AI and ML Trends Graph

An important part of the annual trends report is the trends graph, which shows what trends and topics have made it to the innovators category and which ones have been promoted to early adopters and early majority categories. The categories are based on the book, Crossing the Chasm, by Geoffrey Moore. At InfoQ, we mostly focus on categories that have not yet crossed the chasm. Here is this year’s graph:

Since ChatGPT was released back in November 2022, Generative AI and Large Language Models (LLMs) have taken over the AI technology landscape. Major players in the technology space have continued to release newer and better versions of their language models. Following the same explosive growth of the last few years, AI technologies have seen significant innovations and developments since the InfoQ team discussed the trends report last year (see 2024 trends graph below).

This article highlights the trends graph that shows different phases of technology adoption and provides more details on individual technologies that were added or updated since last year’s trends report. We also discuss which technologies and trends have been promoted in the adoption graph.

Here are some highlights of what has changed since last year’s report:

Innovators

The first category on our adoption graph, Innovators, has a few new topics added this year. These topics are discussed in detail below.

AI Agents

One area that has seen major developments this year is AI agents. With companies like Anthropic recently announcing Claude Subagents and Amazon announcing Bedrock Agents, a lot of innovation is happening in this space. AI agents are evolving from performing individual tasks to executing complex workflows: they orchestrate chained tasks, make decisions, and adapt to context.

Other recent news in this category includes OpenAI’s announcement of a generalist ChatGPT Agent that works with spreadsheet- and presentation-based applications, Amazon open sourcing the Strands Agents SDK for building AI agents, and NVIDIA’s Visual AI Agents for developing video analytics solutions.

Daniel Dominguez and Anthony Alford discussed recent developments in AI agents in this year’s podcast:

Daniel Dominguez:

I think this is the big shift that we’re seeing in the agentic space: instead of having the chatbot that we used to just interact with, now we have AI that can help us book meetings, update databases, launch cloud resources, and do a lot of other things.

In that space, for example, Amazon Bedrock Agents are interesting because they let us build production-ready agents on top of any foundation model, without the need to manage the infrastructure. They can chain tasks, call AWS services, and interpret data securely. It’s basically bringing the agent paradigm into the AWS ecosystem, making it easy for companies to move from experimentation to real-world applications. AWS is not the only platform that allows the creation of production-ready agents. I know that Google also lets you create those agents, and Azure as well. There are a lot of platforms, such as n8n, that also let you create agents from scratch. I think it’s good to see these agents moving faster because of all these easy, ready-made platforms for deploying them.

Anthony Alford:

Yes, I really think that these agents are, like everything, a double-edged sword: extremely powerful and useful, but also kind of dangerous. The basic example is an LLM that can call a tool, and some of the tools it can call are things like file system operations. Well, it might try to run “rm -rf”, and this happens to people. But these things are very useful. I’ve started using the Amazon Q command line. By the way, I don’t think there is a CodeWhisperer anymore; it’s Q.

They’re just calling everything that. But you can chat with it and say, “Write a shell script to find all the image files in this folder and enlarge them,” and it’ll do it. It’s been very helpful to me because I can never remember shell script syntax, things like that, but we’ve been talking about AI safety for years now. It’s important already, but it’s extremely important when the AI can erase your hard drive, access your bank account, all this other stuff. Yes, it can make our lives easy, but it could also make our lives very unpleasant. And again, I don’t think anybody has an answer for it.
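The risk Alford describes, an LLM proposing a destructive shell command through a tool call, can be illustrated with a simple approval gate. The following is a minimal sketch and not a complete safety mechanism: the `ALLOWED_COMMANDS` set and the `review_tool_call` function are hypothetical names invented for this example, and a real agent runtime would need sandboxing and argument-level checks as well.

```python
import shlex

# Hypothetical allowlist: read-only commands the agent may run without review.
ALLOWED_COMMANDS = {"ls", "cat", "echo", "pwd"}

# Shell operators that could chain or redirect into an unchecked command.
SHELL_OPERATORS = (";", "&&", "||", "|", "`", "$(", ">")


def review_tool_call(command: str) -> str:
    """Return 'allow' or 'needs_approval' for a shell command proposed by a model."""
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes and similar parse errors are never auto-approved.
        return "needs_approval"
    if not tokens:
        return "needs_approval"
    if any(op in command for op in SHELL_OPERATORS):
        return "needs_approval"
    return "allow" if tokens[0] in ALLOWED_COMMANDS else "needs_approval"
```

With this gate, `review_tool_call("ls -la")` is allowed, while `review_tool_call("rm -rf /")` and `review_tool_call("ls; rm -rf /")` are both routed to a human for approval. The design choice is to default to review: anything the gate cannot parse or does not recognize is treated as unsafe.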

Multi-modal Language Models

Language models have become multi-modal, meaning they are now trained on multiple data types – whether it’s text, images, audio, or video – enabling more in-depth understanding and correlation of different types of data, offering richer insights and more relevant, accurate, and valuable outcomes.

Physical AI

One of the significant developments in the AI technology landscape this year is Physical AI, the embodiment of artificial intelligence in robots. On-device language models and robotics have seen major innovations. The InfoQ team believes this area will go through more significant changes in the coming years. Google released Gemma 3n, a generative AI model optimized for use in devices like phones, laptops, and tablets. Microsoft introduced Mu, a lightweight on-device Small Language Model for Windows Settings. Mu is offloaded onto the Neural Processing Unit (NPU) and responds at over one hundred tokens per second, meeting the demanding UX requirements of the agent-in-Settings scenario.

Also from Google is Gemini Robotics On-Device, the latest iteration of the Gemini Robotics family. It’s a vision-language-action (VLA) model optimized to run locally on robotic devices, bringing Gemini 2.0’s multimodal reasoning and real-world understanding into the physical world. Last, but definitely not least, NVIDIA’s robotics portfolio includes several Physical AI innovations. NVIDIA’s three-computer solution, which comprises NVIDIA DGX AI supercomputers for AI training, NVIDIA Omniverse and Cosmos on NVIDIA RTX PRO Servers for simulation, and NVIDIA Jetson AGX Thor for on-robot inference, enables complete development of physical AI systems, from training to deployment.

Our panelists talked about Physical AI in the podcast.

Savannah Kunovsky:

I think the conversation about edge is especially relevant when we’re talking about coming back to users and what people are going to be excited to have in their homes and in their more everyday spaces. What edge lets us do is design for trust and design experiences of how people interact with technology and how data is processed and where it’s stored, in a way that people will hopefully feel more comfortable with than if their data feels like it’s being processed and sent off to faraway places that they don’t know or understand. That matters especially when we start talking about Physical AI and capturing data inside people’s homes, which can feel really precious and intimate.

It’s important that we create products and services that respect the needs and desires of users, that we know that data is really sensitive, and that we are careful with it. I think that with the advances in manufacturing and the race that we’re seeing in robotics, there are going to be more devices available that will have advanced technology in them that can support us in our everyday lives. And that’s really exciting. And the only way to [achieve] true adoption of those technologies is to create them, first, in a way that’s honestly and practically extremely useful if people are going to be paying for these things, and second, in a way that people are excited to invite them into their homes, which we all want to keep relatively private.

Anthony Alford:

The reasoning language models seem to be sort of a path towards using them in robotics. I’ve written several news items for InfoQ where people are taking language models and just asking them to make a plan for a robot to go pick up this thing, bring it there.

There’s a person named Dr. Jim Fan, who’s a director of robotics at NVIDIA. I saw a tweet from him recently. He says he believes that the GPT-1 of robotics is already somewhere in a paper. We just don’t know which one. He makes the claim that we will not have AGI, Artificial General Intelligence, without it being embodied, basically, without it being [in] a robot. This is actually an idea that’s been around for a long time. I remember people making that claim during my long-ago career in robotics in the 20th century. Now, we still don’t have it, but maybe someday.

Model Context Protocol (MCP)

Introduced by Anthropic in November 2024, MCP is an open standard designed to help frontier LLMs produce better and more relevant responses. It provides a standardized protocol for connecting LLMs to external tools, systems, and data sources, reducing the need for fragmented, custom integrations. Major players like OpenAI, Microsoft, and Google have announced plans to integrate MCP support into their products.
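To make the idea of a standardized protocol concrete: MCP messages are JSON-RPC 2.0 messages, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a request. The tool name `search_docs` and its arguments are hypothetical, and the transport (for example stdio or HTTP), initialization handshake, and response handling are deliberately left out.

```python
import json
from itertools import count

# Each JSON-RPC request carries an id so the response can be matched to it.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "search_docs" is a hypothetical tool exposed by some MCP server.
message = make_tool_call("search_docs", {"query": "vacation policy"})
```

Because every server accepts the same message shape, a client written once can talk to any compliant server, which is the interoperability benefit described above.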

The InfoQ team discussed the important role MCP is going to play in AI applications.

Anthony Alford:

MCP has definitely taken off and been adopted. All of those coding tools that we talk about support MCP. MCP is probably the key technology for making agents happen. It’s not a panacea. I think we’re already seeing headlines of security problems with MCP servers, but they’re certainly useful now. I think their utility might be somewhat limited by the context windows of the models because everything that happens with MCP – the input, the output – goes into that model context.

And so, you can only do so much. I think we’re definitely seeing things like the Playwright MCP server for running tests. That one seems to be a big winner. There’s one for Figma. You can tell your coding agent to just go look at the mock-up in Figma and go create that. I think that it’s definitely got promise and potential, and it does have utility. But it remains to be seen what the limitations are that we’re going to run into.
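Alford’s point about context windows can be illustrated with a rough budgeting sketch. It assumes, purely for illustration, that one token is about four characters; real tokenizers differ, and the function name `fit_to_budget` is hypothetical rather than part of any MCP client.

```python
def fit_to_budget(tool_outputs: list[str], budget_tokens: int,
                  chars_per_token: int = 4) -> list[str]:
    """Keep the most recent tool outputs that fit in a rough token budget."""
    kept = []
    remaining_chars = budget_tokens * chars_per_token  # crude token estimate
    # Walk from newest to oldest so recent results survive truncation.
    for output in reversed(tool_outputs):
        if len(output) > remaining_chars:
            break
        kept.append(output)
        remaining_chars -= len(output)
    # Restore chronological order for the prompt.
    return list(reversed(kept))
```

For example, with three 40-character outputs and a budget of 20 tokens (about 80 characters), only the two most recent outputs are kept. Dropping the oldest results first is one possible policy; summarizing them instead is another.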

Daniel Dominguez:

I think for me the exciting part of MCP is the interoperability. For example, with MCP, you could have, I don’t know, the Anthropic Claude model using Google Search, or you could have OpenAI using your company’s data. So everything will be working under the same protocol now. That’s what makes it scalable and makes the idea of multi-agent systems possible. Different agents from different companies [with] different sources working together, doing their best. I think that’s what the exciting part is going to be.

Human Computer Interaction (HCI)

Aided by Agentic AI and Physical AI technologies, the human computer interaction (HCI) area is also seeing major transformation and innovation. AI interfaces are significantly shifting how we interact with software.

Savannah Kunovsky shared her views on the innovations happening in HCI, based on her experience at IDEO and the projects her teams have been working on:

Savannah Kunovsky:

In order to use a large language model, you have to know how to prompt it and talk to a computer rather than talking to it [like an actual] person, which is what we’re used to. And I think that detracts from adoption of these technologies. One last comment is that there was a lot of talk in the design community when Apple released its new Liquid Glass design system, and there was a lot of pushback against it because it was kind of like, “What is this?” And it’s just sort of evolutionary rather than revolutionary. But I think just in the name, Liquid Glass, what they’re trying to show us is that we can think of our technology as something that has an interface that is a lot more fluid. There’s a group in the MIT Media Lab that’s literally named Fluid Interfaces.

What if we actually just had more interfaces in our homes and on our computers and in our lives that let us move information around a screen to where we actually needed it to be, [such as accessing] information about cooking on a cooking surface rather than balancing your laptop on top of your microwave? [It] might just be me, but I think that what technology allows us to do is embed information in the places that we need it to be.

[For example, as] you’re walking down the street, it doesn’t make sense that you have to take your phone out and stop and pull over and respond to a message. Why can’t you just do that as you continue going about your day? So, in general, when we talk about human computer interaction with emerging technologies, what we should be driving towards is taking all of our research and engineering goals and mapping those to true human needs and understanding how these technologies fit into the context of people’s lives and designing for that.

She also talked about AI technologies from a design perspective:

There’s a ton going on at IDEO. We have, I don’t know, thirty or forty design crafts. So we have your typical visual graphic designers and interaction designers, people who are designing interfaces or designing interactions between humans and hardware or whatever it might be. But we also have business designers and software designers who have software engineering backgrounds and things like that. The ways that these tools are being used across all of these different crafts is incredibly different. But some of the things that are consistent are that they are allowing folks who are kind of in one craft to be able to express their ideas in ways that they couldn’t before.

For example, we have a business designer named Tomochini who’s done a couple of interesting experiments where he was doing research with kids to make children’s toys that have more sustainable materials. Rather than just coming in and telling them the story or trying to describe the idea or showing them a picture of what these new materials might look like and what the new toys might look like, he was able to create short trailer-style videos that illustrate his ideas. I think that’s a lot more powerful, that we’re able to just express our intent more quickly and express our ideas more quickly. He also turned business model creation into a game. So he is now able to vibe code these apps, [such as creating] this game where he is able to pit different business models against each other.

Other trends we have seen this year that we are adding to the Innovators category include Reasoning Models and AI DevOps.

Early Adopters

In the Early Adopters category, we would like to highlight two main topics: Innovations in Language Models and RAG.

Language Model Innovations

LLMs are the foundation of Generative AI technologies and have seen interesting developments in the last year, including Vision LLMs, Small Language Models (SLMs), Reasoning Models, and State Space Models. Some of the highlights of language model innovations include:

Small Language Models (SLMs) continue to gain traction for on-device inference, privacy-preserving applications, and cost-sensitive deployments.

Other developments in language models include state space models (SSMs) and diffusion models.

In the podcast, Anthony Alford and Savannah Kunovsky talked about language models.

Anthony Alford:

I think probably the biggest news lately is that OpenAI released GPT-5, and I think they may have surprised a few people. They released it and you’re just starting to use it and you don’t have a choice. I think that took some people aback. They also did something interesting. They’ve had different versions of each model: a pro or full model and a smaller, faster model, and you [could] pick which one you wanted based on your task. With GPT-5, it just picks whichever one it wants. Now, I actually kind of like that. I feel like OpenAI was struggling a little with naming their models. They had a model called 4o and they had another one called o4. It has logic to it, but it’s not always obvious. So I think that was an interesting development as well. What are your thoughts?

Savannah Kunovsky:

I think that the reason why they’re doing that is to simplify the interface. So much of what happens when we’re trying to release these new frontier technologies to people is that they get created kind of in the back rooms and R&D departments by engineers and research scientists, which is incredible work. We, as engineers, sometimes think that the general public is going to understand our vision and understand the way that we’re interacting with something and some of those kinds of research-led interfaces.

I think that what the OpenAI team came to understand is that they actually need to simplify the interface to have a consumer product that is really going to resonate and be more useful to people. I think in that way, as we move forward, as the capabilities of large language models and other advanced technologies become the norm and we can say it’s a given that they can do interesting things, then the interface layer and how we help people interact with those technologies is going to become the most important differentiator for businesses and one of the most important levers for adoption.

Beyond these innovations, we have seen continued adoption of RAG-based solutions in enterprise applications.

Retrieval Augmented Generation (RAG)

RAG-based application development has seen significant growth in the last year. It’s becoming more of a commodity, moving up in the adoption curve.
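For readers new to the pattern, the retrieve-then-generate flow behind RAG can be sketched in a few lines. This is a toy illustration under stated assumptions: it ranks documents with bag-of-words cosine similarity, whereas production systems typically use learned embeddings and a vector database, and all function names and sample documents here are hypothetical. The final call to a language model is omitted; the sketch stops at the assembled prompt.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(documents,
                    key=lambda doc: cosine(query, Counter(tokenize(doc))),
                    reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge-base articles.
docs = [
    "Refunds are processed within five business days.",
    "The office is closed on public holidays.",
    "Employees accrue vacation days monthly.",
]
prompt = build_prompt("How long do refunds take?", docs, k=1)
```

Here the refund article is the only document sharing a term with the question, so it is the one placed in the prompt. Swapping the similarity function for an embedding model changes retrieval quality but not the overall shape of the pipeline.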

From the podcast:

Anthony Alford:

Like you pointed out, these things are really becoming commonplace. Maybe not commonplace, but in the enterprise software space, they’re certainly gaining ground. I think any business that has a big document database, a bunch of knowledge articles, and things like that is going to be looking at this.

Savannah Kunovsky:

One interesting shift that I’ve seen in our design process is that a lot of the time, the way that we try to understand what’s happening inside of a business is by going and just talking to a bunch of people inside of that business and doing online research about how they’re doing and what they’ve been creating and kind of where their focus is, and CEO messages and all of those types of things. But because of the existence of RAG, we are now able to build systems that actually allow us to gain a ton of context before we start designing.

If we’re able to access more documents that can give us that information, then it gives our designers and our design teams the ability to work from a good set of information rather than starting from zero. So it’s interesting to see something that started as, I think, a really technically focused innovation start to become applicable to non-technical folks, and hopefully it will become easier and easier for non-technical folks to create for that reason too. And I think that there are all sorts of business opportunities and just data availability that these systems provide.

Another entry we are moving into early adopters is Automated Machine Learning. These technologies have gone through extensive development and adoption and are now being used by many applications in various organizations.

Early Majority

We are moving the following topics to the Early Majority category, as these technologies have become more mature and are widely adopted by software development teams in various organizations.

- Vector DBs

- MLOps

- Synthetic Data

Late Majority

The last category in our adoption graph, the Late Majority, has also seen some new additions, as these technologies are now fully adopted by teams and are part of their core architecture patterns.

- Lakehouses

- Stream processing

- Distributed computation (Storm)

Conclusion

As AI technologies transform from tactical task assistants into trusted collaborators that solve complex, real-world problems, the InfoQ team expects that they are here to stay and will continue to develop, including in domains and applications that we have not yet thought about. Some of the team’s predictions for the next year include:

- We expect AI agents and AI-enabled coding and software development tools to keep advancing.

- The things that are truly useful to people will set the precedent for the next foundations of the internet.

- Another prediction is on video RAG. We’ll have long-form videos, and it’ll become a challenge to differentiate between a human-generated video and an AI-generated video.

- We’ll start talking about an AI bubble. The concern is not that the technology won’t work, because the technology is here to stay, but rather the state of the industry itself.

- AI will continue to become a more and more subtle part of our lives. Future interactions will be more context-based and will happen behind the scenes.