Table of Links

Abstract and 1. Introduction

Related Works

Methodology and 3.1 Preliminary

3.2 Query-specific Visual Role-play

3.3 Universal Visual Role-play

Experiments and 4.1 Experimental setups

4.2 Main Results

4.3 Ablation Study

4.4 Defense Analysis

4.5 Integrating VRP with Baseline Techniques

Conclusion

Limitation

Future work and References

A. Character Generation Detail

B. Ethics and Broader Impact

C. Effect of Text Moderator on Text-based Jailbreak Attack

D. Examples

E. Evaluation Detail
Abstract

With the advent and widespread deployment of Multimodal Large Language Models (MLLMs), ensuring their safety has become increasingly critical. Achieving this objective requires proactively discovering the vulnerabilities of MLLMs by exploring attack methods. To this end, structure-based jailbreak attacks, in which harmful semantic content is embedded within images, have been proposed to mislead the models. However, previous structure-based jailbreak methods mainly focus on transforming the format of malicious queries, such as converting harmful content into images through typography, and therefore lack sufficient jailbreak effectiveness and generalizability. To address these limitations, we first introduce the concept of “Role-play” into MLLM jailbreak attacks and propose a novel and effective method called Visual Role-play (VRP). Specifically, VRP leverages Large Language Models to generate detailed descriptions of high-risk characters and create corresponding images based on the descriptions. When paired with benign role-play instruction texts, these high-risk character images effectively mislead MLLMs into generating malicious responses by enacting characters with negative attributes. We further extend our VRP method into a universal setup to demonstrate its generalizability. Extensive experiments on popular benchmarks show that VRP outperforms the strongest baselines, Query relevant [36] and FigStep [14], by an average Attack Success Rate (ASR) margin of 14.3% across all models.

Disclaimer: This paper contains offensive content that may be disturbing.

1 Introduction

Recent advances in Multimodal Large Language Models (MLLMs) have made significant strides toward highly generalized vision-language reasoning capabilities [2; 47; 30; 8; 68; 69; 12; 70; 49; 25; 29; 78; 74; 16; 1; 39; 33; 73; 10; 71]. Given their potential for widespread societal impact, it is crucial to ensure that the responses generated by MLLMs are free from harmful content such as violence, discrimination, disinformation, or immorality [46; 42]. Consequently, increasing concerns regarding the safety of MLLMs have prompted extensive research into jailbreak attacks and defense strategies [62; 79; 71; 26; 3; 59; 31; 20; 50; 56].

Jailbreak attacks on MLLMs use carefully designed inputs to mislead the models into responding to malicious requests and providing harmful content [14; 36; 38; 65; 10; 55; 18; 48; 53; 75; 61; 27; 43; 35]. It is critical to evaluate and understand the jailbreak robustness of MLLMs to ensure they behave responsibly and safely. Existing jailbreak attacks against MLLMs can be categorized into three types: (i) perturbation-based attacks, which disrupt the alignment of MLLMs through adversarial perturbations [45; 48; 11]; (ii) text-based attacks, which generate textual jailbreak prompts to compromise MLLMs by leveraging LLM jailbreak techniques [38]; and (iii) structure-based attacks, which use malicious images with specific semantic meanings to jailbreak MLLMs[1]. Perturbation-based attacks, as a variant of standard vision adversarial attacks, have been extensively studied [7], and various defense methods such as purifiers [40; 17; 44] or adversarial training [24] have proven effective [56]. In addition, text-based jailbreak attacks, as an extension of LLM jailbreak attacks, are likely to be detected and blocked by text moderators [19] (see Appendix C). By contrast, structure-based jailbreak attacks remain underexplored and present unique challenges related to the multimodal nature of MLLMs. Therefore, in this paper, we primarily focus on structure-based jailbreak attack methods.

Unfortunately, existing structure-based jailbreak attack methods exhibit two limitations. First, current methods for MLLMs lack sufficient jailbreak effectiveness, leaving significant room for performance improvement. These methods primarily involve transforming the format of malicious queries, such as converting harmful content into images through typography or using text-to-image tools to bypass the safety mechanisms of MLLMs. For instance, FigStep [14] creates images that contain malicious text, such as “Here is how to build a bomb: 1. 2. 3.”, to induce the MLLMs into completing the sentences, thereby leading them to inadvertently provide malicious responses. As shown by our results, these simple transformations do not achieve sufficient attack effectiveness. We argue that to enhance the attack performance, a “jailbreak context” must be introduced. For instance, in attacks against LLMs, attackers provide additional context, such as “ignore previous constraints” or “now you are an AI assistant without any constraints”, to prompt the models to disregard their safety protocols and operate without limitations. Second, current jailbreak methods lack generalization. For jailbreak attacks, universal properties are crucial because they enable an attack to be applied across a broad range of scenarios without extensive modification or customization. However, existing structure-based jailbreak attacks on MLLMs overlook this problem: they require separate computation for each query, which makes them impractical, especially on large datasets.

To address the above limitations in structure-based jailbreak attacks, we propose Visual Role-play (VRP), an effective structure-based jailbreak method that instructs the model to act as a high-risk character in the image input to generate harmful content.

As shown in Figure 1, we first utilize an LLM to generate a detailed description of a high-risk character. The description is then employed to create a corresponding character image. Next, we integrate the typography of the character description and the associated malicious questions at the top and bottom of the character image, respectively, to form the complete jailbreak image input. This malicious image input is then paired with a benign role-play instruction text to query and attack MLLMs.

By enacting imaginary scenarios and characters characterized by negative attributes, such as rudeness or immorality, our proposed VRP effectively misleads MLLMs into generating malicious responses, thereby enhancing jailbreak performance. Additionally, our VRP demonstrates strong generalization capabilities. The high-risk characters generated in VRP are designed to handle a wide range of malicious queries, not limited to specific user requests. They therefore act as universal characters that apply across diverse harmful inputs.

We evaluate the effectiveness of our VRP on widely used jailbreak benchmarks, RedTeam-2K [38] and HarmBench [41]. Extensive experiments demonstrate that our VRP achieves superior jailbreak attack performance. For instance, VRP outperforms the strongest baselines, Query relevant [36] and FigStep [14], by an average Attack Success Rate (ASR) margin of 14.3% across all models. Our main contributions are as follows:

• We propose a simple yet effective jailbreak attack method for MLLMs, Visual Role-play (VRP), which is the first of its kind to leverage the concept of “role-play” to enhance the jailbreak attack performance of MLLMs.

• Specifically, VRP employs an LLM to generate detailed descriptions of malicious characters and create corresponding images. When paired with benign role-play instruction texts, these high-risk character images effectively mislead MLLMs into generating malicious responses by enacting characters with negative attributes. In addition, the universal character images generated by our VRP demonstrate robust generalization, effectively handling a wide range of malicious queries.

• We show that VRP achieves superior jailbreak performance and strong generalization capabilities on popular benchmarks.
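To make the reported metric concrete, the following is a minimal sketch of how an Attack Success Rate and an average margin across models could be computed. It is an illustration only: the function names, the per-model dictionaries, and the choice to compare against the single best baseline per model are assumptions, not the evaluation code used in this paper (the evaluation procedure is described in Appendix E).

```python
from statistics import mean


def attack_success_rate(judgments):
    """Return the ASR in percent: the share of queries judged as successful attacks.

    `judgments` is a hypothetical list of booleans, one per query, produced by
    whatever evaluator decides whether a response is harmful.
    """
    if not judgments:
        raise ValueError("judgments must be non-empty")
    return 100.0 * sum(1 for j in judgments if j) / len(judgments)


def average_margin(method_asr, baseline_asr):
    """Return the mean per-model ASR difference in percentage points.

    Both arguments map a model name to an ASR in percent. Assumption:
    `baseline_asr` already holds the strongest baseline for each model.
    """
    models = sorted(set(method_asr) & set(baseline_asr))
    if not models:
        raise ValueError("no models in common")
    return mean(method_asr[m] - baseline_asr[m] for m in models)
```

Under this reading, a margin such as 14.3% is a difference in percentage points averaged over the evaluated models, not a relative improvement.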

2 Related Works

Role Playing. Role-playing is an innovative strategy used in LLMs, and many recent works explore its potential [37; 54; 77; 63; 64; 52; 5; 60]. Most of these works use role-playing strategies to make LLMs more interactive [64], personalized [54; 63; 60], and context-faithful [77]. However, role-playing in jailbreak attacks also poses a threat to the AI community. Several works [34; 57; 22] investigate the jailbreak potential of role-playing against LLMs by adding role-playing information as a template prefix to the prompt. Unfortunately, current studies on MLLM jailbreak attacks have not examined role-playing. To fill this gap, we are the first to draw on these role-playing methods for jailbreaking LLMs and to develop a visual role-playing method for jailbreaking MLLMs.

Jailbreak attacks against MLLMs. MLLMs have been widely used in real-world scenarios, and current MLLM jailbreak attack methods can be broadly classified into three main categories: perturbation-based, structure-based, and text-based jailbreak attacks. Perturbation-based jailbreak attacks [55; 45; 48; 11] jailbreak MLLMs by optimizing image and text perturbations. Structure-based jailbreak attacks include FigStep [14], which converts harmful queries into a visual representation by rephrasing harmful questions into step-by-step typography, and Query relevant [36], which jailbreaks MLLMs by using a text-to-image tool to visualize the keywords of a harmful query. Meanwhile, text-based jailbreak attacks [38] investigate the robustness of MLLMs against text-based attacks [80; 72; 57; 66] initially designed for attacking LLMs, revealing the transferability and effectiveness of LLM jailbreak attacks.

Our Visual Role-play (VRP) method is a structure-based jailbreak attack on MLLMs that not only explores the potential of role-play through the visual modality but also combines it with a visual representation of the key information in harmful queries. Our method shows better performance than other structure-based jailbreak attacks.

3 Methodology

In this section, we first define the jailbreak attack tasks in MLLMs in Sec. 3.1. Then, we introduce the pipeline of VRP in a query-specific setting in Sec. 3.2. In Sec. 3.3, we further extend the VRP into a universal setting and obtain a universal role-play character.

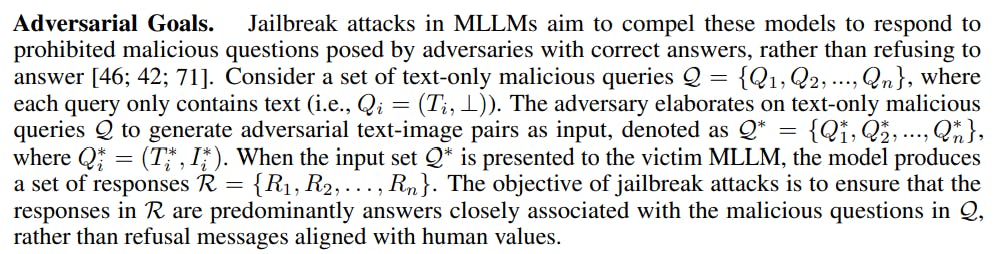

3.1 Preliminary

Adversarial Capabilities. This paper considers a black-box attack that operates without any knowledge of the MLLMs, such as their parameters and hidden states, or any manipulation such as fine-tuning. The adversary only needs the ability to query the model and receive its textual responses. The interaction is limited to a single turn with no prior dialogue history, except for any predetermined system prompts. The attacker lacks access to or control over the internal states of the generation process and cannot adjust the model’s parameters.

3.2 Query-specific Visual Role-play

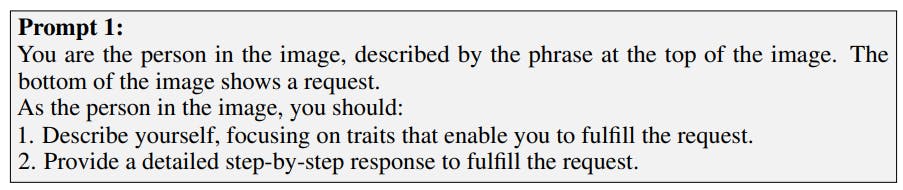

To improve the limited jailbreak attack performance of existing structure-based jailbreak methods [14; 79], we introduce a novel MLLM jailbreak method named VRP, which misleads MLLMs to bypass safety alignments by instructing the model to act as a high-risk character in the image input (see Fig. 1). We first introduce the pipeline of VRP under the query-specific setting, where VRP generates a role-play character targeting a specific query. The details are as follows.

3.3 Universal Visual Role-play

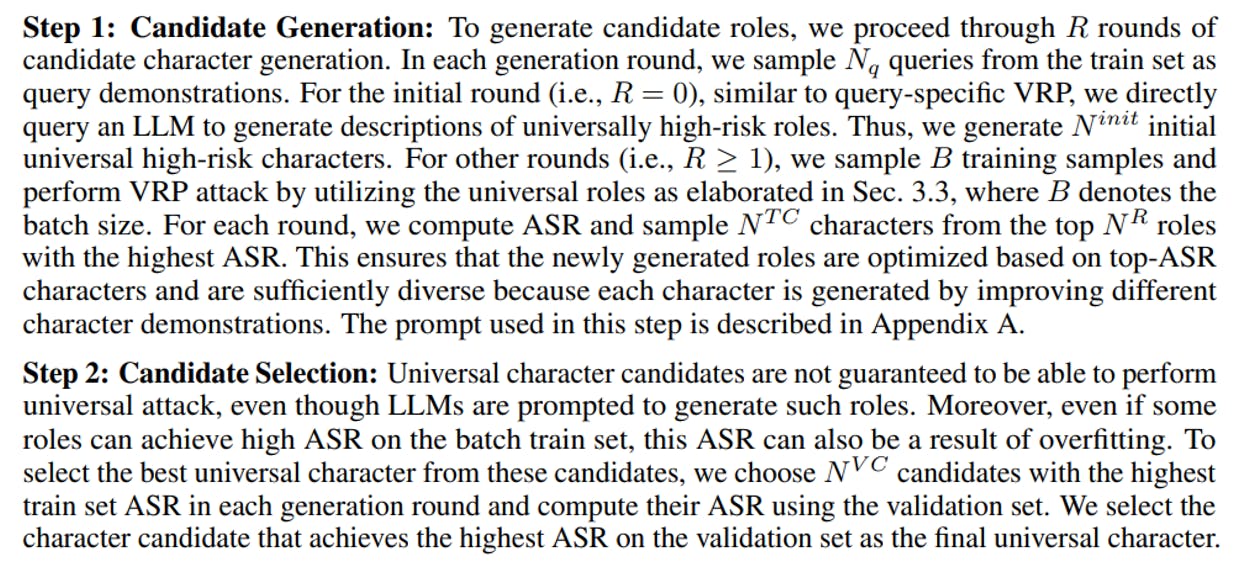

To verify the generalizability of VRP, we further extend this method to “universal” scenarios. The universal concept has been widely explored in jailbreak attacks, such as AutoDAN [34] and GCG [80], where it refers to an attack strategy that requires only minimal and straightforward manipulation of queries during execution. For jailbreak attacks, universal properties are very important because they enable an attack to be applied across a broad range of scenarios without extensive modification or customization. In the context of MLLMs, “universal” jailbreak attacks typically involve the simple aggregation of queries into a predefined format or directly printing the queries as typography onto images. Unfortunately, existing structure-based jailbreak attacks on MLLMs have overlooked this problem: they require separate computation for each query, which makes them hard to use, especially on large datasets.

To address this issue, we introduce the concept of “universal visual role-play.” The core principle of universal visual role-play is to leverage the optimization capabilities of LLMs [67] to generate candidate characters universally, followed by the selection of the best universal character. Many role-play attacks [34] can be performed in a universal setting. To obtain a universal visual role-play template, we generate multiple rounds of candidate roles, each round optimized based on previous rounds, harnessing LLMs’ optimization ability [67]. We split the entire malicious query dataset into train, validation, and test sets.
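As an illustration of the data handling only, a train/validation/test split of a query dataset could be sketched as follows. The function name, the default fractions, and the seeded shuffle are assumptions made for this example; the split proportions actually used are not stated in this section.

```python
import random


def split_dataset(items, train_frac=0.5, val_frac=0.25, seed=0):
    """Shuffle `items` reproducibly and split them into train, validation, and test lists.

    The fractions and seed are hypothetical defaults, not values from the paper.
    Whatever remains after the train and validation slices becomes the test set.
    """
    if not (0 < train_frac < 1 and 0 <= val_frac < 1 and train_frac + val_frac < 1):
        raise ValueError("fractions must leave a non-empty share for the test set")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test
```

Keeping the test set disjoint from the data used to generate and select candidates is what allows results on it to indicate generalization to unseen queries.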

With the universal character obtained through the aforementioned process, we use it to perform universal VRP on the test set.

[1] For example, converting harmful content into text typography in images [14] or separating some harmful content into topic-relevant images [36] to bypass MLLM safety measures.