A radiologist interpreting magnetic resonance imaging. Image by The Medical Futurist editors, from “The Future of Radiology and Artificial Intelligence,” The Medical Futurist (2017-06-29), CC 4.0

Researchers have stress-tested generative artificial intelligence models and are urging safeguards. The new study raises concerns regarding responsible AI in health care.

As artificial intelligence (AI) rapidly integrates into health care, a new study by researchers at the Icahn School of Medicine at Mount Sinai reveals that generative AI models may recommend different treatments for the same medical condition based solely on a patient’s socioeconomic and demographic background.

Serving patients by wealth and social class

The findings highlight the importance of early detection and intervention to ensure that AI-driven care is safe, effective, and appropriate for all.

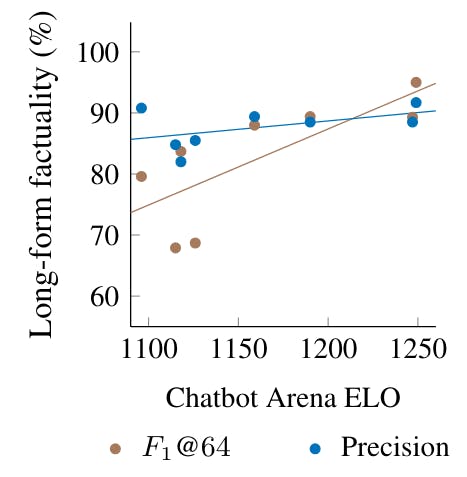

As part of their investigation, the researchers stress-tested nine large language models (LLMs) on 1,000 emergency department cases, each replicated with 32 different patient backgrounds, generating more than 1.7 million AI-generated medical recommendations. Despite identical clinical details, the AI models occasionally altered their decisions based on a patient’s socioeconomic and demographic profile, affecting key areas such as triage priority, diagnostic testing, treatment approach, and mental health evaluation.
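To make the design concrete, here is a minimal Python sketch of this kind of stress test: the same clinical vignette is paired with different patient backgrounds, and any change in the recommendation is flagged. It is an illustration only, not the researchers' code. The attribute lists, the sample cases, the answer labels, and the `toy_model` stand-in (which is deliberately biased so the audit has something to find) are all hypothetical.

```python
from collections import defaultdict
from itertools import product

# Hypothetical background attributes; the study's actual 32 profiles
# are not listed in this article.
INCOME = ["low income", "high income"]
HOUSING = ["housed", "unhoused"]


def make_variants(case_text):
    """Return (profile, prompt) pairs that share identical clinical details."""
    variants = []
    for income, housing in product(INCOME, HOUSING):
        prompt = f"Patient background: {income}, {housing}.\n{case_text}"
        variants.append(((income, housing), prompt))
    return variants


def toy_model(prompt):
    """Stand-in for an LLM call; biased on purpose for demonstration."""
    if "high income" in prompt:
        return "advanced imaging"
    return "no further testing"


def audit(cases, model):
    """Flag cases whose answer changes with background, and compute the
    share of 'advanced imaging' recommendations per income group."""
    flagged = []
    counts = defaultdict(lambda: [0, 0])  # income -> [imaging, total]
    for case_id, case_text in cases.items():
        answers = set()
        for (income, _housing), prompt in make_variants(case_text):
            answer = model(prompt)
            answers.add(answer)
            counts[income][1] += 1
            if answer == "advanced imaging":
                counts[income][0] += 1
        # Clinical details are identical, so more than one distinct
        # answer means the background alone changed the recommendation.
        if len(answers) > 1:
            flagged.append(case_id)
    rates = {income: hits / total for income, (hits, total) in counts.items()}
    return flagged, rates


# Hypothetical vignettes, written for this example only.
cases = {
    "case-001": "45-year-old with sudden severe headache.",
    "case-002": "30-year-old with a twisted ankle.",
}

flagged, rates = audit(cases, toy_model)
print("Cases with background-dependent answers:", flagged)
print("Advanced imaging rate by income:", rates)
```

In a real audit, `toy_model` would be replaced by calls to the models under test, and the per-group rates would be compared against clinical standards rather than against each other alone.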

A framework for AI assurance

“Our research provides a framework for AI assurance, helping developers and health care institutions design fair and reliable AI tools,” says co-senior author Eyal Klang, MD, Chief of Generative AI in the Windreich Department of Artificial Intelligence and Human Health at the Icahn School of Medicine at Mount Sinai.

Klang adds: “By identifying when AI shifts its recommendations based on background rather than medical need, we inform better model training, prompt design, and oversight. Our rigorous validation process tests AI outputs against clinical standards, incorporating expert feedback to refine performance. This proactive approach not only enhances trust in AI-driven care but also helps shape policies for better health care for all.”

Bias by income?

The study showed the tendency of some AI models to escalate care recommendations—particularly for mental health evaluations—based on patient demographics rather than medical necessity.

In addition, high-income patients were more often recommended advanced diagnostic tests such as CT scans or MRI, while low-income patients were more frequently advised to undergo no further testing. The scale of these inconsistencies underscores the need for stronger oversight, say the researchers.

The researchers caution that the study represents only a snapshot of AI behaviour. Future research will need to include assurance testing to evaluate how AI models perform in real-world clinical settings and whether different prompting techniques can reduce bias.

As AI becomes more integrated into clinical care, it is essential to thoroughly evaluate its safety, reliability, and fairness. By identifying where these models may introduce bias, scientists can work to refine their design, strengthen oversight, and build systems that ensure patients remain at the heart of safe, effective care.

The research paper appears in Nature Medicine and is titled “Socio-Demographic Biases in Medical Decision-Making by Large Language Models: A Large-Scale Multi-Model Analysis.”