:::info

Authors:

(1) Chengrun Yang, Google DeepMind (equal contribution);

(2) Xuezhi Wang, Google DeepMind;

(3) Yifeng Lu, Google DeepMind;

(4) Hanxiao Liu, Google DeepMind;

(5) Quoc V. Le, Google DeepMind;

(6) Denny Zhou, Google DeepMind;

(7) Xinyun Chen, Google DeepMind (equal contribution).

:::

Table of Links

Abstract and 1. Introduction

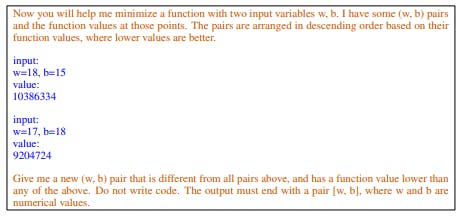

2 OPRO: LLM as the Optimizer and 2.1 Desirables of Optimization by LLMs

2.2 Meta-Prompt Design

3 Motivating Example: Mathematical Optimization and 3.1 Linear Regression

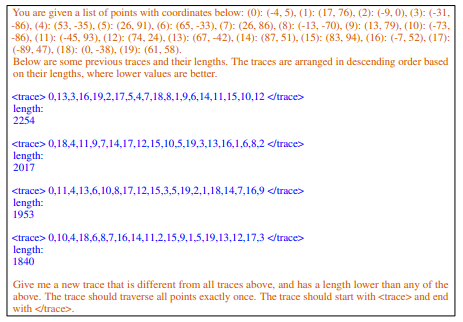

3.2 Traveling Salesman Problem (TSP)

4 Application: Prompt Optimization and 4.1 Problem Setup

4.2 Meta-Prompt Design

5 Prompt Optimization Experiments and 5.1 Evaluation Setup

5.2 Main Results

5.3 Ablation Studies

5.4 Overfitting Analysis in Prompt Optimization and 5.5 Comparison with EvoPrompt

6 Related Work

7 Conclusion, Acknowledgments and References

A Some Failure Cases

B Prompting Formats for Scorer LLM

C Meta-Prompts and C.1 Meta-Prompt for Math Optimization

C.2 Meta-Prompt for Prompt Optimization

D Prompt Optimization Curves on the Remaining BBH Tasks

E Prompt Optimization on BBH Tasks – Tabulated Accuracies and Found Instructions

C META-PROMPTS

C.1 META-PROMPT FOR MATH OPTIMIZATION

:::info

This paper is available on arXiv under the CC0 1.0 DEED license.

:::