As AI copilots and assistants become embedded in daily work, security teams are still focused on protecting the models themselves. But recent incidents suggest the bigger risk lies elsewhere: in the workflows that surround those models.

Two Chrome extensions posing as AI helpers were recently caught stealing ChatGPT and DeepSeek chat data from over 900,000 users. Separately, researchers demonstrated how prompt injections hidden in code repositories could trick IBM’s AI coding assistant into executing malware on a developer’s machine.

Neither attack broke the AI algorithms themselves.

They exploited the context in which the AI operates. That’s the pattern worth paying attention to. When AI systems are embedded in real business processes, summarizing documents, drafting emails, and pulling data from internal tools, securing the model alone isn’t enough. The workflow itself becomes the target.

AI Models Are Becoming Workflow Engines

To understand why this matters, consider how AI is actually being used today:

Businesses now rely on it to connect apps and automate tasks that used to be done by hand. An AI writing assistant might pull a confidential document from SharePoint and summarize it in an email draft. A sales chatbot might cross-reference internal CRM records to answer a customer question. Each of these scenarios blurs the boundaries between applications, creating new integration pathways on the fly.

What makes this risky is how AI agents operate. They rely on probabilistic decision-making rather than hard-coded rules, generating output based on patterns and context. A carefully written input can nudge an AI to do something its designers never intended, and the AI will comply because it has no native concept of trust boundaries.

This means the attack surface includes every input, output, and integration point the model touches.

Hacking the model’s code becomes unnecessary when an adversary can simply manipulate the context the model sees or the channels it uses. The incidents described earlier illustrate this: prompt injections hidden in repositories hijack AI behavior during routine tasks, while malicious extensions siphon data from AI conversations without ever touching the model.

Why Traditional Security Controls Fall Short

These workflow threats expose a blind spot in traditional security. Most legacy defenses were built for deterministic software, stable user roles, and clear perimeters. AI-driven workflows break all three assumptions.

- Most general apps distinguish between trusted code and untrusted input. AI models don’t. Everything is just text to them, so a malicious instruction hidden in a PDF looks no different than a legitimate command. Traditional input validation doesn’t help because the payload isn’t malicious code. It’s just natural language.

- Traditional monitoring catches obvious anomalies like mass downloads or suspicious logins. But an AI reading a thousand records as part of a routine query looks like normal service-to-service traffic. If that data gets summarized and sent to an attacker, no rule was technically broken.

- Most general security policies specify what’s allowed or blocked: don’t let this user access that file, block traffic to this server. But AI behavior depends on context. How do you write a rule that says “never reveal customer data in output”?

- Security programs rely on periodic reviews and fixed configurations, like quarterly audits or firewall rules. AI workflows don’t stay static. An integration might gain new capabilities after an update or connect to a new data source. By the time a quarterly review happens, a token may have already leaked.

Securing AI-Driven Workflows

So, a better approach to all of this would be to treat the whole workflow as the thing you’re protecting, not just the model.

- Start by understanding where AI is actually being used, from official tools like Microsoft 365 Copilot to browser extensions employees may have installed on their own. Know what data each system can access and what actions it can perform. Many organizations are surprised to find dozens of shadow AI services running across the business.

- If an AI assistant is meant only for internal summarization, restrict it from sending external emails. Scan outputs for sensitive data before they leave your environment. These guardrails should live outside the model itself, in middleware that checks actions before they go out.

- Treat AI agents like any other user or service. If an AI only needs read access to one system, don’t give it blanket access to everything. Scope OAuth tokens to the minimum permissions required, and monitor for anomalies like an AI suddenly accessing data it never touched before.

- Finally, it’s also useful to educate users about the risks of unvetted browser extensions or copying prompts from unknown sources. Vet third-party plugins before deploying them, and treat any tool that touches AI inputs or outputs as part of the security perimeter.

How Platforms Like Reco Can Help

In practice, doing all of this manually doesn’t scale. That’s why a new category of tools is emerging: dynamic SaaS security platforms. These platforms act as a real-time guardrail layer on top of AI-powered workflows, learning what normal behavior looks like and flagging anomalies when they occur.

Reco is one leading example.

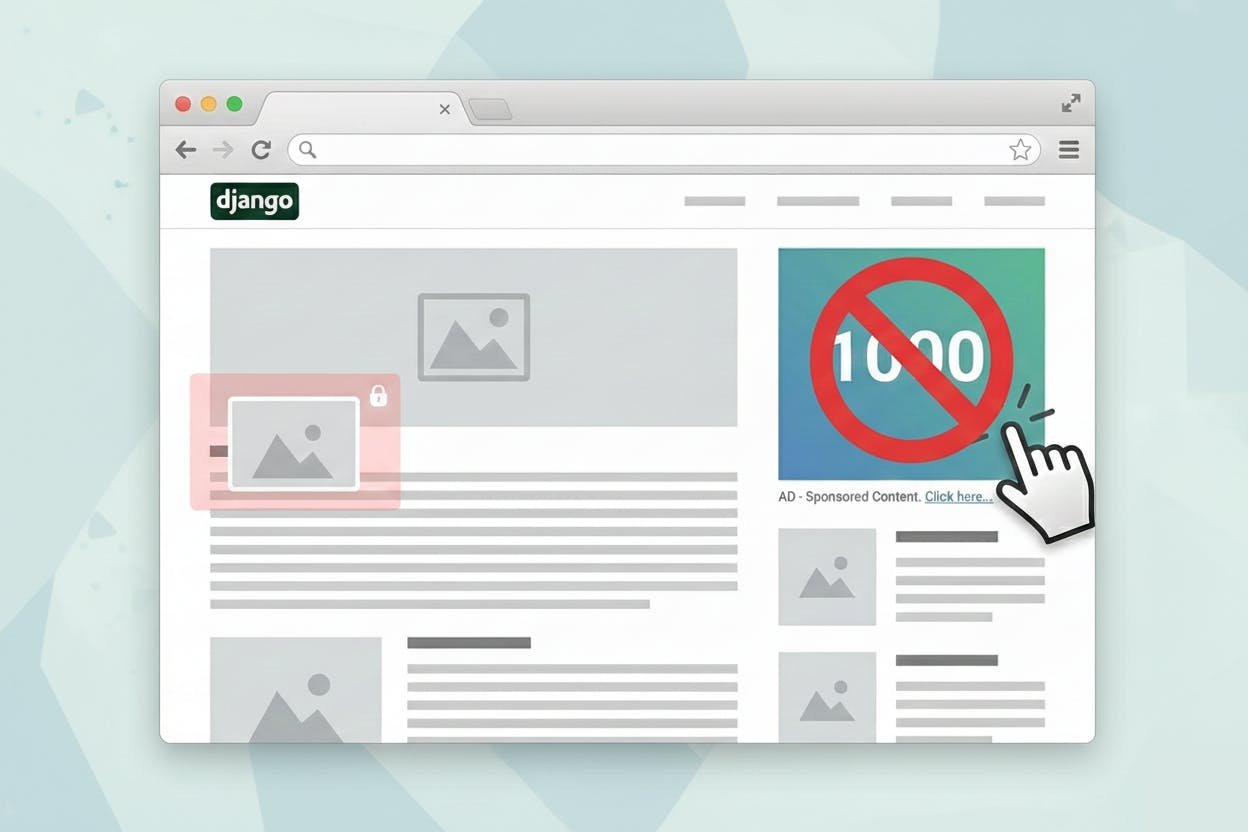

|

| Figure 1: Reco’s generative AI application discovery |

As shown above, the platform gives security teams visibility into AI usage across the organization, surfacing which generative AI applications are in use and how they’re connected. From there, you can enforce guardrails at the workflow level, catch risky behavior in real time, and maintain control without slowing down the business.

Request a Demo: Get Started With Reco.