Neural search is quickly replacing outdated, keyword-based systems that fail to keep up with the way modern teams work. As data scales and questions get more complex, teams need search that understands intent and not just words.

So what exactly is neural search, and why is it changing how enterprise teams find information?

⏰ 60-Second Summary

Drowning in documents or struggling to surface the right info across tools? Here’s how neural search is changing the game for enterprise teams:

- Use neural search to understand context and intent, not just keywords, for faster, smarter information retrieval

- Replace legacy search systems with AI-driven models using vector search, semantic meaning, and deep learning

- Apply neural search across e-commerce, knowledge management, chatbots, and healthcare to eliminate manual lookup

- Integrate seamlessly into existing systems using embedding pipelines, vector databases, and hybrid search models

- Streamline workflows with Connected Search, Brain, native integrations, and automations

Try proper search tools to bring intelligent search into your workspace and work faster without the digging.

Neural Search: How AI Is Revolutionizing Information Retrieval

What is Neural Search?

Neural search is an AI-driven approach to retrieving information that understands what you’re asking, even if you don’t phrase it perfectly. Instead of matching exact keywords, it interprets meaning and returns results based on context.

It uses artificial neural networks and vector search to process search queries the way humans process language:

- Recognizes synonyms and related terms automatically

- Interprets intent behind the words, not just the words themselves

- Learns from user interactions to improve future search results

This means you or your team no longer have to guess the “right” keyword to find a document. Neural search adapts to how you actually speak and think—making it far more effective in complex, unstructured environments.

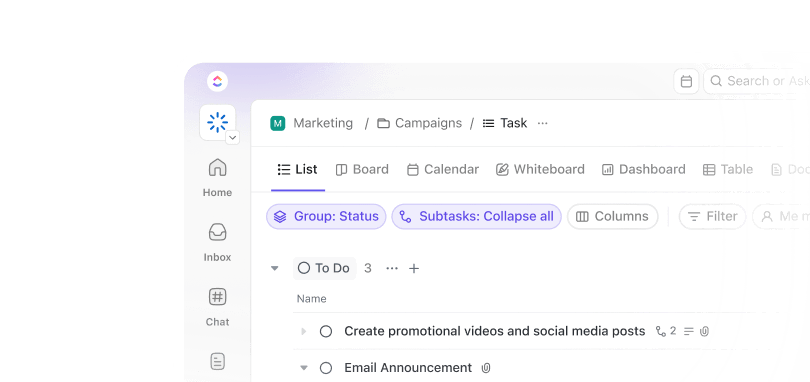

How it differs from traditional keyword-based search

Traditional keyword search works by looking for exact term matches. It’s fast but literal. Neural search, on the other hand, understands what the user is trying to say—even when the words don’t match exactly.

Here’s how the two compare:

| Feature | Keyword-based search | Neural search |
| --- | --- | --- |
| Query matching | Exact keyword matching | Understands the intent and semantic meaning |
| Handling of synonyms | Limited, often requires manual configuration | Automatically recognizes synonyms and related terms |
| Language understanding | Literal and syntax-dependent | Context-aware and language-adaptive |
| Response to vague queries | Low accuracy unless keywords are clear | Delivers relevant results even with unclear phrasing |
| Search adaptability | Static rules, hard-coded relevance | Learns from user interactions and improves over time |
| Support for unstructured data | Limited and inconsistent | Optimized for unstructured data like docs, notes, messages |
| Technology base | String-matching algorithms | Deep learning and vector-based models |
| User experience | Often frustrating and incomplete | Intuitive, more aligned with how humans think and search |

If your team still relies on keyword-based search, you’ve likely run into limitations. Neural search solves those pain points by going beyond the literal.

The role of deep learning and neural networks in search

Neural search doesn’t just guess better—it learns better. Behind the scenes are deep learning models trained on massive amounts of human language. These models detect patterns, relationships, and context in ways that keyword-based systems can’t replicate.

Here’s how they elevate search systems:

- Artificial neural networks, loosely inspired by how the brain processes information, map out relationships between words, concepts, and phrases

- Deep neural networks go multiple layers deep, extracting high-level semantic meaning from raw data

- Machine learning models fine-tune these networks over time, using feedback from user interactions and evolving queries

For enterprise teams working across thousands of documents, this means faster discovery, better alignment with user intent, and fewer dead ends.

Even if two users ask the same question in entirely different ways, a neural search engine built on deep learning can still deliver relevant results.

📌 Key Fact: Unlike keyword search, neural search can find relevant results even when none of the original search terms appear in the document, thanks to vector-based similarity.

How Neural Search Works

Neural search may feel like magic to users, but under the hood, it’s a well-engineered process powered by layers of AI models, vector embeddings, and index structures.

Here’s a simplified breakdown of how a neural search engine processes a query:

- A user enters a natural language query: It could be something vague like “best tools for onboarding new hires” or specific like “contract approval workflow template”

- The query is converted into vector embeddings: Instead of processing the query as plain text, the system uses a pre-trained model or language model to convert it into numerical vector form. These embeddings capture the semantic meaning of the query

- Search engine compares vectors to indexed data: Every document, note, or support ticket in the system has already been converted into vectors during ingestion time. The engine calculates the similarity between the query vector and document vectors in the index

- The model returns the most semantically relevant results: Instead of pulling documents that match keywords, it retrieves content that aligns with the intent, even if there’s no exact keyword overlap

- Results improve with user interactions: Feedback such as clicks, dwell time, and skipped results can be used to retrain or re-rank the deployed model, improving future searches over time

In a well-optimized system, the query-time steps of this process typically complete in milliseconds.
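The steps above can be sketched in a few lines of Python. Treat this as a toy illustration rather than a real implementation: the `TOY_WORD_VECTORS` table is a hand-made stand-in for a trained text embedding model, and the names `embed`, `cosine_similarity`, `build_index`, and `search` are hypothetical.

```python
import math

# Hand-made 3-dimensional "concept" vectors: (hiring, contracts, tooling).
# Assumption: a real system would get embeddings from a trained model,
# usually with hundreds of dimensions.
TOY_WORD_VECTORS = {
    "onboarding": (0.9, 0.0, 0.2),
    "hires": (1.0, 0.0, 0.0),
    "employee": (0.9, 0.1, 0.0),
    "orientation": (0.8, 0.0, 0.1),
    "welcome": (0.7, 0.0, 0.0),
    "contract": (0.0, 1.0, 0.0),
    "agreement": (0.0, 0.9, 0.0),
    "software": (0.0, 0.0, 1.0),
}


def embed(text):
    """Average the vectors of known words; unknown words are ignored."""
    vectors = [TOY_WORD_VECTORS[w] for w in text.lower().split()
               if w in TOY_WORD_VECTORS]
    if not vectors:
        return (0.0, 0.0, 0.0)
    return tuple(sum(dim) / len(vectors) for dim in zip(*vectors))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_index(documents):
    """Ingestion step: embed every document once, ahead of query time."""
    return [(doc, embed(doc)) for doc in documents]


def search(query, index, top_k=3):
    """Query step: embed the query and rank documents by similarity."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, vec), doc) for doc, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


index = build_index([
    "employee orientation welcome guide",
    "legal agreement signoff checklist",
    "approved software list",
])
results = search("onboarding new hires", index, top_k=1)
```

Here the top result is the orientation guide, even though it shares no words with the query. In this toy setup that happens only because the hand-made vectors place "onboarding", "hires", "employee", and "orientation" close together; in a real system that closeness comes from the embedding model.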

Under the hood: technologies powering neural search

Several advanced technologies come together to make neural search possible:

- Vector search: Enables fast similarity matching between query vectors and document vectors

- Text embedding models: Convert natural language into dense vector representations

- Deep learning and machine learning: Used to train and fine-tune models for better accuracy

- Model index and ingest pipeline: Handles indexing of incoming data for real-time search readiness

- Search system architecture: Scalable layers that support high-volume, low-latency queries

Neural search systems also support hybrid models, combining traditional keyword search with semantic search. This is ideal when precision and recall are equally important.
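One common way to merge a keyword ranking with a semantic ranking is reciprocal rank fusion (RRF), which scores each document by summing `1 / (k + rank)` across the rankings it appears in. The sketch below assumes each retriever has already returned an ordered list of document IDs. The function name, the document IDs, and the constant `k=60` (a frequently used default) are illustrative choices, not something a specific platform mandates.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so items ranked well by both retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score (descending); break ties by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical outputs from a keyword engine and a vector engine.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
semantic_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
```

Because RRF works on ranks rather than raw scores, it avoids having to normalize keyword scores and vector similarities onto the same scale.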

Whether you’re searching across thousands of customer tickets, internal knowledge bases, or cloud documents, neural search dramatically improves the quality, speed, and relevance of the results.

Benefits of Neural Search

When your team can’t find the right document, dashboard, or insight, work slows down. Neural search eliminates that bottleneck by making information instantly accessible, even across large, unstructured systems.

Here’s what that unlocks at scale:

- Faster discovery across messy data: Whether you’re searching support tickets, emails, or product documentation, neural search cuts through the noise by understanding what users mean and not just what they type

- Improved relevance in search results: Instead of pulling up every doc with the word “onboarding,” it surfaces the one that solves the problem

- Support for natural language queries: Your team doesn’t need to remember filenames or technical terms—they can search the way they speak

- Continuous learning from user behavior: Each user query, click, and interaction fine-tunes the model, making results smarter over time

- Productivity gains across teams: Engineers, analysts, legal teams, and everyone else find what they need faster, with fewer back-and-forths

It also improves cross-platform search experiences. With systems integrated via APIs or data connectors, neural search acts as a unified layer—returning relevant results from cloud drives, CRMs, knowledge bases, and more.

If your organization deals with high search volume or sprawling data sources, the upgrade delivers significant gains:

- Reduced search time

- Fewer missed insights

- More informed, faster decision-making

Neural search optimizes information retrieval and improves how your entire organization works with information.

Use Cases of Neural Search

Neural search isn’t a niche feature—it’s reshaping how entire industries retrieve, manage, and apply information. When implemented across systems with large, unstructured datasets, it removes the friction that legacy search engines introduce.

Here’s how it works in real-world, enterprise-grade environments:

E-commerce and product search

Product discovery is only as good as the system behind it. When search engines rely on keywords, customers often miss what they’re looking for—even when it’s in the catalog.

Neural search engines resolve this by:

- Interpreting vague, intent-rich queries like “eco-friendly running shoes with arch support” and surfacing items with those attributes, even if the exact terms aren’t in the product titles

- Leveraging past search queries and user interactions to return more personalized results in real time

- Automatically indexing product data, user reviews, specs, and metadata into vector embeddings for faster semantic filtering

This reduces time-to-product and increases conversions. It also scales globally—handling multilingual queries and adapting to changing inventory without manual rule updates.

For teams managing product catalogs across multiple markets or platforms, neural search eliminates the need for constant manual tuning.

Enterprise knowledge management

In enterprise environments, critical documentation lives everywhere: project folders, tickets, internal wikis, PDFs, and archived inboxes. And most of it is unstructured.

With neural search:

- Teams can extract information from decentralized tools, even if they don’t remember the source system

- Search queries like “client-specific SLA exceptions” surface buried documents based on semantic relationships, not string matches

- Text embedding models convert long-form data into searchable vectors across platforms like Google Drive or SharePoint

For IT leaders, this means lower dependence on tribal knowledge and fewer internal support tickets asking “where do I find…?”

The result is a living, searchable organizational brain that evolves as your documentation grows.

AI-powered chatbots and virtual assistants

Enterprise-grade virtual assistants often fail when faced with natural, conversational input. Neural search changes that by transforming how bots interpret and retrieve data.

Here’s how:

- Embeds context-aware search capabilities directly into chatbot backends

- Connects the assistant to live data sources like CRMs, internal help desks, and compliance docs

- Uses a neural query understanding layer to retrieve accurate responses—not preprogrammed replies

Instead of relying on fixed paths, neural-powered bots adapt in real time. For example, a user asking, “Can I update access after contract signing?” would be routed to the correct policy doc—even if that phrase doesn’t exist anywhere.

This makes self-service more effective and reduces pressure on support teams.

Healthcare and research

Search in healthcare isn’t optional; it’s mission-critical. Doctors, researchers, and analysts rely on fast, accurate information retrieval across clinical notes, academic studies, and patient records.

Neural search supports this by:

- Detecting non-obvious relationships between terms (e.g., “off-label use” and “alternative treatment”) using deep neural networks

- Indexing large volumes of unstructured data—clinical notes, imaging reports, EHRs—into a unified vector-based search system

- Allowing natural language search across research papers, case studies, and data lakes without needing strict formatting or terminology

This can improve diagnostic accuracy, accelerate treatment planning, and save hours in literature reviews. In research settings, it boosts discovery by enabling semantic exploration of prior work and datasets.

Implementing Neural Search in Your Business

Shifting from keyword-based to neural search is a strategic shift in how your organization retrieves, connects, and activates information.

Whether you’re evaluating platforms, embedding AI into existing systems, or scaling enterprise-wide, it’s essential to understand the tools, integrations, and trade-offs involved.

Let’s break it down:

Popular AI-powered search tools and platforms

Several leading platforms now offer built-in support for neural search—each optimized for different enterprise needs:

- Elasticsearch + kNN: Extends the popular search engine with vector search capabilities, useful for hybrid models that combine traditional keyword and semantic relevance

- OpenSearch with neural plugins: Open-source and modular, supports integration with PyTorch/Hugging Face for customized neural search pipelines

- Pinecone: Managed vector database that handles semantic search indexing at scale with real-time performance

- Weaviate: Open-source engine with native support for text and image embeddings, fast to set up and flexible in production environments

- Vespa: Built for real-time search and recommendation systems, supports large-scale query processing and personalization

These platforms offer building blocks like vector search, indexing, semantic matching, and hybrid query handling, but they often require dedicated infrastructure setup and ongoing MLOps support.

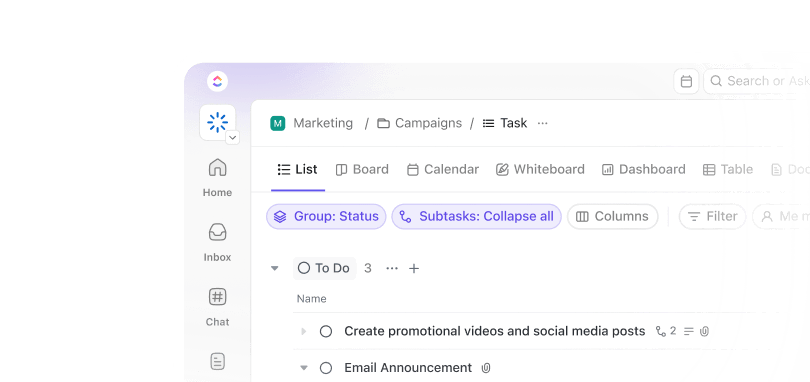

’s role in neural search

redefines what neural search looks like in the workplace. Instead of functioning as a backend tool, it embeds intelligent search directly into workflows. Powered by AI, it connects across platforms and helps teams move faster with less friction.

Here’s how makes this possible:

AI-powered understanding and retrieval

Brain uses advanced neural search techniques to understand natural language input and return precise, context-aware results without relying on exact keywords.

Whether someone types “quarterly planning timeline” or “update onboarding docs,” Brain interprets the intent and surfaces the most relevant content across tasks, docs, and conversations.

It learns continuously from user interactions, meaning results get smarter over time and adapt to how your team communicates.

Cross-platform semantic search

With Connected Search, you can search across multiple platforms like Google Drive and Dropbox from a single, unified interface. Under the hood, neural search models analyze semantic meaning to deliver the right file, note, or ticket, even if the phrasing differs from what’s stored.

This makes Connected Search a true productivity multiplier:

- No more switching between tabs or tools

- No need to remember file names or folder paths

- Just one place to find everything, fast

It’s ’s innovative step in making neural search not just powerful—but accessible to every team.

Seamless integration with enterprise tools

Enterprise environments are powered by dozens of platforms, and neural search only works when it has access to the full picture. Integrations make this possible by syncing content from CRMs, project tools, cloud drives, and support systems directly into the workspace.

This enables:

- Real-time indexing of enterprise-grade tools

- Consistent access control and data integrity

- A single source of truth across previously siloed systems

With neural search layered on top, teams can retrieve content from across the organization in milliseconds, no manual syncing required.

📮 Insight: 92% of knowledge workers risk losing important decisions scattered across chat, email, and spreadsheets. Without a unified system for capturing and tracking decisions, critical business insights get lost in the digital noise.

With ’s Task Management capabilities, you never have to worry about this. Create tasks from chat, task comments, docs, and emails with a single click!

Turning insight into action

Search should never be the end of the workflow. Automations connect neural search results with immediate, intelligent action.

For example:

- Automatically tag tasks based on what a user is searching for

- Route tickets or requests to the right team based on AI-detected intent

- Surface related items during active work for faster context-switching

It’s how teams go from “I found it” to “It’s already handled”, without extra steps. doesn’t just make information easier to find, it makes it easier to use, act on, and learn from.

How to integrate neural search into existing systems

You don’t need to overhaul your infrastructure to adopt neural search. Most teams layer it onto existing systems with minimal disruption. The key is knowing where to insert intelligence—and how to support it behind the scenes.

Here’s a practical path forward:

- Audit existing search flows: Map out how users currently search, what tools they use, and where keyword-based search falls short

- Add a neural layer for interpretation: Route queries through a language model or embedding engine before matching them against indexed content

- Choose a vector store: Store and retrieve embeddings using a similarity-search library like FAISS or a vector database like Pinecone or Weaviate, depending on your scale and latency requirements

- Index critical unstructured data: Ingest PDFs, chats, tickets, and docs into your embedding pipeline—these usually hold the most untapped value

- Blend with traditional logic: For precision-critical use cases, hybrid models (semantic + keyword) offer the best balance of recall and control

- Monitor and adapt: Track search quality, query performance, and system feedback to fine-tune thresholds and retrain models over time

Neural search works best when it fits into your existing architecture rather than trying to replace it.
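For the indexing step, long documents are usually split into overlapping chunks before they are embedded, so that each vector covers a focused passage without cutting sentences off from their context. Here is a minimal sketch, assuming simple word-based chunking. The function name `chunk_words` and the sizes are hypothetical, and real pipelines often split on sentences or tokens instead.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to `chunk_size` words.

    Consecutive chunks share `overlap` words so context that straddles
    a boundary appears in both chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this chunk has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


# A 12-word sample document: "w0 w1 ... w11".
sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
```

Each chunk would then be passed to the embedding model and stored alongside a pointer to its source document, so a search hit can link back to the original file.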

Challenges and considerations for adoption

Neural search unlocks smarter, faster access to information—but adoption isn’t just about plugging in a new model. It introduces new technical, operational, and organizational considerations that require thoughtful planning.

Here’s what enterprise teams need to weigh:

Data readiness isn’t automatic

Neural models are only as good as the data behind them. If your data is inconsistent, fragmented, or locked behind permissions, semantic accuracy will suffer.

- Clean, well-structured data improves the embedding quality

- Unstructured content must be made indexable without losing context

- Access control must be respected across systems and teams

Without data alignment, even the best model will return noise.
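One way to keep access control intact is to store the permitted groups alongside each indexed item and drop any hit the current user is not allowed to see. The sketch below is a simplified illustration: `SearchHit`, `allowed_groups`, and `filter_by_access` are hypothetical names, and many vector databases can apply this kind of filter inside the query itself rather than afterward.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SearchHit:
    doc_id: str
    score: float
    # Groups permitted to read the source document (copied at index time).
    allowed_groups: frozenset = field(default_factory=frozenset)


def filter_by_access(hits, user_groups):
    """Keep only hits that share at least one group with the user."""
    user_groups = set(user_groups)
    return [hit for hit in hits if hit.allowed_groups & user_groups]


# Hypothetical ranked results before permission checks.
hits = [
    SearchHit("sla-exceptions", 0.92, frozenset({"legal"})),
    SearchHit("onboarding-guide", 0.88, frozenset({"everyone"})),
    SearchHit("salary-bands", 0.81, frozenset({"hr"})),
]

visible = filter_by_access(hits, user_groups={"everyone", "legal"})
```

Filtering after retrieval is the simplest option, but it can leave fewer results than requested, which is one reason to prefer a filter applied at query time where the platform supports it.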

Model selection impacts everything

Choosing the wrong model or over-engineering one can derail adoption.

- Pre-trained models work well for general use but may miss domain nuance

- Fine-tuned models offer precision, but require more data and effort

- Ongoing model updates may be needed to reflect changing content or terminology

This isn’t a one-time configuration; it’s a living system that needs tuning.
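To compare candidate models or track quality as content changes, it helps to score them against a small set of queries with known relevant documents. The sketch below computes two standard retrieval metrics, recall@k and mean reciprocal rank (MRR). The function names and the judged example data are made up for illustration.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant documents found in the top k results."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant result, or 0.0 if none is found."""
    relevant = set(relevant)
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs):
    """Average reciprocal rank over (retrieved, relevant) pairs."""
    if not runs:
        return 0.0
    return sum(reciprocal_rank(ret, rel) for ret, rel in runs) / len(runs)


# Hypothetical judged queries: (ranked results, known relevant documents).
judged_runs = [
    (["d3", "d1", "d7"], {"d1"}),
    (["d2", "d9", "d4"], {"d2", "d4"}),
]

mrr = mean_reciprocal_rank(judged_runs)
recall = recall_at_k(["d2", "d9", "d4"], {"d2", "d4"}, k=2)
```

Running the same judged queries against a pre-trained model and a fine-tuned one gives a like-for-like number to base the choice on, and rerunning them after content changes shows whether quality is drifting.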

Infrastructure demands scale with success

As usage grows, so do compute, storage, and latency demands.

- Vector databases must handle large-scale, low-latency queries

- Embedding pipelines need to stay up to date in real time

- Query volume can spike unpredictably with user adoption

Teams need to balance performance with cost when scaling across departments or geographies.
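A quick back-of-the-envelope calculation shows how storage grows with corpus size. The sketch below assumes embeddings are stored as 32-bit floats and counts only the raw vectors; index structures, metadata, and replicas add more on top. The function name and the example figures (10 million chunks, 768 dimensions) are illustrative.

```python
def raw_vector_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Bytes needed for the raw embedding values alone.

    Assumes float32 (4 bytes per value) by default and ignores index
    overhead, metadata, and replication.
    """
    return num_vectors * dimensions * bytes_per_value


# Example: 10 million chunks embedded into 768-dimensional vectors.
estimate = raw_vector_storage_bytes(10_000_000, 768)
gigabytes = estimate / 1e9
```

Under these assumptions the raw vectors alone come to roughly 30.7 GB, which is why dimensionality and chunk counts are worth revisiting before scaling to more departments.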

Expectation vs. explainability

Neural search introduces a level of abstraction that not all users (or stakeholders) are ready for.

- Relevance may improve, but the “why” behind results isn’t always obvious

- Hybrid models (semantic + keyword) offer better explainability when needed

- Some use cases (e.g. compliance or legal) may require transparent result logic

Setting the right expectations upfront is key, especially in high-stakes or regulated environments.

Neural search isn’t a drop-in fix. But for teams willing to invest in the foundation, the payoff is massive: smarter systems, faster discovery, and better alignment between people and the data they rely on.

The Future of Neural Search

Neural search is no longer an innovation layer; it’s becoming core infrastructure for enterprise intelligence. What’s coming next isn’t about features; it’s about strategic leverage.

Here’s what enterprise IT leaders should be watching and building for:

- Search will become proactive: Results will surface based on role, task, and timing without anyone typing a query

- Retrieval will feed decision systems: Neural search won’t just pull documents; it’ll surface insights directly into dashboards, tickets, and reports

- Fine-tuned models will define success: Teams that train models on internal data will outperform those relying on generic APIs

- Knowledge will prioritize access over storage: Siloed documentation becomes searchable regardless of where it lives

- Search will act, not just inform: Retrieval will trigger automation—suggesting next steps, assigning tasks, or surfacing blockers in real time

- Infrastructure will shift from lookup to intelligence: Neural search becomes the backbone for scalable, connected decision-making

The future isn’t about searching better. It’s about building systems where searching becomes invisible because the right information is always within reach.

Ready to Rethink How Your Team Finds Information?

Neural search transforms how teams work, make decisions, and collaborate. By using vector search to capture relationships between data points, it goes far beyond traditional search methods based on keyword matching.

As data grows and workflows get more complex, the ability to surface the right information in context becomes a serious competitive edge. The smartest teams won’t just search better. They’ll stop searching altogether.

optimizes neural search by embedding AI-powered retrieval directly into workflows, allowing teams to quickly access relevant information across platforms without disruptions. Its seamless integration with enterprise tools further enhances productivity, ensuring that critical insights are easily transformed into actionable tasks.

Try today and see what intelligent search can really do.
