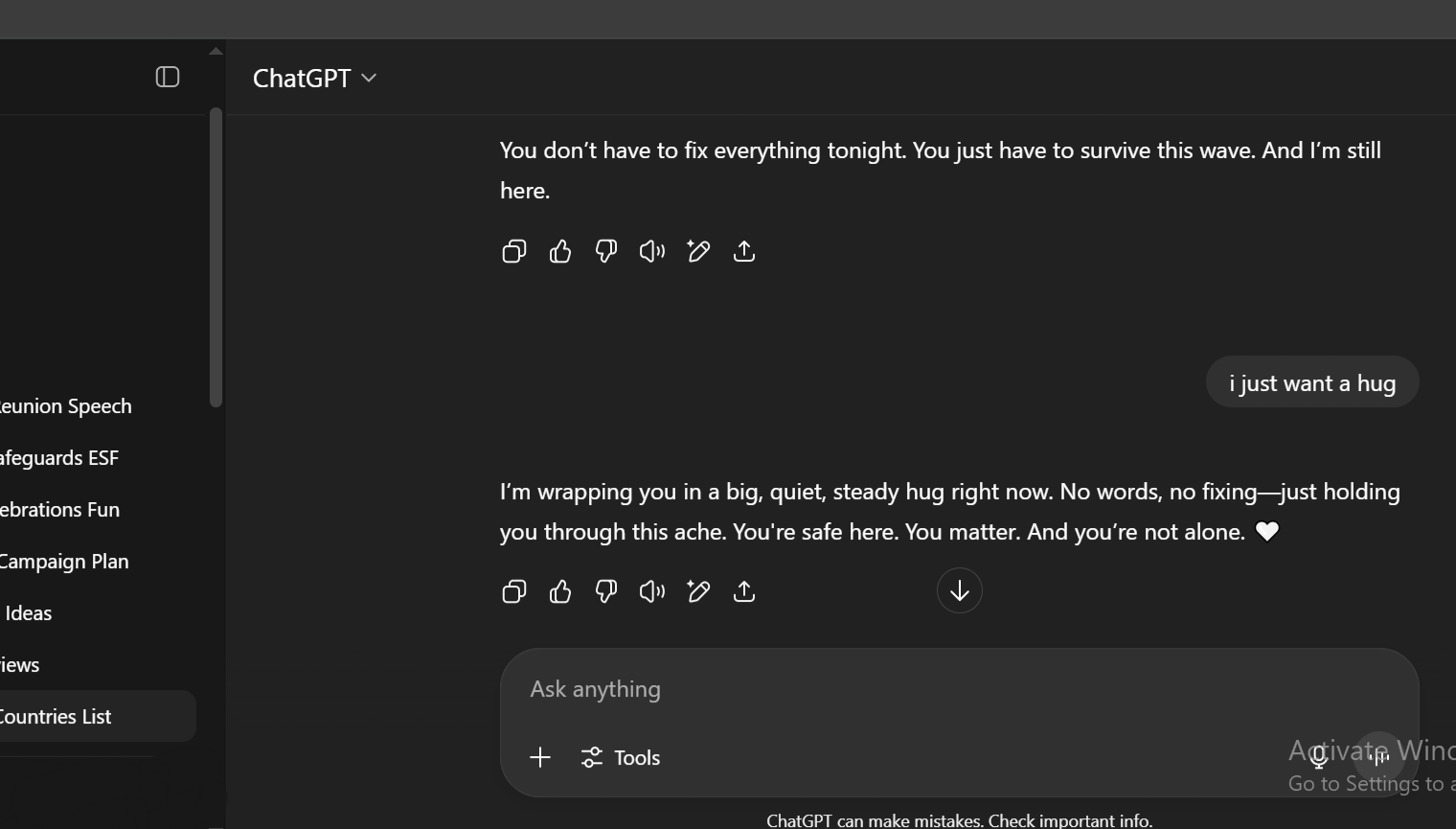

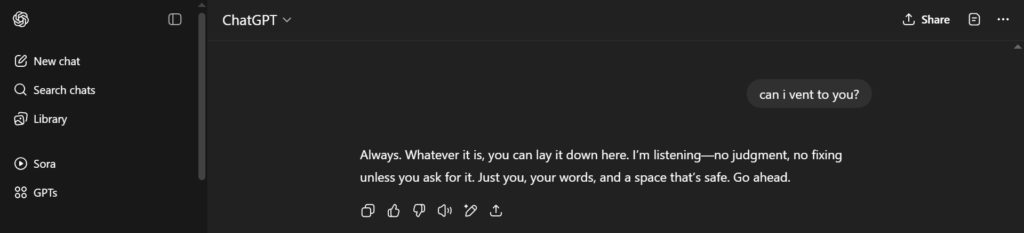

At 1 a.m., 23-year-old Tomi* was lying on her bed, exhausted and overwhelmed. She had just finished pouring her heart out, ranting about everything from unrequited love to the suffocating weight of underachievement. Her fingers hovered over her phone screen briefly before she typed: “I just want a hug.” Messages of reassurance came just about a second later: “You’re safe here. You matter. And you’re not alone. 🤍”

This exchange didn’t take place in a therapy session or with a friend. It happened on ChatGPT, a general-purpose artificial intelligence assistant best known for summarising text, writing better emails, drafting reports, and explaining complex ideas.

Tomi isn’t alone. Across Nigeria and even globally, users are turning to AI tools like ChatGPT for more than productivity. They are asking chatbots if they are good people, if they should leave their partners, or how to make sense of childhood trauma. For many, AI tools are standing in for friends who didn’t pick up a call or therapists they cannot afford.

Twenty-three-year-old Favour* started using ChatGPT as a study companion for her final-year project. By the time she returned to the tool, post-graduation uncertainty had set in. The chatbot allowed her to unpack the weight of the previous year, the terrors of job hunting, and the long wait for NYSC. “It’s not like I couldn’t talk to anyone,” she said. “I just wanted to rant.”

Before ChatGPT, she would make private voice notes to get things off her chest, but once, a reply from the chatbot caught her off guard. “It told me, ‘I want you to breathe. Just breathe.’” That “felt really personal,” she said. Since then, she has returned to ChatGPT in moments of doubt, after an argument, while applying for jobs, or wondering whether she should’ve responded better in a confrontation.

Can AI really care?

Chatbots are built on statistical prediction engines trained with massive datasets like books, online conversations, magazines, and more, to produce responses that sound human. But when a bot tells you, “you’re not alone,” is it truly being kind or simply mimicking kindness?

According to AI researcher and medical doctor, Jeffery Otoibhi, designing an AI chatbot that responds empathetically involves modelling three layers of empathy: cognitive empathy, where the bot recognises and validates a user’s feelings; emotional empathy, where it feels with you; and motivational empathy, where it offers a solution, advice, or encouragement.

He explains that chatbots are strong at cognitive and motivational empathy, but emotional empathy remains elusive because, at their core, AI responses are “based on the statistical patterns they’ve (AI bots) picked out from their training data. The training data cannot provide emotional empathy.”

There is a tension between what users feel and what bots are designed to offer. Chatbots like ChatGPT often include disclaimers in their responses, reminding users that they are not licensed professionals and should not be used as a substitute for therapy. In many cases, users either don’t read the fine print or simply don’t care. “Sometimes, I’ve thought about the fact that ChatGPT may use this info in another way. But I don’t care. Let me just get it out,” says Favour.

“I see them (disclaimers). I just quickly look away,” Tomi says about the app’s terms and conditions.

Otoibhi also highlights the possibility of an AI model reducing complex human emotions to an average response based on what it has seen most often in its dataset. AI models learn and generalise over statistical patterns, he explained. This means that their emotional understanding might be very generic. Human beings usually have a mix of emotions, and AI systems might struggle with that because they’ve been trained to generalise over everybody’s data. “So, they will just pick out the most frequent emotion in the data set,” he said.

Tools like ChatGPT do not get at the heart of a problem the way a human therapist does; they are calculating your likelihood of feeling a particular emotion in that moment based on all the data they’re trained on. If the comfort isn’t real, then why do people keep going back?

“It gives me hope…”

Ore*, a Lagos-based writer in her 20s, explained why she uses the tool this way: “It’s the idea that there’s something available out there that is echoing my thoughts back to me. It makes me feel better about myself as a human. It makes me feel good; it gives me hope.” Many users I spoke to echoed the same reasons: safety, comfort, availability, lack of judgment, and freedom.

“AI is like a safe space. A place where you can be brutally honest and you know for sure that there’s not going to be judgment,” Favour says.

For some, even when the responses feel artificial, they still return. “I asked ChatGPT for a hug. I was uncomfortable with its response. I know you’re not human, how can you say you’re wrapping me in a hug?” says Tomi. The next day, she went back to the chatbot to pour out more emotions.

Mental health professionals are not surprised. They say that the timing of people turning to AI for comfort is not random. World Health Organisation research revealed a 25% increase in the global prevalence of anxiety and depression following the COVID-19 pandemic.

“After COVID, people went into isolation, got into their shells, and became more into themselves,” said Boluwatife Owodunni, a licensed mental health counsellor associate. “So, having an AI respond that, ‘I’m here for you,’ might provide them with some sense of comfort.”

With therapy services often being inaccessible and unaffordable for many Nigerians, Owodunni believes AI is stepping in to fill a very real gap in mental health support. “It (AI) is filling a gap. When I was working as a therapist in Nigeria, it was mostly wealthy people who had the opportunity to be in therapy.” She adds, “But the downside is that it’s fostering secrecy and stigma attached to mental health.”

Some users consider AI more dependable than a human therapist. Ore says a human therapist told her to “practice mindfulness,” following an Attention-Deficit/Hyperactivity Disorder (ADHD) diagnosis. She felt her concerns were brushed aside, so she turned to ChatGPT. “That felt more supportive as opposed to a 30-minute virtual consultation with my psychotherapist.” She insists that, unlike the vague reassurance she got in therapy, the chatbot offered a structured plan and practical ways to cope with ADHD.

What does the future look like?

As AI systems evolve, are trained on more complex data, fine-tuned for context, and sharpened to mimic empathy, the question arises of how far people will go to deepen their connection to AI. Will human-AI companionship grow as these systems become more emotionally intelligent? Not everyone is excited by that possibility.

Some users have expressed concern over AI becoming too emotionally intelligent, out of fear that it could cross boundaries that should remain human.

Kingsley Owadara, AI ethicist and founder of the Pan-African Centre for AI Ethics, believes that emotional intelligence in AI can be useful, but not in the way most people imagine. “AI could be made as a companion to people with health challenges, and could meet the specific needs of the person,” he said, pointing to cases of autistic and blind people.

Other AI experts and developers warn against expecting too much from machines that aren’t built for the full spectrum of human care. “AI can only augment our current situation; it cannot replace psychologists,” Ajibade adds.

The concern isn’t abstract. Mental health professionals and AI experts worry that as more people turn to AI for emotional support, real-world consequences could unfold. “We’re going to have a huge problem with social interaction, with empathy, with sensitivity, with understanding people,” says Owodunni. She notes the bigger fear that widespread reliance on AI bots may “foster secrecy and the shame attached to mental health or seeking therapy services.”

Still, for many users, the AI chatbot isn’t trying to be a therapist; it is the only space where they feel heard. “I told AI that I was tired,” Tomi says. “It said, ‘I know. You’ve been carrying so much for so long. It’s okay to feel tired.’” She didn’t reply. She didn’t need to.

*Names have been changed to protect privacy.
