OpenAI today detailed o3, its new flagship large language model for reasoning tasks.

The model’s introduction caps off a 12-day product announcement series that started with the launch of a new ChatGPT plan. ChatGPT Pro, as the $200 per month subscription is called, features a predecessor of the new o3 LLM. OpenAI also released its Sora video generator and a bevy of smaller product updates.

The company has not detailed how o3 works or when it might become available to customers. However, it did release results from a series of benchmarks that evaluated how well o3 performs various reasoning tasks. Compared with earlier LLMs, the model demonstrated significant improvements across the board.

Perhaps the most notable of the benchmarks that OpenAI used is called ARC-AGI-1. It tests how well a neural network performs tasks that it was not specifically trained to perform. This kind of versatility is seen as a key prerequisite for creating artificial general intelligence, or AGI, a hypothetical future AI that can perform many tasks with the same accuracy as humans.

Using a relatively limited amount of computing power, o3 scored 75.7% on ARC-AGI-1. That percentage grew to 87.5% when the model was given access to more infrastructure. GPT-3, the LLM that powered the original version of ChatGPT, scored 0%, while the GPT-4o model released earlier this year managed 5%.

“Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don’t think o3 is AGI yet,” François Chollet, the developer of the benchmark, wrote in a blog post. “You’ll know AGI is here when the exercise of creating tasks that are easy for regular humans but hard for AI becomes simply impossible.”

OpenAI says that o3 also achieved record-breaking performance on FrontierMath, one of the most difficult AI evaluation benchmarks available. It comprises several hundred advanced mathematical problems that were created with input from more than 60 mathematicians. OpenAI says that o3 solved 25.2% of the problems in the test, easily topping the previous high score of about 2%.

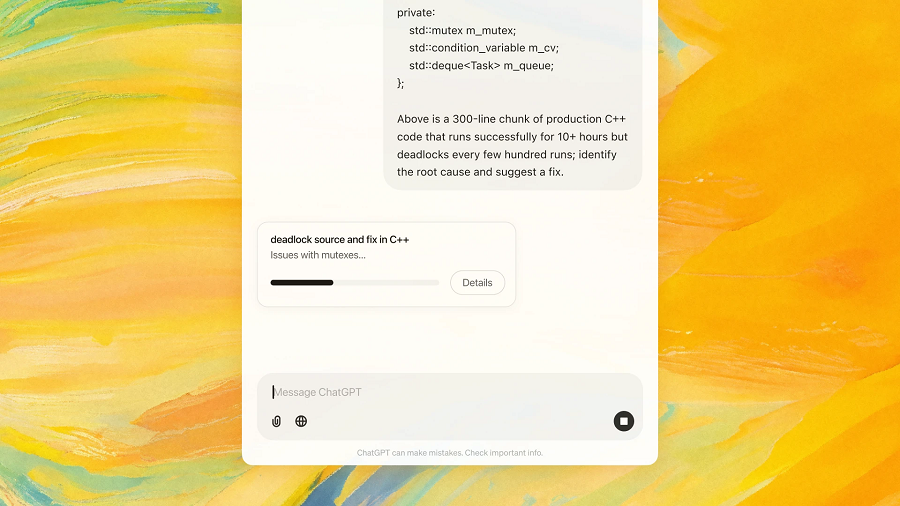

Programming is another use case for the LLM. According to OpenAI, o3 outperformed the previous-generation o1 model on the SWE-Bench Verified benchmark by 22.8 percentage points. The benchmark includes questions that challenge AI models to find and fix a bug in a code repository based on a natural language description of the problem.

OpenAI detailed that o3 is available in two flavors: a full-featured edition called simply o3, and o3-mini. The latter release is presumably a lightweight version that trades off some output quality for faster response times and lower inference costs. The previous-generation o1 model is also available in such a scaled-down edition.

Initially, OpenAI is only making o3 accessible to a limited number of AI safety and cybersecurity researchers. Their feedback will help the company improve the safety of the model before it’s made more broadly available.

In a blog post, OpenAI detailed that it built o3 using a new technique for preventing harmful output. The method, deliberative alignment, allows researchers to supply AI models with a set of human-written safety guidelines. It works by embedding those guidelines into the training dataset with which an LLM is developed.

Image: OpenAI
