Table of links

ABSTRACT

1 INTRODUCTION

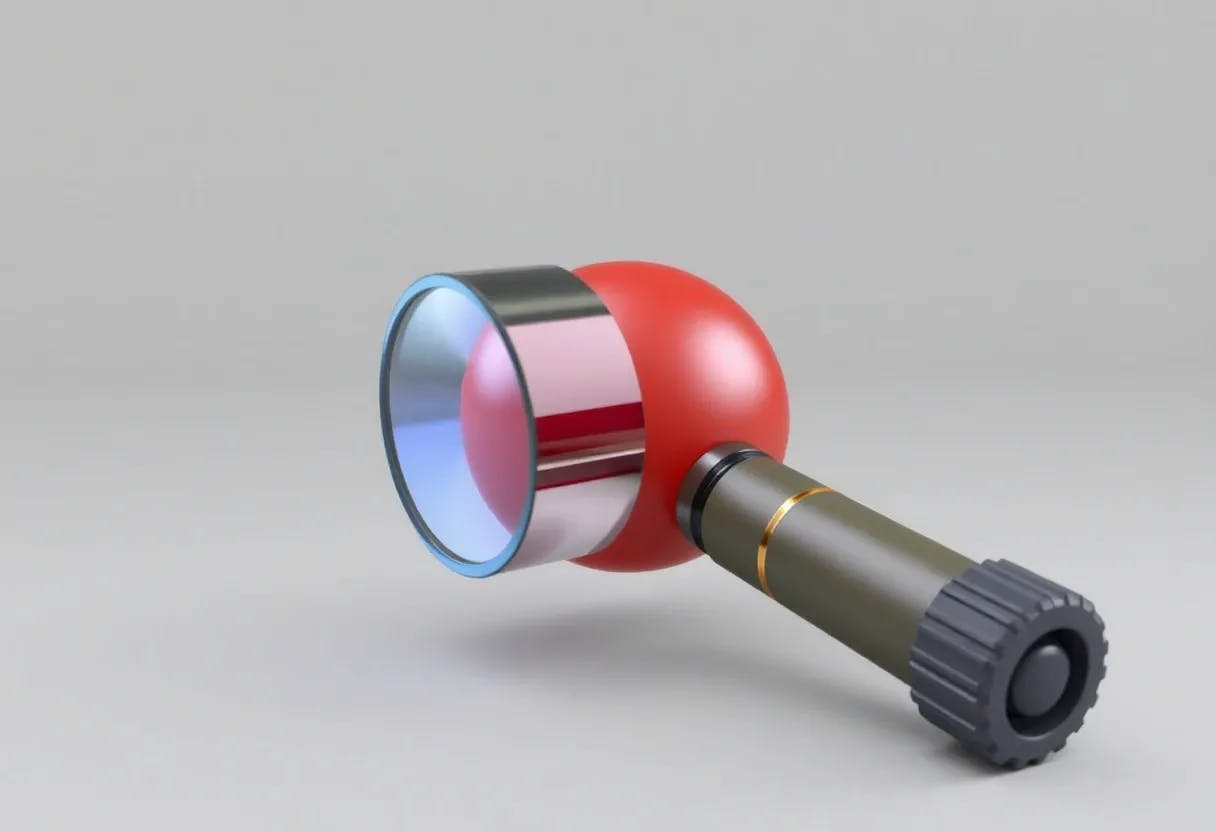

2 BACKGROUND: OMNIDIRECTIONAL 3D OBJECT DETECTION

3 PRELIMINARY EXPERIMENT

3.1 Experiment Setup

3.2 Observations

3.3 Summary and Challenges

4 OVERVIEW OF PANOPTICUS

5 MULTI-BRANCH OMNIDIRECTIONAL 3D OBJECT DETECTION

5.1 Model Design

5.2 Model Adaptation

6 SPATIAL-ADAPTIVE EXECUTION

6.1 Performance Prediction

6.2 Execution Scheduling

7 IMPLEMENTATION

8 EVALUATION

8.1 Testbed and Dataset

8.2 Experiment Setup

8.3 Performance

8.4 Robustness

8.5 Component Analysis

8.6 Overhead

9 RELATED WORK

10 DISCUSSION AND FUTURE WORK

11 CONCLUSION AND REFERENCES

6 SPATIAL-ADAPTIVE EXECUTION

Panopticus dynamically schedules the inference configuration of the multi-branch model at runtime to maximize detection accuracy within the latency target $T$. Specifically, the goal of the scheduler is to find the optimal selection $c^*$ of $N$ image-branch pairs, given the possible inference branches $\{B_i\}_{i=0}^{M}$ and the multi-view images $\{I_t^j\}_{j=0}^{N}$ at time $t$. The key idea is to use performance predictions for each image-branch pair. The scheduler's objective function is formulated as follows:
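A form of the objective consistent with the definitions that follow is sketched here; it is a reconstruction from the surrounding text, and the authors' exact notation may differ:

$$
c^* = \underset{\{d_{ij}\}}{\arg\max} \; \sum_{i=0}^{M} \sum_{j=0}^{N} f_A(B_i, I_t^j)\, d_{ij} \quad \text{s.t.} \quad \sum_{i=0}^{M} \sum_{j=0}^{N} f_L(B_i, I_t^j)\, d_{ij} \le T
$$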

where $f_A$ and $f_L$ denote the branch accuracy and latency predictors, respectively. Here, $d_{ij} \in \{0, 1\}$ is a binary decision variable, where $d_{ij} = 1$ means that the $i$-th branch runs on the $j$-th image. Each image is allocated exactly one branch, i.e., $\sum_{i=0}^{M} d_{ij} = 1, \forall j \in \{0, \ldots, N\}$. Conversely, a single branch can process multiple images, resulting in $M^N$ possible configurations in total. In the following, we describe the method to find $c^*$.

6.1 Performance Prediction

To find the optimal selection of image-branch pairs, Panopticus first predicts the expected accuracy and latency of each pair. As described in Section 3, the model’s detection capabilities are influenced by spatial characteristics, such as the number, location, and movement of objects. Panopticus predicts changes in the spatial distribution considering these factors. The forecasted spatial distribution is then harnessed to predict the expected accuracy and processing time of possible branch-image selections.

Prediction of spatial distribution. To predict the expected spatial distribution at the upcoming time $t$, Panopticus uses the future states of tracked objects estimated via a 3D Kalman filter, described in Section 5.1. Based on the objects' state predictions, such as expected locations, each object is categorized according to the property levels described in Table 2. Consequently, each object is classified into one of 80 categories (5 distance levels × 4 velocity levels × 4 size levels). For instance, a pedestrian standing nearby has levels D0, V0, and S0. The predicted spatial distribution vector $D'_t$ is then calculated as follows:

$$
D'_t = [\,p_{D_0 V_0 S_0},\; p_{D_1 V_0 S_0},\; \ldots,\; p_{D_4 V_3 S_3}\,], \tag{4}
$$

where each element represents the ratio of the number of objects in each category to the total number of tracked objects at time $t$. In practice, Panopticus calculates a separate vector $D'^{j}_t$ for each camera view $j$. To do so, Panopticus identifies the camera view that contains the predicted 3D center location of each object. The predicted distribution vectors therefore allow the expected scene complexities to be compared across camera views.
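The per-view distribution vectors can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the level boundaries (`DIST_EDGES`, `VEL_EDGES`, `SIZE_EDGES`), the `TrackedObject` fields, and the ordering of categories beyond what Eq. 4 shows are all assumptions, since the actual thresholds are defined in Table 2. Each view's counts are normalized by the total number of tracked objects, so that views remain comparable.

```python
from bisect import bisect_right
from dataclasses import dataclass

# Hypothetical level boundaries; the real ones come from Table 2.
DIST_EDGES = [10.0, 20.0, 30.0, 40.0]  # metres -> D0..D4 (5 levels)
VEL_EDGES = [0.5, 2.0, 5.0]            # m/s    -> V0..V3 (4 levels)
SIZE_EDGES = [1.0, 3.0, 6.0]           # metres -> S0..S3 (4 levels)
NUM_CATEGORIES = 5 * 4 * 4             # 80 categories in total


@dataclass
class TrackedObject:
    distance: float  # predicted distance from the device
    speed: float     # predicted speed
    size: float      # box size (assumed: largest dimension)
    view: int        # camera view containing the predicted 3D center


def category_index(obj: TrackedObject) -> int:
    """Map an object to a flat index, with the distance level varying
    fastest (p_D0V0S0, p_D1V0S0, ...). The V-before-S order is assumed."""
    d = bisect_right(DIST_EDGES, obj.distance)
    v = bisect_right(VEL_EDGES, obj.speed)
    s = bisect_right(SIZE_EDGES, obj.size)
    return d + 5 * v + 20 * s


def distribution_per_view(objects, num_views):
    """Return one 80-dimensional ratio vector per camera view."""
    counts = [[0] * NUM_CATEGORIES for _ in range(num_views)]
    for obj in objects:
        counts[obj.view][category_index(obj)] += 1
    total = len(objects)
    if total == 0:
        return [[0.0] * NUM_CATEGORIES for _ in range(num_views)]
    return [[c / total for c in row] for row in counts]
```

With this layout, a nearby standing pedestrian (D0, V0, S0) contributes to element 0 of the vector for the view that contains it.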

Accuracy prediction. The goal of the accuracy predictor $f_A$ is to predict the expected accuracy of each branch-image pair. We model the detection performance of the pairs using the predicted spatial distribution. We realize $f_A$ as a regression model, specifically an XGBoost regressor trained on a validation set from the nuScenes dataset. The predictor outputs the detection score (detailed in Section 8.2) of each branch based on the spatial distribution vector. For each pair of detection branch and camera view $j$, $f_A$ takes the estimated spatial distribution $D'^{j}_t$ and a one-hot encoded branch type as inputs. As a result, predicted scores for the 16 detection branches are generated for each view $j$. To predict the detection score of the tracker's branch for each view $j$, $f_A$ uses $D'^{j}_t$ and the average confidence level of the tracked objects. The rationale for using the confidence level is that objects with higher certainty are more likely to appear in the near future.
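The inputs described above could be assembled as in the following sketch. The feature layout (distribution vector first, then the one-hot branch encoding or the average confidence) and the function names are assumptions made for illustration; the regressor itself is omitted, and the rows produced here would be passed to a trained model.

```python
NUM_CATEGORIES = 80          # length of the spatial distribution vector
NUM_DETECTION_BRANCHES = 16  # number of detection branches stated in the text


def detection_features(dist_vec, branch_id):
    """Concatenate a view's distribution vector with a one-hot branch type."""
    if len(dist_vec) != NUM_CATEGORIES:
        raise ValueError("expected an 80-dimensional distribution vector")
    one_hot = [0.0] * NUM_DETECTION_BRANCHES
    one_hot[branch_id] = 1.0
    return list(dist_vec) + one_hot


def detection_feature_rows(dist_vec):
    """One input row per detection branch for a single camera view."""
    return [detection_features(dist_vec, b)
            for b in range(NUM_DETECTION_BRANCHES)]


def tracker_features(dist_vec, confidences):
    """Input for the tracker's branch: the distribution vector plus the
    average confidence of tracked objects (0.0 if none are tracked)."""
    avg = sum(confidences) / len(confidences) if confidences else 0.0
    return list(dist_vec) + [avg]
```

Each view thus yields 16 rows of 96 features for the detection branches and one row of 81 features for the tracker's branch under this assumed layout.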

Latency prediction. Latency prediction involves estimating the processing time of modules, such as neural networks, within the detection branches. In general, these modules have consistent latency profiles at runtime. Accordingly, the expected latency of each detection branch can be determined simply by summing its modules' latency profiles. Recall that forecasting the objects' future states can be performed almost instantaneously. In contrast, the latency of updating the states of tracked objects by associating them with newly detected boxes depends on the number of objects in a given space. We model the processing time of the state update with a simple linear regressor, trained on the same data used for $f_A$. The linear model predicts the expected update latency as a function of the number of tracked objects.
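Both parts of the latency estimate can be sketched in a few lines. The module names and all numbers below are hypothetical, and ordinary least squares is used as one plausible way to fit the "simple linear regressor"; the source does not specify the fitting procedure.

```python
def branch_latency(module_latencies_ms):
    """Expected branch latency: the sum of its modules' profiled latencies."""
    return sum(module_latencies_ms.values())


def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_update_latency(model, num_tracked):
    """Predicted tracker-update latency for a given number of objects."""
    slope, intercept = model
    return slope * num_tracked + intercept


# Hypothetical offline profile of one detection branch (milliseconds).
profile = {"backbone": 20.0, "view_transform": 6.5, "depth": 3.5}
# Hypothetical measurements: (number of tracked objects, update latency in ms).
model = fit_linear([10, 20, 30, 40], [1.5, 2.5, 3.5, 4.5])
```

Here `branch_latency(profile)` gives the static estimate for the branch, while `predict_update_latency(model, n)` scales with the number of tracked objects `n`.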

6.2 Execution Scheduling

Finding the optimal selection between multi-view images and inference branches can be solved using a combinatorial optimization solver. We use integer linear programming (ILP) because the image-branch selection is represented by binary decisions, and our objective function and constraint are both linear. Specifically, we adopt the Simplex and branch-and-cut algorithms to efficiently find the optimal selection. The scheduling process, including performance prediction, takes up to 3 ms even on the Orin Nano, which has limited computing power. Algorithm 1 shows the operational flow of our system, which adapts to the surrounding space. At a given time $t$, the system first estimates the spatial distribution for each camera view (line 2), based on the state predictions of tracked objects (line 11). Using the spatial distributions, the system predicts the detection scores of all possible pairs of branches and camera views (line 3). To ensure a uniform comparison across camera views, each score is normalized against the score of the most powerful branch deployed on the target device. Next, the estimated latency of each branch is obtained from offline latency profiles (line 4). Because our model includes modules that operate statically, such as the BEV head and tracker update, the effective latency limit is calculated by subtracting the estimated latencies of these modules from the latency target (lines 5-7). Then, the optimal selection of branch-image pairs is determined using the ILP solver (line 8). Taking the scheduler's decision and the incoming images as inputs, the model generates 3D bounding boxes (lines 12-14). These outcomes are subsequently used to update the states of tracked objects (line 15). An outdated object without a matched detection box is penalized by halving its confidence level, and it is removed if the confidence falls below the threshold. Finally, a downstream application uses the information about detected objects to provide its functionality, such as obstacle avoidance (line 16).
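The selection step can be illustrated with the sketch below. It deliberately replaces the ILP solver with exhaustive search over all branch assignments, which is only practical for tiny instances but optimizes the same linear objective under the same latency constraint. The function name, argument layout, and all numbers are assumptions for illustration.

```python
from itertools import product


def schedule(scores, branch_latency_ms, latency_target_ms, static_latency_ms):
    """Pick one branch per camera view to maximize the summed predicted score.

    scores[j][i] is the predicted score of branch i on view j.
    The effective limit is the latency target minus the latency of
    statically executed modules (e.g., BEV head and tracker update).
    Returns (choice, total_score); choice is None if nothing fits.
    """
    budget = latency_target_ms - static_latency_ms
    num_branches = len(branch_latency_ms)
    best_choice, best_score = None, float("-inf")
    # Enumerate every assignment of one branch to each view.
    for choice in product(range(num_branches), repeat=len(scores)):
        latency = sum(branch_latency_ms[i] for i in choice)
        if latency > budget:
            continue  # violates the effective latency limit
        total = sum(scores[j][i] for j, i in enumerate(choice))
        if total > best_score:
            best_choice, best_score = choice, total
    return best_choice, best_score


# Hypothetical example: 3 camera views, 3 branches of increasing cost.
scores = [[0.2, 0.5, 0.9], [0.3, 0.4, 0.5], [0.1, 0.6, 0.7]]
latencies = [5.0, 10.0, 20.0]
choice, total = schedule(scores, latencies, latency_target_ms=50.0,
                         static_latency_ms=10.0)
```

In this example the most expensive branch is affordable for only one view, so the search assigns it to the view where it yields the largest gain and gives the remaining views the mid-cost branch. An ILP solver reaches the same optimum without enumerating every configuration.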
