Alt tags are the best-known aspect of building accessible web pages, but they are unfortunately often neglected or poorly implemented. Alt tags (strictly speaking, the alt attribute of an img element) are brief text descriptions added to images. Screen readers read the contents of a web page aloud, and these descriptions are what they read to tell visually impaired users what each image on the page shows.

Unfortunately, it’s common for images to be missing alt tags entirely. I have also seen alt tags misused in ways that make things harder for a visually impaired user: tags that just say “picture” or “image,” and cutesy captions that say nothing about what’s in the image (e.g., a photo of a coffee and a laptop on a blogging page, captioned “dear diary, I’d love to be selected as a guest writer”). I’ve also seen alt tags stuffed with three lines of SEO keywords. Can you imagine trying to listen to a website only to hear “image of picture” or a long list of SEO keywords?

This is a Chrome extension designed to empower visually impaired users by letting them overwrite bad alt tags with descriptions generated by OpenAI. This gives a visually impaired user access to the same content on a web page that a sighted user can see (or at least saves them from sitting through a long list of SEO keywords).

If you just want the extension, you are welcome to download this repo and follow the instructions in the README.

However, if you’re interested in a step-by-step guide to building a Chrome extension with OpenAI, here is a walkthrough.

Building the extension:

- Get a basic ‘Hello World’ Chrome Extension Up and Running

First, let’s get a basic Chrome extension boilerplate up and running. Clone this repository and follow the instructions in the README:

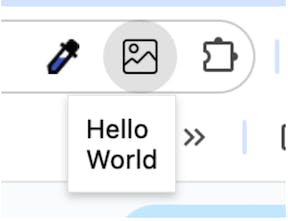

Once it’s installed, you should see an image icon in your extensions toolbar (I recommend pinning it to make testing quicker). When you click it, you should see a popup that says “hello world.”

Let’s open the boilerplate code and walk through the existing files. This will also cover some Chrome extension basics:

static/manifest.json: Every Chrome extension has a manifest.json file, which holds basic information about the extension and how it is set up. Our manifest has a name, a description, a background script set to src/background.js, an icon set to image-icon.png (the icon that represents the extension in the extensions menu), and popup.html as the source file for our popup.

src/background.js: The background script registered in our manifest (in Manifest V3, it runs as a service worker). Its code runs in the background and listens for events that trigger the extension’s functionality.

src/content.js: Any code that runs in the context of the web page, such as code that reads or modifies the page, goes in a content script.

src/popup.js, static/popup.css, and static/popup.html: These files control the popup you see when you click the extension icon.

Let’s get some basics set up: open static/manifest.json and change the name and description to “Screen Reader Image Description Generator” (or whatever you’d prefer).

- Enable interaction with web pages using a content script

Our extension is going to overwrite alt tags on the page the user is viewing, which means it needs access to the page’s HTML. In Chrome extensions, that access comes from content scripts. Ours will live in src/content.js.

The simplest way to add a content script is to declare it in the manifest’s “content_scripts” field, listing the JS files to run and the URL patterns (“matches”) to run them on. A content script declared this way runs automatically on every matching page as the page loads. In our case, we don’t want that. Some websites already have perfectly good alt tags on their images, so we only want to run our code when the user decides it’s necessary.

We’re going to add a button to our popup and a console log to our content script. When the user clicks the button, the content script is injected into the page, and we can confirm that by seeing our statement printed in the Chrome console.

static/popup.html

```html
<button id="generate-alt-tags-button">Generate image descriptions</button>
```

src/content.js

```javascript
console.log('hello console');
```

The way to connect that button click on the popup to the content script involves both popup.js and background.js.

In popup.js, we grab the button from the DOM and add a click event listener. When a user clicks the button, we send a message telling the background script to inject the content script. We’ll name the message “injectContentScript”.

```javascript
const generateAltTagButton = document.body.querySelector('#generate-alt-tags-button');

// Ask the background script to inject the content script into the current tab
generateAltTagButton.addEventListener('click', () => {
  chrome.runtime.sendMessage({ action: 'injectContentScript' });
});
```

In background.js, we have the code that monitors events and reacts to them. Here, we’re setting up an event listener, and if the message received is ‘injectContentScript’, it will execute the content script in the active tab (the user’s current web page).

```javascript
chrome.runtime.onMessage.addListener((message, sender, sendResponse) => {
  if (message.action === 'injectContentScript') {
    // Find the tab the user is currently looking at
    chrome.tabs.query({ active: true, currentWindow: true }, (tabs) => {
      // Run content.js inside that tab's page
      chrome.scripting.executeScript({
        target: { tabId: tabs[0].id },
        files: ['content.js'],
      });
    });
  }
});
```
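In Manifest V3, chrome.tabs.query and chrome.scripting.executeScript also return promises when you leave out the callback, so the same logic can be written with async/await. The sketch below is an alternative rather than the code this walkthrough uses: injectIntoActiveTab is a hypothetical helper name, it takes the chrome object as a parameter so it can be tried with a stub, and it reports whether injection happened instead of failing silently on pages where Chrome blocks scripting (such as chrome:// pages).

```javascript
// Hypothetical helper: inject content.js into the active tab.
// `chromeApi` is the extension's `chrome` object (or a stub when testing).
async function injectIntoActiveTab(chromeApi) {
  // Without a callback, chrome.tabs.query returns a promise in Manifest V3
  const [tab] = await chromeApi.tabs.query({ active: true, currentWindow: true });
  if (!tab || tab.id === undefined) {
    return false; // no active tab to inject into
  }

  try {
    await chromeApi.scripting.executeScript({
      target: { tabId: tab.id },
      files: ['content.js'],
    });
    return true;
  } catch (err) {
    // e.g. chrome:// pages don't allow extensions to inject scripts
    console.log('Could not inject content script:', err);
    return false;
  }
}

// Possible use in background.js:
// chrome.runtime.onMessage.addListener((message) => {
//   if (message.action === 'injectContentScript') {
//     injectIntoActiveTab(chrome);
//   }
// });
```

Returning a boolean leaves room for the caller to tell the user when a page can’t be modified, which the callback version has no way to report.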

The last step is to add the “activeTab” and “scripting” permissions to our manifest. The “scripting” permission is required to use the chrome.scripting API, which is how we inject the content script programmatically. We also need permission to access the pages we inject the script into. Here, we only inject into the user’s current page, aka their active tab, and the “activeTab” permission grants the extension temporary access to that tab when the user interacts with the extension.

In manifest.json:

```json
"permissions": [
  "activeTab",
  "scripting"
],
```

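At this point, you may need to remove the extension from Chrome and load it again for it to run correctly. Once it is running, clicking the button should print “hello console” in the DevTools console of the web page you are on (content script logs appear there, not in the popup’s console).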

Here is a GitHub link to working code for the repo at this stage.

- Gathering page images and inserting test alt tags

Our next step is to use our content script to grab all of the images on the page, so we have that information ready for the API calls that generate image descriptions. We also want to make sure we only make calls for images where a description is helpful. Some images are purely decorative, and reading out descriptions of them just slows screen reader users down. For example, a search bar might have both a “search” label and a magnifying glass icon; describing the icon adds nothing. If an image’s alt tag is set to an empty string, or the image has aria-hidden set to true, that signals it should be left out by screen readers, and we can skip generating a description for it.

So first, in content.js, we will gather all of the images on the page. I’m adding a console.log so I can quickly confirm it’s working correctly:

```javascript
const images = document.querySelectorAll('img');

console.log(images);
```

Then we loop through the images and check which ones need an alt tag generated. That means every image except those whose alt tag is explicitly set to an empty string and those hidden from screen readers with aria-hidden="true". Images with no alt tag at all, or with a non-empty one, both get a new description.

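```javascript
for (const image of images) {
  // alt="" explicitly marks an image as decorative
  const imageAltTagIsEmptyString = image.hasAttribute('alt') && image.alt === '';
  // aria-hidden="true" hides the image from screen readers
  // (image.ariaHidden returns the string "true"/"false", so compare explicitly)
  const isAriaHidden = image.getAttribute('aria-hidden') === 'true';

  // Skip the image only if it is decorative or hidden from screen readers
  if (!imageAltTagIsEmptyString && !isAriaHidden) {
    // this is an image we want to generate an alt tag for!
  }
}
```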
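The check above only looks at the image’s own attributes. Under ARIA, aria-hidden="true" on a parent element also hides everything inside it from screen readers, so a slightly stricter variant can use Element.closest() to look up the tree. This is a sketch of that idea rather than the article’s code, and needsDescription is a hypothetical name:

```javascript
// Hypothetical helper: should this <img> get a generated description?
function needsDescription(image) {
  // alt="" explicitly marks the image as decorative
  const altIsEmptyString = image.hasAttribute('alt') && image.getAttribute('alt') === '';
  // closest() checks the image itself and every ancestor for aria-hidden="true"
  const hiddenFromScreenReaders = image.closest('[aria-hidden="true"]') !== null;
  return !altIsEmptyString && !hiddenFromScreenReaders;
}

// Example (in content.js):
// const imagesToDescribe = [...document.querySelectorAll('img')].filter(needsDescription);
```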

Then we can set the alt tags to a test string, to confirm we have a working way to set them before we move on to our OpenAI calls. Our content.js now looks like this:

```javascript
function scanPhotos() {
  const images = document.querySelectorAll('img');
  console.log(images);

  for (const image of images) {
    // Skip decorative images (alt="") and images hidden from screen readers
    const imageAltTagIsEmptyString = image.hasAttribute('alt') && image.alt === '';
    const isAriaHidden = image.getAttribute('aria-hidden') === 'true';

    if (!imageAltTagIsEmptyString && !isAriaHidden) {
      image.alt = 'Test Alt Text';
    }
  }
}

scanPhotos();
```

At this point, if we open the Elements panel in Chrome DevTools and inspect an image, we should see “Test Alt Text” set as its alt tag.
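Inspecting images one at a time gets slow on image-heavy pages. As an alternative check, you could paste something like the following into the page’s DevTools console to list every image with its current alt text (the page and the content script share the same DOM, so the new alt tags show up there). altReport is a hypothetical helper, and “(missing)” is just a label chosen for this sketch:

```javascript
// Hypothetical helper: summarize the alt text of a list of <img> elements.
function altReport(images) {
  return Array.from(images, (image) => ({
    src: image.src,
    // Distinguish a missing alt attribute from an empty one
    alt: image.hasAttribute('alt') ? image.getAttribute('alt') : '(missing)',
  }));
}

// In the page's DevTools console:
// console.table(altReport(document.images));
```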

Here is a working repo with the code at this stage.

- Install OpenAI and generate image descriptions

In order to use OpenAI, you will need to generate an OpenAI key and also add credit to your account. To generate an OpenAI key:

- Go to https://platform.openai.com/docs/overview and, under “Get your API keys”, click “Sign up”

- Follow the instructions to create an OpenAI account

- On the “Make Your First Call” screen, enter a preferred name for your API key, such as “Chrome Image Description Generator Key”

- Click “Generate API Key”

Save this key. Also, keep it private – make sure not to push it into any public git repos.

Now, back in our repo, we install the OpenAI library. In a terminal inside the project directory, run:

```shell
npm install openai
```

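Now, at the top of content.js, we import OpenAI and create a client, pasting your OpenAI key in place of YOUR_KEY_GOES_HERE. Browsers can’t resolve a bare import like “openai” on their own, so this relies on the boilerplate’s build step bundling src/content.js together with its dependencies:

```javascript
import OpenAI from 'openai';

// Replace with your own key, and never commit it to a public repo
const openAiSecretKey = 'YOUR_KEY_GOES_HERE';

const openai = new OpenAI({ apiKey: openAiSecretKey, dangerouslyAllowBrowser: true });
```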

The dangerouslyAllowBrowser option lets the library make calls with your key from the browser. Generally, this is unsafe, because anyone who has the extension’s code can read the key. Since we are only running this project locally, we will leave it like this rather than set up a back end to hold the key. If you use OpenAI in other projects, make sure you follow best practices for keeping the key secret.

Now we add our call to have OpenAI generate image descriptions. We are calling the create chat completions endpoint (OpenAI docs for the chat completions endpoint).

We have to write our own prompt and pass in the image’s src URL (more info on AI prompt engineering). You can adapt the prompt however you’d like. I chose to limit the descriptions to 20 words because OpenAI was returning long descriptions. I also noticed it was fully describing logos like the Yelp or Facebook logos (e.g., ‘a big blue box with a white lowercase f inside’), which wasn’t helpful, so the prompt asks for just the company name. In case the image is an infographic, I ask that the word limit be ignored and the full text of the image be shared.

Here is the full call. It returns the content of the first AI response and passes any error into a “handleError” function. I’ve included a console.log of each response so we get quicker feedback on whether the call succeeded:

```javascript
async function generateDescription(imageSrcUrl) {
  const response = await openai.chat.completions.create({
    model: "gpt-4o-mini",
    messages: [
      {
        role: "user",
        content: [
          {
            type: "text",
            text: "Describe this image in 20 words or less. If the image looks like the logo of a large company, just say the company name and then the word logo. If the image has text, share the text. If the image has text and it is more than 20 words, ignore the earlier instruction to limit the words and share the full text.",
          },
          {
            type: "image_url",
            image_url: { url: imageSrcUrl },
          },
        ],
      },
    ],
  }).catch(handleError); // on failure, handleError runs and response is undefined

  console.log(response);

  if (response) {
    return response.choices[0].message.content;
  }
}

function handleError(err) {
  console.log(err);
}
```
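If you want to experiment with the prompt, for example to try a different word limit, one option is to build the messages array in its own function and pass the result to openai.chat.completions.create as the messages parameter. This is a hypothetical refactor rather than the article’s code; buildDescriptionMessages and its maxWords parameter are names made up for this sketch, and with the default of 20 it produces the same prompt and message shape as the call above:

```javascript
// Hypothetical helper: build the chat messages for one image description request.
function buildDescriptionMessages(imageSrcUrl, maxWords = 20) {
  const prompt =
    `Describe this image in ${maxWords} words or less. ` +
    'If the image looks like the logo of a large company, just say the company name and then the word logo. ' +
    'If the image has text, share the text. ' +
    `If the image has text and it is more than ${maxWords} words, ignore the earlier instruction to limit the words and share the full text.`;

  return [
    {
      role: 'user',
      content: [
        { type: 'text', text: prompt },
        { type: 'image_url', image_url: { url: imageSrcUrl } },
      ],
    },
  ];
}

// Possible use inside generateDescription:
// const response = await openai.chat.completions.create({
//   model: 'gpt-4o-mini',
//   messages: buildDescriptionMessages(imageSrcUrl, 15),
// }).catch(handleError);
```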

We add a call to this function inside the if statement we wrote earlier, in place of the test string. Because the call is asynchronous, we also have to add the async keyword to the scanPhotos function declaration:

```javascript
const imageDescription = await generateDescription(image.src);

// generateDescription returns undefined if the request failed, so stop here
if (!imageDescription) {
  return;
}

image.alt = imageDescription;
```
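One case this loop doesn’t guard against is an image whose src OpenAI’s servers can’t download. A blob: URL, for example, only exists inside the user’s browser, and an empty src has nothing to describe. Since any failed request stops the loop, one such image can end the whole run. A minimal sketch of a pre-check, assuming you only want to send http(s) URLs and base64 data:image URLs (isDescribableSrc is a hypothetical name, and it can’t detect images that sit behind a login):

```javascript
// Hypothetical pre-check: only send image URLs that OpenAI can plausibly fetch.
function isDescribableSrc(src) {
  if (!src) {
    return false; // empty src, nothing to describe
  }
  // Base64 data URLs of images can be sent directly
  if (src.startsWith('data:image/')) {
    return true;
  }
  try {
    const { protocol } = new URL(src);
    // blob:, file:, chrome-extension: and similar URLs only exist on the user's machine
    return protocol === 'http:' || protocol === 'https:';
  } catch {
    return false; // not a valid absolute URL
  }
}

// Possible use in scanPhotos, before calling generateDescription:
// if (!isDescribableSrc(image.src)) {
//   continue;
// }
```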

Here is a link to the full content.js and repo at this point.

- Building out the UI

Next, we want to build out our UI so the user knows what is happening after they click the button. It takes a few seconds for the descriptions to load, so we want a loading message that tells the user it’s working. We also want to let them know when it succeeds, or if there’s an error. To keep things simple, we’ll have a single user message div in the HTML and use popup.js to insert the appropriate message based on what is happening in the extension.

Because of how Chrome extensions are set up, our content script (content.js) is isolated from popup.js, and the two can’t share variables the way scripts on the same page can. Instead, the content script lets the popup know that descriptions are loading, or have loaded, via message passing. We already used message passing to tell the background service worker to inject the content script when the user clicked the original button.

First, in our HTML, we’ll add a div with the id ‘user-message’ under our button. I’ve added a little more description to the initial message, as well.

```html
<div id="user-message">
  <img src="image-icon.png" width="40" class="icon" alt=""/>
  This extension uses OpenAI to generate alternative image descriptions for screen readers.
</div>
```

Then, in popup.js, we’ll add a listener for any message that may contain an update to the extension state, and a function that renders the right HTML for whatever state comes back from the content script.

```javascript
const userMessage = document.body.querySelector('#user-message');

// The content script sends { action: <state> } whenever its state changes
chrome.runtime.onMessage.addListener((message) => {
  renderUI(message.action);
});

function renderUI(extensionState) {
  // Once descriptions have been requested, don't let the user start a second run
  generateAltTagButton.disabled = true;

  if (extensionState === 'loading') {
    userMessage.innerHTML = '<img src="loading-icon.png" width="50" class="icon" alt=""/> New image descriptions are loading... <br> <br>Please wait. We will update you when the descriptions have loaded.';
  } else if (extensionState === 'success') {
    userMessage.innerHTML = '<img src="success-icon.png" width="50" class="icon" alt=""/> New image descriptions have been loaded! <br> <br> If you would like to return to the original image descriptions set by the web page author, please refresh the page.';
  } else if (extensionState === 'errorGeneric') {
    userMessage.innerHTML = '<img src="error-icon.png" width="50" class="icon" alt=""/> There was an error generating new image descriptions. <br> <br> Please refresh the page and try again.';
  } else if (extensionState === 'errorAuthentication') {
    userMessage.innerHTML = '<img src="error-icon.png" width="50" class="icon" alt=""/> There was an error generating new image descriptions. <br> <br> Your OpenAI key is not valid. Please double check your key and try again.';
  } else if (extensionState === 'errorMaxQuota') {
    // Template literal, because this message contains apostrophes
    userMessage.innerHTML = `<img src="error-icon.png" width="50" class="icon" alt=""/> There was an error generating new image descriptions. <br> <br> You've either used up your current OpenAI plan and need to add more credit, or you've made too many requests too quickly. Please check your plan, add funds if needed, or slow down the requests.`;
  }
}
```
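As more states get added, a chain of if/else branches keeps growing, and long HTML strings are easy to break (an unescaped apostrophe inside a single-quoted string is a syntax error). One alternative keeps the text in a lookup table and builds the message with DOM methods and textContent instead of innerHTML. STATE_MESSAGES, messageForState, and renderMessage are hypothetical names for this sketch; the wording is the article’s, split at its line breaks:

```javascript
// Hypothetical lookup table: one entry per extension state.
const ERROR_INTRO = 'There was an error generating new image descriptions.';
const STATE_MESSAGES = {
  loading: {
    icon: 'loading-icon.png',
    lines: ['New image descriptions are loading...', 'Please wait. We will update you when the descriptions have loaded.'],
  },
  success: {
    icon: 'success-icon.png',
    lines: ['New image descriptions have been loaded!', 'If you would like to return to the original image descriptions set by the web page author, please refresh the page.'],
  },
  errorGeneric: {
    icon: 'error-icon.png',
    lines: [ERROR_INTRO, 'Please refresh the page and try again.'],
  },
  errorAuthentication: {
    icon: 'error-icon.png',
    lines: [ERROR_INTRO, 'Your OpenAI key is not valid. Please double check your key and try again.'],
  },
  errorMaxQuota: {
    icon: 'error-icon.png',
    lines: [ERROR_INTRO, "You've either used up your current OpenAI plan and need to add more credit, or you've made too many requests too quickly. Please check your plan, add funds if needed, or slow down the requests."],
  },
};

// Returns the icon and text for a state, or null for unknown states such as 'initial'.
function messageForState(state) {
  return Object.prototype.hasOwnProperty.call(STATE_MESSAGES, state) ? STATE_MESSAGES[state] : null;
}

// Browser only: replace the contents of the message container.
function renderMessage(container, state) {
  const message = messageForState(state);
  if (!message) {
    return;
  }
  const icon = document.createElement('img');
  icon.src = message.icon;
  icon.width = 50;
  icon.className = 'icon';
  icon.alt = ''; // decorative: the text carries the meaning
  container.replaceChildren(icon);
  for (const line of message.lines) {
    const paragraph = document.createElement('p');
    paragraph.textContent = line; // textContent never parses HTML
    container.append(paragraph);
  }
}
```

In renderUI, the if/else chain would then become a single call to renderMessage(userMessage, extensionState) after disabling the button.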

Within our content script, we’ll define a new variable called ‘extensionState’. It starts as ‘initial’ (the extension is loaded but nothing has happened yet) and can then become ‘loading’, ‘success’, or one of three error states based on OpenAI error responses: ‘errorGeneric’, ‘errorAuthentication’, and ‘errorMaxQuota’. Every time the state changes, we update the variable and send a message to popup.js.

```javascript
let extensionState = 'initial';
```

Our error handler becomes:

```javascript
function handleError(err) {
  // API errors from the OpenAI library carry the HTTP status code on err.status
  if (err?.status === 401) {
    // Invalid or missing API key
    extensionState = 'errorAuthentication';
  } else if (err?.status === 429) {
    // Too many requests, or the account is out of credit
    extensionState = 'errorMaxQuota';
  } else {
    extensionState = 'errorGeneric';
  }

  chrome.runtime.sendMessage({ action: extensionState });
  console.log(err);
}
```
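Every state change takes two steps: assigning the state and sending it to the popup. If you’d rather not repeat that pair, one option is a small tracker that does both. createStateTracker is a hypothetical name, and the send function is passed in only so the sketch can be tried without Chrome; in content.js it would wrap chrome.runtime.sendMessage:

```javascript
// Hypothetical helper: hold the current state and report every change.
function createStateTracker(send) {
  let state = 'initial';
  return {
    get() {
      return state;
    },
    set(newState) {
      state = newState;
      send({ action: state }); // same message shape the popup listener expects
    },
  };
}

// Possible use in content.js:
// const tracker = createStateTracker((message) => chrome.runtime.sendMessage(message));
// tracker.set('loading');
// ...and when the popup asks: sendResponse({ extensionState: tracker.get() });
```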

And within our scanPhotos function, we set the state to ‘loading’ at the beginning of the function, and to ‘success’ if it fully runs without errors.

```javascript
async function scanPhotos() {
  extensionState = 'loading';
  chrome.runtime.sendMessage({ action: extensionState });

  const images = document.querySelectorAll('img');

  for (const image of images) {
    // Skip decorative images (alt="") and images hidden from screen readers
    const imageAltTagIsEmptyString = image.hasAttribute('alt') && image.alt === '';
    const isAriaHidden = image.getAttribute('aria-hidden') === 'true';

    if (!imageAltTagIsEmptyString && !isAriaHidden) {
      const imageDescription = await generateDescription(image.src);

      // handleError has already reported the error state, so stop without reporting success
      if (!imageDescription) {
        return;
      }

      image.alt = imageDescription;
    }
  }

  extensionState = 'success';
  chrome.runtime.sendMessage({ action: extensionState });
}
```
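scanPhotos waits for each description before requesting the next one, so a page with many images can take a while. One way to speed it up, assuming a few requests in flight at once stays within your OpenAI rate limits, is to process the images with a small pool of parallel workers. mapWithConcurrency and the limit of 3 are illustrative choices, not part of the article’s code. Unlike the sequential loop, a failed request doesn’t stop the others, so the failure check happens after all of them finish:

```javascript
// Hypothetical helper: call `fn` on every item, with at most `limit` calls in flight.
// Resolves to the results in the same order as `items`.
async function mapWithConcurrency(items, limit, fn) {
  const results = new Array(items.length);
  let nextIndex = 0;

  // Each worker keeps claiming the next unprocessed index until none are left.
  // JavaScript runs this loop on one thread, so two workers never claim the same index.
  async function worker() {
    while (nextIndex < items.length) {
      const index = nextIndex++;
      results[index] = await fn(items[index], index);
    }
  }

  const workerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Possible use in scanPhotos (skips alt="" and aria-hidden="true" images):
// const toDescribe = [...images].filter(
//   (image) => !(image.hasAttribute('alt') && image.alt === '') && image.getAttribute('aria-hidden') !== 'true'
// );
// const descriptions = await mapWithConcurrency(toDescribe, 3, (image) => generateDescription(image.src));
// if (descriptions.some((description) => !description)) {
//   return; // handleError has already reported the error state
// }
// toDescribe.forEach((image, i) => { image.alt = descriptions[i]; });
```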

- Fixing confusing popup behavior: persisting extension state when popups close and reopen

You may notice at this point that if you generate alt tags, get a success message, and then close and reopen the popup, it displays the initial message prompting the user to generate new alt tags, even though the generated alt tags are already on the page!

In Chrome, every time you open an extension popup, it is a brand new popup. It doesn’t remember anything the extension did before, or what is running in the content script. However, we can make sure a newly opened popup renders the current state of the extension by having it ask for that state when it opens. To do that, the popup sends another message, this time requesting the extension state, and we add a message listener in content.js that listens for that message and sends back the current state.

popup.js

```javascript
chrome.tabs.query({ active: true, currentWindow: true }, (tabs) => {
  chrome.tabs.sendMessage(tabs[0].id, { action: 'getExtensionState' }, (response) => {
    // if the content script hasn't been injected, the code in that script hasn't been run,
    // and we'll get an error or no response, so we keep the default UI
    if (chrome.runtime.lastError || !response) {
      return;
    }

    // if the content script HAS been injected, we'll get a response which tells us
    // what state the code is at (loading, success, error, etc)
    renderUI(response.extensionState);
  });
});
```

content.js

```javascript
chrome.runtime.onMessage.addListener((request, sender, sendResponse) => {
  if (request.action === 'getExtensionState') {
    sendResponse({ extensionState });
  }
});
```

If the content script has never run (i.e., the user never clicked the button to generate alt tags), there is no extension state variable or message listener on the page. In that case, Chrome sets chrome.runtime.lastError instead of returning a response. So we check for an error, and if we get one, we leave the default UI as is.
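In Manifest V3, chrome.tabs.sendMessage also returns a promise when you leave out the callback, and that promise rejects when no content script is listening on the page. That lets the same check be written with try/catch. Here is a sketch under those assumptions; getExtensionState is a hypothetical helper, and it takes the chrome object as a parameter so it can be tried with a stub:

```javascript
// Hypothetical helper: ask the active tab's content script for its state.
// Resolves to the state string, or null if the content script was never injected.
async function getExtensionState(chromeApi) {
  const [tab] = await chromeApi.tabs.query({ active: true, currentWindow: true });
  if (!tab || tab.id === undefined) {
    return null;
  }
  try {
    const response = await chromeApi.tabs.sendMessage(tab.id, { action: 'getExtensionState' });
    return response?.extensionState ?? null;
  } catch {
    // Typically "Receiving end does not exist": no content script on this page yet
    return null;
  }
}

// Possible use in popup.js:
// getExtensionState(chrome).then((state) => {
//   if (state) {
//     renderUI(state);
//   }
// });
```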

- Extension accessibility: aria-live, color contrast, and close button

This extension is designed for people who use screen readers, so now we have to make sure it’s actually usable with a screen reader! Now is a good time to turn on your screen reader and see if it all works well.

There are a few things we want to clean up for accessibility. First, we want to make sure all text has a high enough contrast ratio. For the button, I’ve set the background to #0250C5 and the font to bold white. That combination has a contrast ratio of 7.1:1, which meets WCAG at both the AA and AAA levels. You can check the contrast ratio for any colors you’d like to use with the WebAIM Contrast Checker.
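The ratio the checker reports comes from the WCAG 2 formula: each color’s relative luminance L is computed from its linearized sRGB channels, and the contrast ratio is (L1 + 0.05) / (L2 + 0.05), with the lighter color as L1. If you want to check colors from a script or a test, here is a small sketch of that formula; relativeLuminance and contrastRatio are hypothetical helper names, and they only accept six-digit hex colors:

```javascript
// Hypothetical helper: WCAG 2 relative luminance of a "#RRGGBB" color.
function relativeLuminance(hex) {
  const match = /^#([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!match) {
    throw new Error(`Expected a #RRGGBB color, got ${hex}`);
  }
  // Convert each 8-bit sRGB channel to linear light.
  // (WCAG 2.0/2.1 list the threshold as 0.03928; for 8-bit values both give the same result.)
  const [r, g, b] = match.slice(1).map((part) => {
    const c = parseInt(part, 16) / 255;
    return c <= 0.04045 ? c / 12.92 : ((c + 0.055) / 1.055) ** 2.4;
  });
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Hypothetical helper: contrast ratio between two colors, from 1 (none) to 21 (black on white).
function contrastRatio(foreground, background) {
  const l1 = relativeLuminance(foreground);
  const l2 = relativeLuminance(background);
  const lighter = Math.max(l1, l2);
  const darker = Math.min(l1, l2);
  return (lighter + 0.05) / (darker + 0.05);
}

// Example: the button colors from this section
// contrastRatio('#FFFFFF', '#0250C5').toFixed(1); // "7.1"
```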

Second, when using my screen reader, I noticed that it doesn’t automatically read out updates when the user message changes to a loading, success, or error message. To fix this, we use an HTML attribute called aria-live, which tells screen readers to announce changes to an element’s content. You can set aria-live to either assertive or polite. With assertive, updates are announced immediately, even if that means interrupting whatever the screen reader is currently reading. With polite, the update is announced once the screen reader finishes what it is currently reading. In our case, we want to update the user as soon as possible, so we add the attribute to popup-container, the parent element of our user-message element:

```html
<div class="popup-container" aria-live="assertive">
```

Finally, while using the screen reader, I noticed there isn’t an easy way to close the popup. With a mouse, you just click anywhere outside the popup and it closes, but I couldn’t figure out how to close it using the keyboard. So we add a ‘close’ button at the bottom of the popup, so users can easily close it and return to the web page.

In popup.html, we add:

```html
<div>
  <button id="close-button">Close</button>
</div>
```

In popup.js, we add a click listener that closes the popup:

```javascript
const closeButton = document.body.querySelector('#close-button');

// window.close() closes the extension popup
closeButton.addEventListener('click', () => {
  window.close();
});
```

And that’s it! If you have any questions or suggestions, please reach out.

Here is a link to the final repo.